Why do linear SVMs trained on HOG features perform so well?

Linear Support Vector Machines trained on HOG features are now a de facto standard across many visual perception tasks. Their popularisation can largely be attributed to the step-change in performance they brought to pedestrian detection, and their subsequent successes in deformable parts models. This paper explores the interactions that make the HOG-SVM symbiosis perform so well. By connecting the feature extraction and learning processes rather than treating them as disparate plugins, we show that HOG features can be viewed as doing two things: (i) inducing capacity in, and (ii) adding prior to a linear SVM trained on pixels. From this perspective, preserving second-order statistics and locality of interactions are key to good performance. We demonstrate surprising accuracy on expression recognition and pedestrian detection tasks, by assuming only the importance of preserving such local second-order interactions.

PDF Abstract

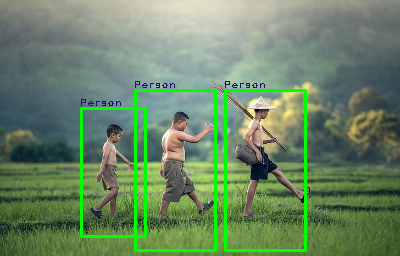

INRIA Person

INRIA Person