Search Results for author: Annan Wang

Found 10 papers, 9 papers with code

Towards Open-ended Visual Quality Comparison

no code implementations • 26 Feb 2024 • HaoNing Wu, Hanwei Zhu, ZiCheng Zhang, Erli Zhang, Chaofeng Chen, Liang Liao, Chunyi Li, Annan Wang, Wenxiu Sun, Qiong Yan, Xiaohong Liu, Guangtao Zhai, Shiqi Wang, Weisi Lin

Comparative settings (e. g. pairwise choice, listwise ranking) have been adopted by a wide range of subjective studies for image quality assessment (IQA), as it inherently standardizes the evaluation criteria across different observers and offer more clear-cut responses.

Q-Align: Teaching LMMs for Visual Scoring via Discrete Text-Defined Levels

1 code implementation • 28 Dec 2023 • HaoNing Wu, ZiCheng Zhang, Weixia Zhang, Chaofeng Chen, Liang Liao, Chunyi Li, Yixuan Gao, Annan Wang, Erli Zhang, Wenxiu Sun, Qiong Yan, Xiongkuo Min, Guangtao Zhai, Weisi Lin

The explosion of visual content available online underscores the requirement for an accurate machine assessor to robustly evaluate scores across diverse types of visual contents.

Ranked #1 on

Video Quality Assessment

on LIVE-FB LSVQ

Ranked #1 on

Video Quality Assessment

on LIVE-FB LSVQ

Enhancing Diffusion Models with Text-Encoder Reinforcement Learning

1 code implementation • 27 Nov 2023 • Chaofeng Chen, Annan Wang, HaoNing Wu, Liang Liao, Wenxiu Sun, Qiong Yan, Weisi Lin

While fine-tuning the U-Net can partially improve performance, it remains suffering from the suboptimal text encoder.

Q-Instruct: Improving Low-level Visual Abilities for Multi-modality Foundation Models

1 code implementation • 12 Nov 2023 • HaoNing Wu, ZiCheng Zhang, Erli Zhang, Chaofeng Chen, Liang Liao, Annan Wang, Kaixin Xu, Chunyi Li, Jingwen Hou, Guangtao Zhai, Geng Xue, Wenxiu Sun, Qiong Yan, Weisi Lin

Multi-modality foundation models, as represented by GPT-4V, have brought a new paradigm for low-level visual perception and understanding tasks, that can respond to a broad range of natural human instructions in a model.

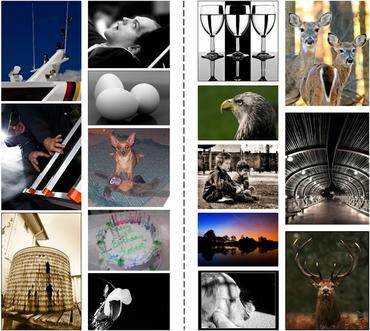

Q-Bench: A Benchmark for General-Purpose Foundation Models on Low-level Vision

1 code implementation • 25 Sep 2023 • HaoNing Wu, ZiCheng Zhang, Erli Zhang, Chaofeng Chen, Liang Liao, Annan Wang, Chunyi Li, Wenxiu Sun, Qiong Yan, Guangtao Zhai, Weisi Lin

To address this gap, we present Q-Bench, a holistic benchmark crafted to systematically evaluate potential abilities of MLLMs on three realms: low-level visual perception, low-level visual description, and overall visual quality assessment.

Towards Explainable In-the-Wild Video Quality Assessment: A Database and a Language-Prompted Approach

1 code implementation • 22 May 2023 • HaoNing Wu, Erli Zhang, Liang Liao, Chaofeng Chen, Jingwen Hou, Annan Wang, Wenxiu Sun, Qiong Yan, Weisi Lin

Though subjective studies have collected overall quality scores for these videos, how the abstract quality scores relate with specific factors is still obscure, hindering VQA methods from more concrete quality evaluations (e. g. sharpness of a video).

Towards Robust Text-Prompted Semantic Criterion for In-the-Wild Video Quality Assessment

2 code implementations • 28 Apr 2023 • HaoNing Wu, Liang Liao, Annan Wang, Chaofeng Chen, Jingwen Hou, Wenxiu Sun, Qiong Yan, Weisi Lin

The proliferation of videos collected during in-the-wild natural settings has pushed the development of effective Video Quality Assessment (VQA) methodologies.

Exploring Opinion-unaware Video Quality Assessment with Semantic Affinity Criterion

2 code implementations • 26 Feb 2023 • HaoNing Wu, Liang Liao, Jingwen Hou, Chaofeng Chen, Erli Zhang, Annan Wang, Wenxiu Sun, Qiong Yan, Weisi Lin

Recent learning-based video quality assessment (VQA) algorithms are expensive to implement due to the cost of data collection of human quality opinions, and are less robust across various scenarios due to the biases of these opinions.

Exploring Video Quality Assessment on User Generated Contents from Aesthetic and Technical Perspectives

3 code implementations • ICCV 2023 • HaoNing Wu, Erli Zhang, Liang Liao, Chaofeng Chen, Jingwen Hou, Annan Wang, Wenxiu Sun, Qiong Yan, Weisi Lin

In light of this, we propose the Disentangled Objective Video Quality Evaluator (DOVER) to learn the quality of UGC videos based on the two perspectives.

Ranked #1 on

Video Quality Assessment

on LIVE-VQC

Ranked #1 on

Video Quality Assessment

on LIVE-VQC

FAST-VQA: Efficient End-to-end Video Quality Assessment with Fragment Sampling

4 code implementations • 6 Jul 2022 • HaoNing Wu, Chaofeng Chen, Jingwen Hou, Liang Liao, Annan Wang, Wenxiu Sun, Qiong Yan, Weisi Lin

Consisting of fragments and FANet, the proposed FrAgment Sample Transformer for VQA (FAST-VQA) enables efficient end-to-end deep VQA and learns effective video-quality-related representations.

Ranked #3 on

Video Quality Assessment

on LIVE-VQC

(using extra training data)

Ranked #3 on

Video Quality Assessment

on LIVE-VQC

(using extra training data)