Search Results for author: Chenghao Liu

Found 51 papers, 27 papers with code

PEMT: Multi-Task Correlation Guided Mixture-of-Experts Enables Parameter-Efficient Transfer Learning

no code implementations • 23 Feb 2024 • Zhisheng Lin, Han Fu, Chenghao Liu, Zhuo Li, Jianling Sun

However, current approaches typically either train adapters on individual tasks or distill shared knowledge from source tasks, failing to fully exploit task-specific knowledge and the correlation between source and target tasks.

MTSA-SNN: A Multi-modal Time Series Analysis Model Based on Spiking Neural Network

1 code implementation • 8 Feb 2024 • Chengzhi Liu, Zheng Tao, Zihong Luo, Chenghao Liu

To address these challenges, we propose a Multi-modal Time Series Analysis Model Based on Spiking Neural Network (MTSA-SNN).

CompeteSMoE - Effective Training of Sparse Mixture of Experts via Competition

no code implementations • 4 Feb 2024 • Quang Pham, Giang Do, Huy Nguyen, TrungTin Nguyen, Chenghao Liu, Mina Sartipi, Binh T. Nguyen, Savitha Ramasamy, XiaoLi Li, Steven Hoi, Nhat Ho

Sparse mixture of experts (SMoE) offers an appealing solution to scale up the model complexity beyond the means of increasing the network's depth or width.

Unified Training of Universal Time Series Forecasting Transformers

1 code implementation • 4 Feb 2024 • Gerald Woo, Chenghao Liu, Akshat Kumar, Caiming Xiong, Silvio Savarese, Doyen Sahoo

Deep learning for time series forecasting has traditionally operated within a one-model-per-dataset framework, limiting its potential to leverage the game-changing impact of large pre-trained models.

Advancing Graph Representation Learning with Large Language Models: A Comprehensive Survey of Techniques

no code implementations • 4 Feb 2024 • Qiheng Mao, Zemin Liu, Chenghao Liu, Zhuo Li, Jianling Sun

This collaboration harnesses the sophisticated linguistic capabilities of LLMs to improve the contextual understanding and adaptability of graph models, thereby broadening the scope and potential of GRL.

HyperRouter: Towards Efficient Training and Inference of Sparse Mixture of Experts

1 code implementation • 12 Dec 2023 • Giang Do, Khiem Le, Quang Pham, TrungTin Nguyen, Thanh-Nam Doan, Binh T. Nguyen, Chenghao Liu, Savitha Ramasamy, XiaoLi Li, Steven Hoi

By routing input tokens to only a few split experts, Sparse Mixture-of-Experts has enabled efficient training of large language models.
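
As a rough illustration of what "routing input tokens to only a few experts" means, the sketch below shows generic top-k gating for a single token. It is not HyperRouter's routing mechanism; the function name `top_k_route` and the example logits are assumptions made for illustration only.

```python
import math

def top_k_route(gate_logits, k):
    """Return {expert_index: weight} for the k experts with the highest gate logits.

    Generic top-k gating sketch (hypothetical helper, not from the paper):
    the weights are a softmax restricted to the selected experts.
    """
    # Indices of the k largest logits; ties keep their original order.
    top = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)[:k]
    # Subtract the max logit before exponentiating for numerical stability.
    m = max(gate_logits[i] for i in top)
    exps = {i: math.exp(gate_logits[i] - m) for i in top}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}

# One token, four experts, route to the best two.
weights = top_k_route([0.1, 2.0, -1.0, 2.0], k=2)
```

Only the selected experts would be evaluated for this token, which is where the compute savings of a sparse mixture come from.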

Calibration of Time-Series Forecasting Transformers: Detecting and Adapting Context-Driven Distribution Shift

no code implementations • 23 Oct 2023 • Mouxiang Chen, Lefei Shen, Han Fu, Zhuo Li, Jianling Sun, Chenghao Liu

In this paper, we introduce a universal calibration methodology for the detection and adaptation of context-driven distribution shift (CDS) with a trained Transformer model.

ULTRA-DP: Unifying Graph Pre-training with Multi-task Graph Dual Prompt

1 code implementation • 23 Oct 2023 • Mouxiang Chen, Zemin Liu, Chenghao Liu, Jundong Li, Qiheng Mao, Jianling Sun

Based on this framework, we propose a prompt-based transferability test to find the most relevant pretext task in order to reduce the semantic gap.

Pushing the Limits of Pre-training for Time Series Forecasting in the CloudOps Domain

1 code implementation • 8 Oct 2023 • Gerald Woo, Chenghao Liu, Akshat Kumar, Doyen Sahoo

Time series has been left behind in the era of pre-training and transfer learning.

Identifiability Matters: Revealing the Hidden Recoverable Condition in Unbiased Learning to Rank

no code implementations • 27 Sep 2023 • Mouxiang Chen, Chenghao Liu, Zemin Liu, Zhuo Li, Jianling Sun

Unbiased Learning to Rank (ULTR) aims to train unbiased ranking models from biased click logs, by explicitly modeling a generation process for user behavior and fitting click data based on examination hypothesis.

FedET: A Communication-Efficient Federated Class-Incremental Learning Framework Based on Enhanced Transformer

no code implementations • 27 Jun 2023 • Chenghao Liu, Xiaoyang Qu, Jianzong Wang, Jing Xiao

To address local forgetting caused by new classes of new tasks and global forgetting brought by non-i.i.d. (non-independent and identically distributed) class imbalance across different local clients, we proposed an Enhancer distillation method to modify the imbalance between old and new knowledge and repair the non-i.i.d.

PyRCA: A Library for Metric-based Root Cause Analysis

1 code implementation • 20 Jun 2023 • Chenghao Liu, Wenzhuo Yang, Himanshu Mittal, Manpreet Singh, Doyen Sahoo, Steven C. H. Hoi

We introduce PyRCA, an open-source Python machine learning library of Root Cause Analysis (RCA) for Artificial Intelligence for IT Operations (AIOps).

OTW: Optimal Transport Warping for Time Series

no code implementations • 1 Jun 2023 • Fabian Latorre, Chenghao Liu, Doyen Sahoo, Steven C. H. Hoi

Dynamic Time Warping (DTW) has become the pragmatic choice for measuring distance between time series.
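
For readers unfamiliar with DTW, here is a minimal sketch of the textbook dynamic program for two one-dimensional series. It illustrates the baseline the snippet refers to, not the OTW method proposed in the paper; the function name `dtw_distance` and the absolute-difference cost are assumptions chosen for illustration.

```python
import math

def dtw_distance(a, b):
    """Textbook DTW between two numeric sequences.

    Runs in O(len(a) * len(b)) time and uses |x - y| as the pointwise cost.
    """
    n, m = len(a), len(b)
    # cost[i][j] = minimal cumulative cost of aligning a[:i] with b[:j].
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])
            # Extend the cheapest of: step in a only, step in b only, step in both.
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]

# A repeated sample in one series can be absorbed by the warping.
distance = dtw_distance([1, 2, 3], [1, 2, 2, 3])
```

The quadratic table is the main cost of this formulation on long series.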

AI for IT Operations (AIOps) on Cloud Platforms: Reviews, Opportunities and Challenges

no code implementations • 10 Apr 2023 • Qian Cheng, Doyen Sahoo, Amrita Saha, Wenzhuo Yang, Chenghao Liu, Gerald Woo, Manpreet Singh, Silvio Savarese, Steven C. H. Hoi

There are a wide variety of problems to address, and multiple use-cases, where AI capabilities can be leveraged to enhance operational efficiency.

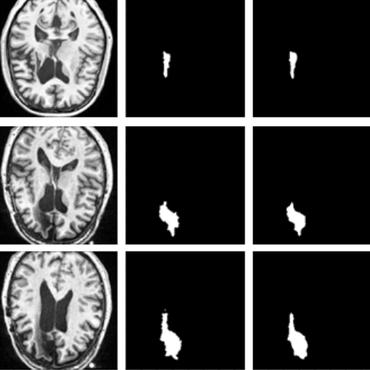

Unsupervised Brain Tumor Segmentation with Image-based Prompts

no code implementations • 4 Apr 2023 • Xinru Zhang, Ni Ou, Chenghao Liu, Zhizheng Zhuo, Yaou Liu, Chuyang Ye

Specifically, instead of directly training a model for brain tumor segmentation with a large amount of annotated data, we seek to train a model that can answer the question: is a voxel in the input image associated with tumor-like hyper-/hypo-intensity?

HINormer: Representation Learning On Heterogeneous Information Networks with Graph Transformer

1 code implementation • 22 Feb 2023 • Qiheng Mao, Zemin Liu, Chenghao Liu, Jianling Sun

To bridge this gap, in this paper we investigate the representation learning on HINs with Graph Transformer, and propose a novel model named HINormer, which capitalizes on a larger-range aggregation mechanism for node representation learning.

LogAI: A Library for Log Analytics and Intelligence

1 code implementation • 31 Jan 2023 • Qian Cheng, Amrita Saha, Wenzhuo Yang, Chenghao Liu, Doyen Sahoo, Steven Hoi

In order to enable users to perform multiple types of AI-based log analysis tasks in a uniform manner, we introduce LogAI (https://github.com/salesforce/logai), a one-stop open source library for log analytics and intelligence.

Salesforce CausalAI Library: A Fast and Scalable Framework for Causal Analysis of Time Series and Tabular Data

1 code implementation • 25 Jan 2023 • Devansh Arpit, Matthew Fernandez, Itai Feigenbaum, Weiran Yao, Chenghao Liu, Wenzhuo Yang, Paul Josel, Shelby Heinecke, Eric Hu, Huan Wang, Stephen Hoi, Caiming Xiong, Kun Zhang, Juan Carlos Niebles

Finally, we provide a user interface (UI) that allows users to perform causal analysis on data without coding.

Continual Learning, Fast and Slow

1 code implementation • 6 Sep 2022 • Quang Pham, Chenghao Liu, Steven C. H. Hoi

Motivated by this theory, we propose DualNets (for Dual Networks), a general continual learning framework comprising a fast learning system for supervised learning of pattern-separated representation from specific tasks and a slow learning system for representation learning of task-agnostic general representation via Self-Supervised Learning (SSL).

Learning Deep Time-index Models for Time Series Forecasting

1 code implementation • 13 Jul 2022 • Gerald Woo, Chenghao Liu, Doyen Sahoo, Akshat Kumar, Steven Hoi

Deep learning has been actively applied to time series forecasting, leading to a deluge of new methods, belonging to the class of historical-value models.

Scalar is Not Enough: Vectorization-based Unbiased Learning to Rank

1 code implementation • 3 Jun 2022 • Mouxiang Chen, Chenghao Liu, Zemin Liu, Jianling Sun

Most of the current ULTR methods are based on the examination hypothesis (EH), which assumes that the click probability can be factorized into two scalar functions, one related to ranking features and the other related to bias factors.
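
The scalar factorization described above can be sketched in a few lines. This is an illustration of the examination hypothesis only, not of the vectorized method the paper proposes; the function names and the numeric values are hypothetical.

```python
def click_probability(relevance, observation):
    """Examination hypothesis: P(click) = relevance(features) * observation(bias).

    Both arguments are scalars in [0, 1]; the names are illustrative assumptions.
    """
    return relevance * observation

def debiased_relevance(click_rate, observation):
    """Invert the factorization when the observation probability is known."""
    return click_rate / observation

# Hypothetical values: a relevant document shown at a rarely examined position.
p_click = click_probability(relevance=0.8, observation=0.5)
recovered = debiased_relevance(p_click, observation=0.5)
```

Under this assumption, dividing an observed click rate by the examination probability recovers the relevance term, which is the idea behind inverse-propensity-style debiasing.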

Continual Normalization: Rethinking Batch Normalization for Online Continual Learning

1 code implementation • ICLR 2022 • Quang Pham, Chenghao Liu, Steven Hoi

Existing continual learning methods use Batch Normalization (BN) to facilitate training and improve generalization across tasks.

Learning Fast and Slow for Online Time Series Forecasting

1 code implementation • 23 Feb 2022 • Quang Pham, Chenghao Liu, Doyen Sahoo, Steven C. H. Hoi

The fast adaptation capability of deep neural networks in non-stationary environments is critical for online time series forecasting.

CoST: Contrastive Learning of Disentangled Seasonal-Trend Representations for Time Series Forecasting

1 code implementation • ICLR 2022 • Gerald Woo, Chenghao Liu, Doyen Sahoo, Akshat Kumar, Steven Hoi

Motivated by the recent success of representation learning in computer vision and natural language processing, we argue that a more promising paradigm for time series forecasting is to first learn disentangled feature representations, followed by a simple regression fine-tuning step; we justify such a paradigm from a causal perspective.

ETSformer: Exponential Smoothing Transformers for Time-series Forecasting

2 code implementations • 3 Feb 2022 • Gerald Woo, Chenghao Liu, Doyen Sahoo, Akshat Kumar, Steven Hoi

Transformers have been actively studied for time-series forecasting in recent years.

Node-wise Localization of Graph Neural Networks

1 code implementation • 27 Oct 2021 • Zemin Liu, Yuan Fang, Chenghao Liu, Steven C. H. Hoi

Ideally, how a node receives its neighborhood information should be a function of its local context, to diverge from the global GNN model shared by all nodes.

DualNet: Continual Learning, Fast and Slow

1 code implementation • NeurIPS 2021 • Quang Pham, Chenghao Liu, Steven Hoi

According to Complementary Learning Systems (CLS) theory (McClelland et al., 1995) in neuroscience, humans do effective continual learning through two complementary systems: a fast learning system centered on the hippocampus for rapid learning of the specifics and individual experiences, and a slow learning system located in the neocortex for the gradual acquisition of structured knowledge about the environment.

Modeling Dynamic Attributes for Next Basket Recommendation

no code implementations • 23 Sep 2021 • Yongjun Chen, Jia Li, Chenghao Liu, Chenxi Li, Markus Anderle, Julian McAuley, Caiming Xiong

However, properly integrating them into user interest models is challenging since attribute dynamics can be diverse such as time-interval aware, periodic patterns (etc.

Merlion: A Machine Learning Library for Time Series

2 code implementations • 20 Sep 2021 • Aadyot Bhatnagar, Paul Kassianik, Chenghao Liu, Tian Lan, Wenzhuo Yang, Rowan Cassius, Doyen Sahoo, Devansh Arpit, Sri Subramanian, Gerald Woo, Amrita Saha, Arun Kumar Jagota, Gokulakrishnan Gopalakrishnan, Manpreet Singh, K C Krithika, Sukumar Maddineni, Daeki Cho, Bo Zong, Yingbo Zhou, Caiming Xiong, Silvio Savarese, Steven Hoi, Huan Wang

We introduce Merlion, an open-source machine learning library for time series.

CarveMix: A Simple Data Augmentation Method for Brain Lesion Segmentation

1 code implementation • 16 Aug 2021 • Xinru Zhang, Chenghao Liu, Ni Ou, Xiangzhu Zeng, Xiaoliang Xiong, Yizhou Yu, Zhiwen Liu, Chuyang Ye

Data augmentation is a widely used strategy that improves the training of CNNs, and the design of the augmentation method for brain lesion segmentation is still an open problem.

Online Continual Learning Under Domain Shift

no code implementations • 1 Jan 2021 • Quang Pham, Chenghao Liu, Steven Hoi

CIER employs adversarial training to correct the shift in $P(X, Y)$ by matching $P(X|Y)$, which results in an invariant representation that can generalize to unseen domains during inference.

Contextual Transformation Networks for Online Continual Learning

no code implementations • ICLR 2021 • Quang Pham, Chenghao Liu, Doyen Sahoo, Steven Hoi

Continual learning methods with fixed architectures rely on a single network to learn models that can perform well on all tasks.

Localized Meta-Learning: A PAC-Bayes Analysis for Meta-Learning Beyond Global Prior

no code implementations • 1 Jan 2021 • Chenghao Liu, Tao Lu, Doyen Sahoo, Yuan Fang, Kun Zhang, Steven Hoi

Meta-learning methods learn the meta-knowledge among various training tasks and aim to promote the learning of new tasks under the task similarity assumption.

Learning Transferrable Parameters for Long-tailed Sequential User Behavior Modeling

no code implementations • 22 Oct 2020 • Jianwen Yin, Chenghao Liu, Weiqing Wang, Jianling Sun, Steven C. H. Hoi

Sequential user behavior modeling plays a crucial role in online user-oriented services, such as product purchasing, news feed consumption, and online advertising.

Bilevel Continual Learning

1 code implementation • 30 Jul 2020 • Quang Pham, Doyen Sahoo, Chenghao Liu, Steven C. H. Hoi

Continual learning aims to learn continuously from a stream of tasks and data in an online-learning fashion, being capable of exploiting what was learned previously to improve current and future tasks while still being able to perform well on the previous tasks.

Adaptive Task Sampling for Meta-Learning

no code implementations • ECCV 2020 • Chenghao Liu, Zhihao Wang, Doyen Sahoo, Yuan Fang, Kun Zhang, Steven C. H. Hoi

Meta-learning methods have been extensively studied and applied in computer vision, especially for few-shot classification tasks.

Graph Prototypical Networks for Few-shot Learning on Attributed Networks

1 code implementation • 23 Jun 2020 • Kaize Ding, Jianling Wang, Jundong Li, Kai Shu, Chenghao Liu, Huan Liu

By constructing a pool of semi-supervised node classification tasks to mimic the real test environment, GPN is able to perform meta-learning on an attributed network and derive a highly generalizable model for handling the target classification task.

UniConv: A Unified Conversational Neural Architecture for Multi-domain Task-oriented Dialogues

1 code implementation • EMNLP 2020 • Hung Le, Doyen Sahoo, Chenghao Liu, Nancy F. Chen, Steven C. H. Hoi

Building an end-to-end conversational agent for multi-domain task-oriented dialogues has been an open challenge for two main reasons.

MCEN: Bridging Cross-Modal Gap between Cooking Recipes and Dish Images with Latent Variable Model

no code implementations • CVPR 2020 • Han Fu, Rui Wu, Chenghao Liu, Jianling Sun

Nowadays, driven by the increasing concern about diet and health, food computing has attracted enormous attention from both industry and the research community.

A^2-GCN: An Attribute-aware Attentive GCN Model for Recommendation

no code implementations • 20 Mar 2020 • Fan Liu, Zhiyong Cheng, Lei Zhu, Chenghao Liu, Liqiang Nie

Considering the fact that for different users, the attributes of an item have different influence on their preference for this item, we design a novel attention mechanism to filter the message passed from an item to a target user by considering the attribute information.

Cross-Modal Food Retrieval: Learning a Joint Embedding of Food Images and Recipes with Semantic Consistency and Attention Mechanism

no code implementations • 9 Mar 2020 • Hao Wang, Doyen Sahoo, Chenghao Liu, Ke Shu, Palakorn Achananuparp, Ee-Peng Lim, Steven C. H. Hoi

Food retrieval is an important task to perform analysis of food-related information, where we are interested in retrieving relevant information about the queried food item such as ingredients, cooking instructions, etc.

Ranked #7 on Cross-Modal Retrieval on Recipe1M

Localized Meta-Learning: A PAC-Bayes Analysis for Meta-Learning Beyond Global Prior

no code implementations • 25 Sep 2019 • Chenghao Liu, Tao Lu, Doyen Sahoo, Yuan Fang, Steven C. H. Hoi

Meta-learning methods learn the meta-knowledge among various training tasks and aim to promote the learning of new tasks under the task similarity assumption.

Reference Network for Neural Machine Translation

no code implementations • ACL 2019 • Han Fu, Chenghao Liu, Jianling Sun

Neural Machine Translation (NMT) has achieved notable success in recent years.

Feature Interaction-aware Graph Neural Networks

no code implementations • 19 Aug 2019 • Kaize Ding, Yichuan Li, Jundong Li, Chenghao Liu, Huan Liu

Inspired by the immense success of deep learning, graph neural networks (GNNs) are widely used to learn powerful node representations and have demonstrated promising performance on different graph learning tasks.

Compositional Coding for Collaborative Filtering

1 code implementation • 9 May 2019 • Chenghao Liu, Tao Lu, Xin Wang, Zhiyong Cheng, Jianling Sun, Steven C. H. Hoi

However, CF with binary codes naturally suffers from low accuracy due to the limited representation capability of each bit, which prevents it from modeling the complex structure of the data.

Learning Cross-Modal Embeddings with Adversarial Networks for Cooking Recipes and Food Images

2 code implementations • CVPR 2019 • Hao Wang, Doyen Sahoo, Chenghao Liu, Ee-Peng Lim, Steven C. H. Hoi

Food computing is playing an increasingly important role in human daily life, and has found tremendous applications in guiding human behavior towards smart food consumption and healthy lifestyle.

Ranked #8 on Cross-Modal Retrieval on Recipe1M

Meta-Learning with Domain Adaptation for Few-Shot Learning under Domain Shift

no code implementations • ICLR 2019 • Doyen Sahoo, Hung Le, Chenghao Liu, Steven C. H. Hoi

Most existing work assumes that both training and test tasks are drawn from the same distribution, and a large amount of labeled data is available in the training tasks.

SL$^2$MF: Predicting Synthetic Lethality in Human Cancers via Logistic Matrix Factorization

no code implementations • 20 Oct 2018 • Yong Liu, Min Wu, Chenghao Liu, Xiao-Li Li, Jie Zheng

Moreover, we also incorporate biological knowledge about genes from protein-protein interaction (PPI) data and Gene Ontology (GO).

Malicious URL Detection using Machine Learning: A Survey

1 code implementation • 25 Jan 2017 • Doyen Sahoo, Chenghao Liu, Steven C. H. Hoi

This article aims to provide a comprehensive survey and a structural understanding of Malicious URL Detection techniques using machine learning.

SOL: A Library for Scalable Online Learning Algorithms

1 code implementation • 28 Oct 2016 • Yue Wu, Steven C. H. Hoi, Chenghao Liu, Jing Lu, Doyen Sahoo, Nenghai Yu

SOL is an open-source library for scalable online learning algorithms, and is particularly suitable for learning with high-dimensional data.

Online Bayesian Collaborative Topic Regression

no code implementations • 28 May 2016 • Chenghao Liu, Tao Jin, Steven C. H. Hoi, Peilin Zhao, Jianling Sun

In this paper, we propose a novel scheme of Online Bayesian Collaborative Topic Regression (OBCTR) which is efficient and scalable for learning from data streams.