Search Results for author: Doina Precup

Found 194 papers, 64 papers with code

Offline Multitask Representation Learning for Reinforcement Learning

no code implementations • 18 Mar 2024 • Haque Ishfaq, Thanh Nguyen-Tang, Songtao Feng, Raman Arora, Mengdi Wang, Ming Yin, Doina Precup

We study offline multitask representation learning in reinforcement learning (RL), where a learner is provided with an offline dataset from different tasks that share a common representation and is asked to learn the shared representation.

Discrete Probabilistic Inference as Control in Multi-path Environments

1 code implementation • 15 Feb 2024 • Tristan Deleu, Padideh Nouri, Nikolay Malkin, Doina Precup, Yoshua Bengio

We consider the problem of sampling from a discrete and structured distribution as a sequential decision problem, where the objective is to find a stochastic policy such that objects are sampled at the end of this sequential process proportionally to some predefined reward.

Mixtures of Experts Unlock Parameter Scaling for Deep RL

no code implementations • 13 Feb 2024 • Johan Obando-Ceron, Ghada Sokar, Timon Willi, Clare Lyle, Jesse Farebrother, Jakob Foerster, Gintare Karolina Dziugaite, Doina Precup, Pablo Samuel Castro

The recent rapid progress in (self) supervised learning models is in large part predicted by empirical scaling laws: a model's performance scales proportionally to its size.

On the Privacy of Selection Mechanisms with Gaussian Noise

1 code implementation • 9 Feb 2024 • Jonathan Lebensold, Doina Precup, Borja Balle

In this work, we revisit the analysis of Report Noisy Max and Above Threshold with Gaussian noise and show that, under the additional assumption that the underlying queries are bounded, it is possible to provide pure ex-ante DP bounds for Report Noisy Max and pure ex-post DP bounds for Above Threshold.

QGFN: Controllable Greediness with Action Values

no code implementations • 7 Feb 2024 • Elaine Lau, Stephen Zhewen Lu, Ling Pan, Doina Precup, Emmanuel Bengio

Generative Flow Networks (GFlowNets; GFNs) are a family of reward/energy-based generative methods for combinatorial objects, capable of generating diverse and high-utility samples.

Code as Reward: Empowering Reinforcement Learning with VLMs

no code implementations • 7 Feb 2024 • David Venuto, Sami Nur Islam, Martin Klissarov, Doina Precup, Sherry Yang, Ankit Anand

Pre-trained Vision-Language Models (VLMs) are able to understand visual concepts, describe and decompose complex tasks into sub-tasks, and provide feedback on task completion.

Effective Protein-Protein Interaction Exploration with PPIretrieval

no code implementations • 6 Feb 2024 • Chenqing Hua, Connor Coley, Guy Wolf, Doina Precup, Shuangjia Zheng

Protein-protein interactions (PPIs) are crucial in regulating numerous cellular functions, including signal transduction, transportation, and immune defense.

Prediction and Control in Continual Reinforcement Learning

1 code implementation • NeurIPS 2023 • Nishanth Anand, Doina Precup

Temporal difference (TD) learning is often used to update the estimate of the value function which is used by RL agents to extract useful policies.

Nash Learning from Human Feedback

no code implementations • 1 Dec 2023 • Rémi Munos, Michal Valko, Daniele Calandriello, Mohammad Gheshlaghi Azar, Mark Rowland, Zhaohan Daniel Guo, Yunhao Tang, Matthieu Geist, Thomas Mesnard, Andrea Michi, Marco Selvi, Sertan Girgin, Nikola Momchev, Olivier Bachem, Daniel J. Mankowitz, Doina Precup, Bilal Piot

We term this approach Nash learning from human feedback (NLHF).

Learning domain-invariant classifiers for infant cry sounds

no code implementations • 30 Nov 2023 • Charles C. Onu, Hemanth K. Sheetha, Arsenii Gorin, Doina Precup

The issue of domain shift remains a problematic phenomenon in most real-world datasets and clinical audio is no exception.

Finding Increasingly Large Extremal Graphs with AlphaZero and Tabu Search

no code implementations • 6 Nov 2023 • Abbas Mehrabian, Ankit Anand, Hyunjik Kim, Nicolas Sonnerat, Matej Balog, Gheorghe Comanici, Tudor Berariu, Andrew Lee, Anian Ruoss, Anna Bulanova, Daniel Toyama, Sam Blackwell, Bernardino Romera Paredes, Petar Veličković, Laurent Orseau, Joonkyung Lee, Anurag Murty Naredla, Doina Precup, Adam Zsolt Wagner

This work studies a central extremal graph theory problem inspired by a 1975 conjecture of Erdős, which aims to find graphs with a given size (number of nodes) that maximize the number of edges without having 3- or 4-cycles.

Conditions on Preference Relations that Guarantee the Existence of Optimal Policies

no code implementations • 3 Nov 2023 • Jonathan Colaço Carr, Prakash Panangaden, Doina Precup

Current results guaranteeing the existence of optimal policies in LfPF problems assume that both the preferences and transition dynamics are determined by a Markov Decision Process.

DGFN: Double Generative Flow Networks

no code implementations • 30 Oct 2023 • Elaine Lau, Nikhil Vemgal, Doina Precup, Emmanuel Bengio

Deep learning is emerging as an effective tool in drug discovery, with potential applications in both predictive and generative models.

Forecaster: Towards Temporally Abstract Tree-Search Planning from Pixels

no code implementations • 16 Oct 2023 • Thomas Jiralerspong, Flemming Kondrup, Doina Precup, Khimya Khetarpal

The ability to plan at many different levels of abstraction enables agents to envision the long-term repercussions of their decisions and thus enables sample-efficient learning.

A cry for help: Early detection of brain injury in newborns

no code implementations • 12 Oct 2023 • Charles C. Onu, Samantha Latremouille, Arsenii Gorin, Junhao Wang, Innocent Udeogu, Uchenna Ekwochi, Peter O. Ubuane, Omolara A. Kehinde, Muhammad A. Salisu, Datonye Briggs, Yoshua Bengio, Doina Precup

Since the 1960s, neonatal clinicians have known that newborns suffering from certain neurological conditions exhibit altered crying patterns such as the high-pitched cry in birth asphyxia.

Consciousness-Inspired Spatio-Temporal Abstractions for Better Generalization in Reinforcement Learning

1 code implementation • 30 Sep 2023 • Mingde Zhao, Safa Alver, Harm van Seijen, Romain Laroche, Doina Precup, Yoshua Bengio

Inspired by human conscious planning, we propose Skipper, a model-based reinforcement learning framework utilizing spatio-temporal abstractions to generalize better in novel situations.

Policy composition in reinforcement learning via multi-objective policy optimization

no code implementations • 29 Aug 2023 • Shruti Mishra, Ankit Anand, Jordan Hoffmann, Nicolas Heess, Martin Riedmiller, Abbas Abdolmaleki, Doina Precup

In two domains with continuous observation and action spaces, our agents successfully compose teacher policies in sequence and in parallel, and are also able to further extend the policies of the teachers in order to solve the task.

On the Convergence of Bounded Agents

no code implementations • 20 Jul 2023 • David Abel, André Barreto, Hado van Hasselt, Benjamin Van Roy, Doina Precup, Satinder Singh

Standard models of the reinforcement learning problem give rise to a straightforward definition of convergence: An agent converges when its behavior or performance in each environment state stops changing.

A Definition of Continual Reinforcement Learning

no code implementations • NeurIPS 2023 • David Abel, André Barreto, Benjamin Van Roy, Doina Precup, Hado van Hasselt, Satinder Singh

Using this new language, we define a continual learning agent as one that can be understood as carrying out an implicit search process indefinitely, and continual reinforcement learning as the setting in which the best agents are all continual learning agents.

An Empirical Study of the Effectiveness of Using a Replay Buffer on Mode Discovery in GFlowNets

no code implementations • 15 Jul 2023 • Nikhil Vemgal, Elaine Lau, Doina Precup

GFlowNets are a special class of algorithms designed to generate diverse candidates, $x$, from a discrete set, by learning a policy that approximates the proportional sampling of $R(x)$.

Acceleration in Policy Optimization

no code implementations • 18 Jun 2023 • Veronica Chelu, Tom Zahavy, Arthur Guez, Doina Precup, Sebastian Flennerhag

We work towards a unifying paradigm for accelerating policy optimization methods in reinforcement learning (RL) by integrating foresight in the policy improvement step via optimistic and adaptive updates.

For SALE: State-Action Representation Learning for Deep Reinforcement Learning

2 code implementations • NeurIPS 2023 • Scott Fujimoto, Wei-Di Chang, Edward J. Smith, Shixiang Shane Gu, Doina Precup, David Meger

In the field of reinforcement learning (RL), representation learning is a proven tool for complex image-based tasks, but is often overlooked for environments with low-level states, such as physical control problems.

Provable and Practical: Efficient Exploration in Reinforcement Learning via Langevin Monte Carlo

1 code implementation • 29 May 2023 • Haque Ishfaq, Qingfeng Lan, Pan Xu, A. Rupam Mahmood, Doina Precup, Anima Anandkumar, Kamyar Azizzadenesheli

One of the key shortcomings of existing Thompson sampling algorithms is the need to perform a Gaussian approximation of the posterior distribution, which is not a good surrogate in most practical settings.

Policy Gradient Methods in the Presence of Symmetries and State Abstractions

2 code implementations • 9 May 2023 • Prakash Panangaden, Sahand Rezaei-Shoshtari, Rosie Zhao, David Meger, Doina Precup

Our policy gradient results allow for leveraging approximate symmetries of the environment for policy optimization.

CryCeleb: A Speaker Verification Dataset Based on Infant Cry Sounds

2 code implementations • 1 May 2023 • David Budaghyan, Charles C. Onu, Arsenii Gorin, Cem Subakan, Doina Precup

This paper describes the Ubenwa CryCeleb dataset - a labeled collection of infant cries - and the accompanying CryCeleb 2023 task, which is a public speaker verification challenge based on cry sounds.

MUDiff: Unified Diffusion for Complete Molecule Generation

no code implementations • 28 Apr 2023 • Chenqing Hua, Sitao Luan, Minkai Xu, Rex Ying, Jie Fu, Stefano Ermon, Doina Precup

Our model is a promising approach for designing stable and diverse molecules and can be applied to a wide range of tasks in molecular modeling.

When Do Graph Neural Networks Help with Node Classification? Investigating the Impact of Homophily Principle on Node Distinguishability

1 code implementation • 25 Apr 2023 • Sitao Luan, Chenqing Hua, Minkai Xu, Qincheng Lu, Jiaqi Zhu, Xiao-Wen Chang, Jie Fu, Jure Leskovec, Doina Precup

Homophily principle, i.e., nodes with the same labels are more likely to be connected, has been believed to be the main reason for the performance superiority of Graph Neural Networks (GNNs) over Neural Networks on node classification tasks.

Accelerating exploration and representation learning with offline pre-training

no code implementations • 31 Mar 2023 • Bogdan Mazoure, Jake Bruce, Doina Precup, Rob Fergus, Ankit Anand

In this work, we follow the hypothesis that exploration and representation learning can be improved by separately learning two different models from a single offline dataset.

The Stable Entropy Hypothesis and Entropy-Aware Decoding: An Analysis and Algorithm for Robust Natural Language Generation

no code implementations • 14 Feb 2023 • Kushal Arora, Timothy J. O'Donnell, Doina Precup, Jason Weston, Jackie C. K. Cheung

State-of-the-art language generation models can degenerate when applied to open-ended generation problems such as text completion, story generation, or dialog modeling.

Minimal Value-Equivalent Partial Models for Scalable and Robust Planning in Lifelong Reinforcement Learning

no code implementations • 24 Jan 2023 • Safa Alver, Doina Precup

Learning models of the environment from pure interaction is often considered an essential component of building lifelong reinforcement learning agents.

Model-based Reinforcement Learning

reinforcement-learning

On the Challenges of using Reinforcement Learning in Precision Drug Dosing: Delay and Prolongedness of Action Effects

1 code implementation • 2 Jan 2023 • Sumana Basu, Marc-André Legault, Adriana Romero-Soriano, Doina Precup

Drug dosing is an important application of AI, which can be formulated as a Reinforcement Learning (RL) problem.

Offline Policy Optimization in RL with Variance Regularizaton

no code implementations • 29 Dec 2022 • Riashat Islam, Samarth Sinha, Homanga Bharadhwaj, Samin Yeasar Arnob, Zhuoran Yang, Animesh Garg, Zhaoran Wang, Lihong Li, Doina Precup

Learning policies from fixed offline datasets is a key challenge to scale up reinforcement learning (RL) algorithms towards practical applications.

Complete the Missing Half: Augmenting Aggregation Filtering with Diversification for Graph Convolutional Neural Networks

no code implementations • 21 Dec 2022 • Sitao Luan, Mingde Zhao, Chenqing Hua, Xiao-Wen Chang, Doina Precup

The core operation of current Graph Neural Networks (GNNs) is the aggregation enabled by the graph Laplacian or message passing, which filters the neighborhood information of nodes.

Multi-Environment Pretraining Enables Transfer to Action Limited Datasets

no code implementations • 23 Nov 2022 • David Venuto, Sherry Yang, Pieter Abbeel, Doina Precup, Igor Mordatch, Ofir Nachum

Using massive datasets to train large-scale models has emerged as a dominant approach for broad generalization in natural language and vision applications.

Simulating Human Gaze with Neural Visual Attention

no code implementations • 22 Nov 2022 • Leo Schwinn, Doina Precup, Bjoern Eskofier, Dario Zanca

Existing models of human visual attention are generally unable to incorporate direct task guidance and therefore cannot model an intent or goal when exploring a scene.

On learning history based policies for controlling Markov decision processes

no code implementations • 6 Nov 2022 • Gandharv Patil, Aditya Mahajan, Doina Precup

Reinforcement learning (RL) folklore suggests that history-based function approximation methods, such as recurrent neural nets or history-based state abstraction, perform better than their memory-less counterparts, due to the fact that function approximation in Markov decision processes (MDP) can be viewed as inducing a Partially observable MDP.

When Do We Need Graph Neural Networks for Node Classification?

no code implementations • 30 Oct 2022 • Sitao Luan, Chenqing Hua, Qincheng Lu, Jiaqi Zhu, Xiao-Wen Chang, Doina Precup

Graph Neural Networks (GNNs) extend basic Neural Networks (NNs) by additionally making use of graph structure based on the relational inductive bias (edge bias), rather than treating the nodes as collections of independent and identically distributed (i.i.d.)

Revisiting Heterophily For Graph Neural Networks

1 code implementation • 14 Oct 2022 • Sitao Luan, Chenqing Hua, Qincheng Lu, Jiaqi Zhu, Mingde Zhao, Shuyuan Zhang, Xiao-Wen Chang, Doina Precup

ACM is more powerful than the commonly used uni-channel framework for node classification tasks on heterophilic graphs and is easy to implement in baseline GNN layers.

Inductive Bias

Node Classification on Non-Homophilic (Heterophilic) Graphs

Finite time analysis of temporal difference learning with linear function approximation: Tail averaging and regularisation

no code implementations • 12 Oct 2022 • Gandharv Patil, Prashanth L. A., Dheeraj Nagaraj, Doina Precup

We study the finite-time behaviour of the popular temporal difference (TD) learning algorithm when combined with tail-averaging.

Towards Safe Mechanical Ventilation Treatment Using Deep Offline Reinforcement Learning

1 code implementation • 5 Oct 2022 • Flemming Kondrup, Thomas Jiralerspong, Elaine Lau, Nathan de Lara, Jacob Shkrob, My Duc Tran, Doina Precup, Sumana Basu

We design a clinically relevant intermediate reward that encourages continuous improvement of the patient vitals as well as addresses the challenge of sparse reward in RL.

Bayesian Q-learning With Imperfect Expert Demonstrations

no code implementations • 1 Oct 2022 • Fengdi Che, Xiru Zhu, Doina Precup, David Meger, Gregory Dudek

Guided exploration with expert demonstrations improves data efficiency for reinforcement learning, but current algorithms often overuse expert information.

Continuous MDP Homomorphisms and Homomorphic Policy Gradient

1 code implementation • 15 Sep 2022 • Sahand Rezaei-Shoshtari, Rosie Zhao, Prakash Panangaden, David Meger, Doina Precup

Abstraction has been widely studied as a way to improve the efficiency and generalization of reinforcement learning algorithms.

Understanding Decision-Time vs. Background Planning in Model-Based Reinforcement Learning

no code implementations • 16 Jun 2022 • Safa Alver, Doina Precup

After viewing them through the lens of dynamic programming, we first consider the classical instantiations of these planning styles and provide theoretical results and hypotheses on which one will perform better in the pure planning, planning & learning, and transfer learning settings.

Model-based Reinforcement Learning

reinforcement-learning

Improving Robustness against Real-World and Worst-Case Distribution Shifts through Decision Region Quantification

no code implementations • 19 May 2022 • Leo Schwinn, Leon Bungert, An Nguyen, René Raab, Falk Pulsmeyer, Doina Precup, Björn Eskofier, Dario Zanca

The reliability of neural networks is essential for their use in safety-critical applications.

Learning how to Interact with a Complex Interface using Hierarchical Reinforcement Learning

no code implementations • 21 Apr 2022 • Gheorghe Comanici, Amelia Glaese, Anita Gergely, Daniel Toyama, Zafarali Ahmed, Tyler Jackson, Philippe Hamel, Doina Precup

While the native action space is completely intractable for simple DQN agents, our architecture can be used to establish an effective way to interact with different tasks, significantly improving the performance of the same DQN agent over different levels of abstraction.

Hierarchical Reinforcement Learning

reinforcement-learning

Behind the Machine's Gaze: Neural Networks with Biologically-inspired Constraints Exhibit Human-like Visual Attention

no code implementations • 19 Apr 2022 • Leo Schwinn, Doina Precup, Björn Eskofier, Dario Zanca

By and large, existing computational models of visual attention tacitly assume perfect vision and full access to the stimulus and thereby deviate from foveated biological vision.

COptiDICE: Offline Constrained Reinforcement Learning via Stationary Distribution Correction Estimation

1 code implementation • ICLR 2022 • Jongmin Lee, Cosmin Paduraru, Daniel J. Mankowitz, Nicolas Heess, Doina Precup, Kee-Eung Kim, Arthur Guez

We consider the offline constrained reinforcement learning (RL) problem, in which the agent aims to compute a policy that maximizes expected return while satisfying given cost constraints, learning only from a pre-collected dataset.

Towards Painless Policy Optimization for Constrained MDPs

1 code implementation • 11 Apr 2022 • Arushi Jain, Sharan Vaswani, Reza Babanezhad, Csaba Szepesvari, Doina Precup

We propose a generic primal-dual framework that allows us to bound the reward sub-optimality and constraint violation for arbitrary algorithms in terms of their primal and dual regret on online linear optimization problems.

Selective Credit Assignment

no code implementations • 20 Feb 2022 • Veronica Chelu, Diana Borsa, Doina Precup, Hado van Hasselt

Efficient credit assignment is essential for reinforcement learning algorithms in both prediction and control settings.

Improving Sample Efficiency of Value Based Models Using Attention and Vision Transformers

no code implementations • 1 Feb 2022 • Amir Ardalan Kalantari, Mohammad Amini, Sarath Chandar, Doina Precup

Much of recent Deep Reinforcement Learning success is owed to the neural architecture's potential to learn and use effective internal representations of the world.

Why Should I Trust You, Bellman? The Bellman Error is a Poor Replacement for Value Error

no code implementations • 28 Jan 2022 • Scott Fujimoto, David Meger, Doina Precup, Ofir Nachum, Shixiang Shane Gu

In this work, we study the use of the Bellman equation as a surrogate objective for value prediction accuracy.

The Paradox of Choice: Using Attention in Hierarchical Reinforcement Learning

1 code implementation • 24 Jan 2022 • Andrei Nica, Khimya Khetarpal, Doina Precup

Decision-making AI agents are often faced with two important challenges: the depth of the planning horizon, and the branching factor due to having many choices.

Attention Option-Critic

no code implementations • ICML Workshop LifelongML 2020 • Raviteja Chunduru, Doina Precup

Temporal abstraction in reinforcement learning is the ability of an agent to learn and use high-level behaviors, called options.

Single-Shot Pruning for Offline Reinforcement Learning

no code implementations • 31 Dec 2021 • Samin Yeasar Arnob, Riyasat Ohib, Sergey Plis, Doina Precup

We leverage a fixed dataset to prune neural networks before the start of RL training.

Importance of Empirical Sample Complexity Analysis for Offline Reinforcement Learning

no code implementations • 31 Dec 2021 • Samin Yeasar Arnob, Riashat Islam, Doina Precup

We hypothesize that empirically studying the sample complexity of offline reinforcement learning (RL) is crucial for the practical applications of RL in the real world.

Constructing a Good Behavior Basis for Transfer using Generalized Policy Updates

no code implementations • ICLR 2022 • Safa Alver, Doina Precup

We study the problem of learning a good set of policies, so that when combined together, they can solve a wide variety of unseen reinforcement learning tasks with no or very little new data.

Proving Theorems using Incremental Learning and Hindsight Experience Replay

no code implementations • 20 Dec 2021 • Eser Aygün, Laurent Orseau, Ankit Anand, Xavier Glorot, Vlad Firoiu, Lei M. Zhang, Doina Precup, Shibl Mourad

Traditional automated theorem provers for first-order logic depend on speed-optimized search and many handcrafted heuristics that are designed to work best over a wide range of domains.

Flexible Option Learning

1 code implementation • NeurIPS 2021 • Martin Klissarov, Doina Precup

Temporal abstraction in reinforcement learning (RL) offers the promise of improving generalization and knowledge transfer in complex environments, by propagating information more efficiently over time.

Hierarchical Reinforcement Learning

reinforcement-learning

On the Expressivity of Markov Reward

no code implementations • NeurIPS 2021 • David Abel, Will Dabney, Anna Harutyunyan, Mark K. Ho, Michael L. Littman, Doina Precup, Satinder Singh

We then provide a set of polynomial-time algorithms that construct a Markov reward function that allows an agent to optimize tasks of each of these three types, and correctly determine when no such reward function exists.

Temporal Abstraction in Reinforcement Learning with the Successor Representation

no code implementations • 12 Oct 2021 • Marlos C. Machado, Andre Barreto, Doina Precup, Michael Bowling

In this paper, we argue that the successor representation (SR), which encodes states based on the pattern of state visitation that follows them, can be seen as a natural substrate for the discovery and use of temporal abstractions.

Why Should I Trust You, Bellman? Evaluating the Bellman Objective with Off-Policy Data

no code implementations • 29 Sep 2021 • Scott Fujimoto, David Meger, Doina Precup, Ofir Nachum, Shixiang Shane Gu

In this work, we analyze the effectiveness of the Bellman equation as a proxy objective for value prediction accuracy in off-policy evaluation.

Is Heterophily A Real Nightmare For Graph Neural Networks on Performing Node Classification?

no code implementations • 29 Sep 2021 • Sitao Luan, Chenqing Hua, Qincheng Lu, Jiaqi Zhu, Mingde Zhao, Shuyuan Zhang, Xiao-Wen Chang, Doina Precup

In this paper, we first show that not all cases of heterophily are harmful for GNNs with aggregation operation.

Is Heterophily A Real Nightmare For Graph Neural Networks To Do Node Classification?

no code implementations • 12 Sep 2021 • Sitao Luan, Chenqing Hua, Qincheng Lu, Jiaqi Zhu, Mingde Zhao, Shuyuan Zhang, Xiao-Wen Chang, Doina Precup

In this paper, we first show that not all cases of heterophily are harmful for GNNs with aggregation operation.

Ranked #1 on Node Classification on Pubmed

Membership Inference Attacks Against Temporally Correlated Data in Deep Reinforcement Learning

no code implementations • 8 Sep 2021 • Maziar Gomrokchi, Susan Amin, Hossein Aboutalebi, Alexander Wong, Doina Precup

To address this gap, we propose an adversarial attack framework designed for testing the vulnerability of a state-of-the-art deep reinforcement learning algorithm to a membership inference attack.

A Survey of Exploration Methods in Reinforcement Learning

no code implementations • 1 Sep 2021 • Susan Amin, Maziar Gomrokchi, Harsh Satija, Herke van Hoof, Doina Precup

Exploration is an essential component of reinforcement learning algorithms, where agents need to learn how to predict and control unknown and often stochastic environments.

Temporally Abstract Partial Models

1 code implementation • NeurIPS 2021 • Khimya Khetarpal, Zafarali Ahmed, Gheorghe Comanici, Doina Precup

Humans and animals have the ability to reason and make predictions about different courses of action at many time scales.

Policy Gradients Incorporating the Future

no code implementations • ICLR 2022 • David Venuto, Elaine Lau, Doina Precup, Ofir Nachum

Reasoning about the future -- understanding how decisions in the present time affect outcomes in the future -- is one of the central challenges for reinforcement learning (RL), especially in highly-stochastic or partially observable environments.

The Option Keyboard: Combining Skills in Reinforcement Learning

no code implementations • NeurIPS 2019 • André Barreto, Diana Borsa, Shaobo Hou, Gheorghe Comanici, Eser Aygün, Philippe Hamel, Daniel Toyama, Jonathan Hunt, Shibl Mourad, David Silver, Doina Precup

Building on this insight and on previous results on transfer learning, we show how to approximate options whose cumulants are linear combinations of the cumulants of known options.

Randomized Exploration for Reinforcement Learning with General Value Function Approximation

1 code implementation • 15 Jun 2021 • Haque Ishfaq, Qiwen Cui, Viet Nguyen, Alex Ayoub, Zhuoran Yang, Zhaoran Wang, Doina Precup, Lin F. Yang

We propose a model-free reinforcement learning algorithm inspired by the popular randomized least squares value iteration (RLSVI) algorithm as well as the optimism principle.

A Deep Reinforcement Learning Approach to Marginalized Importance Sampling with the Successor Representation

1 code implementation • 12 Jun 2021 • Scott Fujimoto, David Meger, Doina Precup

We bridge the gap between MIS and deep reinforcement learning by observing that the density ratio can be computed from the successor representation of the target policy.

Preferential Temporal Difference Learning

1 code implementation • 11 Jun 2021 • Nishanth Anand, Doina Precup

When the agent lands in a state, its value can be used to compute the TD-error, which is then propagated to other states.

Flow Network based Generative Models for Non-Iterative Diverse Candidate Generation

4 code implementations • NeurIPS 2021 • Emmanuel Bengio, Moksh Jain, Maksym Korablyov, Doina Precup, Yoshua Bengio

Using insights from Temporal Difference learning, we propose GFlowNet, based on a view of the generative process as a flow network, making it possible to handle the tricky case where different trajectories can yield the same final state, e.g., there are many ways to sequentially add atoms to generate some molecular graph.

Correcting Momentum in Temporal Difference Learning

1 code implementation • 7 Jun 2021 • Emmanuel Bengio, Joelle Pineau, Doina Precup

A common optimization tool used in deep reinforcement learning is momentum, which consists in accumulating and discounting past gradients, reapplying them at each iteration.

A Consciousness-Inspired Planning Agent for Model-Based Reinforcement Learning

1 code implementation • NeurIPS 2021 • Mingde Zhao, Zhen Liu, Sitao Luan, Shuyuan Zhang, Doina Precup, Yoshua Bengio

We present an end-to-end, model-based deep reinforcement learning agent which dynamically attends to relevant parts of its state during planning.

Model-based Reinforcement Learning

Out-of-Distribution Generalization

Improving Long-Term Metrics in Recommendation Systems using Short-Horizon Reinforcement Learning

no code implementations • 1 Jun 2021 • Bogdan Mazoure, Paul Mineiro, Pavithra Srinath, Reza Sharifi Sedeh, Doina Precup, Adith Swaminathan

Targeting immediately measurable proxies such as clicks can lead to suboptimal recommendations due to misalignment with the long-term metric.

AndroidEnv: A Reinforcement Learning Platform for Android

2 code implementations • 27 May 2021 • Daniel Toyama, Philippe Hamel, Anita Gergely, Gheorghe Comanici, Amelia Glaese, Zafarali Ahmed, Tyler Jackson, Shibl Mourad, Doina Precup

We introduce AndroidEnv, an open-source platform for Reinforcement Learning (RL) research built on top of the Android ecosystem.

Is Heterophily A Real Nightmare For Graph Neural Networks Performing Node Classification?

no code implementations • NeurIPS 2021 • Sitao Luan, Chenqing Hua, Qincheng Lu, Jiaqi Zhu, Mingde Zhao, Shuyuan Zhang, Xiao-Wen Chang, Doina Precup

In this paper, we first show that not all cases of heterophily are harmful for GNNs with aggregation operation.

What is Going on Inside Recurrent Meta Reinforcement Learning Agents?

no code implementations • 29 Apr 2021 • Safa Alver, Doina Precup

Recurrent meta reinforcement learning (meta-RL) agents are agents that employ a recurrent neural network (RNN) for the purpose of "learning a learning algorithm".

Training a First-Order Theorem Prover from Synthetic Data

no code implementations • 5 Mar 2021 • Vlad Firoiu, Eser Aygun, Ankit Anand, Zafarali Ahmed, Xavier Glorot, Laurent Orseau, Lei Zhang, Doina Precup, Shibl Mourad

A major challenge in applying machine learning to automated theorem proving is the scarcity of training data, which is a key ingredient in training successful deep learning models.

Variance Penalized On-Policy and Off-Policy Actor-Critic

1 code implementation • 3 Feb 2021 • Arushi Jain, Gandharv Patil, Ayush Jain, Khimya Khetarpal, Doina Precup

Reinforcement learning algorithms are typically geared towards optimizing the expected return of an agent.

Offline Policy Optimization with Variance Regularization

no code implementations • 1 Jan 2021 • Riashat Islam, Samarth Sinha, Homanga Bharadhwaj, Samin Yeasar Arnob, Zhuoran Yang, Zhaoran Wang, Animesh Garg, Lihong Li, Doina Precup

Learning policies from fixed offline datasets is a key challenge to scale up reinforcement learning (RL) algorithms towards practical applications.

Conditional Networks

no code implementations • 1 Jan 2021 • Anthony Ortiz, Kris Sankaran, Olac Fuentes, Christopher Kiekintveld, Pascal Vincent, Yoshua Bengio, Doina Precup

In this work we tackle the problem of out-of-distribution generalization through conditional computation.

Practical Marginalized Importance Sampling with the Successor Representation

no code implementations • 1 Jan 2021 • Scott Fujimoto, David Meger, Doina Precup

We bridge the gap between MIS and deep reinforcement learning by observing that the density ratio can be computed from the successor representation of the target policy.

Locally Persistent Exploration in Continuous Control Tasks with Sparse Rewards

1 code implementation • 26 Dec 2020 • Susan Amin, Maziar Gomrokchi, Hossein Aboutalebi, Harsh Satija, Doina Precup

A major challenge in reinforcement learning is the design of exploration strategies, especially for environments with sparse reward structures and continuous state and action spaces.

Towards Continual Reinforcement Learning: A Review and Perspectives

no code implementations • 25 Dec 2020 • Khimya Khetarpal, Matthew Riemer, Irina Rish, Doina Precup

In this article, we aim to provide a literature review of different formulations and approaches to continual reinforcement learning (RL), also known as lifelong or non-stationary RL.

On Efficiency in Hierarchical Reinforcement Learning

no code implementations • NeurIPS 2020 • Zheng Wen, Doina Precup, Morteza Ibrahimi, Andre Barreto, Benjamin Van Roy, Satinder Singh

Hierarchical Reinforcement Learning (HRL) approaches promise to provide more efficient solutions to sequential decision making problems, both in terms of statistical as well as computational efficiency.

Gradient Starvation: A Learning Proclivity in Neural Networks

2 code implementations • NeurIPS 2021 • Mohammad Pezeshki, Sékou-Oumar Kaba, Yoshua Bengio, Aaron Courville, Doina Precup, Guillaume Lajoie

We identify and formalize a fundamental gradient descent phenomenon resulting in a learning proclivity in over-parameterized neural networks.

Ranked #1 on Out-of-Distribution Generalization on ImageNet-W

Diversity-Enriched Option-Critic

1 code implementation • 4 Nov 2020 • Anand Kamat, Doina Precup

We show empirically that our proposed method is capable of learning options end-to-end on several discrete and continuous control tasks, and outperforms option-critic by a wide margin.

A Study of Policy Gradient on a Class of Exactly Solvable Models

no code implementations • 3 Nov 2020 • Gavin McCracken, Colin Daniels, Rosie Zhao, Anna Brandenberger, Prakash Panangaden, Doina Precup

Policy gradient methods are extensively used in reinforcement learning as a way to optimize expected return.

Forethought and Hindsight in Credit Assignment

no code implementations • NeurIPS 2020 • Veronica Chelu, Doina Precup, Hado van Hasselt

We address the problem of credit assignment in reinforcement learning and explore fundamental questions regarding the way in which an agent can best use additional computation to propagate new information, by planning with internal models of the world to improve its predictions.

Connecting Weighted Automata, Tensor Networks and Recurrent Neural Networks through Spectral Learning

no code implementations • 19 Oct 2020 • Tianyu Li, Doina Precup, Guillaume Rabusseau

In this paper, we present connections between three models used in different research fields: weighted finite automata (WFA) from formal languages and linguistics, recurrent neural networks used in machine learning, and tensor networks, which encompass a set of optimization techniques for high-order tensors used in quantum physics and numerical analysis.

A Fully Tensorized Recurrent Neural Network

1 code implementation • 8 Oct 2020 • Charles C. Onu, Jacob E. Miller, Doina Precup

Recurrent neural networks (RNNs) are powerful tools for sequential modeling, but typically require significant overparameterization and regularization to achieve optimal performance.

Reward Propagation Using Graph Convolutional Networks

1 code implementation • NeurIPS 2020 • Martin Klissarov, Doina Precup

Potential-based reward shaping provides an approach for designing good reward functions, with the purpose of speeding up learning.

Training Matters: Unlocking Potentials of Deeper Graph Convolutional Neural Networks

no code implementations • 20 Aug 2020 • Sitao Luan, Mingde Zhao, Xiao-Wen Chang, Doina Precup

The performance limit of Graph Convolutional Networks (GCNs) and the fact that we cannot stack more of them to increase the performance, which we usually do for other deep learning paradigms, are pervasively thought to be caused by the limitations of the GCN layers, including insufficient expressive power, etc.

Complete the Missing Half: Augmenting Aggregation Filtering with Diversification for Graph Convolutional Networks

no code implementations • 20 Aug 2020 • Sitao Luan, Mingde Zhao, Chenqing Hua, Xiao-Wen Chang, Doina Precup

The core operation of current Graph Neural Networks (GNNs) is the aggregation enabled by the graph Laplacian or message passing, which filters the neighborhood node information.

An Equivalence between Loss Functions and Non-Uniform Sampling in Experience Replay

1 code implementation • NeurIPS 2020 • Scott Fujimoto, David Meger, Doina Precup

Prioritized Experience Replay (PER) is a deep reinforcement learning technique in which agents learn from transitions sampled with non-uniform probability proportionate to their temporal-difference error.

What can I do here? A Theory of Affordances in Reinforcement Learning

1 code implementation • ICML 2020 • Khimya Khetarpal, Zafarali Ahmed, Gheorghe Comanici, David Abel, Doina Precup

Gibson (1977) coined the term "affordances" to describe the fact that certain states enable an agent to do certain actions, in the context of embodied agents.

Learning to Prove from Synthetic Theorems

no code implementations • 19 Jun 2020 • Eser Aygün, Zafarali Ahmed, Ankit Anand, Vlad Firoiu, Xavier Glorot, Laurent Orseau, Doina Precup, Shibl Mourad

A major challenge in applying machine learning to automated theorem proving is the scarcity of training data, which is a key ingredient in training successful deep learning models.

A Brief Look at Generalization in Visual Meta-Reinforcement Learning

no code implementations • ICML Workshop LifelongML 2020 • Safa Alver, Doina Precup

Due to the realization that deep reinforcement learning algorithms trained on high-dimensional tasks can strongly overfit to their training environments, there have been several studies that investigated the generalization performance of these algorithms.

Gifting in multi-agent reinforcement learning

1 code implementation • AAMAS '20: Proceedings of the 19th International Conference on Autonomous Agents and MultiAgent Systems 2020 • Andrei Lupu, Doina Precup

Multi-agent reinforcement learning has generally been studied under an assumption inherited from classical reinforcement learning: that the reward function is the exclusive property of the environment, and is only altered by external factors.

Multi-agent Reinforcement Learning

reinforcement-learning

Learning to cooperate: Emergent communication in multi-agent navigation

no code implementations • 2 Apr 2020 • Ivana Kajić, Eser Aygün, Doina Precup

Emergent communication in artificial agents has been studied to understand language evolution, as well as to develop artificial systems that learn to communicate with humans.

A Distributional Analysis of Sampling-Based Reinforcement Learning Algorithms

no code implementations • 27 Mar 2020 • Philip Amortila, Doina Precup, Prakash Panangaden, Marc G. Bellemare

We present a distributional approach to theoretical analyses of reinforcement learning algorithms for constant step-sizes.

Interference and Generalization in Temporal Difference Learning

no code implementations • ICML 2020 • Emmanuel Bengio, Joelle Pineau, Doina Precup

We study the link between generalization and interference in temporal-difference (TD) learning.

Invariant Causal Prediction for Block MDPs

1 code implementation • ICML 2020 • Amy Zhang, Clare Lyle, Shagun Sodhani, Angelos Filos, Marta Kwiatkowska, Joelle Pineau, Yarin Gal, Doina Precup

Generalization across environments is critical to the successful application of reinforcement learning algorithms to real-world challenges.

Policy Evaluation Networks

no code implementations • 26 Feb 2020 • Jean Harb, Tom Schaul, Doina Precup, Pierre-Luc Bacon

The core idea of this paper is to flip this convention and estimate the value of many policies, for a single set of states.

oIRL: Robust Adversarial Inverse Reinforcement Learning with Temporally Extended Actions

no code implementations • 20 Feb 2020 • David Venuto, Jhelum Chakravorty, Leonard Boussioux, Junhao Wang, Gavin McCracken, Doina Precup

Explicit engineering of reward functions for given environments has been a major hindrance to reinforcement learning methods.

Value-driven Hindsight Modelling

no code implementations • NeurIPS 2020 • Arthur Guez, Fabio Viola, Théophane Weber, Lars Buesing, Steven Kapturowski, Doina Precup, David Silver, Nicolas Heess

Value estimation is a critical component of the reinforcement learning (RL) paradigm.

Representation of Reinforcement Learning Policies in Reproducing Kernel Hilbert Spaces

no code implementations • 7 Feb 2020 • Bogdan Mazoure, Thang Doan, Tianyu Li, Vladimir Makarenkov, Joelle Pineau, Doina Precup, Guillaume Rabusseau

We propose a general framework for policy representation for reinforcement learning tasks.

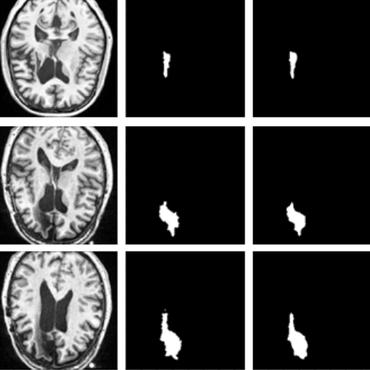

Exploring Bayesian Deep Learning Uncertainty Measures for Segmentation of New Lesions in Longitudinal MRIs

no code implementations • MIDL 2019 • Nazanin Mohammadi Sepahvand, Raghav Mehta, Douglas Lorne Arnold, Doina Precup, Tal Arbel

In this paper, we develop a modified U-Net architecture to accurately segment new and enlarging lesions in longitudinal MRI, based on multi-modal MRI inputs, as well as subtraction images between timepoints, in the context of large-scale clinical trial data for patients with Multiple Sclerosis (MS).

Options of Interest: Temporal Abstraction with Interest Functions

3 code implementations • 1 Jan 2020 • Khimya Khetarpal, Martin Klissarov, Maxime Chevalier-Boisvert, Pierre-Luc Bacon, Doina Precup

Temporal abstraction refers to the ability of an agent to use behaviours of controllers which act for a limited, variable amount of time.

Shaping representations through communication: community size effect in artificial learning systems

no code implementations • 12 Dec 2019 • Olivier Tieleman, Angeliki Lazaridou, Shibl Mourad, Charles Blundell, Doina Precup

Motivated by theories of language and communication that explain why communities with large numbers of speakers have, on average, simpler languages with more regularity, we cast the representation learning problem in terms of learning to communicate.

Doubly Robust Off-Policy Actor-Critic Algorithms for Reinforcement Learning

no code implementations • 11 Dec 2019 • Riashat Islam, Raihan Seraj, Samin Yeasar Arnob, Doina Precup

Furthermore, in cases where the reward function is stochastic, which can lead to high variance, doubly robust critic estimation can improve performance under corrupted, stochastic reward signals, indicating its usefulness for robust and safe reinforcement learning.

Marginalized State Distribution Entropy Regularization in Policy Optimization

no code implementations • 11 Dec 2019 • Riashat Islam, Zafarali Ahmed, Doina Precup

Entropy regularization is used to get improved optimization performance in reinforcement learning tasks.

Entropy Regularization with Discounted Future State Distribution in Policy Gradient Methods

no code implementations • 11 Dec 2019 • Riashat Islam, Raihan Seraj, Pierre-Luc Bacon, Doina Precup

In this work, we propose exploration in policy gradient methods based on maximizing entropy of the discounted future state distribution.

Hindsight Credit Assignment

1 code implementation • NeurIPS 2019 • Anna Harutyunyan, Will Dabney, Thomas Mesnard, Mohammad Azar, Bilal Piot, Nicolas Heess, Hado van Hasselt, Greg Wayne, Satinder Singh, Doina Precup, Remi Munos

We consider the problem of efficient credit assignment in reinforcement learning.

Algorithmic Improvements for Deep Reinforcement Learning applied to Interactive Fiction

no code implementations • 28 Nov 2019 • Vishal Jain, William Fedus, Hugo Larochelle, Doina Precup, Marc G. Bellemare

Empirically, we find that these techniques improve the performance of a baseline deep reinforcement learning agent applied to text-based games.

Option-Critic in Cooperative Multi-agent Systems

1 code implementation • 28 Nov 2019 • Jhelum Chakravorty, Nadeem Ward, Julien Roy, Maxime Chevalier-Boisvert, Sumana Basu, Andrei Lupu, Doina Precup

In this paper, we investigate learning temporal abstractions in cooperative multi-agent systems, using the options framework (Sutton et al., 1999).

Efficient Planning under Partial Observability with Unnormalized Q Functions and Spectral Learning

no code implementations • 12 Nov 2019 • Tianyu Li, Bogdan Mazoure, Doina Precup, Guillaume Rabusseau

Learning and planning in partially-observable domains is one of the most difficult problems in reinforcement learning.

Navigation Agents for the Visually Impaired: A Sidewalk Simulator and Experiments

1 code implementation • 29 Oct 2019 • Martin Weiss, Simon Chamorro, Roger Girgis, Margaux Luck, Samira E. Kahou, Joseph P. Cohen, Derek Nowrouzezahrai, Doina Precup, Florian Golemo, Chris Pal

In our endeavor to create a navigation assistant for the BVI, we found that existing Reinforcement Learning (RL) environments were unsuitable for the task.

Actor Critic with Differentially Private Critic

no code implementations • 14 Oct 2019 • Jonathan Lebensold, William Hamilton, Borja Balle, Doina Precup

Reinforcement learning algorithms are known to be sample inefficient, and often performance on one task can be substantially improved by leveraging information (e.g., via pre-training) on other related tasks.

Augmenting learning using symmetry in a biologically-inspired domain

no code implementations • 1 Oct 2019 • Shruti Mishra, Abbas Abdolmaleki, Arthur Guez, Piotr Trochim, Doina Precup

Invariances to translation, rotation and other spatial transformations are a hallmark of the laws of motion, and have widespread use in the natural sciences to reduce the dimensionality of systems of equations.

Assessing Generalization in TD methods for Deep Reinforcement Learning

no code implementations • 25 Sep 2019 • Emmanuel Bengio, Doina Precup, Joelle Pineau

Current Deep Reinforcement Learning (DRL) methods can exhibit both data inefficiency and brittleness, which seem to indicate that they generalize poorly.

Avoidance Learning Using Observational Reinforcement Learning

1 code implementation • 24 Sep 2019 • David Venuto, Leonard Boussioux, Junhao Wang, Rola Dali, Jhelum Chakravorty, Yoshua Bengio, Doina Precup

We define avoidance learning as the process of optimizing the agent's reward while avoiding dangerous behaviors given by a demonstrator.

Revisit Policy Optimization in Matrix Form

no code implementations • 19 Sep 2019 • Sitao Luan, Xiao-Wen Chang, Doina Precup

In the tabular case, when the reward and environment dynamics are known, policy evaluation can be written as $\bm{V}_{\bm{\pi}} = (I - \gamma P_{\bm{\pi}})^{-1} \bm{r}_{\bm{\pi}}$, where $P_{\bm{\pi}}$ is the state transition matrix given policy ${\bm{\pi}}$ and $\bm{r}_{\bm{\pi}}$ is the reward signal given ${\bm{\pi}}$.
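
As a rough illustration of the closed-form expression above, the sketch below solves $(I - \gamma P_{\bm{\pi}}) \bm{V}_{\bm{\pi}} = \bm{r}_{\bm{\pi}}$ for a tiny tabular problem. The two-state transition matrix, the rewards, the discount factor, and the helper names `solve_linear_system` and `evaluate_policy` are all assumptions made for this example; none of them come from the paper. It solves the linear system rather than forming the inverse explicitly, which is the usual choice for numerical stability.

```python
def solve_linear_system(a, b):
    """Solve a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    # Build the augmented matrix [a | b]; copying keeps the inputs unchanged.
    m = [list(map(float, row)) + [float(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        # Pick the row with the largest entry in this column as the pivot.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        pivot_value = m[col][col]
        m[col] = [v / pivot_value for v in m[col]]
        # Eliminate this column from every other row.
        for row in range(n):
            if row != col:
                factor = m[row][col]
                m[row] = [v - factor * p for v, p in zip(m[row], m[col])]
    return [m[i][n] for i in range(n)]


def evaluate_policy(p_pi, r_pi, gamma):
    """Return V_pi satisfying (I - gamma * P_pi) V_pi = r_pi."""
    n = len(r_pi)
    a = [[(1.0 if i == j else 0.0) - gamma * p_pi[i][j] for j in range(n)]
         for i in range(n)]
    return solve_linear_system(a, r_pi)


# Hypothetical two-state example; all numbers are illustrative only.
P_PI = [[0.9, 0.1],
        [0.2, 0.8]]   # row i holds the next-state distribution from state i
R_PI = [1.0, 0.0]     # expected one-step reward in each state
GAMMA = 0.9
V_PI = evaluate_policy(P_PI, R_PI, GAMMA)
```

A quick sanity check on any such solution is that it satisfies the Bellman equation, that is, each `V_PI[i]` equals `R_PI[i]` plus `GAMMA` times the expected next-state value under `P_PI`.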

An Empirical Study of Batch Normalization and Group Normalization in Conditional Computation

no code implementations • 31 Jul 2019 • Vincent Michalski, Vikram Voleti, Samira Ebrahimi Kahou, Anthony Ortiz, Pascal Vincent, Chris Pal, Doina Precup

Batch normalization has been widely used to improve optimization in deep neural networks.

Self-supervised Learning of Distance Functions for Goal-Conditioned Reinforcement Learning

no code implementations • 5 Jul 2019 • Srinivas Venkattaramanujam, Eric Crawford, Thang Doan, Doina Precup

Goal-conditioned policies are used in order to break down complex reinforcement learning (RL) problems by using subgoals, which can be defined either in state space or in a latent feature space.

Neural Transfer Learning for Cry-based Diagnosis of Perinatal Asphyxia

no code implementations • 24 Jun 2019 • Charles C. Onu, Jonathan Lebensold, William L. Hamilton, Doina Precup

Despite continuing medical advances, the rate of newborn morbidity and mortality globally remains high, with over 6 million casualties every year.

SVRG for Policy Evaluation with Fewer Gradient Evaluations

1 code implementation • 9 Jun 2019 • Zilun Peng, Ahmed Touati, Pascal Vincent, Doina Precup

SVRG was later shown to work for policy evaluation, a problem in reinforcement learning in which one aims to estimate the value function of a given policy.

Break the Ceiling: Stronger Multi-scale Deep Graph Convolutional Networks

1 code implementation • NeurIPS 2019 • Sitao Luan, Mingde Zhao, Xiao-Wen Chang, Doina Precup

Recently, neural network based approaches have achieved significant improvement for solving large, complex, graph-structured problems.

Ranked #1 on Node Classification on PubMed (0.1%)

Recurrent Value Functions

no code implementations • 23 May 2019 • Pierre Thodoroff, Nishanth Anand, Lucas Caccia, Doina Precup, Joelle Pineau

Despite recent successes in Reinforcement Learning, value-based methods often suffer from high variance hindering performance.

META-Learning State-based Eligibility Traces for More Sample-Efficient Policy Evaluation

2 code implementations • 25 Apr 2019 • Mingde Zhao, Sitao Luan, Ian Porada, Xiao-Wen Chang, Doina Precup

Temporal-Difference (TD) learning is a standard and very successful reinforcement learning approach, at the core of both algorithms that learn the value of a given policy, as well as algorithms which learn how to improve policies.

Learning proposals for sequential importance samplers using reinforced variational inference

no code implementations • ICLR Workshop drlStructPred 2019 • Zafarali Ahmed, Arjun Karuvally, Doina Precup, Simon Gravel

The problem of inferring unobserved values in a partially observed trajectory from a stochastic process can be considered as a structured prediction problem.

Uncertainty Aware Learning from Demonstrations in Multiple Contexts using Bayesian Neural Networks

1 code implementation • 13 Mar 2019 • Sanjay Thakur, Herke van Hoof, Juan Camilo Gamboa Higuera, Doina Precup, David Meger

Learned controllers such as neural networks typically do not have a notion of uncertainty that allows diagnosing an offset between training and testing conditions and potentially intervening.

Learning Modular Safe Policies in the Bandit Setting with Application to Adaptive Clinical Trials

no code implementations • 4 Mar 2019 • Hossein Aboutalebi, Doina Precup, Tibor Schuster

We present a regret bound for our approach and evaluate it empirically both on synthetic problems as well as on a dataset from the clinical trial literature.

The Termination Critic

no code implementations • 26 Feb 2019 • Anna Harutyunyan, Will Dabney, Diana Borsa, Nicolas Heess, Remi Munos, Doina Precup

In this work, we consider the problem of autonomously discovering behavioral abstractions, or options, for reinforcement learning agents.

Clustering-Oriented Representation Learning with Attractive-Repulsive Loss

1 code implementation • 18 Dec 2018 • Kian Kenyon-Dean, Andre Cianflone, Lucas Page-Caccia, Guillaume Rabusseau, Jackie Chi Kit Cheung, Doina Precup

The standard loss function used to train neural network classifiers, categorical cross-entropy (CCE), seeks to maximize accuracy on the training data; building useful representations is not a necessary byproduct of this objective.

Off-Policy Deep Reinforcement Learning without Exploration

10 code implementations • 7 Dec 2018 • Scott Fujimoto, David Meger, Doina Precup

Many practical applications of reinforcement learning constrain agents to learn from a fixed batch of data which has already been gathered, without offering further possibility for data collection.

Temporal Regularization for Markov Decision Process

1 code implementation • NeurIPS 2018 • Pierre Thodoroff, Audrey Durand, Joelle Pineau, Doina Precup

Several applications of Reinforcement Learning suffer from instability due to high variance.

Environments for Lifelong Reinforcement Learning

2 code implementations • 26 Nov 2018 • Khimya Khetarpal, Shagun Sodhani, Sarath Chandar, Doina Precup

To achieve general artificial intelligence, reinforcement learning (RL) agents should learn not only to optimize returns for one specific task but also to constantly build more complex skills and scaffold their knowledge about the world, without forgetting what has already been learned.

The Barbados 2018 List of Open Issues in Continual Learning

no code implementations • 16 Nov 2018 • Tom Schaul, Hado van Hasselt, Joseph Modayil, Martha White, Adam White, Pierre-Luc Bacon, Jean Harb, Shibl Mourad, Marc Bellemare, Doina Precup

We want to make progress toward artificial general intelligence, namely general-purpose agents that autonomously learn how to competently act in complex environments.

Temporal Regularization in Markov Decision Process

2 code implementations • 1 Nov 2018 • Pierre Thodoroff, Audrey Durand, Joelle Pineau, Doina Precup

Several applications of Reinforcement Learning suffer from instability due to high variance.

Where Off-Policy Deep Reinforcement Learning Fails

no code implementations • 27 Sep 2018 • Scott Fujimoto, David Meger, Doina Precup

This work examines batch reinforcement learning--the task of maximally exploiting a given batch of off-policy data, without further data collection.

Combined Reinforcement Learning via Abstract Representations

1 code implementation • 12 Sep 2018 • Vincent François-Lavet, Yoshua Bengio, Doina Precup, Joelle Pineau

In the quest for efficient and robust reinforcement learning methods, both model-free and model-based approaches offer advantages.

Predicting Extubation Readiness in Extreme Preterm Infants based on Patterns of Breathing

no code implementations • 24 Aug 2018 • Charles C. Onu, Lara J. Kanbar, Wissam Shalish, Karen A. Brown, Guilherme M. Sant'Anna, Robert E. Kearney, Doina Precup

Extremely preterm infants commonly require intubation and invasive mechanical ventilation after birth.

Undersampling and Bagging of Decision Trees in the Analysis of Cardiorespiratory Behavior for the Prediction of Extubation Readiness in Extremely Preterm Infants

no code implementations • 24 Aug 2018 • Lara J. Kanbar, Charles C. Onu, Wissam Shalish, Karen A. Brown, Guilherme M. Sant'Anna, Robert E. Kearney, Doina Precup

Extremely preterm infants often require endotracheal intubation and mechanical ventilation during the first days of life.

A Semi-Markov Chain Approach to Modeling Respiratory Patterns Prior to Extubation in Preterm Infants

no code implementations • 24 Aug 2018 • Charles C. Onu, Lara J. Kanbar, Wissam Shalish, Karen A. Brown, Guilherme M. Sant'Anna, Robert E. Kearney, Doina Precup

After birth, extremely preterm infants often require specialized respiratory management in the form of invasive mechanical ventilation (IMV).

Exploring Uncertainty Measures in Deep Networks for Multiple Sclerosis Lesion Detection and Segmentation

1 code implementation • 3 Aug 2018 • Tanya Nair, Doina Precup, Douglas L. Arnold, Tal Arbel

We present the first exploration of multiple uncertainty estimates based on Monte Carlo (MC) dropout [4] in the context of deep networks for lesion detection and segmentation in medical images.

Attend Before you Act: Leveraging human visual attention for continual learning

1 code implementation • 25 Jul 2018 • Khimya Khetarpal, Doina Precup

When humans perform a task, such as playing a game, they selectively pay attention to certain parts of the visual input, gathering relevant information and sequentially combining it to build a representation from the sensory data.

Safe Option-Critic: Learning Safety in the Option-Critic Architecture

1 code implementation • 21 Jul 2018 • Arushi Jain, Khimya Khetarpal, Doina Precup

We propose an optimization objective that learns safe options by encouraging the agent to visit states with higher behavioural consistency.

Connecting Weighted Automata and Recurrent Neural Networks through Spectral Learning

no code implementations • 4 Jul 2018 • Guillaume Rabusseau, Tianyu Li, Doina Precup

In this paper, we unravel a fundamental connection between weighted finite automata (WFAs) and second-order recurrent neural networks (2-RNNs): in the case of sequences of discrete symbols, WFAs and 2-RNNs with linear activation functions are expressively equivalent.

Resolving Event Coreference with Supervised Representation Learning and Clustering-Oriented Regularization

1 code implementation • SEMEVAL 2018 • Kian Kenyon-Dean, Jackie Chi Kit Cheung, Doina Precup

This work provides insight and motivating results for a new general approach to solving coreference and clustering problems with representation learning.

Dyna Planning using a Feature Based Generative Model

no code implementations • 23 May 2018 • Ryan Faulkner, Doina Precup

Dyna-style reinforcement learning is a powerful approach for problems where not much real data is available.

Learning Safe Policies with Expert Guidance

no code implementations • NeurIPS 2018 • Jessie Huang, Fa Wu, Doina Precup, Yang Cai

We propose a framework for ensuring safe behavior of a reinforcement learning agent when the reward function may be difficult to specify.

Disentangling the independently controllable factors of variation by interacting with the world

no code implementations • 26 Feb 2018 • Valentin Thomas, Emmanuel Bengio, William Fedus, Jules Pondard, Philippe Beaudoin, Hugo Larochelle, Joelle Pineau, Doina Precup, Yoshua Bengio

It has been postulated that a good representation is one that disentangles the underlying explanatory factors of variation.

Learning Robust Options

no code implementations • 9 Feb 2018 • Daniel J. Mankowitz, Timothy A. Mann, Pierre-Luc Bacon, Doina Precup, Shie Mannor

We present a Robust Options Policy Iteration (ROPI) algorithm with convergence guarantees, which learns options that are robust to model uncertainty.

Learnings Options End-to-End for Continuous Action Tasks

3 code implementations • 30 Nov 2017 • Martin Klissarov, Pierre-Luc Bacon, Jean Harb, Doina Precup

We present new results on learning temporally extended actions for continuous tasks, using the options framework (Sutton et al. [1999b], Precup [2000]).

Ubenwa: Cry-based Diagnosis of Birth Asphyxia

no code implementations • 17 Nov 2017 • Charles C. Onu, Innocent Udeogu, Eyenimi Ndiomu, Urbain Kengni, Doina Precup, Guilherme M. Sant'Anna, Edward Alikor, Peace Opara

Every year, 3 million newborns die within the first month of life.

Learning with Options that Terminate Off-Policy

no code implementations • 10 Nov 2017 • Anna Harutyunyan, Peter Vrancx, Pierre-Luc Bacon, Doina Precup, Ann Nowe

Generally, learning with longer options (like learning with multi-step returns) is known to be more efficient.

OptionGAN: Learning Joint Reward-Policy Options using Generative Adversarial Inverse Reinforcement Learning

1 code implementation • 20 Sep 2017 • Peter Henderson, Wei-Di Chang, Pierre-Luc Bacon, David Meger, Joelle Pineau, Doina Precup

Inverse reinforcement learning offers a useful paradigm to learn the underlying reward function directly from expert demonstrations.

Deep Reinforcement Learning that Matters

4 code implementations • 19 Sep 2017 • Peter Henderson, Riashat Islam, Philip Bachman, Joelle Pineau, Doina Precup, David Meger

In recent years, significant progress has been made in solving challenging problems across various domains using deep reinforcement learning (RL).

When Waiting is not an Option : Learning Options with a Deliberation Cost

1 code implementation • 14 Sep 2017 • Jean Harb, Pierre-Luc Bacon, Martin Klissarov, Doina Precup

Recent work has shown that temporally extended actions (options) can be learned fully end-to-end as opposed to being specified in advance.

Neural Network Based Nonlinear Weighted Finite Automata

no code implementations • 13 Sep 2017 • Tianyu Li, Guillaume Rabusseau, Doina Precup

Weighted finite automata (WFA) can expressively model functions defined over strings but are inherently linear models.

World Knowledge for Reading Comprehension: Rare Entity Prediction with Hierarchical LSTMs Using External Descriptions

no code implementations • EMNLP 2017 • Teng Long, Emmanuel Bengio, Ryan Lowe, Jackie Chi Kit Cheung, Doina Precup

Humans interpret texts with respect to some background information, or world knowledge, and we would like to develop automatic reading comprehension systems that can do the same.

Reproducibility of Benchmarked Deep Reinforcement Learning Tasks for Continuous Control

1 code implementation • 10 Aug 2017 • Riashat Islam, Peter Henderson, Maziar Gomrokchi, Doina Precup

We investigate and discuss: the significance of hyper-parameters in policy gradients for continuous control, general variance in the algorithms, and reproducibility of reported results.

Independently Controllable Factors

no code implementations • 3 Aug 2017 • Valentin Thomas, Jules Pondard, Emmanuel Bengio, Marc Sarfati, Philippe Beaudoin, Marie-Jean Meurs, Joelle Pineau, Doina Precup, Yoshua Bengio

It has been postulated that a good representation is one that disentangles the underlying explanatory factors of variation.

Variational Generative Stochastic Networks with Collaborative Shaping

1 code implementation • 2 Aug 2017 • Philip Bachman, Doina Precup

We develop an approach to training generative models based on unrolling a variational auto-encoder into a Markov chain, and shaping the chain's trajectories using a technique inspired by recent work in Approximate Bayesian computation.

Convergent Tree Backup and Retrace with Function Approximation

no code implementations • ICML 2018 • Ahmed Touati, Pierre-Luc Bacon, Doina Precup, Pascal Vincent

Off-policy learning is key to scaling up reinforcement learning, as it allows learning about a target policy from the experience generated by a different behavior policy.

Investigating Recurrence and Eligibility Traces in Deep Q-Networks

no code implementations • 18 Apr 2017 • Jean Harb, Doina Precup

Eligibility traces in reinforcement learning are used as a bias-variance trade-off and can often speed up training time by propagating knowledge back over time-steps in a single update.

Independently Controllable Features

no code implementations • 22 Mar 2017 • Emmanuel Bengio, Valentin Thomas, Joelle Pineau, Doina Precup, Yoshua Bengio

Finding features that disentangle the different causes of variation in real data is a difficult task, that has nonetheless received considerable attention in static domains like natural images.

Multi-Timescale, Gradient Descent, Temporal Difference Learning with Linear Options

no code implementations • 19 Mar 2017 • Peeyush Kumar, Doina Precup

Deliberating on large or continuous state spaces has been a long-standing challenge in reinforcement learning.

A Matrix Splitting Perspective on Planning with Options

no code implementations • 3 Dec 2016 • Pierre-Luc Bacon, Doina Precup

We show that the Bellman operator underlying the options framework leads to a matrix splitting, an approach traditionally used to speed up convergence of iterative solvers for large linear systems of equations.

The Option-Critic Architecture

9 code implementations • 16 Sep 2016 • Pierre-Luc Bacon, Jean Harb, Doina Precup

Temporal abstraction is key to scaling up learning and planning in reinforcement learning.

Leveraging Lexical Resources for Learning Entity Embeddings in Multi-Relational Data

no code implementations • ACL 2016 • Teng Long, Ryan Lowe, Jackie Chi Kit Cheung, Doina Precup

Recent work in learning vector-space embeddings for multi-relational data has focused on combining relational information derived from knowledge bases with distributional information derived from large text corpora.

Differentially Private Policy Evaluation

no code implementations • 7 Mar 2016 • Borja Balle, Maziar Gomrokchi, Doina Precup

We present the first differentially private algorithms for reinforcement learning, which apply to the task of evaluating a fixed policy.

Policy Gradient Methods for Off-policy Control

no code implementations • 13 Dec 2015 • Lucas Lehnert, Doina Precup

Off-policy learning refers to the problem of learning the value function of a way of behaving, or policy, while following a different policy.

Basis refinement strategies for linear value function approximation in MDPs

no code implementations • NeurIPS 2015 • Gheorghe Comanici, Doina Precup, Prakash Panangaden

We provide a theoretical framework for analyzing basis function construction for linear value function approximation in Markov Decision Processes (MDPs).

Conditional Computation in Neural Networks for faster models

1 code implementation • 19 Nov 2015 • Emmanuel Bengio, Pierre-Luc Bacon, Joelle Pineau, Doina Precup

In this paper, we use reinforcement learning as a tool to optimize conditional computation policies.

Testing Visual Attention in Dynamic Environments

no code implementations • 30 Oct 2015 • Philip Bachman, David Krueger, Doina Precup

We investigate attention as the active pursuit of useful information.

Data Generation as Sequential Decision Making

1 code implementation • NeurIPS 2015 • Philip Bachman, Doina Precup

We connect a broad class of generative models through their shared reliance on sequential decision making.

Learning with Pseudo-Ensembles

no code implementations • NeurIPS 2014 • Philip Bachman, Ouais Alsharif, Doina Precup

We formalize the notion of a pseudo-ensemble, a (possibly infinite) collection of child models spawned from a parent model by perturbing it according to some noise process.

Optimizing Energy Production Using Policy Search and Predictive State Representations

no code implementations • NeurIPS 2014 • Yuri Grinberg, Doina Precup, Michel Gendreau

We consider the challenging practical problem of optimizing the power production of a complex of hydroelectric power plants, which involves control over three continuous action variables, uncertainty in the amount of water inflows and a variety of constraints that need to be satisfied.

Practical Kernel-Based Reinforcement Learning

no code implementations • 21 Jul 2014 • André M. S. Barreto, Doina Precup, Joelle Pineau

In this paper we introduce an algorithm that turns kernel-based reinforcement learning (KBRL) into a practical reinforcement learning tool.

Classification-based Approximate Policy Iteration: Experiments and Extended Discussions

no code implementations • 2 Jul 2014 • Amir-Massoud Farahmand, Doina Precup, André M. S. Barreto, Mohammad Ghavamzadeh

We introduce a general classification-based approximate policy iteration (CAPI) framework, which encompasses a large class of algorithms that can exploit regularities of both the value function and the policy space, depending on what is advantageous.

Iterative Multilevel MRF Leveraging Context and Voxel Information for Brain Tumour Segmentation in MRI

no code implementations • CVPR 2014 • Nagesh Subbanna, Doina Precup, Tal Arbel

In this paper, we introduce a fully automated multistage graphical probabilistic framework to segment brain tumours from multimodal Magnetic Resonance Images (MRIs) acquired from real patients.

Algorithms for multi-armed bandit problems

no code implementations • 25 Feb 2014 • Volodymyr Kuleshov, Doina Precup

Although the design of clinical trials has been one of the principal practical problems motivating research on multi-armed bandits, bandit algorithms have never been evaluated as potential treatment allocation strategies.

Learning from Limited Demonstrations

no code implementations • NeurIPS 2013 • Beomjoon Kim, Amir-Massoud Farahmand, Joelle Pineau, Doina Precup

We achieve this by integrating learning from demonstrations (LfD) into an approximate policy iteration algorithm.

Bellman Error Based Feature Generation using Random Projections on Sparse Spaces

no code implementations • NeurIPS 2013 • Mahdi Milani Fard, Yuri Grinberg, Amir-Massoud Farahmand, Joelle Pineau, Doina Precup

This paper addresses the problem of automatic generation of features for value function approximation in reinforcement learning.

On-line Reinforcement Learning Using Incremental Kernel-Based Stochastic Factorization

no code implementations • NeurIPS 2012 • Doina Precup, Joelle Pineau, Andre S. Barreto

The ability to learn a policy for a sequential decision problem with continuous state space using on-line data is a long-standing challenge.

Value Pursuit Iteration

no code implementations • NeurIPS 2012 • Amir M. Farahmand, Doina Precup

Value Pursuit Iteration (VPI) has two main features: first, it is a nonparametric algorithm that finds a good sparse approximation of the optimal value function given a dictionary of features.

Reinforcement Learning using Kernel-Based Stochastic Factorization

no code implementations • NeurIPS 2011 • Andre S. Barreto, Doina Precup, Joelle Pineau

Kernel-based reinforcement-learning (KBRL) is a method for learning a decision policy from a set of sample transitions which stands out for its strong theoretical guarantees.

Convergent Temporal-Difference Learning with Arbitrary Smooth Function Approximation

no code implementations • NeurIPS 2009 • Shalabh Bhatnagar, Doina Precup, David Silver, Richard S. Sutton, Hamid R. Maei, Csaba Szepesvári

We introduce the first temporal-difference learning algorithms that converge with smooth value function approximators, such as neural networks.

Bounding Performance Loss in Approximate MDP Homomorphisms

no code implementations • NeurIPS 2008 • Jonathan Taylor, Doina Precup, Prakash Panangaden

We prove that the difference in the optimal value function of different states can be upper-bounded by the value of this metric, and that the bound is tighter than that provided by bisimulation metrics (Ferns et al. 2004, 2005).

Between MDPs and semi-MDPs: A framework for temporal abstraction in reinforcement learning

1 code implementation • Artificial Intelligence 1999 • Richard S. Sutton, Doina Precup, Satinder Singh

In particular, we show that options may be used interchangeably with primitive actions in planning methods such as dynamic programming and in learning methods such as Q-learning.