Search Results for author: Gaolei Li

Found 13 papers, 3 papers with code

Spikewhisper: Temporal Spike Backdoor Attacks on Federated Neuromorphic Learning over Low-power Devices

no code implementations • 27 Mar 2024 • Hanqing Fu, Gaolei Li, Jun Wu, Jianhua Li, Xi Lin, Kai Zhou, Yuchen Liu

Federated neuromorphic learning (FedNL) leverages event-driven spiking neural networks and federated learning frameworks to efficiently execute intelligent analysis tasks across large numbers of distributed low-power devices, but it is also vulnerable to poisoning attacks.

What Makes Good Collaborative Views? Contrastive Mutual Information Maximization for Multi-Agent Perception

1 code implementation • 15 Mar 2024 • Wanfang Su, Lixing Chen, Yang Bai, Xi Lin, Gaolei Li, Zhe Qu, Pan Zhou

The core philosophy of CMiMC is to preserve discriminative information of individual views in the collaborative view by maximizing mutual information between pre- and post-collaboration features while enhancing the efficacy of collaborative views by minimizing the loss function of downstream tasks.
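The objective described above combines two terms: a mutual-information term between pre- and post-collaboration features and a downstream task loss. The snippet does not say which estimator or weighting CMiMC uses. The sketch below assumes an InfoNCE-style contrastive estimator, a common choice for maximizing a lower bound on mutual information. The names `info_nce`, `collaborative_objective`, `weight` and `temperature` are hypothetical. This NumPy version only evaluates the loss value; a real training loop would compute it in an autodiff framework so gradients can flow.

```python
import numpy as np


def info_nce(pre: np.ndarray, post: np.ndarray, temperature: float = 0.1) -> float:
    """InfoNCE loss between paired feature rows (assumed estimator, not CMiMC's).

    Row i of `pre` (features before collaboration) is the positive partner of
    row i of `post` (features after collaboration); all other rows act as
    negatives. Minimising this loss maximises the lower bound
    I(pre; post) >= log(N) - loss. Rows are assumed to be non-zero.
    """
    # L2-normalise each row so dot products become cosine similarities.
    pre = pre / np.linalg.norm(pre, axis=1, keepdims=True)
    post = post / np.linalg.norm(post, axis=1, keepdims=True)
    logits = pre @ post.T / temperature  # (N, N) similarity matrix
    # Numerically stable log-softmax over each row.
    logits = logits - logits.max(axis=1, keepdims=True)
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    # Positive pairs sit on the diagonal.
    return float(-np.mean(np.diag(log_probs)))


def collaborative_objective(pre, post, task_loss: float,
                            weight: float = 1.0, temperature: float = 0.1) -> float:
    """Hypothetical combined loss: downstream task loss plus weighted InfoNCE term."""
    return task_loss + weight * info_nce(pre, post, temperature)


# Usage on synthetic features: post-collaboration features are a noisy copy
# of the pre-collaboration ones, so the InfoNCE loss should be small.
rng = np.random.default_rng(0)
pre_feats = rng.normal(size=(8, 16))
post_feats = pre_feats + 0.05 * rng.normal(size=(8, 16))
print(collaborative_objective(pre_feats, post_feats, task_loss=0.5))
```

When post-collaboration features stay aligned with their own pre-collaboration counterparts, the diagonal dominates each row and the loss approaches zero. When collaboration scrambles the correspondence, the loss grows. That is the "preserve discriminative information of individual views" behaviour the abstract describes.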

On-demand Quantization for Green Federated Generative Diffusion in Mobile Edge Networks

no code implementations • 7 Mar 2024 • Bingkun Lai, Jiayi He, Jiawen Kang, Gaolei Li, Minrui Xu, Tao Zhang, Shengli Xie

Federated learning is a promising technique for effectively training GAI models in mobile edge networks because it trains on data that remains distributed across devices.

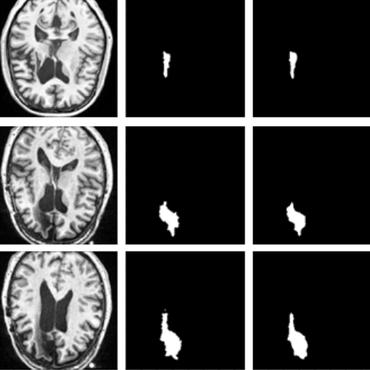

Slicer Networks

no code implementations • 18 Jan 2024 • Hang Zhang, Xiang Chen, Rongguang Wang, Renjiu Hu, Dongdong Liu, Gaolei Li

In medical imaging, scans often reveal objects with varied contrasts but consistent internal intensities or textures.

Toward the Tradeoffs between Privacy, Fairness and Utility in Federated Learning

no code implementations • 30 Nov 2023 • Kangkang Sun, Xiaojin Zhang, Xi Lin, Gaolei Li, Jing Wang, Jianhua Li

Researchers have struggled to design FL systems that ensure fair results.

Spatially Covariant Image Registration with Text Prompts

1 code implementation • 27 Nov 2023 • Xiang Chen, Min Liu, Rongguang Wang, Renjiu Hu, Dongdong Liu, Gaolei Li, Hang Zhang

Medical images are often characterized by their structured anatomical representations and spatially inhomogeneous contrasts.

Ranked #2 on Image Registration on Unpaired-abdomen-CT (using extra training data)

Privacy-preserving Few-shot Traffic Detection against Advanced Persistent Threats via Federated Meta Learning

1 code implementation • IEEE Transactions on Network Science and Engineering 2023 • Yilun Hu, Jun Wu, Gaolei Li, Jianhua Li, Jinke Cheng

Extensive experiments on multiple benchmark datasets, such as CICIDS2017 and DAPT 2020, demonstrate the superiority of the proposed PFTD.

Graph Anomaly Detection at Group Level: A Topology Pattern Enhanced Unsupervised Approach

no code implementations • 2 Aug 2023 • Xing Ai, Jialong Zhou, Yulin Zhu, Gaolei Li, Tomasz P. Michalak, Xiapu Luo, Kai Zhou

Graph anomaly detection (GAD) has achieved success and has been widely applied in various domains, such as fraud detection, cybersecurity, financial security, and biochemistry.

Few-shot Multi-domain Knowledge Rearming for Context-aware Defence against Advanced Persistent Threats

no code implementations • 13 Jun 2023 • Gaolei Li, YuanYuan Zhao, Wenqi Wei, Yuchen Liu

Secondly, to rearm current security strategies, a fine-tuning-based deployment mechanism is proposed to transfer learned knowledge into the student model while minimizing the defense cost.

Privacy Inference-Empowered Stealthy Backdoor Attack on Federated Learning under Non-IID Scenarios

no code implementations • 13 Jun 2023 • Haochen Mei, Gaolei Li, Jun Wu, Longfei Zheng

In this paper, we propose a novel privacy inference-empowered stealthy backdoor attack (PI-SBA) scheme for FL under non-IID scenarios.

CATFL: Certificateless Authentication-based Trustworthy Federated Learning for 6G Semantic Communications

no code implementations • 1 Feb 2023 • Gaolei Li, YuanYuan Zhao, Yi Li

Most existing studies on trustworthy FL aim to eliminate data poisoning threats produced by malicious clients, but in many cases, eliminating model poisoning attacks launched by fake servers is also an important objective.

FocusedCleaner: Sanitizing Poisoned Graphs for Robust GNN-based Node Classification

no code implementations • 25 Oct 2022 • Yulin Zhu, Liang Tong, Gaolei Li, Xiapu Luo, Kai Zhou

Graph Neural Networks (GNNs) are vulnerable to data poisoning attacks, which produce a poisoned graph that is then fed as input to the GNN models.

Automatically Lock Your Neural Networks When You're Away

no code implementations • 15 Mar 2021 • Ge Ren, Jun Wu, Gaolei Li, Shenghong Li

Smartphones and laptops can be unlocked by face or fingerprint recognition, whereas neural networks, which handle numerous requests every day, have little capability to distinguish between untrustworthy and credible users.