Search Results for author: Haeyong Kang

Found 10 papers, 9 papers with code

Continual Learning: Forget-free Winning Subnetworks for Video Representations

2 code implementations • 19 Dec 2023 • Haeyong Kang, Jaehong Yoon, Sung Ju Hwang, Chang D. Yoo

Inspired by the Lottery Ticket Hypothesis (LTH), which highlights the existence of efficient subnetworks within larger, dense networks, this work considers a Winning Subnetwork (WSN) that achieves high task performance under appropriate sparsity conditions for various continual learning tasks.

Progressive Fourier Neural Representation for Sequential Video Compilation

2 code implementations • 20 Jun 2023 • Haeyong Kang, Jaehong Yoon, Dahyun Kim, Sung Ju Hwang, Chang D. Yoo

Motivated by continual learning, this work investigates how to accumulate and transfer neural implicit representations for multiple complex video data over sequential encoding sessions.

Forget-free Continual Learning with Soft-Winning SubNetworks

1 code implementation • 27 Mar 2023 • Haeyong Kang, Jaehong Yoon, Sultan Rizky Madjid, Sung Ju Hwang, Chang D. Yoo

Inspired by the Regularized Lottery Ticket Hypothesis (RLTH), which states that competitive smooth (non-binary) subnetworks exist within a dense network in continual learning tasks, we investigate two proposed architecture-based continual learning methods that sequentially learn and select adaptive binary subnetworks (WSN) and non-binary Soft-Subnetworks (SoftNet) for each task.

Maximum margin learning of t-SPNs for cell classification with filtered input

no code implementations • 16 Mar 2023 • Haeyong Kang, Chang D. Yoo, Yongcheon Na

The t-SPN is constructed such that the unnormalized probability is represented as conditional probabilities of a subset of the most similar cell classes.

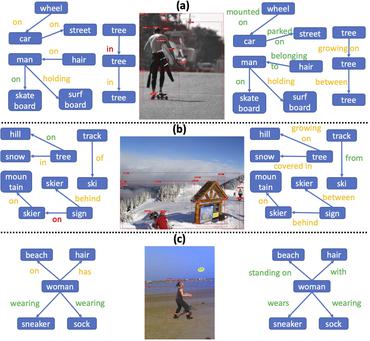

Skew Class-balanced Re-weighting for Unbiased Scene Graph Generation

1 code implementation • 1 Jan 2023 • Haeyong Kang, Chang D. Yoo

An unbiased scene graph generation (SGG) algorithm referred to as Skew Class-balanced Re-weighting (SCR) is proposed to address the biased predicate prediction caused by the long-tailed distribution.

On the Soft-Subnetwork for Few-shot Class Incremental Learning

2 code implementations • 15 Sep 2022 • Haeyong Kang, Jaehong Yoon, Sultan Rizky Hikmawan Madjid, Sung Ju Hwang, Chang D. Yoo

Inspired by the Regularized Lottery Ticket Hypothesis (RLTH), which hypothesizes that there exist smooth (non-binary) subnetworks within a dense network that achieve the competitive performance of the dense network, we propose a few-shot class incremental learning (FSCIL) method referred to as Soft-SubNetworks (SoftNet).

Forget-free Continual Learning with Winning Subnetworks

1 code implementation • International Conference on Machine Learning 2022 • Haeyong Kang, Rusty John Lloyd Mina, Sultan Rizky Hikmawan Madjid, Jaehong Yoon, Mark Hasegawa-Johnson, Sung Ju Hwang, Chang D. Yoo

Inspired by the Lottery Ticket Hypothesis, which holds that competitive subnetworks exist within a dense network, we propose a continual learning method referred to as Winning SubNetworks (WSN), which sequentially learns and selects an optimal subnetwork for each task.

Learning Imbalanced Datasets with Maximum Margin Loss

1 code implementation • 11 Jun 2022 • Haeyong Kang, Thang Vu, Chang D. Yoo

A learning algorithm referred to as Maximum Margin (MM) is proposed to address the class-imbalanced learning problem, in which the trained model tends to predict the majority classes rather than the minority ones.

Semantic Grouping Network for Video Captioning

1 code implementation • 1 Feb 2021 • Hobin Ryu, Sunghun Kang, Haeyong Kang, Chang D. Yoo

This paper considers a video captioning network referred to as Semantic Grouping Network (SGN) that attempts (1) to group video frames with discriminating word phrases of the partially decoded caption and then (2) to decode those semantically aligned groups to predict the next word.

SCNet: Training Inference Sample Consistency for Instance Segmentation

2 code implementations • 18 Dec 2020 • Thang Vu, Haeyong Kang, Chang D. Yoo

This paper proposes an architecture referred to as Sample Consistency Network (SCNet) to ensure that the IoU distribution of the samples at training time is close to that at inference time.