Search Results for author: Jianhua Han

Found 34 papers, 6 papers with code

DetCLIPv3: Towards Versatile Generative Open-vocabulary Object Detection

no code implementations • 14 Apr 2024 • Lewei Yao, Renjie Pi, Jianhua Han, Xiaodan Liang, Hang Xu, Wei zhang, Zhenguo Li, Dan Xu

This is followed by a fine-tuning stage that leverages a small number of high-resolution samples to further enhance detection performance.

LayerDiff: Exploring Text-guided Multi-layered Composable Image Synthesis via Layer-Collaborative Diffusion Model

no code implementations • 18 Mar 2024 • Runhui Huang, Kaixin Cai, Jianhua Han, Xiaodan Liang, Renjing Pei, Guansong Lu, Songcen Xu, Wei zhang, Hang Xu

Specifically, an inter-layer attention module is designed to encourage information exchange and learning between layers, while a text-guided intra-layer attention module incorporates layer-specific prompts to direct the specific-content generation for each layer.

NavCoT: Boosting LLM-Based Vision-and-Language Navigation via Learning Disentangled Reasoning

1 code implementation • 12 Mar 2024 • Bingqian Lin, Yunshuang Nie, Ziming Wei, Jiaqi Chen, Shikui Ma, Jianhua Han, Hang Xu, Xiaojun Chang, Xiaodan Liang

Vision-and-Language Navigation (VLN), as a crucial research problem of Embodied AI, requires an embodied agent to navigate through complex 3D environments following natural language instructions.

From Summary to Action: Enhancing Large Language Models for Complex Tasks with Open World APIs

no code implementations • 28 Feb 2024 • Yulong Liu, Yunlong Yuan, Chunwei Wang, Jianhua Han, Yongqiang Ma, Li Zhang, Nanning Zheng, Hang Xu

In this work, we introduce a novel tool invocation pipeline designed to control massive real-world APIs.

Task-customized Masked AutoEncoder via Mixture of Cluster-conditional Experts

no code implementations • 8 Feb 2024 • Zhili Liu, Kai Chen, Jianhua Han, Lanqing Hong, Hang Xu, Zhenguo Li, James T. Kwok

It also obtains new state-of-the-art self-supervised learning results on detection and segmentation.

PanGu-Draw: Advancing Resource-Efficient Text-to-Image Synthesis with Time-Decoupled Training and Reusable Coop-Diffusion

no code implementations • 27 Dec 2023 • Guansong Lu, Yuanfan Guo, Jianhua Han, Minzhe Niu, Yihan Zeng, Songcen Xu, Zeyi Huang, Zhao Zhong, Wei zhang, Hang Xu

Current large-scale diffusion models represent a giant leap forward in conditional image synthesis, capable of interpreting diverse cues like text, human poses, and edges.

G-LLaVA: Solving Geometric Problem with Multi-Modal Large Language Model

1 code implementation • 18 Dec 2023 • Jiahui Gao, Renjie Pi, Jipeng Zhang, Jiacheng Ye, Wanjun Zhong, YuFei Wang, Lanqing Hong, Jianhua Han, Hang Xu, Zhenguo Li, Lingpeng Kong

We first analyze the limitations of current Multimodal Large Language Models (MLLMs) in this area: they struggle to accurately comprehend basic geometric elements and their relationships.

Reason2Drive: Towards Interpretable and Chain-based Reasoning for Autonomous Driving

1 code implementation • 6 Dec 2023 • Ming Nie, Renyuan Peng, Chunwei Wang, Xinyue Cai, Jianhua Han, Hang Xu, Li Zhang

Large vision-language models (VLMs) have garnered increasing interest in autonomous driving areas, due to their advanced capabilities in complex reasoning tasks essential for highly autonomous vehicle behavior.

Gaining Wisdom from Setbacks: Aligning Large Language Models via Mistake Analysis

no code implementations • 16 Oct 2023 • Kai Chen, Chunwei Wang, Kuo Yang, Jianhua Han, Lanqing Hong, Fei Mi, Hang Xu, Zhengying Liu, Wenyong Huang, Zhenguo Li, Dit-yan Yeung, Lifeng Shang, Xin Jiang, Qun Liu

The rapid development of large language models (LLMs) has not only provided numerous opportunities but also presented significant challenges.

Implicit Concept Removal of Diffusion Models

no code implementations • 9 Oct 2023 • Zhili Liu, Kai Chen, Yifan Zhang, Jianhua Han, Lanqing Hong, Hang Xu, Zhenguo Li, Dit-yan Yeung, James Kwok

To address this, we utilize the intrinsic geometric characteristics of implicit concepts and present Geom-Erasing, a novel concept removal method based on geometric-driven control.

HiLM-D: Towards High-Resolution Understanding in Multimodal Large Language Models for Autonomous Driving

no code implementations • 11 Sep 2023 • Xinpeng Ding, Jianhua Han, Hang Xu, Wei zhang, Xiaomeng Li

For the first time, we leverage singular multimodal large language models (MLLMs) to consolidate multiple autonomous driving tasks from videos, i.e., the Risk Object Localization and Intention and Suggestion Prediction (ROLISP) task.

Any-Size-Diffusion: Toward Efficient Text-Driven Synthesis for Any-Size HD Images

no code implementations • 31 Aug 2023 • Qingping Zheng, Yuanfan Guo, Jiankang Deng, Jianhua Han, Ying Li, Songcen Xu, Hang Xu

Stable diffusion, a generative model used in text-to-image synthesis, frequently encounters resolution-induced composition problems when generating images of varying sizes.

GrowCLIP: Data-aware Automatic Model Growing for Large-scale Contrastive Language-Image Pre-training

no code implementations • ICCV 2023 • Xinchi Deng, Han Shi, Runhui Huang, Changlin Li, Hang Xu, Jianhua Han, James Kwok, Shen Zhao, Wei zhang, Xiaodan Liang

Compared with existing methods, GrowCLIP improves average top-1 accuracy by 2.3% on zero-shot image classification across 9 downstream tasks.

DiffDis: Empowering Generative Diffusion Model with Cross-Modal Discrimination Capability

no code implementations • ICCV 2023 • Runhui Huang, Jianhua Han, Guansong Lu, Xiaodan Liang, Yihan Zeng, Wei zhang, Hang Xu

DiffDis first formulates the image-text discriminative problem as a generative diffusion process of the text embedding from the text encoder conditioned on the image.

CorNav: Autonomous Agent with Self-Corrected Planning for Zero-Shot Vision-and-Language Navigation

no code implementations • 17 Jun 2023 • Xiwen Liang, Liang Ma, Shanshan Guo, Jianhua Han, Hang Xu, Shikui Ma, Xiaodan Liang

Understanding and following natural language instructions while navigating through complex, real-world environments poses a significant challenge for general-purpose robots.

RealignDiff: Boosting Text-to-Image Diffusion Model with Coarse-to-fine Semantic Re-alignment

1 code implementation • 31 May 2023 • Guian Fang, Zutao Jiang, Jianhua Han, Guansong Lu, Hang Xu, Shengcai Liao, Xiaodan Liang

Recent advances in text-to-image diffusion models have achieved remarkable success in generating high-quality, realistic images from textual descriptions.

DetGPT: Detect What You Need via Reasoning

1 code implementation • 23 May 2023 • Renjie Pi, Jiahui Gao, Shizhe Diao, Rui Pan, Hanze Dong, Jipeng Zhang, Lewei Yao, Jianhua Han, Hang Xu, Lingpeng Kong, Tong Zhang

Overall, our proposed paradigm and DetGPT demonstrate the potential for more sophisticated and intuitive interactions between humans and machines.

DetCLIPv2: Scalable Open-Vocabulary Object Detection Pre-training via Word-Region Alignment

no code implementations • CVPR 2023 • Lewei Yao, Jianhua Han, Xiaodan Liang, Dan Xu, Wei zhang, Zhenguo Li, Hang Xu

This paper presents DetCLIPv2, an efficient and scalable training framework that incorporates large-scale image-text pairs to achieve open-vocabulary object detection (OVD).

CLIP$^2$: Contrastive Language-Image-Point Pretraining from Real-World Point Cloud Data

no code implementations • 22 Mar 2023 • Yihan Zeng, Chenhan Jiang, Jiageng Mao, Jianhua Han, Chaoqiang Ye, Qingqiu Huang, Dit-yan Yeung, Zhen Yang, Xiaodan Liang, Hang Xu

Contrastive Language-Image Pre-training, benefiting from large-scale unlabeled text-image pairs, has demonstrated great performance in open-world vision understanding tasks.

Ranked #3 on Zero-shot 3D Point Cloud Classification on ScanNetV2

Towards Universal Vision-language Omni-supervised Segmentation

no code implementations • 12 Mar 2023 • Bowen Dong, Jiaxi Gu, Jianhua Han, Hang Xu, WangMeng Zuo

To improve open-world segmentation ability, we incorporate omni-supervised data (i.e., panoptic segmentation data, object detection data, and image-text pair data) into training, achieving better segmentation accuracy.

CapDet: Unifying Dense Captioning and Open-World Detection Pretraining

no code implementations • CVPR 2023 • Yanxin Long, Youpeng Wen, Jianhua Han, Hang Xu, Pengzhen Ren, Wei zhang, Shen Zhao, Xiaodan Liang

Besides, our CapDet also achieves state-of-the-art performance on dense captioning tasks, e.g., 15.44% mAP on VG V1.2 and 13.98% on the VG-COCO dataset.

Visual Exemplar Driven Task-Prompting for Unified Perception in Autonomous Driving

no code implementations • CVPR 2023 • Xiwen Liang, Minzhe Niu, Jianhua Han, Hang Xu, Chunjing Xu, Xiaodan Liang

Multi-task learning has emerged as a powerful paradigm to solve a range of tasks simultaneously with good efficiency in both computation resources and inference time.

CLIP2: Contrastive Language-Image-Point Pretraining From Real-World Point Cloud Data

no code implementations • CVPR 2023 • Yihan Zeng, Chenhan Jiang, Jiageng Mao, Jianhua Han, Chaoqiang Ye, Qingqiu Huang, Dit-yan Yeung, Zhen Yang, Xiaodan Liang, Hang Xu

Contrastive Language-Image Pre-training, benefiting from large-scale unlabeled text-image pairs, has demonstrated great performance in open-world vision understanding tasks.

NLIP: Noise-robust Language-Image Pre-training

no code implementations • 14 Dec 2022 • Runhui Huang, Yanxin Long, Jianhua Han, Hang Xu, Xiwen Liang, Chunjing Xu, Xiaodan Liang

Large-scale cross-modal pre-training paradigms have recently shown ubiquitous success on a wide range of downstream tasks, e.g., zero-shot classification, retrieval and image captioning.

Fine-grained Visual-Text Prompt-Driven Self-Training for Open-Vocabulary Object Detection

no code implementations • 2 Nov 2022 • Yanxin Long, Jianhua Han, Runhui Huang, Xu Hang, Yi Zhu, Chunjing Xu, Xiaodan Liang

Inspired by the success of vision-language methods (VLMs) in zero-shot classification, recent works attempt to extend this line of work into object detection by leveraging the localization ability of pre-trained VLMs and generating pseudo labels for unseen classes in a self-training manner.

Generative Negative Text Replay for Continual Vision-Language Pretraining

no code implementations • 31 Oct 2022 • Shipeng Yan, Lanqing Hong, Hang Xu, Jianhua Han, Tinne Tuytelaars, Zhenguo Li, Xuming He

In this work, we focus on learning a VLP model with sequential chunks of image-text pair data.

DetCLIP: Dictionary-Enriched Visual-Concept Paralleled Pre-training for Open-world Detection

no code implementations • 20 Sep 2022 • Lewei Yao, Jianhua Han, Youpeng Wen, Xiaodan Liang, Dan Xu, Wei zhang, Zhenguo Li, Chunjing Xu, Hang Xu

We further design a concept dictionary (with descriptions) from various online sources and detection datasets to provide prior knowledge for each concept.

Effective Adaptation in Multi-Task Co-Training for Unified Autonomous Driving

no code implementations • 19 Sep 2022 • Xiwen Liang, Yangxin Wu, Jianhua Han, Hang Xu, Chunjing Xu, Xiaodan Liang

Aiming towards a holistic understanding of multiple downstream tasks simultaneously, there is a need for extracting features with better transferability.

Open-world Semantic Segmentation via Contrasting and Clustering Vision-Language Embedding

no code implementations • 18 Jul 2022 • Quande Liu, Youpeng Wen, Jianhua Han, Chunjing Xu, Hang Xu, Xiaodan Liang

To bridge the gap between supervised semantic segmentation and real-world applications that require one model to recognize arbitrary new concepts, recent zero-shot segmentation work has attracted considerable attention by exploring the relationships between unseen and seen object categories, yet it still requires large amounts of densely annotated data with diverse base classes.

Task-Customized Self-Supervised Pre-training with Scalable Dynamic Routing

no code implementations • 26 May 2022 • Zhili Liu, Jianhua Han, Lanqing Hong, Hang Xu, Kai Chen, Chunjing Xu, Zhenguo Li

On the other hand, for existing SSL methods, it is burdensome and infeasible to use different downstream-task-customized datasets in pre-training for different tasks.

ONCE-3DLanes: Building Monocular 3D Lane Detection

2 code implementations • CVPR 2022 • Fan Yan, Ming Nie, Xinyue Cai, Jianhua Han, Hang Xu, Zhen Yang, Chaoqiang Ye, Yanwei Fu, Michael Bi Mi, Li Zhang

We present ONCE-3DLanes, a real-world autonomous driving dataset with lane layout annotation in 3D space.

Laneformer: Object-aware Row-Column Transformers for Lane Detection

no code implementations • 18 Mar 2022 • Jianhua Han, Xiajun Deng, Xinyue Cai, Zhen Yang, Hang Xu, Chunjing Xu, Xiaodan Liang

We present Laneformer, a conceptually simple yet powerful transformer-based architecture tailored for lane detection, a long-standing research topic in visual perception for autonomous driving.

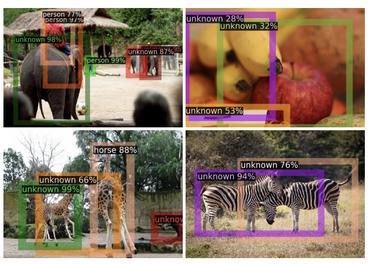

CODA: A Real-World Road Corner Case Dataset for Object Detection in Autonomous Driving

no code implementations • 15 Mar 2022 • Kaican Li, Kai Chen, Haoyu Wang, Lanqing Hong, Chaoqiang Ye, Jianhua Han, Yukuai Chen, Wei zhang, Chunjing Xu, Dit-yan Yeung, Xiaodan Liang, Zhenguo Li, Hang Xu

One main reason that impedes the development of truly reliable self-driving systems is the lack of public datasets for evaluating the performance of object detectors on corner cases.

SODA10M: A Large-Scale 2D Self/Semi-Supervised Object Detection Dataset for Autonomous Driving

no code implementations • 21 Jun 2021 • Jianhua Han, Xiwen Liang, Hang Xu, Kai Chen, Lanqing Hong, Jiageng Mao, Chaoqiang Ye, Wei zhang, Zhenguo Li, Xiaodan Liang, Chunjing Xu

Experiments show that SODA10M can serve as a promising pre-training dataset for different self-supervised learning methods, giving superior performance when fine-tuned on different downstream tasks (i.e., detection, semantic/instance segmentation) in the autonomous driving domain.