Search Results for author: Jilan Xu

Found 22 papers, 10 papers with code

Concept-Attention Whitening for Interpretable Skin Lesion Diagnosis

no code implementations • 9 Apr 2024 • Junlin Hou, Jilan Xu, Hao Chen

In the former branch, we train the CNN with a CAW layer inserted to perform skin lesion diagnosis.

QMix: Quality-aware Learning with Mixed Noise for Robust Retinal Disease Diagnosis

no code implementations • 8 Apr 2024 • Junlin Hou, Jilan Xu, Rui Feng, Hao Chen

Previous noise learning methods mainly considered noise arising from images being mislabeled, i.e., label noise, assuming that all mislabeled images are of high image quality.

EgoExoLearn: A Dataset for Bridging Asynchronous Ego- and Exo-centric View of Procedural Activities in Real World

1 code implementation • 24 Mar 2024 • Yifei HUANG, Guo Chen, Jilan Xu, Mingfang Zhang, Lijin Yang, Baoqi Pei, Hongjie Zhang, Lu Dong, Yali Wang, LiMin Wang, Yu Qiao

Along with the videos we record high-quality gaze data and provide detailed multimodal annotations, formulating a playground for modeling the human ability to bridge asynchronous procedural actions from different viewpoints.

InternVideo2: Scaling Video Foundation Models for Multimodal Video Understanding

2 code implementations • 22 Mar 2024 • Yi Wang, Kunchang Li, Xinhao Li, Jiashuo Yu, Yinan He, Guo Chen, Baoqi Pei, Rongkun Zheng, Jilan Xu, Zun Wang, Yansong Shi, Tianxiang Jiang, Songze Li, Hongjie Zhang, Yifei HUANG, Yu Qiao, Yali Wang, LiMin Wang

We introduce InternVideo2, a new video foundation model (ViFM) that achieves state-of-the-art performance in action recognition, video-text tasks, and video-centric dialogue.

Ranked #1 on Audio Classification on ESC-50 (using extra training data)

Advancing COVID-19 Detection in 3D CT Scans

no code implementations • 18 Mar 2024 • Qingqiu Li, Runtian Yuan, Junlin Hou, Jilan Xu, Yuejie Zhang, Rui Feng, Hao Chen

To make a more accurate diagnosis of COVID-19, we propose a straightforward yet effective model.

Domain Adaptation Using Pseudo Labels for COVID-19 Detection

no code implementations • 18 Mar 2024 • Runtian Yuan, Qingqiu Li, Junlin Hou, Jilan Xu, Yuejie Zhang, Rui Feng, Hao Chen

In response to the need for rapid and accurate COVID-19 diagnosis during the global pandemic, we present a two-stage framework that leverages pseudo labels for domain adaptation to enhance the detection of COVID-19 from CT scans.

Anatomical Structure-Guided Medical Vision-Language Pre-training

no code implementations • 14 Mar 2024 • Qingqiu Li, Xiaohan Yan, Jilan Xu, Runtian Yuan, Yuejie Zhang, Rui Feng, Quanli Shen, Xiaobo Zhang, Shujun Wang

For finding and existence, we regard them as image tags, applying an image-tag recognition decoder to associate image features with their respective tags within each sample and constructing soft labels for contrastive learning to improve the semantic association of different image-report pairs.

Video Mamba Suite: State Space Model as a Versatile Alternative for Video Understanding

1 code implementation • 14 Mar 2024 • Guo Chen, Yifei HUANG, Jilan Xu, Baoqi Pei, Zhe Chen, Zhiqi Li, Jiahao Wang, Kunchang Li, Tong Lu, LiMin Wang

We categorize Mamba into four roles for modeling videos, deriving a Video Mamba Suite composed of 14 models/modules, and evaluating them on 12 video understanding tasks.

Ranked #1 on Temporal Action Localization on FineAction

Retrieval-Augmented Egocentric Video Captioning

no code implementations • 1 Jan 2024 • Jilan Xu, Yifei HUANG, Junlin Hou, Guo Chen, Yuejie Zhang, Rui Feng, Weidi Xie

In this paper, (1) we develop EgoInstructor, a retrieval-augmented multimodal captioning model that automatically retrieves semantically relevant third-person instructional videos to enhance the video captioning of egocentric videos.

MVBench: A Comprehensive Multi-modal Video Understanding Benchmark

1 code implementation • 28 Nov 2023 • Kunchang Li, Yali Wang, Yinan He, Yizhuo Li, Yi Wang, Yi Liu, Zun Wang, Jilan Xu, Guo Chen, Ping Luo, LiMin Wang, Yu Qiao

With the rapid development of Multi-modal Large Language Models (MLLMs), a number of diagnostic benchmarks have recently emerged to evaluate the comprehension capabilities of these models.

Ranked #1 on Zero-Shot Video Question Answer on STAR Benchmark

Enhanced Knowledge Injection for Radiology Report Generation

no code implementations • 1 Nov 2023 • Qingqiu Li, Jilan Xu, Runtian Yuan, Mohan Chen, Yuejie Zhang, Rui Feng, Xiaobo Zhang, Shang Gao

Automatic generation of radiology reports holds crucial clinical value, as it can alleviate substantial workload on radiologists and remind less experienced ones of potential anomalies.

VideoLLM: Modeling Video Sequence with Large Language Models

1 code implementation • 22 May 2023 • Guo Chen, Yin-Dong Zheng, Jiahao Wang, Jilan Xu, Yifei HUANG, Junting Pan, Yi Wang, Yali Wang, Yu Qiao, Tong Lu, LiMin Wang

Building upon this insight, we propose a novel framework called VideoLLM that leverages the sequence reasoning capabilities of pre-trained LLMs from natural language processing (NLP) for video sequence understanding.

Mask Hierarchical Features For Self-Supervised Learning

no code implementations • 1 Apr 2023 • Fenggang Liu, Yangguang Li, Feng Liang, Jilan Xu, Bin Huang, Jing Shao

We mask a portion of the patches in the representation space and then utilize the sparse visible patches to reconstruct a high-semantic image representation.

Learning Open-vocabulary Semantic Segmentation Models From Natural Language Supervision

1 code implementation • CVPR 2023 • Jilan Xu, Junlin Hou, Yuejie Zhang, Rui Feng, Yi Wang, Yu Qiao, Weidi Xie

The former aims to infer all masked entities in the caption given the group tokens, which enables the model to learn fine-grained alignment between visual groups and text entities.

InternVideo: General Video Foundation Models via Generative and Discriminative Learning

1 code implementation • 6 Dec 2022 • Yi Wang, Kunchang Li, Yizhuo Li, Yinan He, Bingkun Huang, Zhiyu Zhao, Hongjie Zhang, Jilan Xu, Yi Liu, Zun Wang, Sen Xing, Guo Chen, Junting Pan, Jiashuo Yu, Yali Wang, LiMin Wang, Yu Qiao

Specifically, InternVideo efficiently explores masked video modeling and video-language contrastive learning as the pretraining objectives, and selectively coordinates video representations of these two complementary frameworks in a learnable manner to boost various video applications.

Ranked #1 on Action Recognition on Something-Something V1 (using extra training data)

Cross-Field Transformer for Diabetic Retinopathy Grading on Two-field Fundus Images

1 code implementation • 26 Nov 2022 • Junlin Hou, Jilan Xu, Fan Xiao, Rui-Wei Zhao, Yuejie Zhang, Haidong Zou, Lina Lu, Wenwen Xue, Rui Feng

However, automatic DR grading based on two-field fundus photography remains a challenging task due to the lack of publicly available datasets and effective fusion strategies.

CMC v2: Towards More Accurate COVID-19 Detection with Discriminative Video Priors

no code implementations • 26 Nov 2022 • Junlin Hou, Jilan Xu, Nan Zhang, Yi Wang, Yuejie Zhang, Xiaobo Zhang, Rui Feng

This paper presents our solution for the 2nd COVID-19 Competition, occurring in the framework of the AIMIA Workshop at the European Conference on Computer Vision (ECCV 2022).

Boosting COVID-19 Severity Detection with Infection-aware Contrastive Mixup Classification

no code implementations • 26 Nov 2022 • Junlin Hou, Jilan Xu, Nan Zhang, Yuejie Zhang, Xiaobo Zhang, Rui Feng

In our approach, we devise a novel infection-aware 3D Contrastive Mixup Classification network for severity grading.

Deep-OCTA: Ensemble Deep Learning Approaches for Diabetic Retinopathy Analysis on OCTA Images

1 code implementation • 2 Oct 2022 • Junlin Hou, Fan Xiao, Jilan Xu, Yuejie Zhang, Haidong Zou, Rui Feng

In the image quality assessment task, we create an ensemble of InceptionV3, SE-ResNeXt, and Vision Transformer models.

FDVTS's Solution for 2nd COV19D Competition on COVID-19 Detection and Severity Analysis

no code implementations • 5 Jul 2022 • Junlin Hou, Jilan Xu, Rui Feng, Yuejie Zhang

This paper presents our solution for the 2nd COVID-19 Competition, occurring in the framework of the AIMIA Workshop in the European Conference on Computer Vision (ECCV 2022).

CREAM: Weakly Supervised Object Localization via Class RE-Activation Mapping

1 code implementation • CVPR 2022 • Jilan Xu, Junlin Hou, Yuejie Zhang, Rui Feng, Rui-Wei Zhao, Tao Zhang, Xuequan Lu, Shang Gao

In this paper, we empirically prove that this problem is associated with the mixup of the activation values between less discriminative foreground regions and the background.

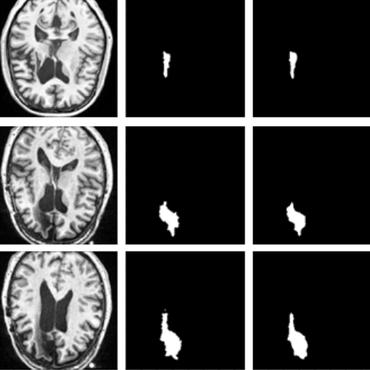

MDU-Net: Multi-scale Densely Connected U-Net for biomedical image segmentation

no code implementations • 2 Dec 2018 • Jiawei Zhang, Yuzhen Jin, Jilan Xu, Xiaowei Xu, Yanchun Zhang

The three multi-scale dense connections improve U-net performance by up to 1.8% on test A and 3.5% on test B in the MICCAI Gland dataset.