Search Results for author: Linjie Li

Found 54 papers, 33 papers with code

Entity6K: A Large Open-Domain Evaluation Dataset for Real-World Entity Recognition

no code implementations • 19 Mar 2024 • JieLin Qiu, William Han, Winfred Wang, Zhengyuan Yang, Linjie Li, JianFeng Wang, Christos Faloutsos, Lei LI, Lijuan Wang

Open-domain real-world entity recognition is essential yet challenging, involving identifying various entities in diverse environments.

TaE: Task-aware Expandable Representation for Long Tail Class Incremental Learning

no code implementations • 8 Feb 2024 • Linjie Li, S. Liu, Zhenyu Wu, Ji Yang

Existing methods mainly focus on preserving representative samples from previous classes to combat catastrophic forgetting.

Bring Metric Functions into Diffusion Models

no code implementations • 4 Jan 2024 • Jie An, Zhengyuan Yang, JianFeng Wang, Linjie Li, Zicheng Liu, Lijuan Wang, Jiebo Luo

The first module, similar to a standard DDPM, learns to predict the added noise and is unaffected by the metric function.

COSMO: COntrastive Streamlined MultimOdal Model with Interleaved Pre-Training

no code implementations • 1 Jan 2024 • Alex Jinpeng Wang, Linjie Li, Kevin Qinghong Lin, JianFeng Wang, Kevin Lin, Zhengyuan Yang, Lijuan Wang, Mike Zheng Shou

COSMO, our unified framework, merges unimodal and multimodal elements, enhancing model performance for tasks involving textual and visual data while notably reducing learnable parameters.

Interfacing Foundation Models' Embeddings

1 code implementation • 12 Dec 2023 • Xueyan Zou, Linjie Li, JianFeng Wang, Jianwei Yang, Mingyu Ding, Zhengyuan Yang, Feng Li, Hao Zhang, Shilong Liu, Arul Aravinthan, Yong Jae Lee, Lijuan Wang

The proposed interface is adaptive to new tasks and new models.

MM-Narrator: Narrating Long-form Videos with Multimodal In-Context Learning

no code implementations • 29 Nov 2023 • Chaoyi Zhang, Kevin Lin, Zhengyuan Yang, JianFeng Wang, Linjie Li, Chung-Ching Lin, Zicheng Liu, Lijuan Wang

We present MM-Narrator, a novel system leveraging GPT-4 with multimodal in-context learning for the generation of audio descriptions (AD).

GPT-4V in Wonderland: Large Multimodal Models for Zero-Shot Smartphone GUI Navigation

2 code implementations • 13 Nov 2023 • An Yan, Zhengyuan Yang, Wanrong Zhu, Kevin Lin, Linjie Li, JianFeng Wang, Jianwei Yang, Yiwu Zhong, Julian McAuley, Jianfeng Gao, Zicheng Liu, Lijuan Wang

We first benchmark MM-Navigator on our collected iOS screen dataset.

The Generative AI Paradox: "What It Can Create, It May Not Understand"

no code implementations • 31 Oct 2023 • Peter West, Ximing Lu, Nouha Dziri, Faeze Brahman, Linjie Li, Jena D. Hwang, Liwei Jiang, Jillian Fisher, Abhilasha Ravichander, Khyathi Chandu, Benjamin Newman, Pang Wei Koh, Allyson Ettinger, Yejin Choi

Specifically, we propose and test the Generative AI Paradox hypothesis: generative models, having been trained directly to reproduce expert-like outputs, acquire generative capabilities that are not contingent upon -- and can therefore exceed -- their ability to understand those same types of outputs.

MM-VID: Advancing Video Understanding with GPT-4V(ision)

no code implementations • 30 Oct 2023 • Kevin Lin, Faisal Ahmed, Linjie Li, Chung-Ching Lin, Ehsan Azarnasab, Zhengyuan Yang, JianFeng Wang, Lin Liang, Zicheng Liu, Yumao Lu, Ce Liu, Lijuan Wang

We present MM-VID, an integrated system that harnesses the capabilities of GPT-4V, combined with specialized tools in vision, audio, and speech, to facilitate advanced video understanding.

DEsignBench: Exploring and Benchmarking DALL-E 3 for Imagining Visual Design

1 code implementation • 23 Oct 2023 • Kevin Lin, Zhengyuan Yang, Linjie Li, JianFeng Wang, Lijuan Wang

For DEsignBench benchmarking, we perform human evaluations on generated images in DEsignBench gallery, against the criteria of image-text alignment, visual aesthetic, and design creativity.

Idea2Img: Iterative Self-Refinement with GPT-4V(ision) for Automatic Image Design and Generation

no code implementations • 12 Oct 2023 • Zhengyuan Yang, JianFeng Wang, Linjie Li, Kevin Lin, Chung-Ching Lin, Zicheng Liu, Lijuan Wang

We introduce "Idea to Image," a system that enables multimodal iterative self-refinement with GPT-4V(ision) for automatic image design and generation.

OpenLEAF: Open-Domain Interleaved Image-Text Generation and Evaluation

no code implementations • 11 Oct 2023 • Jie An, Zhengyuan Yang, Linjie Li, JianFeng Wang, Kevin Lin, Zicheng Liu, Lijuan Wang, Jiebo Luo

We hope our proposed framework, benchmark, and LMM evaluation could help establish the intriguing interleaved image-text generation task.

The Dawn of LMMs: Preliminary Explorations with GPT-4V(ision)

1 code implementation • 29 Sep 2023 • Zhengyuan Yang, Linjie Li, Kevin Lin, JianFeng Wang, Chung-Ching Lin, Zicheng Liu, Lijuan Wang

We hope that this preliminary exploration will inspire future research on the next-generation multimodal task formulation, new ways to exploit and enhance LMMs to solve real-world problems, and gaining better understanding of multimodal foundation models.

Multimodal Foundation Models: From Specialists to General-Purpose Assistants

1 code implementation • 18 Sep 2023 • Chunyuan Li, Zhe Gan, Zhengyuan Yang, Jianwei Yang, Linjie Li, Lijuan Wang, Jianfeng Gao

This paper presents a comprehensive survey of the taxonomy and evolution of multimodal foundation models that demonstrate vision and vision-language capabilities, focusing on the transition from specialist models to general-purpose assistants.

MM-Vet: Evaluating Large Multimodal Models for Integrated Capabilities

1 code implementation • 4 Aug 2023 • Weihao Yu, Zhengyuan Yang, Linjie Li, JianFeng Wang, Kevin Lin, Zicheng Liu, Xinchao Wang, Lijuan Wang

Problems include: (1) How to systematically structure and evaluate the complicated multimodal tasks; (2) How to design evaluation metrics that work well across question and answer types; and (3) How to give model insights beyond a simple performance ranking.

Spatial-Frequency U-Net for Denoising Diffusion Probabilistic Models

no code implementations • 27 Jul 2023 • Xin Yuan, Linjie Li, JianFeng Wang, Zhengyuan Yang, Kevin Lin, Zicheng Liu, Lijuan Wang

In this paper, we study the denoising diffusion probabilistic model (DDPM) in wavelet space, instead of pixel space, for visual synthesis.

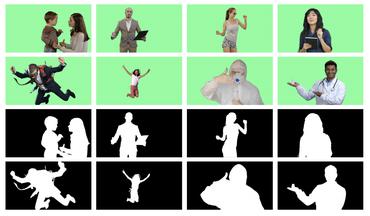

DisCo: Disentangled Control for Realistic Human Dance Generation

1 code implementation • 30 Jun 2023 • Tan Wang, Linjie Li, Kevin Lin, Yuanhao Zhai, Chung-Ching Lin, Zhengyuan Yang, Hanwang Zhang, Zicheng Liu, Lijuan Wang

In this paper, we depart from the traditional paradigm of human motion transfer and emphasize two additional critical attributes for the synthesis of human dance content in social media contexts: (i) Generalizability: the model should be able to generalize beyond generic human viewpoints as well as unseen human subjects, backgrounds, and poses; (ii) Compositionality: it should allow for the seamless composition of seen/unseen subjects, backgrounds, and poses from different sources.

Mitigating Hallucination in Large Multi-Modal Models via Robust Instruction Tuning

3 code implementations • 26 Jun 2023 • Fuxiao Liu, Kevin Lin, Linjie Li, JianFeng Wang, Yaser Yacoob, Lijuan Wang

To efficiently measure the hallucination generated by LMMs, we propose GPT4-Assisted Visual Instruction Evaluation (GAVIE), a stable approach to evaluate visual instruction tuning like human experts.

Ranked #4 on Visual Question Answering (VQA) on HallusionBench

MMSum: A Dataset for Multimodal Summarization and Thumbnail Generation of Videos

1 code implementation • 7 Jun 2023 • JieLin Qiu, Jiacheng Zhu, William Han, Aditesh Kumar, Karthik Mittal, Claire Jin, Zhengyuan Yang, Linjie Li, JianFeng Wang, Ding Zhao, Bo Li, Lijuan Wang

To address these challenges and provide a comprehensive dataset for this new direction, we have meticulously curated the MMSum dataset.

An Empirical Study of Multimodal Model Merging

1 code implementation • 28 Apr 2023 • Yi-Lin Sung, Linjie Li, Kevin Lin, Zhe Gan, Mohit Bansal, Lijuan Wang

In this paper, we expand on this concept to a multimodal setup by merging transformers trained on different modalities.

Segment Everything Everywhere All at Once

2 code implementations • NeurIPS 2023 • Xueyan Zou, Jianwei Yang, Hao Zhang, Feng Li, Linjie Li, JianFeng Wang, Lijuan Wang, Jianfeng Gao, Yong Jae Lee

In SEEM, we propose a novel decoding mechanism that enables diverse prompting for all types of segmentation tasks, aiming at a universal segmentation interface that behaves like large language models (LLMs).

Diagnostic Benchmark and Iterative Inpainting for Layout-Guided Image Generation

1 code implementation • 13 Apr 2023 • Jaemin Cho, Linjie Li, Zhengyuan Yang, Zhe Gan, Lijuan Wang, Mohit Bansal

In this paper, we propose LayoutBench, a diagnostic benchmark for layout-guided image generation that examines four categories of spatial control skills: number, position, size, and shape.

Ranked #1 on Layout-to-Image Generation on LayoutBench

Adaptive Human Matting for Dynamic Videos

1 code implementation • CVPR 2023 • Chung-Ching Lin, Jiang Wang, Kun Luo, Kevin Lin, Linjie Li, Lijuan Wang, Zicheng Liu

The most recent efforts in video matting have focused on eliminating trimap dependency since trimap annotations are expensive and trimap-based methods are less adaptable for real-time applications.

Equivariant Similarity for Vision-Language Foundation Models

1 code implementation • ICCV 2023 • Tan Wang, Kevin Lin, Linjie Li, Chung-Ching Lin, Zhengyuan Yang, Hanwang Zhang, Zicheng Liu, Lijuan Wang

Unlike the existing image-text similarity objective which only categorizes matched pairs as similar and unmatched pairs as dissimilar, equivariance also requires similarity to vary faithfully according to the semantic changes.

Ranked #7 on Visual Reasoning on Winoground

NUWA-XL: Diffusion over Diffusion for eXtremely Long Video Generation

no code implementations • 22 Mar 2023 • Shengming Yin, Chenfei Wu, Huan Yang, JianFeng Wang, Xiaodong Wang, Minheng Ni, Zhengyuan Yang, Linjie Li, Shuguang Liu, Fan Yang, Jianlong Fu, Gong Ming, Lijuan Wang, Zicheng Liu, Houqiang Li, Nan Duan

In this paper, we propose NUWA-XL, a novel Diffusion over Diffusion architecture for eXtremely Long video generation.

MM-REACT: Prompting ChatGPT for Multimodal Reasoning and Action

1 code implementation • 20 Mar 2023 • Zhengyuan Yang, Linjie Li, JianFeng Wang, Kevin Lin, Ehsan Azarnasab, Faisal Ahmed, Zicheng Liu, Ce Liu, Michael Zeng, Lijuan Wang

We propose MM-REACT, a system paradigm that integrates ChatGPT with a pool of vision experts to achieve multimodal reasoning and action.

Ranked #22 on Visual Question Answering on MM-Vet

Learning 3D Photography Videos via Self-supervised Diffusion on Single Images

no code implementations • 21 Feb 2023 • Xiaodong Wang, Chenfei Wu, Shengming Yin, Minheng Ni, JianFeng Wang, Linjie Li, Zhengyuan Yang, Fan Yang, Lijuan Wang, Zicheng Liu, Yuejian Fang, Nan Duan

3D photography renders a static image into a video with appealing 3D visual effects.

Ranked #1 on Image Outpainting on MSCOCO

Generalized Decoding for Pixel, Image, and Language

1 code implementation • CVPR 2023 • Xueyan Zou, Zi-Yi Dou, Jianwei Yang, Zhe Gan, Linjie Li, Chunyuan Li, Xiyang Dai, Harkirat Behl, JianFeng Wang, Lu Yuan, Nanyun Peng, Lijuan Wang, Yong Jae Lee, Jianfeng Gao

We present X-Decoder, a generalized decoding model that can predict pixel-level segmentation and language tokens seamlessly.

Ranked #4 on Instance Segmentation on ADE20K val (using extra training data)

ReCo: Region-Controlled Text-to-Image Generation

no code implementations • CVPR 2023 • Zhengyuan Yang, JianFeng Wang, Zhe Gan, Linjie Li, Kevin Lin, Chenfei Wu, Nan Duan, Zicheng Liu, Ce Liu, Michael Zeng, Lijuan Wang

Human evaluation on PaintSkill shows that ReCo is +19.28% and +17.21% more accurate in generating images with correct object count and spatial relationship than the T2I model.

Ranked #2 on Conditional Text-to-Image Synthesis on COCO-MIG

Vision-Language Pre-training: Basics, Recent Advances, and Future Trends

1 code implementation • 17 Oct 2022 • Zhe Gan, Linjie Li, Chunyuan Li, Lijuan Wang, Zicheng Liu, Jianfeng Gao

This paper surveys vision-language pre-training (VLP) methods for multimodal intelligence that have been developed in the last few years.

An Empirical Study of End-to-End Video-Language Transformers with Masked Visual Modeling

1 code implementation • CVPR 2023 • Tsu-Jui Fu, Linjie Li, Zhe Gan, Kevin Lin, William Yang Wang, Lijuan Wang, Zicheng Liu

Masked visual modeling (MVM) has been recently proven effective for visual pre-training.

Ranked #1 on Video Question Answering on LSMDC-MC

Coarse-to-Fine Vision-Language Pre-training with Fusion in the Backbone

1 code implementation • NeurIPS 2022 • Zi-Yi Dou, Aishwarya Kamath, Zhe Gan, Pengchuan Zhang, JianFeng Wang, Linjie Li, Zicheng Liu, Ce Liu, Yann Lecun, Nanyun Peng, Jianfeng Gao, Lijuan Wang

Vision-language (VL) pre-training has recently received considerable attention.

Ranked #1 on Phrase Grounding on Flickr30k Entities Dev

LAVENDER: Unifying Video-Language Understanding as Masked Language Modeling

1 code implementation • CVPR 2023 • Linjie Li, Zhe Gan, Kevin Lin, Chung-Ching Lin, Zicheng Liu, Ce Liu, Lijuan Wang

In this work, we explore a unified VidL framework LAVENDER, where Masked Language Modeling (MLM) is used as the common interface for all pre-training and downstream tasks.

GIT: A Generative Image-to-text Transformer for Vision and Language

2 code implementations • 27 May 2022 • JianFeng Wang, Zhengyuan Yang, Xiaowei Hu, Linjie Li, Kevin Lin, Zhe Gan, Zicheng Liu, Ce Liu, Lijuan Wang

In this paper, we design and train a Generative Image-to-text Transformer, GIT, to unify vision-language tasks such as image/video captioning and question answering.

Ranked #1 on Image Captioning on nocaps-XD near-domain

Cross-modal Representation Learning for Zero-shot Action Recognition

no code implementations • CVPR 2022 • Chung-Ching Lin, Kevin Lin, Linjie Li, Lijuan Wang, Zicheng Liu

The model design provides a natural mechanism for visual and semantic representations to be learned in a shared knowledge space, whereby it encourages the learned visual embedding to be discriminative and more semantically consistent.

Ranked #3 on Zero-Shot Action Recognition on ActivityNet

MLP Architectures for Vision-and-Language Modeling: An Empirical Study

1 code implementation • 8 Dec 2021 • Yixin Nie, Linjie Li, Zhe Gan, Shuohang Wang, Chenguang Zhu, Michael Zeng, Zicheng Liu, Mohit Bansal, Lijuan Wang

Based on this, we ask an even bolder question: can we have an all-MLP architecture for VL modeling, where both VL fusion and the vision encoder are replaced with MLPs?

SwinBERT: End-to-End Transformers with Sparse Attention for Video Captioning

1 code implementation • CVPR 2022 • Kevin Lin, Linjie Li, Chung-Ching Lin, Faisal Ahmed, Zhe Gan, Zicheng Liu, Yumao Lu, Lijuan Wang

Based on this model architecture, we show that video captioning can benefit significantly from more densely sampled video frames as opposed to previous successes with sparsely sampled video frames for video-and-language understanding tasks (e.g., video question answering).

VIOLET: End-to-End Video-Language Transformers with Masked Visual-token Modeling

1 code implementation • 24 Nov 2021 • Tsu-Jui Fu, Linjie Li, Zhe Gan, Kevin Lin, William Yang Wang, Lijuan Wang, Zicheng Liu

Further, unlike previous studies that found pre-training tasks on video inputs (e.g., masked frame modeling) not very effective, we design a new pre-training task, Masked Visual-token Modeling (MVM), for better video modeling.

Ranked #20 on Zero-Shot Video Retrieval on DiDeMo

VALUE: A Multi-Task Benchmark for Video-and-Language Understanding Evaluation

1 code implementation • 8 Jun 2021 • Linjie Li, Jie Lei, Zhe Gan, Licheng Yu, Yen-Chun Chen, Rohit Pillai, Yu Cheng, Luowei Zhou, Xin Eric Wang, William Yang Wang, Tamara Lee Berg, Mohit Bansal, Jingjing Liu, Lijuan Wang, Zicheng Liu

Most existing video-and-language (VidL) research focuses on a single dataset, or multiple datasets of a single task.

Adversarial VQA: A New Benchmark for Evaluating the Robustness of VQA Models

no code implementations • ICCV 2021 • Linjie Li, Jie Lei, Zhe Gan, Jingjing Liu

We hope our Adversarial VQA dataset can shed new light on robustness study in the community and serve as a valuable benchmark for future work.

Playing Lottery Tickets with Vision and Language

no code implementations • 23 Apr 2021 • Zhe Gan, Yen-Chun Chen, Linjie Li, Tianlong Chen, Yu Cheng, Shuohang Wang, Jingjing Liu, Lijuan Wang, Zicheng Liu

However, we can find "relaxed" winning tickets at 50%-70% sparsity that maintain 99% of the full accuracy.

UC2: Universal Cross-lingual Cross-modal Vision-and-Language Pre-training

no code implementations • CVPR 2021 • Mingyang Zhou, Luowei Zhou, Shuohang Wang, Yu Cheng, Linjie Li, Zhou Yu, Jingjing Liu

Vision-and-language pre-training has achieved impressive success in learning multimodal representations between vision and language.

LightningDOT: Pre-training Visual-Semantic Embeddings for Real-Time Image-Text Retrieval

2 code implementations • NAACL 2021 • Siqi Sun, Yen-Chun Chen, Linjie Li, Shuohang Wang, Yuwei Fang, Jingjing Liu

Multimodal pre-training has propelled great advancement in vision-and-language research.

Less is More: ClipBERT for Video-and-Language Learning via Sparse Sampling

1 code implementation • CVPR 2021 • Jie Lei, Linjie Li, Luowei Zhou, Zhe Gan, Tamara L. Berg, Mohit Bansal, Jingjing Liu

Experiments on text-to-video retrieval and video question answering on six datasets demonstrate that ClipBERT outperforms (or is on par with) existing methods that exploit full-length videos, suggesting that end-to-end learning with just a few sparsely sampled clips is often more accurate than using densely extracted offline features from full-length videos, proving the proverbial less-is-more principle.

Ranked #24 on Visual Question Answering (VQA) on MSRVTT-QA (using extra training data)

A Closer Look at the Robustness of Vision-and-Language Pre-trained Models

no code implementations • 15 Dec 2020 • Linjie Li, Zhe Gan, Jingjing Liu

Large-scale pre-trained multimodal transformers, such as ViLBERT and UNITER, have propelled the state of the art in vision-and-language (V+L) research to a new level.

Graph Optimal Transport for Cross-Domain Alignment

1 code implementation • ICML 2020 • Liqun Chen, Zhe Gan, Yu Cheng, Linjie Li, Lawrence Carin, Jingjing Liu

In GOT, cross-domain alignment is formulated as a graph matching problem, by representing entities into a dynamically-constructed graph.

Large-Scale Adversarial Training for Vision-and-Language Representation Learning

2 code implementations • NeurIPS 2020 • Zhe Gan, Yen-Chun Chen, Linjie Li, Chen Zhu, Yu Cheng, Jingjing Liu

We present VILLA, the first known effort on large-scale adversarial training for vision-and-language (V+L) representation learning.

Ranked #7 on Visual Entailment on SNLI-VE val (using extra training data)

HERO: Hierarchical Encoder for Video+Language Omni-representation Pre-training

3 code implementations • EMNLP 2020 • Linjie Li, Yen-Chun Chen, Yu Cheng, Zhe Gan, Licheng Yu, Jingjing Liu

We present HERO, a novel framework for large-scale video+language omni-representation learning.

Ranked #1 on Video Retrieval on TVR

Meta Module Network for Compositional Visual Reasoning

1 code implementation • 8 Oct 2019 • Wenhu Chen, Zhe Gan, Linjie Li, Yu Cheng, William Wang, Jingjing Liu

To design a more powerful NMN architecture for practical use, we propose Meta Module Network (MMN) centered on a novel meta module, which can take in function recipes and morph into diverse instance modules dynamically.

UNITER: Learning UNiversal Image-TExt Representations

no code implementations • 25 Sep 2019 • Yen-Chun Chen, Linjie Li, Licheng Yu, Ahmed El Kholy, Faisal Ahmed, Zhe Gan, Yu Cheng, Jingjing Liu

Joint image-text embedding is the bedrock for most Vision-and-Language (V+L) tasks, where multimodality inputs are jointly processed for visual and textual understanding.

UNITER: UNiversal Image-TExt Representation Learning

7 code implementations • ECCV 2020 • Yen-Chun Chen, Linjie Li, Licheng Yu, Ahmed El Kholy, Faisal Ahmed, Zhe Gan, Yu Cheng, Jingjing Liu

Different from previous work that applies joint random masking to both modalities, we use conditional masking on pre-training tasks (i.e., masked language/region modeling is conditioned on full observation of image/text).

Ranked #3 on Visual Question Answering (VQA) on VCR (Q-A) test

Relation-Aware Graph Attention Network for Visual Question Answering

1 code implementation • ICCV 2019 • Linjie Li, Zhe Gan, Yu Cheng, Jingjing Liu

In order to answer semantically-complicated questions about an image, a Visual Question Answering (VQA) model needs to fully understand the visual scene in the image, especially the interactive dynamics between different objects.

Multi-step Reasoning via Recurrent Dual Attention for Visual Dialog

no code implementations • ACL 2019 • Zhe Gan, Yu Cheng, Ahmed El Kholy, Linjie Li, Jingjing Liu, Jianfeng Gao

This paper presents a new model for visual dialog, Recurrent Dual Attention Network (ReDAN), using multi-step reasoning to answer a series of questions about an image.

Learning to see people like people

no code implementations • 5 May 2017 • Amanda Song, Linjie Li, Chad Atalla, Garrison Cottrell

Humans make complex inferences on faces, ranging from objective properties (gender, ethnicity, expression, age, identity, etc.) to subjective judgments (facial attractiveness, trustworthiness, sociability, friendliness, etc.).