Search Results for author: Lu Yu

Found 47 papers, 13 papers with code

NeRFCodec: Neural Feature Compression Meets Neural Radiance Fields for Memory-Efficient Scene Representation

no code implementations • 2 Apr 2024 • Sicheng Li, Hao Li, Yiyi Liao, Lu Yu

The emergence of Neural Radiance Fields (NeRF) has greatly impacted 3D scene modeling and novel-view synthesis.

PROMPT-SAW: Leveraging Relation-Aware Graphs for Textual Prompt Compression

no code implementations • 30 Mar 2024 • Muhammad Asif Ali, ZhengPing Li, Shu Yang, Keyuan Cheng, Yang Cao, Tianhao Huang, Lijie Hu, Lu Yu, Di Wang

Large language models (LLMs) have shown exceptional abilities for multiple different natural language processing tasks.

Multi-hop Question Answering under Temporal Knowledge Editing

no code implementations • 30 Mar 2024 • Keyuan Cheng, Gang Lin, Haoyang Fei, Yuxuan zhai, Lu Yu, Muhammad Asif Ali, Lijie Hu, Di Wang

Multi-hop question answering (MQA) under knowledge editing (KE) has garnered significant attention in the era of large language models.

Parallelized Midpoint Randomization for Langevin Monte Carlo

no code implementations • 22 Feb 2024 • Lu Yu, Arnak Dalalyan

We explore the sampling problem within the framework where parallel evaluations of the gradient of the log-density are feasible.

MONAL: Model Autophagy Analysis for Modeling Human-AI Interactions

no code implementations • 17 Feb 2024 • Shu Yang, Muhammad Asif Ali, Lu Yu, Lijie Hu, Di Wang

The increasing significance of large models and their multi-modal variants in societal information processing has ignited debates on social safety and ethics.

Professional Agents -- Evolving Large Language Models into Autonomous Experts with Human-Level Competencies

no code implementations • 6 Feb 2024 • Zhixuan Chu, Yan Wang, Feng Zhu, Lu Yu, Longfei Li, Jinjie Gu

The advent of large language models (LLMs) such as ChatGPT, PaLM, and GPT-4 has catalyzed remarkable advances in natural language processing, demonstrating human-like language fluency and reasoning capacities.

Hierarchical Prompts for Rehearsal-free Continual Learning

no code implementations • 21 Jan 2024 • Yukun Zuo, Hantao Yao, Lu Yu, Liansheng Zhuang, Changsheng Xu

Nonetheless, these learnable prompts tend to concentrate on the discriminatory knowledge of the current task while ignoring past task knowledge, leading to that learnable prompts still suffering from catastrophic forgetting.

Fine-Grained Knowledge Selection and Restoration for Non-Exemplar Class Incremental Learning

1 code implementation • 20 Dec 2023 • Jiang-Tian Zhai, Xialei Liu, Lu Yu, Ming-Ming Cheng

Considering this challenge, we propose a novel framework of fine-grained knowledge selection and restoration.

CACFNet: Cross-Modal Attention Cascaded Fusion Network for RGB-T Urban Scene Parsing

no code implementations • journal 2023 • WuJie Zhou, Shaohua Dong, Meixin Fang, Lu Yu

Color–thermal (RGB-T) urban scene parsing has recently attracted widespread interest.

Ranked #5 on

Thermal Image Segmentation

on PST900

Ranked #5 on

Thermal Image Segmentation

on PST900

VeRi3D: Generative Vertex-based Radiance Fields for 3D Controllable Human Image Synthesis

no code implementations • ICCV 2023 • Xinya Chen, Jiaxin Huang, Yanrui Bin, Lu Yu, Yiyi Liao

Unsupervised learning of 3D-aware generative adversarial networks has lately made much progress.

Edit-DiffNeRF: Editing 3D Neural Radiance Fields using 2D Diffusion Model

no code implementations • 15 Jun 2023 • Lu Yu, Wei Xiang, Kang Han

To address this challenge, we propose the Edit-DiffNeRF framework, which is composed of a frozen diffusion model, a proposed delta module to edit the latent semantic space of the diffusion model, and a NeRF.

Langevin Monte Carlo for strongly log-concave distributions: Randomized midpoint revisited

no code implementations • 14 Jun 2023 • Lu Yu, Avetik Karagulyan, Arnak Dalalyan

To provide a more thorough explanation of our method for establishing the computable upper bound, we conduct an analysis of the midpoint discretization for the vanilla Langevin process.

Camera-Incremental Object Re-Identification with Identity Knowledge Evolution

1 code implementation • 25 May 2023 • Hantao Yao, Lu Yu, Jifei Luo, Changsheng Xu

In this paper, we propose a novel Identity Knowledge Evolution (IKE) framework for CIOR, consisting of the Identity Knowledge Association (IKA), Identity Knowledge Distillation (IKD), and Identity Knowledge Update (IKU).

Quality-agnostic Image Captioning to Safely Assist People with Vision Impairment

no code implementations • 28 Apr 2023 • Lu Yu, Malvina Nikandrou, Jiali Jin, Verena Rieser

In this paper, we propose a quality-agnostic framework to improve the performance and robustness of image captioning models for visually impaired people.

Knowledge Distillation for Efficient Sequences of Training Runs

no code implementations • 11 Mar 2023 • Xingyu Liu, Alex Leonardi, Lu Yu, Chris Gilmer-Hill, Matthew Leavitt, Jonathan Frankle

We find that augmenting future runs with KD from previous runs dramatically reduces the time necessary to train these models, even taking into account the overhead of KD.

X-Pruner: eXplainable Pruning for Vision Transformers

1 code implementation • CVPR 2023 • Lu Yu, Wei Xiang

Recent studies have proposed to prune transformers in an unexplainable manner, which overlook the relationship between internal units of the model and the target class, thereby leading to inferior performance.

DCMT: A Direct Entire-Space Causal Multi-Task Framework for Post-Click Conversion Estimation

no code implementations • 13 Feb 2023 • Feng Zhu, Mingjie Zhong, Xinxing Yang, Longfei Li, Lu Yu, Tiehua Zhang, Jun Zhou, Chaochao Chen, Fei Wu, Guanfeng Liu, Yan Wang

In recommendation scenarios, there are two long-standing challenges, i. e., selection bias and data sparsity, which lead to a significant drop in prediction accuracy for both Click-Through Rate (CTR) and post-click Conversion Rate (CVR) tasks.

SteerNeRF: Accelerating NeRF Rendering via Smooth Viewpoint Trajectory

no code implementations • CVPR 2023 • Sicheng Li, Hao Li, Yue Wang, Yiyi Liao, Lu Yu

Neural Radiance Fields (NeRF) have demonstrated superior novel view synthesis performance but are slow at rendering.

Going for GOAL: A Resource for Grounded Football Commentaries

1 code implementation • 8 Nov 2022 • Alessandro Suglia, José Lopes, Emanuele Bastianelli, Andrea Vanzo, Shubham Agarwal, Malvina Nikandrou, Lu Yu, Ioannis Konstas, Verena Rieser

As the course of a game is unpredictable, so are commentaries, which makes them a unique resource to investigate dynamic language grounding.

GEBNet: Graph-Enhancement Branch Network for RGB-T Scene Parsing

no code implementations • journal 2022 • Shaohua Dong, WuJie Zhou, Xiaohong Qian, Lu Yu

RGB-T (red–green–blue and thermal) scene parsing has recently drawn considerable research attention.

Ranked #9 on

Thermal Image Segmentation

on PST900

Ranked #9 on

Thermal Image Segmentation

on PST900

Task Formulation Matters When Learning Continually: A Case Study in Visual Question Answering

no code implementations • 30 Sep 2022 • Mavina Nikandrou, Lu Yu, Alessandro Suglia, Ioannis Konstas, Verena Rieser

We first propose three plausible task formulations and demonstrate their impact on the performance of continual learning algorithms.

Few-shot News Recommendation via Cross-lingual Transfer

1 code implementation • 28 Jul 2022 • Taicheng Guo, Lu Yu, Basem Shihada, Xiangliang Zhang

Second, the user preference over these topics is transferable across different platforms.

eX-ViT: A Novel eXplainable Vision Transformer for Weakly Supervised Semantic Segmentation

no code implementations • 12 Jul 2022 • Lu Yu, Wei Xiang, Juan Fang, Yi-Ping Phoebe Chen, Lianhua Chi

To close these crucial gaps, we propose a novel vision transformer dubbed the eXplainable Vision Transformer (eX-ViT), an intrinsically interpretable transformer model that is able to jointly discover robust interpretable features and perform the prediction.

Adversarial Robustness of Visual Dialog

no code implementations • 6 Jul 2022 • Lu Yu, Verena Rieser

This study is the first to investigate the robustness of visually grounded dialog models towards textual attacks.

Q-LIC: Quantizing Learned Image Compression with Channel Splitting

no code implementations • 28 May 2022 • Heming Sun, Lu Yu, Jiro Katto

Learned image compression (LIC) has reached a comparable coding gain with traditional hand-crafted methods such as VVC intra.

MTANet: Multitask-Aware Network With Hierarchical Multimodal Fusion for RGB-T Urban Scene Understanding

no code implementations • journal 2022 • WuJie Zhou, Shaohua Dong, Jingsheng Lei, Lu Yu

To improve the fusion of multimodal features and the segmentation accuracy, we propose a multitask-aware network (MTANet) with hierarchical multimodal fusion (multiscale fusion strategy) for RGB-T urban scene understanding.

Ranked #10 on

Thermal Image Segmentation

on PST900

Ranked #10 on

Thermal Image Segmentation

on PST900

Mirror Descent Strikes Again: Optimal Stochastic Convex Optimization under Infinite Noise Variance

no code implementations • 23 Feb 2022 • Nuri Mert Vural, Lu Yu, Krishnakumar Balasubramanian, Stanislav Volgushev, Murat A. Erdogdu

We study stochastic convex optimization under infinite noise variance.

Spectral Clustering with Variance Information for Group Structure Estimation in Panel Data

no code implementations • 5 Jan 2022 • Lu Yu, Jiaying Gu, Stanislav Volgushev

Consider a panel data setting where repeated observations on individuals are available.

Continually Learning Self-Supervised Representations with Projected Functional Regularization

1 code implementation • 30 Dec 2021 • Alex Gomez-Villa, Bartlomiej Twardowski, Lu Yu, Andrew D. Bagdanov, Joost Van de Weijer

Recent self-supervised learning methods are able to learn high-quality image representations and are closing the gap with supervised approaches.

End-to-End Learned Image Compression with Quantized Weights and Activations

no code implementations • 17 Nov 2021 • Heming Sun, Lu Yu, Jiro Katto

To our best knowledge, this is the first work to give a complete analysis on the coding gain and the memory cost for a quantized LIC network, which validates the feasibility of the hardware implementation.

Distilling GANs with Style-Mixed Triplets for X2I Translation with Limited Data

no code implementations • ICLR 2022 • Yaxing Wang, Joost Van de Weijer, Lu Yu, Shangling Jui

Therefore, we investigate knowledge distillation to transfer knowledge from a high-quality unconditioned generative model (e. g., StyleGAN) to a conditioned synthetic image generation modules in a variety of systems.

A Simple and Debiased Sampling Method for Personalized Ranking

no code implementations • 29 Sep 2021 • Lu Yu, Shichao Pei, Chuxu Zhang, Xiangliang Zhang

Pairwise ranking models have been widely used to address various problems, such as recommendation.

Fully Neural Network Mode Based Intra Prediction of Variable Block Size

1 code implementation • 5 Aug 2021 • Heming Sun, Lu Yu, Jiro Katto

As far as we know, this is the first work to explore a fully NM based framework for intra prediction, and we reach a better coding gain with a lower complexity compared with the previous work.

Subjective evaluation of traditional and learning-based image coding methods

no code implementations • 28 Jul 2021 • Zhigao Fang, JiaQi Zhang, Lu Yu, Yin Zhao

Additionally, we utilize some typical and frequently used objective quality metrics to evaluate the coding methods in the experiment as comparison.

Self-Training for Class-Incremental Semantic Segmentation

no code implementations • 6 Dec 2020 • Lu Yu, Xialei Liu, Joost Van de Weijer

In class-incremental semantic segmentation, we have no access to the labeled data of previous tasks.

Class-Incremental Semantic Segmentation

Class-Incremental Semantic Segmentation

Incremental Learning

Incremental Learning

DeepI2I: Enabling Deep Hierarchical Image-to-Image Translation by Transferring from GANs

1 code implementation • NeurIPS 2020 • Yaxing Wang, Lu Yu, Joost Van de Weijer

To enable the training of deep I2I models on small datasets, we propose a novel transfer learning method, that transfers knowledge from pre-trained GANs.

Few-Shot Multi-Hop Relation Reasoning over Knowledge Bases

no code implementations • Findings of the Association for Computational Linguistics 2020 • Chuxu Zhang, Lu Yu, Mandana Saebi, Meng Jiang, Nitesh Chawla

Multi-hop relation reasoning over knowledge base is to generate effective and interpretable relation prediction through reasoning paths.

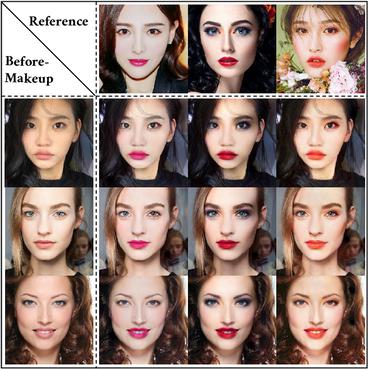

Compressing Facial Makeup Transfer Networks by Collaborative Distillation and Kernel Decomposition

1 code implementation • 16 Sep 2020 • Bianjiang Yang, Zi Hui, Haoji Hu, Xinyi Hu, Lu Yu

Although the facial makeup transfer network has achieved high-quality performance in generating perceptually pleasing makeup images, its capability is still restricted by the massive computation and storage of the network architecture.

SAIL: Self-Augmented Graph Contrastive Learning

no code implementations • 2 Sep 2020 • Lu Yu, Shichao Pei, Lizhong Ding, Jun Zhou, Longfei Li, Chuxu Zhang, Xiangliang Zhang

This paper studies learning node representations with graph neural networks (GNNs) for unsupervised scenario.

An Analysis of Constant Step Size SGD in the Non-convex Regime: Asymptotic Normality and Bias

no code implementations • NeurIPS 2021 • Lu Yu, Krishnakumar Balasubramanian, Stanislav Volgushev, Murat A. Erdogdu

Structured non-convex learning problems, for which critical points have favorable statistical properties, arise frequently in statistical machine learning.

Addressing Class-Imbalance Problem in Personalized Ranking

no code implementations • 19 May 2020 • Lu Yu, Shichao Pei, Chuxu Zhang, Shangsong Liang, Xiao Bai, Nitesh Chawla, Xiangliang Zhang

Pairwise ranking models have been widely used to address recommendation problems.

Semantic Drift Compensation for Class-Incremental Learning

2 code implementations • CVPR 2020 • Lu Yu, Bartłomiej Twardowski, Xialei Liu, Luis Herranz, Kai Wang, Yongmei Cheng, Shangling Jui, Joost Van de Weijer

The vast majority of methods have studied this scenario for classification networks, where for each new task the classification layer of the network must be augmented with additional weights to make room for the newly added classes.

False Discovery Rates in Biological Networks

1 code implementation • 8 Jul 2019 • Lu Yu, Tobias Kaufmann, Johannes Lederer

The increasing availability of data has generated unprecedented prospects for network analyses in many biological fields, such as neuroscience (e. g., brain networks), genomics (e. g., gene-gene interaction networks), and ecology (e. g., species interaction networks).

Methodology Quantitative Methods Applications

Learning Metrics from Teachers: Compact Networks for Image Embedding

1 code implementation • CVPR 2019 • Lu Yu, Vacit Oguz Yazici, Xialei Liu, Joost Van de Weijer, Yongmei Cheng, Arnau Ramisa

In this paper, we propose to use network distillation to efficiently compute image embeddings with small networks.

Three Dimensional Convolutional Neural Network Pruning with Regularization-Based Method

no code implementations • NIPS Workshop CDNNRIA 2018 • Yuxin Zhang, Huan Wang, Yang Luo, Lu Yu, Haoji Hu, Hangguan Shan, Tony Q. S. Quek

Despite enjoying extensive applications in video analysis, three-dimensional convolutional neural networks (3D CNNs)are restricted by their massive computation and storage consumption.

Weakly Supervised Domain-Specific Color Naming Based on Attention

1 code implementation • 11 May 2018 • Lu Yu, Yongmei Cheng, Joost Van de Weijer

The attention branch is used to modulate the pixel-wise color naming predictions of the network.

Oracle Inequalities for High-dimensional Prediction

no code implementations • 1 Aug 2016 • Johannes Lederer, Lu Yu, Irina Gaynanova

The abundance of high-dimensional data in the modern sciences has generated tremendous interest in penalized estimators such as the lasso, scaled lasso, square-root lasso, elastic net, and many others.