Search Results for author: Mikaela Angelina Uy

Found 12 papers, 6 papers with code

ProvNeRF: Modeling per Point Provenance in NeRFs as a Stochastic Process

no code implementations • 16 Jan 2024 • Kiyohiro Nakayama, Mikaela Angelina Uy, Yang You, Ke Li, Leonidas Guibas

We introduce ProvNeRF, a model that enriches a traditional NeRF representation by incorporating per-point provenance, modeling likely source locations for each point.

NeRF Revisited: Fixing Quadrature Instability in Volume Rendering

no code implementations • NeurIPS 2023 • Mikaela Angelina Uy, Kiyohiro Nakayama, Guandao Yang, Rahul Krishna Thomas, Leonidas Guibas, Ke Li

Volume rendering requires evaluating an integral along each ray, which is numerically approximated with a finite sum that corresponds to the exact integral along the ray under piecewise constant volume density.
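The finite sum this snippet refers to is the standard NeRF quadrature: C ≈ Σ_i T_i (1 − exp(−σ_i δ_i)) c_i, where T_i = exp(−Σ_{j<i} σ_j δ_j) and δ_i is the length of interval i. Below is a minimal sketch of that standard formulation, assuming one ray with sorted sample boundaries. It is not the paper's proposed fix. The helper names `quadrature_weights` and `render_color` are hypothetical and chosen for illustration.

```python
import numpy as np

def quadrature_weights(sigma, t):
    """Standard volume rendering weights along one ray (hypothetical helper).

    Assumes the density is constant (sigma[i]) on each interval [t[i], t[i+1]).
    Under that assumption these weights integrate the ray exactly.
    """
    sigma = np.asarray(sigma, dtype=float)
    t = np.asarray(t, dtype=float)
    delta = np.diff(t)                 # interval lengths delta_i
    tau = sigma * delta                # optical thickness sigma_i * delta_i
    # Transmittance T_i = exp(-sum_{j<i} tau_j): an exclusive cumulative sum.
    trans = np.exp(-np.concatenate(([0.0], np.cumsum(tau)[:-1])))
    alpha = 1.0 - np.exp(-tau)         # probability the ray terminates in interval i
    return trans * alpha               # w_i = T_i * (1 - exp(-sigma_i * delta_i))

def render_color(sigma, t, colors):
    """Composite per-interval colors (shape [N, 3]) with the weights above."""
    return quadrature_weights(sigma, t) @ np.asarray(colors, dtype=float)
```

Because each weight equals T_i − T_{i+1}, the weights telescope and sum to 1 − exp(−Σ_i σ_i δ_i), the total opacity of the ray.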

DiffFacto: Controllable Part-Based 3D Point Cloud Generation with Cross Diffusion

no code implementations • ICCV 2023 • Kiyohiro Nakayama, Mikaela Angelina Uy, Jiahui Huang, Shi-Min Hu, Ke Li, Leonidas J Guibas

We propose a factorization that models independent part style and part configuration distributions, and we present a novel cross-diffusion network that enables us to generate coherent and plausible shapes under this factorization.

SCADE: NeRFs from Space Carving with Ambiguity-Aware Depth Estimates

no code implementations • CVPR 2023 • Mikaela Angelina Uy, Ricardo Martin-Brualla, Leonidas Guibas, Ke Li

We introduce SCADE, a novel technique that improves NeRF reconstruction quality on sparse, unconstrained input views for in-the-wild indoor scenes.

PartNeRF: Generating Part-Aware Editable 3D Shapes without 3D Supervision

no code implementations • 16 Mar 2023 • Konstantinos Tertikas, Despoina Paschalidou, Boxiao Pan, Jeong Joon Park, Mikaela Angelina Uy, Ioannis Emiris, Yannis Avrithis, Leonidas Guibas

Evaluations on various ShapeNet categories demonstrate the ability of our model to generate editable 3D objects of improved fidelity, compared to previous part-based generative approaches that require 3D supervision or models relying on NeRFs.

Generating Part-Aware Editable 3D Shapes Without 3D Supervision

1 code implementation • CVPR 2023 • Konstantinos Tertikas, Despoina Paschalidou, Boxiao Pan, Jeong Joon Park, Mikaela Angelina Uy, Ioannis Emiris, Yannis Avrithis, Leonidas Guibas

Evaluations on various ShapeNet categories demonstrate the ability of our model to generate editable 3D objects of improved fidelity, compared to previous part-based generative approaches that require 3D supervision or models relying on NeRFs.

Point2Cyl: Reverse Engineering 3D Objects from Point Clouds to Extrusion Cylinders

no code implementations • CVPR 2022 • Mikaela Angelina Uy, Yen-Yu Chang, Minhyuk Sung, Purvi Goel, Joseph Lambourne, Tolga Birdal, Leonidas Guibas

We propose Point2Cyl, a supervised network that transforms a raw 3D point cloud into a set of extrusion cylinders.

Joint Learning of 3D Shape Retrieval and Deformation

1 code implementation • CVPR 2021 • Mikaela Angelina Uy, Vladimir G. Kim, Minhyuk Sung, Noam Aigerman, Siddhartha Chaudhuri, Leonidas Guibas

We use the embedding space to guide the selection of shape pairs used to train the deformation module, so that the module invests its capacity in learning deformations between meaningful shape pairs.

Deformation-Aware 3D Model Embedding and Retrieval

1 code implementation • ECCV 2020 • Mikaela Angelina Uy, Jingwei Huang, Minhyuk Sung, Tolga Birdal, Leonidas Guibas

We introduce a new problem of retrieving 3D models that are deformable to a given query shape and present a novel deep deformation-aware embedding to solve this retrieval task.

LCD: Learned Cross-Domain Descriptors for 2D-3D Matching

1 code implementation • 21 Nov 2019 • Quang-Hieu Pham, Mikaela Angelina Uy, Binh-Son Hua, Duc Thanh Nguyen, Gemma Roig, Sai-Kit Yeung

In this work, we present a novel method to learn a local cross-domain descriptor for 2D image and 3D point cloud matching.

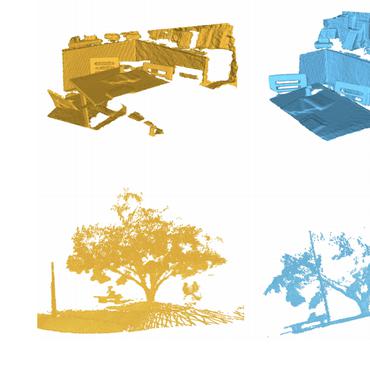

Revisiting Point Cloud Classification: A New Benchmark Dataset and Classification Model on Real-World Data

1 code implementation • ICCV 2019 • Mikaela Angelina Uy, Quang-Hieu Pham, Binh-Son Hua, Duc Thanh Nguyen, Sai-Kit Yeung

From our comprehensive benchmark, we show that our dataset poses great challenges to existing point cloud classification techniques as objects from real-world scans are often cluttered with background and/or are partial due to occlusions.

PointNetVLAD: Deep Point Cloud Based Retrieval for Large-Scale Place Recognition

5 code implementations • CVPR 2018 • Mikaela Angelina Uy, Gim Hee Lee

Point cloud based retrieval for place recognition has remained an unsolved problem, largely due to the difficulty of extracting local feature descriptors from a point cloud that can subsequently be encoded into a global descriptor for the retrieval task.

Ranked #7 on 3D Place Recognition on CS-Campus3D