Search Results for author: Na Liu

Found 10 papers, 1 paper with code

Dial-insight: Fine-tuning Large Language Models with High-Quality Domain-Specific Data Preventing Capability Collapse

no code implementations • 14 Mar 2024 • Jianwei Sun, Chaoyang Mei, Linlin Wei, Kaiyu Zheng, Na Liu, Ming Cui, Tianyi Li

The efficacy of large language models (LLMs) is heavily dependent on the quality of the underlying data, particularly within specialized domains.

A Physics-driven GraphSAGE Method for Physical Process Simulations Described by Partial Differential Equations

no code implementations • 13 Mar 2024 • Hang Hu, Sidi Wu, Guoxiong Cai, Na Liu

In this work, a physics-driven GraphSAGE approach (PD-GraphSAGE) based on the Galerkin method and piecewise polynomial nodal basis functions is presented to solve computational problems governed by irregular PDEs and to develop parametric PDE surrogate models.

CasCast: Skillful High-resolution Precipitation Nowcasting via Cascaded Modelling

no code implementations • 6 Feb 2024 • Junchao Gong, Lei Bai, Peng Ye, Wanghan Xu, Na Liu, Jianhua Dai, Xiaokang Yang, Wanli Ouyang

Precipitation nowcasting based on radar data plays a crucial role in extreme weather prediction and has broad implications for disaster management.

From LLM to Conversational Agent: A Memory Enhanced Architecture with Fine-Tuning of Large Language Models

no code implementations • 5 Jan 2024 • Na Liu, Liangyu Chen, Xiaoyu Tian, Wei Zou, Kaijiang Chen, Ming Cui

This paper introduces RAISE (Reasoning and Acting through Scratchpad and Examples), an advanced architecture enhancing the integration of Large Language Models (LLMs) like GPT-4 into conversational agents.

DUMA: a Dual-Mind Conversational Agent with Fast and Slow Thinking

no code implementations • 27 Oct 2023 • Xiaoyu Tian, Liangyu Chen, Na Liu, Yaxuan Liu, Wei Zou, Kaijiang Chen, Ming Cui

The fast thinking model serves as the primary interface for external interactions and initial response generation, evaluating the necessity for engaging the slow thinking model based on the complexity of the complete response.

A Survey on Hyperspectral Image Restoration: From the View of Low-Rank Tensor Approximation

no code implementations • 18 May 2022 • Na Liu, Wei Li, Yinjian Wang, Rao Tao, Qian Du, Jocelyn Chanussot

The ability of capturing fine spectral discriminative information enables hyperspectral images (HSIs) to observe, detect and identify objects with subtle spectral discrepancy.

Detecting Textual Adversarial Examples Based on Distributional Characteristics of Data Representations

1 code implementation • RepL4NLP (ACL) 2022 • Na Liu, Mark Dras, Wei Emma Zhang

Although deep neural networks have achieved state-of-the-art performance in various machine learning tasks, adversarial examples, constructed by adding small non-random perturbations to correctly classified inputs, successfully fool highly expressive deep classifiers into incorrect predictions.

Metasurface-Enabled On-Chip Multiplexed Diffractive Neural Networks in the Visible

no code implementations • 13 Jul 2021 • Xuhao Luo, Yueqiang Hu, Xin Li, Xiangnian Ou, Jiajie Lai, Na Liu, Huigao Duan

Replacing electrons with photons is a compelling route towards light-speed, highly parallel, and low-power artificial intelligence computing.

Incorporating Inner-word and Out-word Features for Mongolian Morphological Segmentation

no code implementations • COLING 2020 • Na Liu, Xiangdong Su, Haoran Zhang, Guanglai Gao, Feilong Bao

The inner-word encoder uses the self-attention mechanisms to capture the inner-word features of the target word.

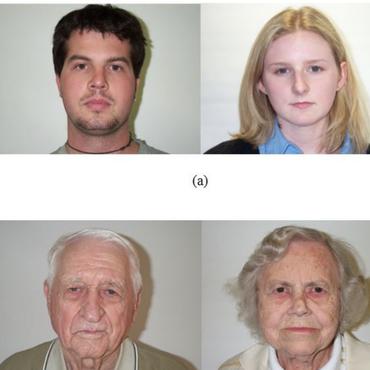

Fine-Grained Age Estimation in the wild with Attention LSTM Networks

no code implementations • 26 May 2018 • Ke Zhang, Na Liu, Xingfang Yuan, Xinyao Guo, Ce Gao, Zhenbing Zhao, Zhanyu Ma

Then, we fine-tune the ResNets or the RoR on the target age datasets to extract the global features of face images.

Ranked #4 on Age And Gender Classification on Adience Age (using extra training data)