Search Results for author: Ruihao Gong

Found 28 papers, 17 papers with code

2023 Low-Power Computer Vision Challenge (LPCVC) Summary

no code implementations • 11 Mar 2024 • Leo Chen, Benjamin Boardley, Ping Hu, Yiru Wang, Yifan Pu, Xin Jin, Yongqiang Yao, Ruihao Gong, Bo Li, Gao Huang, Xianglong Liu, Zifu Wan, Xinwang Chen, Ning Liu, Ziyi Zhang, Dongping Liu, Ruijie Shan, Zhengping Che, Fachao Zhang, Xiaofeng Mou, Jian Tang, Maxim Chuprov, Ivan Malofeev, Alexander Goncharenko, Andrey Shcherbin, Arseny Yanchenko, Sergey Alyamkin, Xiao Hu, George K. Thiruvathukal, Yung Hsiang Lu

This article describes the 2023 IEEE Low-Power Computer Vision Challenge (LPCVC).

ProPD: Dynamic Token Tree Pruning and Generation for LLM Parallel Decoding

no code implementations • 21 Feb 2024 • Shuzhang Zhong, Zebin Yang, Meng Li, Ruihao Gong, Runsheng Wang, Ru Huang

Additionally, it introduces a dynamic token tree generation algorithm to balance the computation and parallelism of the verification phase in real time and maximize the overall efficiency across different batch sizes, sequence lengths, and tasks.

TFMQ-DM: Temporal Feature Maintenance Quantization for Diffusion Models

1 code implementation • 27 Nov 2023 • Yushi Huang, Ruihao Gong, Jing Liu, Tianlong Chen, Xianglong Liu

Remarkably, our quantization approach, for the first time, achieves model performance nearly on par with the full-precision model under 4-bit weight quantization.

QLLM: Accurate and Efficient Low-Bitwidth Quantization for Large Language Models

2 code implementations • 12 Oct 2023 • Jing Liu, Ruihao Gong, Xiuying Wei, Zhiwei Dong, Jianfei Cai, Bohan Zhuang

Additionally, an adaptive strategy is designed to autonomously determine the optimal number of sub-channels for channel disassembly.

Lossy and Lossless (L$^2$) Post-training Model Size Compression

1 code implementation • 8 Aug 2023 • Yumeng Shi, Shihao Bai, Xiuying Wei, Ruihao Gong, Jianlei Yang

Then, a dedicated differentiable counter is introduced to guide the optimization of lossy compression to arrive at a more suitable point for later lossless compression.

SysNoise: Exploring and Benchmarking Training-Deployment System Inconsistency

no code implementations • 1 Jul 2023 • Yan Wang, Yuhang Li, Ruihao Gong, Aishan Liu, Yanfei Wang, Jian Hu, Yongqiang Yao, Yunchen Zhang, Tianzi Xiao, Fengwei Yu, Xianglong Liu

Extensive studies have shown that deep learning models are vulnerable to adversarial and natural noises, yet little is known about model robustness on noises caused by different system implementations.

Outlier Suppression+: Accurate quantization of large language models by equivalent and optimal shifting and scaling

1 code implementation • 18 Apr 2023 • Xiuying Wei, Yunchen Zhang, Yuhang Li, Xiangguo Zhang, Ruihao Gong, Jinyang Guo, Xianglong Liu

The channel-wise shifting aligns the center of each channel for removal of outlier asymmetry.

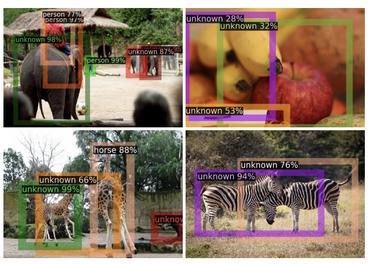

Annealing-Based Label-Transfer Learning for Open World Object Detection

1 code implementation • CVPR 2023 • Yuqing Ma, Hainan Li, Zhange Zhang, Jinyang Guo, Shanghang Zhang, Ruihao Gong, Xianglong Liu

To the best of our knowledge, this is the first OWOD work without manual unknown selection.

Lossy and Lossless (L2) Post-training Model Size Compression

1 code implementation • ICCV 2023 • Yumeng Shi, Shihao Bai, Xiuying Wei, Ruihao Gong, Jianlei Yang

Then, a dedicated differentiable counter is introduced to guide the optimization of lossy compression to arrive at a more suitable point for later lossless compression.

Exploring the Relationship Between Architectural Design and Adversarially Robust Generalization

no code implementations • CVPR 2023 • Aishan Liu, Shiyu Tang, Siyuan Liang, Ruihao Gong, Boxi Wu, Xianglong Liu, DaCheng Tao

In particular, we comprehensively evaluated 20 most representative adversarially trained architectures on ImageNette and CIFAR-10 datasets towards multiple $\ell_p$-norm adversarial attacks.

Exploring the Relationship between Architecture and Adversarially Robust Generalization

no code implementations • 28 Sep 2022 • Aishan Liu, Shiyu Tang, Siyuan Liang, Ruihao Gong, Boxi Wu, Xianglong Liu, DaCheng Tao

In particular, we comprehensively evaluated 20 most representative adversarially trained architectures on ImageNette and CIFAR-10 datasets towards multiple $\ell_p$-norm adversarial attacks.

Outlier Suppression: Pushing the Limit of Low-bit Transformer Language Models

1 code implementation • 27 Sep 2022 • Xiuying Wei, Yunchen Zhang, Xiangguo Zhang, Ruihao Gong, Shanghang Zhang, Qi Zhang, Fengwei Yu, Xianglong Liu

With the trends of large NLP models, the increasing memory and computation costs hinder their efficient deployment on resource-limited devices.

QDrop: Randomly Dropping Quantization for Extremely Low-bit Post-Training Quantization

2 code implementations • 11 Mar 2022 • Xiuying Wei, Ruihao Gong, Yuhang Li, Xianglong Liu, Fengwei Yu

With QDROP, the limit of PTQ is pushed to the 2-bit activation for the first time and the accuracy boost can be up to 51.49%.

MQBench: Towards Reproducible and Deployable Model Quantization Benchmark

1 code implementation • 5 Nov 2021 • Yuhang Li, Mingzhu Shen, Jian Ma, Yan Ren, Mingxin Zhao, Qi Zhang, Ruihao Gong, Fengwei Yu, Junjie Yan

Surprisingly, no existing algorithm wins every challenge in MQBench, and we hope this work could inspire future research directions.

Distribution-sensitive Information Retention for Accurate Binary Neural Network

no code implementations • 25 Sep 2021 • Haotong Qin, Xiangguo Zhang, Ruihao Gong, Yifu Ding, Yi Xu, Xianglong Liu

We present a novel Distribution-sensitive Information Retention Network (DIR-Net) that retains the information in the forward and backward propagation by improving internal propagation and introducing external representations.

RobustART: Benchmarking Robustness on Architecture Design and Training Techniques

1 code implementation • 11 Sep 2021 • Shiyu Tang, Ruihao Gong, Yan Wang, Aishan Liu, Jiakai Wang, Xinyun Chen, Fengwei Yu, Xianglong Liu, Dawn Song, Alan Yuille, Philip H. S. Torr, DaCheng Tao

Thus, we propose RobustART, the first comprehensive Robustness investigation benchmark on ImageNet regarding ARchitecture design (49 human-designed off-the-shelf architectures and 1200+ networks from neural architecture search) and Training techniques (10+ techniques, e.g., data augmentation) towards diverse noises (adversarial, natural, and system noises).

Real World Robustness from Systematic Noise

no code implementations • 2 Sep 2021 • Yan Wang, Yuhang Li, Ruihao Gong

Systematic error, which is not determined by chance, often refers to the inaccuracy (involving either the observation or measurement process) inherent to a system.

A Free Lunch From ANN: Towards Efficient, Accurate Spiking Neural Networks Calibration

1 code implementation • 13 Jun 2021 • Yuhang Li, Shikuang Deng, Xin Dong, Ruihao Gong, Shi Gu

Moreover, our calibration algorithm can produce SNN with state-of-the-art architecture on the large-scale ImageNet dataset, including MobileNet and RegNet.

Diversifying Sample Generation for Accurate Data-Free Quantization

no code implementations • CVPR 2021 • Xiangguo Zhang, Haotong Qin, Yifu Ding, Ruihao Gong, Qinghua Yan, Renshuai Tao, Yuhang Li, Fengwei Yu, Xianglong Liu

Unfortunately, we find that in practice, the synthetic data identically constrained by BN statistics suffers serious homogenization at both distribution level and sample level and further causes a significant performance drop of the quantized model.

BRECQ: Pushing the Limit of Post-Training Quantization by Block Reconstruction

3 code implementations • ICLR 2021 • Yuhang Li, Ruihao Gong, Xu Tan, Yang Yang, Peng Hu, Qi Zhang, Fengwei Yu, Wei Wang, Shi Gu

To further employ the power of quantization, the mixed precision technique is incorporated in our framework by approximating the inter-layer and intra-layer sensitivity.

MixMix: All You Need for Data-Free Compression Are Feature and Data Mixing

no code implementations • ICCV 2021 • Yuhang Li, Feng Zhu, Ruihao Gong, Mingzhu Shen, Xin Dong, Fengwei Yu, Shaoqing Lu, Shi Gu

However, the inversion process only utilizes biased feature statistics stored in one model and is from low-dimension to high-dimension.

Once Quantization-Aware Training: High Performance Extremely Low-bit Architecture Search

1 code implementation • ICCV 2021 • Mingzhu Shen, Feng Liang, Ruihao Gong, Yuhang Li, Chuming Li, Chen Lin, Fengwei Yu, Junjie Yan, Wanli Ouyang

Therefore, we propose to combine Network Architecture Search methods with quantization to enjoy the merits of the two sides.

Binary Neural Networks: A Survey

2 code implementations • 31 Mar 2020 • Haotong Qin, Ruihao Gong, Xianglong Liu, Xiao Bai, Jingkuan Song, Nicu Sebe

The binary neural network, largely saving the storage and computation, serves as a promising technique for deploying deep models on resource-limited devices.

Efficient Bitwidth Search for Practical Mixed Precision Neural Network

no code implementations • 17 Mar 2020 • Yuhang Li, Wei Wang, Haoli Bai, Ruihao Gong, Xin Dong, Fengwei Yu

Network quantization has rapidly become one of the most widely used methods to compress and accelerate deep neural networks.

Towards Unified INT8 Training for Convolutional Neural Network

no code implementations • CVPR 2020 • Feng Zhu, Ruihao Gong, Fengwei Yu, Xianglong Liu, Yanfei Wang, Zhelong Li, Xiuqi Yang, Junjie Yan

In this paper, we attempt to build a unified 8-bit (INT8) training framework for common convolutional neural networks from the aspects of both accuracy and speed.

Balanced Binary Neural Networks with Gated Residual

1 code implementation • 26 Sep 2019 • Mingzhu Shen, Xianglong Liu, Ruihao Gong, Kai Han

In this paper, we attempt to maintain the information propagated in the forward process and propose Balanced Binary Neural Networks with Gated Residual (BBG for short).

Ranked #969 on Image Classification on ImageNet

Forward and Backward Information Retention for Accurate Binary Neural Networks

2 code implementations • CVPR 2020 • Haotong Qin, Ruihao Gong, Xianglong Liu, Mingzhu Shen, Ziran Wei, Fengwei Yu, Jingkuan Song

Our empirical study indicates that the quantization brings information loss in both forward and backward propagation, which is the bottleneck of training accurate binary neural networks.

Differentiable Soft Quantization: Bridging Full-Precision and Low-Bit Neural Networks

2 code implementations • ICCV 2019 • Ruihao Gong, Xianglong Liu, Shenghu Jiang, Tianxiang Li, Peng Hu, Jiazhen Lin, Fengwei Yu, Junjie Yan

Hardware-friendly network quantization (e.g., binary/uniform quantization) can efficiently accelerate the inference and meanwhile reduce memory consumption of the deep neural networks, which is crucial for model deployment on resource-limited devices like mobile phones.