Search Results for author: Taesup Moon

Found 38 papers, 16 papers with code

Reset & Distill: A Recipe for Overcoming Negative Transfer in Continual Reinforcement Learning

no code implementations • 8 Mar 2024 • Hongjoon Ahn, Jinu Hyeon, Youngmin Oh, Bosun Hwang, Taesup Moon

We argue that one of the main obstacles for developing effective Continual Reinforcement Learning (CRL) algorithms is the negative transfer issue occurring when the new task to learn arrives.

Converting and Smoothing False Negatives for Vision-Language Pre-training

no code implementations • 11 Dec 2023 • Jaeseok Byun, Dohoon Kim, Taesup Moon

We consider the critical issue of false negatives in Vision-Language Pre-training (VLP), a challenge that arises from the inherent many-to-many correspondence of image-text pairs in large-scale web-crawled datasets.

TLDR: Text Based Last-layer Retraining for Debiasing Image Classifiers

1 code implementation • 30 Nov 2023 • Juhyeon Park, Seokhyeon Jeong, Taesup Moon

Recently, Last Layer Retraining (LLR) with group-balanced datasets is known to be efficient in mitigating the spurious correlation of classifiers.

SwiFT: Swin 4D fMRI Transformer

1 code implementation • NeurIPS 2023 • Peter Yongho Kim, Junbeom Kwon, Sunghwan Joo, Sangyoon Bae, DongGyu Lee, Yoonho Jung, Shinjae Yoo, Jiook Cha, Taesup Moon

To address this challenge, we present SwiFT (Swin 4D fMRI Transformer), a Swin Transformer architecture that can learn brain dynamics directly from fMRI volumes in a memory and computation-efficient manner.

Sy-CON: Symmetric Contrastive Loss for Continual Self-Supervised Representation Learning

no code implementations • 8 Jun 2023 • Sungmin Cha, Taesup Moon

We first argue that the conventional loss form of continual learning, which consists of a single task-specific loss (for plasticity) and a regularizer (for stability), may not be ideal for contrastive loss based CSSL, which focuses on representation learning.

Continual Learning in the Presence of Spurious Correlation

no code implementations • 21 Mar 2023 • DongGyu Lee, Sangwon Jung, Taesup Moon

Specifically, we first show through two-task CL experiments that standard CL methods, which are unaware of dataset bias, can transfer biases from one task to another, both forward and backward, and this transfer is exacerbated depending on whether the CL methods focus on the stability or the plasticity.

Re-weighting Based Group Fairness Regularization via Classwise Robust Optimization

no code implementations • 1 Mar 2023 • Sangwon Jung, TaeEon Park, Sanghyuk Chun, Taesup Moon

Many existing group fairness-aware training methods aim to achieve the group fairness by either re-weighting underrepresented groups based on certain rules or using weakly approximated surrogates for the fairness metrics in the objective as regularization terms.

Learning to Unlearn: Instance-wise Unlearning for Pre-trained Classifiers

no code implementations • 27 Jan 2023 • Sungmin Cha, Sungjun Cho, Dasol Hwang, Honglak Lee, Taesup Moon, Moontae Lee

Since the recent advent of regulations for data protection (e.g., the General Data Protection Regulation), there has been increasing demand for deleting information learned from sensitive data in pre-trained models without retraining from scratch.

Towards More Robust Interpretation via Local Gradient Alignment

1 code implementation • 29 Nov 2022 • Sunghwan Joo, Seokhyeon Jeong, Juyeon Heo, Adrian Weller, Taesup Moon

However, the lack of considering the normalization of the attributions, which is essential in their visualizations, has been an obstacle to understanding and improving the robustness of feature attribution methods.

GRIT-VLP: Grouped Mini-batch Sampling for Efficient Vision and Language Pre-training

1 code implementation • 8 Aug 2022 • Jaeseok Byun, Taebaek Hwang, Jianlong Fu, Taesup Moon

In contrast to the mainstream VLP methods, we highlight that two routinely applied steps during pre-training have crucial impact on the performance of the pre-trained model: in-batch hard negative sampling for image-text matching (ITM) and assigning the large masking probability for the masked language modeling (MLM).

Descent Steps of a Relation-Aware Energy Produce Heterogeneous Graph Neural Networks

1 code implementation • 22 Jun 2022 • Hongjoon Ahn, Yongyi Yang, Quan Gan, Taesup Moon, David Wipf

Moreover, the complexity of this trade-off is compounded in the heterogeneous graph case due to the disparate heterophily relationships between nodes of different types.

Towards More Objective Evaluation of Class Incremental Learning: Representation Learning Perspective

no code implementations • 16 Jun 2022 • Sungmin Cha, Jihwan Kwak, Dongsub Shim, Hyunwoo Kim, Moontae Lee, Honglak Lee, Taesup Moon

While the common method for evaluating CIL algorithms is based on average test accuracy for all learned classes, we argue that maximizing accuracy alone does not necessarily lead to effective CIL algorithms.

Rebalancing Batch Normalization for Exemplar-based Class-Incremental Learning

no code implementations • CVPR 2023 • Sungmin Cha, Sungjun Cho, Dasol Hwang, Sunwon Hong, Moontae Lee, Taesup Moon

The main reason for the ineffectiveness of their method lies in not fully addressing the data imbalance issue, especially in computing the gradients for learning the affine transformation parameters of BN.

Learning Fair Classifiers with Partially Annotated Group Labels

1 code implementation • CVPR 2022 • Sangwon Jung, Sanghyuk Chun, Taesup Moon

To address this problem, we propose a simple Confidence-based Group Label assignment (CGL) strategy that is readily applicable to any fairness-aware learning method.

Supervised Neural Discrete Universal Denoiser for Adaptive Denoising

no code implementations • 24 Nov 2021 • Sungmin Cha, Seonwoo Min, Sungroh Yoon, Taesup Moon

Namely, we make the supervised pre-training of Neural DUDE compatible with the adaptive fine-tuning of the parameters based on the given noisy data subject to denoising.

Observations on K-image Expansion of Image-Mixing Augmentation for Classification

no code implementations • 8 Oct 2021 • JoonHyun Jeong, Sungmin Cha, Youngjoon Yoo, Sangdoo Yun, Taesup Moon, Jongwon Choi

Image-mixing augmentations (e.g., Mixup and CutMix), which typically involve mixing two images, have become the de-facto training techniques for image classification.

NCIS: Neural Contextual Iterative Smoothing for Purifying Adversarial Perturbations

no code implementations • ICML Workshop AML 2021 • Sungmin Cha, Naeun Ko, Youngjoon Yoo, Taesup Moon

We propose a novel and effective purification based adversarial defense method against pre-processor blind white- and black-box attacks.

SSUL: Semantic Segmentation with Unknown Label for Exemplar-based Class-Incremental Learning

1 code implementation • NeurIPS 2021 • Sungmin Cha, Beomyoung Kim, Youngjoon Yoo, Taesup Moon

While the recent CISS algorithms utilize variants of the knowledge distillation (KD) technique to tackle the problem, they failed to fully address the critical challenges in CISS causing the catastrophic forgetting; the semantic drift of the background class and the multi-label prediction issue.

Ranked #1 on Disjoint 15-5 on PASCAL VOC 2012

Fair Feature Distillation for Visual Recognition

no code implementations • CVPR 2021 • Sangwon Jung, DongGyu Lee, TaeEon Park, Taesup Moon

Fairness is becoming an increasingly crucial issue for computer vision, especially in the human-related decision systems.

FBI-Denoiser: Fast Blind Image Denoiser for Poisson-Gaussian Noise

1 code implementation • CVPR 2021 • Jaeseok Byun, Sungmin Cha, Taesup Moon

To that end, we propose Fast Blind Image Denoiser (FBI-Denoiser) for Poisson-Gaussian noise, which consists of two neural network models; 1) PGE-Net that estimates Poisson-Gaussian noise parameters 2000 times faster than the conventional methods and 2) FBI-Net that realizes a much more efficient BSN for pixelwise affine denoiser in terms of the number of parameters and inference speed.

CPR: Classifier-Projection Regularization for Continual Learning

1 code implementation • ICLR 2021 • Sungmin Cha, Hsiang Hsu, Taebaek Hwang, Flavio P. Calmon, Taesup Moon

Inspired by both recent results on neural networks with wide local minima and information theory, CPR adds an additional regularization term that maximizes the entropy of a classifier's output probability.

SS-IL: Separated Softmax for Incremental Learning

no code implementations • ICCV 2021 • Hongjoon Ahn, Jihwan Kwak, Subin Lim, Hyeonsu Bang, Hyojun Kim, Taesup Moon

To that end, we analyze that computing the softmax probabilities by combining the output scores for all old and new classes could be the main cause of the bias.

Continual Learning with Node-Importance based Adaptive Group Sparse Regularization

no code implementations • NeurIPS 2020 • Sangwon Jung, Hongjoon Ahn, Sungmin Cha, Taesup Moon

We propose a novel regularization-based continual learning method, dubbed as Adaptive Group Sparsity based Continual Learning (AGS-CL), using two group sparsity-based penalties.

Unsupervised Neural Universal Denoiser for Finite-Input General-Output Noisy Channel

2 code implementations • 5 Mar 2020 • Tae-Eon Park, Taesup Moon

We devise a novel neural network-based universal denoiser for the finite-input, general-output (FIGO) channel.

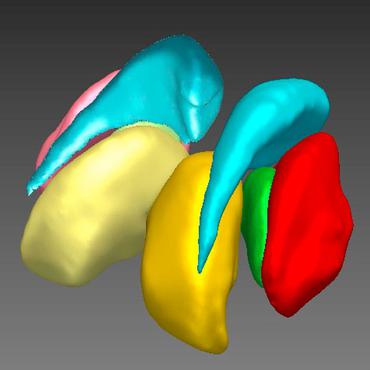

Skip-connected 3D DenseNet for volumetric infant brain MRI segmentation

no code implementations • Biomedical Signal Processing and Control 2019 • Toan Duc Bui, Jitae Shin, Taesup Moon

The proposed network, called 3D-SkipDenseSeg, exploits the advantages of the recent DenseNet for classification tasks and extends it to segment 6-month infant brain tissue in magnetic resonance imaging (MRI).

Uncertainty-based Continual Learning with Adaptive Regularization

2 code implementations • NeurIPS 2019 • Hongjoon Ahn, Sungmin Cha, DongGyu Lee, Taesup Moon

We introduce a new neural network-based continual learning algorithm, dubbed as Uncertainty-regularized Continual Learning (UCL), which builds on traditional Bayesian online learning framework with variational inference.

Ranked #11 on Continual Learning on ASC (19 tasks)

GAN2GAN: Generative Noise Learning for Blind Denoising with Single Noisy Images

1 code implementation • ICLR 2021 • Sungmin Cha, TaeEon Park, Byeongjoon Kim, Jongduk Baek, Taesup Moon

We tackle a challenging blind image denoising problem, in which only single distinct noisy images are available for training a denoiser, and no information about noise is known, except for it being zero-mean, additive, and independent of the clean image.

Iterative Channel Estimation for Discrete Denoising under Channel Uncertainty

no code implementations • 24 Feb 2019 • Hongjoon Ahn, Taesup Moon

We propose a novel iterative channel estimation (ICE) algorithm that essentially removes the critical known noisy channel assumption for universal discrete denoising problem.

DoPAMINE: Double-sided Masked CNN for Pixel Adaptive Multiplicative Noise Despeckling

no code implementations • 7 Feb 2019 • Sunghwan Joo, Sungmin Cha, Taesup Moon

We propose DoPAMINE, a new neural network based multiplicative noise despeckling algorithm.

Fooling Neural Network Interpretations via Adversarial Model Manipulation

3 code implementations • NeurIPS 2019 • Juyeon Heo, Sunghwan Joo, Taesup Moon

We ask whether the neural network interpretation methods can be fooled via adversarial model manipulation, which is defined as a model fine-tuning step that aims to radically alter the explanations without hurting the accuracy of the original models, e.g., VGG19, ResNet50, and DenseNet121.

Subtask Gated Networks for Non-Intrusive Load Monitoring

no code implementations • 16 Nov 2018 • Changho Shin, Sunghwan Joo, Jaeryun Yim, Hyoseop Lee, Taesup Moon, Wonjong Rhee

In this work, we focus on the idea that appliances have on/off states, and develop a deep network for further performance improvements.

Fully Convolutional Pixel Adaptive Image Denoiser

2 code implementations • ICCV 2019 • Sungmin Cha, Taesup Moon

We propose a new image denoising algorithm, dubbed as Fully Convolutional Adaptive Image DEnoiser (FC-AIDE), that can learn from an offline supervised training set with a fully convolutional neural network as well as adaptively fine-tune the supervised model for each given noisy image.

Neural Affine Grayscale Image Denoising

no code implementations • 17 Sep 2017 • Sungmin Cha, Taesup Moon

We propose a new grayscale image denoiser, dubbed as Neural Affine Image Denoiser (Neural AIDE), which utilizes neural network in a novel way.

3D Densely Convolutional Networks for Volumetric Segmentation

1 code implementation • arXiv preprint 2017 • Toan Duc Bui, Jitae Shin, Taesup Moon

The proposed network architecture provides a dense connection between layers that aims to improve the information flow in the network.

A Denoising Loss Bound for Neural Network based Universal Discrete Denoisers

no code implementations • 12 Sep 2017 • Taesup Moon

We obtain a denoising loss bound of the recently proposed neural network based universal discrete denoiser, Neural DUDE, which can adaptively learn its parameters solely from the noise-corrupted data, by minimizing the empirical estimated loss.

3D Densely Convolutional Networks for Volumetric Segmentation

1 code implementation • 11 Sep 2017 • Toan Duc Bui, Jitae Shin, Taesup Moon

The proposed network architecture provides a dense connection between layers that aims to improve the information flow in the network.

Neural Universal Discrete Denoiser

no code implementations • NeurIPS 2016 • Taesup Moon, Seonwoo Min, Byunghan Lee, Sungroh Yoon

We present a new framework of applying deep neural networks (DNN) to devise a universal discrete denoiser.

Regularization and Kernelization of the Maximin Correlation Approach

no code implementations • 21 Feb 2015 • Taehoon Lee, Taesup Moon, Seung Jean Kim, Sungroh Yoon

Robust classification becomes challenging when each class consists of multiple subclasses.