Search Results for author: Thang Doan

Found 19 papers, 11 papers with code

A streamlined Approach to Multimodal Few-Shot Class Incremental Learning for Fine-Grained Datasets

2 code implementations • 10 Mar 2024 • Thang Doan, Sima Behpour, Xin Li, Wenbin He, Liang Gou, Liu Ren

Few-shot Class-Incremental Learning (FSCIL) poses the challenge of retaining prior knowledge while learning from limited new data streams, all without overfitting.

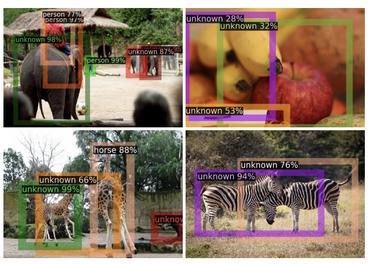

Hyp-OW: Exploiting Hierarchical Structure Learning with Hyperbolic Distance Enhances Open World Object Detection

2 code implementations • 25 Jun 2023 • Thang Doan, Xin Li, Sima Behpour, Wenbin He, Liang Gou, Liu Ren

We argue that this contextual information should already be embedded within the known classes.

Building a Subspace of Policies for Scalable Continual Learning

1 code implementation • 18 Nov 2022 • Jean-Baptiste Gaya, Thang Doan, Lucas Caccia, Laure Soulier, Ludovic Denoyer, Roberta Raileanu

We introduce Continual Subspace of Policies (CSP), a new approach that incrementally builds a subspace of policies for training a reinforcement learning agent on a sequence of tasks.

Continual Learning Beyond a Single Model

no code implementations • 20 Feb 2022 • Thang Doan, Seyed Iman Mirzadeh, Mehrdad Farajtabar

A growing body of research in continual learning focuses on the catastrophic forgetting problem.

Domain Adversarial Reinforcement Learning

no code implementations • 14 Feb 2021 • Bonnie Li, Vincent François-Lavet, Thang Doan, Joelle Pineau

We consider the problem of generalization in reinforcement learning where visual aspects of the observations might differ, e.g., when there are different backgrounds or changes in contrast, brightness, etc.

Regularized Inverse Reinforcement Learning

no code implementations • ICLR 2021 • Wonseok Jeon, Chen-Yang Su, Paul Barde, Thang Doan, Derek Nowrouzezahrai, Joelle Pineau

Inverse Reinforcement Learning (IRL) aims to facilitate a learner's ability to imitate expert behavior by acquiring reward functions that explain the expert's decisions.

A Theoretical Analysis of Catastrophic Forgetting through the NTK Overlap Matrix

2 code implementations • 7 Oct 2020 • Thang Doan, Mehdi Bennani, Bogdan Mazoure, Guillaume Rabusseau, Pierre Alquier

Continual learning (CL) is a setting in which an agent has to learn from an incoming stream of data during its entire lifetime.

Generalisation Guarantees for Continual Learning with Orthogonal Gradient Descent

1 code implementation • 21 Jun 2020 • Mehdi Abbana Bennani, Thang Doan, Masashi Sugiyama

In this framework, we prove that OGD is robust to catastrophic forgetting and then derive the first generalisation bound for SGD and OGD for continual learning.

Deep Reinforcement and InfoMax Learning

1 code implementation • NeurIPS 2020 • Bogdan Mazoure, Remi Tachet des Combes, Thang Doan, Philip Bachman, R. Devon Hjelm

We begin with the hypothesis that a model-free agent whose representations are predictive of properties of future states (beyond expected rewards) will be more capable of solving and adapting to new RL problems.

Representation of Reinforcement Learning Policies in Reproducing Kernel Hilbert Spaces

no code implementations • 7 Feb 2020 • Bogdan Mazoure, Thang Doan, Tianyu Li, Vladimir Makarenkov, Joelle Pineau, Doina Precup, Guillaume Rabusseau

We propose a general framework for policy representation for reinforcement learning tasks.

Attraction-Repulsion Actor-Critic for Continuous Control Reinforcement Learning

no code implementations • 17 Sep 2019 • Thang Doan, Bogdan Mazoure, Moloud Abdar, Audrey Durand, Joelle Pineau, R. Devon Hjelm

Continuous control tasks in reinforcement learning are important because they provide a framework for learning in high-dimensional state spaces with deceptive rewards, where the agent can easily become trapped in suboptimal solutions.

Self-supervised Learning of Distance Functions for Goal-Conditioned Reinforcement Learning

no code implementations • 5 Jul 2019 • Srinivas Venkattaramanujam, Eric Crawford, Thang Doan, Doina Precup

Goal-conditioned policies are used in order to break down complex reinforcement learning (RL) problems by using subgoals, which can be defined either in state space or in a latent feature space.

Leveraging exploration in off-policy algorithms via normalizing flows

1 code implementation • 16 May 2019 • Bogdan Mazoure, Thang Doan, Audrey Durand, R. Devon Hjelm, Joelle Pineau

The ability to discover approximately optimal policies in domains with sparse rewards is crucial to applying reinforcement learning (RL) in many real-world scenarios.

Multi-objective training of Generative Adversarial Networks with multiple discriminators

1 code implementation • ICLR 2019 • Isabela Albuquerque, João Monteiro, Thang Doan, Breandan Considine, Tiago Falk, Ioannis Mitliagkas

Recent literature has demonstrated promising results for training Generative Adversarial Networks by employing a set of discriminators, in contrast to the traditional game involving one generator against a single adversary.

Generating Realistic Sequences of Customer-level Transactions for Retail Datasets

no code implementations • 17 Jan 2019 • Thang Doan, Neil Veira, Saibal Ray, Brian Keng

The GAN is thus used in tandem with the RNN module in a pipeline alternating between basket generation and customer state updating steps.

EmojiGAN: learning emojis distributions with a generative model

no code implementations • WS 2018 • Bogdan Mazoure, Thang Doan, Saibal Ray

Generative models have recently experienced a surge in popularity due to the development of more efficient training algorithms and increasing computational power.

On-line Adaptative Curriculum Learning for GANs

3 code implementations • 31 Jul 2018 • Thang Doan, Joao Monteiro, Isabela Albuquerque, Bogdan Mazoure, Audrey Durand, Joelle Pineau, R. Devon Hjelm

We argue that less expressive discriminators are smoother and have a general, coarse-grained view of the modes map, which forces the generator to cover a wide portion of the support of the data distribution.

GAN Q-learning

1 code implementation • 13 May 2018 • Thang Doan, Bogdan Mazoure, Clare Lyle

Distributional reinforcement learning (distributional RL) has seen empirical success in complex Markov Decision Processes (MDPs) in the setting of nonlinear function approximation.

Bayesian Policy Gradients via Alpha Divergence Dropout Inference

1 code implementation • 6 Dec 2017 • Peter Henderson, Thang Doan, Riashat Islam, David Meger

Policy gradient methods have had great success in solving continuous control tasks, yet the stochastic nature of such problems makes deterministic value estimation difficult.