Search Results for author: Wenbin He

Found 8 papers, 2 papers with code

A Streamlined Approach to Multimodal Few-Shot Class Incremental Learning for Fine-Grained Datasets

2 code implementations • 10 Mar 2024 • Thang Doan, Sima Behpour, Xin Li, Wenbin He, Liang Gou, Liu Ren

Few-shot Class-Incremental Learning (FSCIL) poses the challenge of retaining prior knowledge while learning from limited new data streams, all without overfitting.

InterVLS: Interactive Model Understanding and Improvement with Vision-Language Surrogates

no code implementations • 6 Nov 2023 • Jinbin Huang, Wenbin He, Liang Gou, Liu Ren, Chris Bryan

Deep learning models are widely used in critical applications, highlighting the need for pre-deployment model understanding and improvement.

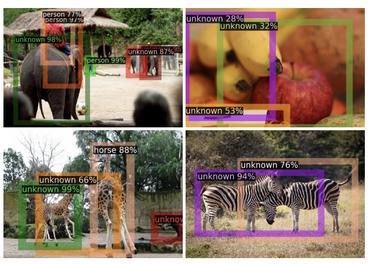

Hyp-OW: Exploiting Hierarchical Structure Learning with Hyperbolic Distance Enhances Open World Object Detection

2 code implementations • 25 Jun 2023 • Thang Doan, Xin Li, Sima Behpour, Wenbin He, Liang Gou, Liu Ren

We argue that this contextual information should already be embedded within the known classes.

A Graph Reconstruction by Dynamic Signal Coefficient for Fault Classification

no code implementations • 30 May 2023 • Wenbin He, Jianxu Mao, Yaonan Wang, Zhe Li, Qiu Fang, Haotian Wu

To improve fault identification under strong noise in rotating machinery, this paper presents a dynamic feature reconstruction signal graph method, which plays a key role in the proposed end-to-end fault diagnosis model.

CLIP-S$^4$: Language-Guided Self-Supervised Semantic Segmentation

no code implementations • 1 May 2023 • Wenbin He, Suphanut Jamonnak, Liang Gou, Liu Ren

To further improve the pixel embeddings and enable language-driven semantic segmentation, we design two types of consistency guided by vision-language models: 1) embedding consistency, aligning our pixel embeddings to the joint feature space of a pre-trained vision-language model, CLIP; and 2) semantic consistency, forcing our model to make the same predictions as CLIP over a set of carefully designed target classes with both known and unknown prototypes.

CLIP-S4: Language-Guided Self-Supervised Semantic Segmentation

no code implementations • CVPR 2023 • Wenbin He, Suphanut Jamonnak, Liang Gou, Liu Ren

To further improve the pixel embeddings and enable language-driven semantic segmentation, we design two types of consistency guided by vision-language models: 1) embedding consistency, aligning our pixel embeddings to the joint feature space of a pre-trained vision-language model, CLIP; and 2) semantic consistency, forcing our model to make the same predictions as CLIP over a set of carefully designed target classes with both known and unknown prototypes.

Self-supervised Semantic Segmentation Grounded in Visual Concepts

no code implementations • 25 Mar 2022 • Wenbin He, William Surmeier, Arvind Kumar Shekar, Liang Gou, Liu Ren

In this work, we propose a self-supervised pixel representation learning method for semantic segmentation by using visual concepts (i.e., groups of pixels with semantic meanings, such as parts, objects, and scenes) extracted from images.

InSituNet: Deep Image Synthesis for Parameter Space Exploration of Ensemble Simulations

no code implementations • 1 Aug 2019 • Wenbin He, Junpeng Wang, Hanqi Guo, Ko-Chih Wang, Han-Wei Shen, Mukund Raj, Youssef S. G. Nashed, Tom Peterka

We propose InSituNet, a deep-learning-based surrogate model to support parameter space exploration for ensemble simulations that are visualized in situ.