Search Results for author: Wesley De Neve

Found 19 papers, 7 papers with code

Leveraging Human-Machine Interactions for Computer Vision Dataset Quality Enhancement

no code implementations • 31 Jan 2024 • Esla Timothy Anzaku, Hyesoo Hong, Jin-Woo Park, Wonjun Yang, KangMin Kim, JongBum Won, Deshika Vinoshani Kumari Herath, Arnout Van Messem, Wesley De Neve

In this paper, we introduce a lightweight, user-friendly, and scalable framework that synergizes human and machine intelligence for efficient dataset validation and quality enhancement.

Towards Abdominal 3-D Scene Rendering from Laparoscopy Surgical Videos using NeRFs

no code implementations • 18 Oct 2023 • Khoa Tuan Nguyen, Francesca Tozzi, Nikdokht Rashidian, Wouter Willaert, Joris Vankerschaver, Wesley De Neve

Given that a conventional laparoscope only provides a two-dimensional (2-D) view, the detection and diagnosis of medical ailments can be challenging.

Know Your Self-supervised Learning: A Survey on Image-based Generative and Discriminative Training

1 code implementation • 23 May 2023 • Utku Ozbulak, Hyun Jung Lee, Beril Boga, Esla Timothy Anzaku, Homin Park, Arnout Van Messem, Wesley De Neve, Joris Vankerschaver

In this survey, we review a plethora of research efforts conducted on image-oriented SSL, providing a historic view and paying attention to best practices as well as useful software packages.

A Principled Evaluation Protocol for Comparative Investigation of the Effectiveness of DNN Classification Models on Similar-but-non-identical Datasets

no code implementations • 5 Sep 2022 • Esla Timothy Anzaku, Haohan Wang, Arnout Van Messem, Wesley De Neve

Deep Neural Network (DNN) models are increasingly evaluated using new replication test datasets, which have been carefully created to be similar to older and popular benchmark datasets.

Exact Feature Collisions in Neural Networks

no code implementations • 31 May 2022 • Utku Ozbulak, Manvel Gasparyan, Shodhan Rao, Wesley De Neve, Arnout Van Messem

Predictions made by deep neural networks have been shown to be highly sensitive to small changes in the input space; maliciously crafted data points containing such small perturbations are referred to as adversarial examples.

Evaluating Adversarial Attacks on ImageNet: A Reality Check on Misclassification Classes

1 code implementation • NeurIPS Workshop ImageNet_PPF 2021 • Utku Ozbulak, Maura Pintor, Arnout Van Messem, Wesley De Neve

We find that $71\%$ of the adversarial examples that achieve model-to-model adversarial transferability are misclassified into one of the top-5 classes predicted for the underlying source images.

Selection of Source Images Heavily Influences the Effectiveness of Adversarial Attacks

1 code implementation • 14 Jun 2021 • Utku Ozbulak, Esla Timothy Anzaku, Wesley De Neve, Arnout Van Messem

Although the adoption rate of deep neural networks (DNNs) has tremendously increased in recent years, a solution for their vulnerability against adversarial examples has not yet been found.

Investigating the significance of adversarial attacks and their relation to interpretability for radar-based human activity recognition systems

no code implementations • 26 Jan 2021 • Utku Ozbulak, Baptist Vandersmissen, Azarakhsh Jalalvand, Ivo Couckuyt, Arnout Van Messem, Wesley De Neve

Another concern that is often cited when designing smart home applications is the resilience of these applications against cyberattacks.

Regional Image Perturbation Reduces $L_p$ Norms of Adversarial Examples While Maintaining Model-to-model Transferability

1 code implementation • 7 Jul 2020 • Utku Ozbulak, Jonathan Peck, Wesley De Neve, Bart Goossens, Yvan Saeys, Arnout Van Messem

Regional adversarial attacks often rely on complicated methods for generating adversarial perturbations, making it hard to compare their efficacy against well-known attacks.

Perturbation Analysis of Gradient-based Adversarial Attacks

no code implementations • 2 Jun 2020 • Utku Ozbulak, Manvel Gasparyan, Wesley De Neve, Arnout Van Messem

Our experiments reveal that the Iterative Fast Gradient Sign attack, which is thought to be fast for generating adversarial examples, is the worst attack in terms of the number of iterations required to create adversarial examples in the setting of equal perturbation.

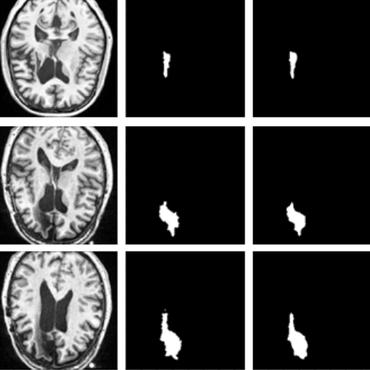

Impact of Adversarial Examples on Deep Learning Models for Biomedical Image Segmentation

1 code implementation • 30 Jul 2019 • Utku Ozbulak, Arnout Van Messem, Wesley De Neve

Given that a large portion of medical imaging problems are effectively segmentation problems, we analyze the impact of adversarial examples on deep learning-based image segmentation models.

Not All Adversarial Examples Require a Complex Defense: Identifying Over-optimized Adversarial Examples with IQR-based Logit Thresholding

no code implementations • 30 Jul 2019 • Utku Ozbulak, Arnout Van Messem, Wesley De Neve

Detecting adversarial examples currently stands as one of the biggest challenges in the field of deep learning.

Web Applicable Computer-aided Diagnosis of Glaucoma Using Deep Learning

no code implementations • 6 Dec 2018 • Mijung Kim, Olivier Janssens, Ho-min Park, Jasper Zuallaert, Sofie Van Hoecke, Wesley De Neve

Glaucoma is a major eye disease, leading to vision loss in the absence of proper medical treatment.

How the Softmax Output is Misleading for Evaluating the Strength of Adversarial Examples

no code implementations • 21 Nov 2018 • Utku Ozbulak, Wesley De Neve, Arnout Van Messem

Nowadays, the output of the softmax function is also commonly used to assess the strength of adversarial examples: malicious data points designed to make machine learning models fail during the testing phase.

Explaining Character-Aware Neural Networks for Word-Level Prediction: Do They Discover Linguistic Rules?

1 code implementation • EMNLP 2018 • Fréderic Godin, Kris Demuynck, Joni Dambre, Wesley De Neve, Thomas Demeester

In this paper, we investigate which character-level patterns neural networks learn and if those patterns coincide with manually-defined word segmentations and annotations.

Interpretable Convolutional Neural Networks for Effective Translation Initiation Site Prediction

no code implementations • 27 Nov 2017 • Jasper Zuallaert, Mijung Kim, Yvan Saeys, Wesley De Neve

Thanks to rapidly evolving sequencing techniques, the amount of genomic data at our disposal is growing increasingly large.

Dual Rectified Linear Units (DReLUs): A Replacement for Tanh Activation Functions in Quasi-Recurrent Neural Networks

2 code implementations • 25 Jul 2017 • Fréderic Godin, Jonas Degrave, Joni Dambre, Wesley De Neve

A DReLU, which comes with an unbounded positive and negative image, can be used as a drop-in replacement for a tanh activation function in the recurrent step of Quasi-Recurrent Neural Networks (QRNNs) (Bradbury et al., 2017).

Improving Language Modeling using Densely Connected Recurrent Neural Networks

no code implementations • WS 2017 • Fréderic Godin, Joni Dambre, Wesley De Neve

In this paper, we introduce the novel concept of densely connected layers into recurrent neural networks.