Search Results for author: Xiaodan Li

Found 18 papers, 6 papers with code

Flatness-aware Adversarial Attack

no code implementations • 10 Nov 2023 • Mingyuan Fan, Xiaodan Li, Cen Chen, Yinggui Wang

We reveal that input regularization based methods make resultant adversarial examples biased towards flat extreme regions.

Model Inversion Attack via Dynamic Memory Learning

no code implementations • 24 Aug 2023 • Gege Qi, Yuefeng Chen, Xiaofeng Mao, Binyuan Hui, Xiaodan Li, Rong Zhang, Hui Xue

Model Inversion (MI) attacks aim to recover the private training data from the target model, which has raised security concerns about the deployment of DNNs in practice.

ImageNet-E: Benchmarking Neural Network Robustness via Attribute Editing

2 code implementations • CVPR 2023 • Xiaodan Li, Yuefeng Chen, Yao Zhu, Shuhui Wang, Rong Zhang, Hui Xue

We also evaluate some robust models including both adversarially trained models and other robust trained models and find that some models show worse robustness against attribute changes than vanilla models.

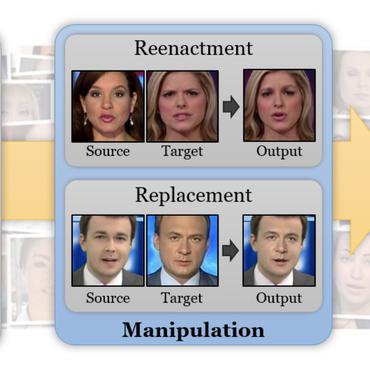

Information-containing Adversarial Perturbation for Combating Facial Manipulation Systems

no code implementations • 21 Mar 2023 • Yao Zhu, Yuefeng Chen, Xiaodan Li, Rong Zhang, Xiang Tian, Bolun Zheng, Yaowu Chen

We use an encoder to map a facial image and its identity message to a cross-model adversarial example which can disrupt multiple facial manipulation systems to achieve initiative protection.

Refiner: Data Refining against Gradient Leakage Attacks in Federated Learning

no code implementations • 5 Dec 2022 • Mingyuan Fan, Cen Chen, Chengyu Wang, Xiaodan Li, Wenmeng Zhou, Jun Huang

Recent works have brought attention to the vulnerability of Federated Learning (FL) systems to gradient leakage attacks.

Rethinking Out-of-Distribution Detection From a Human-Centric Perspective

no code implementations • 30 Nov 2022 • Yao Zhu, Yuefeng Chen, Xiaodan Li, Rong Zhang, Hui Xue, Xiang Tian, Rongxin Jiang, Bolun Zheng, Yaowu Chen

Additionally, our experiments demonstrate that model selection is non-trivial for OOD detection and should be considered an integral part of the proposed method, which differs from the claim in existing works that proposed methods are universal across different models.

Boosting Out-of-distribution Detection with Typical Features

no code implementations • 9 Oct 2022 • Yao Zhu, Yuefeng Chen, Chuanlong Xie, Xiaodan Li, Rong Zhang, Hui Xue, Xiang Tian, Bolun Zheng, Yaowu Chen

Out-of-distribution (OOD) detection is a critical task for ensuring the reliability and safety of deep neural networks in real-world scenarios.

Towards Understanding and Boosting Adversarial Transferability from a Distribution Perspective

2 code implementations • 9 Oct 2022 • Yao Zhu, Yuefeng Chen, Xiaodan Li, Kejiang Chen, Yuan He, Xiang Tian, Bolun Zheng, Yaowu Chen, Qingming Huang

We conduct comprehensive transferable attacks against multiple DNNs to demonstrate the effectiveness of the proposed method.

MaxMatch: Semi-Supervised Learning with Worst-Case Consistency

no code implementations • 26 Sep 2022 • Yangbangyan Jiang, Xiaodan Li, Yuefeng Chen, Yuan He, Qianqian Xu, Zhiyong Yang, Xiaochun Cao, Qingming Huang

In recent years, great progress has been made to incorporate unlabeled data to overcome the inefficiently supervised problem via semi-supervised learning (SSL).

Enhance the Visual Representation via Discrete Adversarial Training

1 code implementation • 16 Sep 2022 • Xiaofeng Mao, Yuefeng Chen, Ranjie Duan, Yao Zhu, Gege Qi, Shaokai Ye, Xiaodan Li, Rong Zhang, Hui Xue

To borrow the advantages of NLP-style AT, we propose Discrete Adversarial Training (DAT).

Ranked #1 on Domain Generalization on Stylized-ImageNet

Enhanced compound-protein binding affinity prediction by representing protein multimodal information via a coevolutionary strategy

no code implementations • 30 Mar 2022 • Binjie Guo, Hanyu Zheng, Haohan Jiang, Xiaodan Li, Naiyu Guan, Yanming Zuo, YiCheng Zhang, Hengfu Yang, Xuhua Wang

FeatNN provides an outstanding method for higher CPA prediction accuracy and better generalization ability by efficiently representing multimodal information of proteins via a coevolutionary strategy.

Beyond ImageNet Attack: Towards Crafting Adversarial Examples for Black-box Domains

2 code implementations • ICLR 2022 • Qilong Zhang, Xiaodan Li, Yuefeng Chen, Jingkuan Song, Lianli Gao, Yuan He, Hui Xue

Notably, our methods outperform state-of-the-art approaches by up to 7.71% (towards coarse-grained domains) and 25.91% (towards fine-grained domains) on average.

Towards Robust Vision Transformer

2 code implementations • CVPR 2022 • Xiaofeng Mao, Gege Qi, Yuefeng Chen, Xiaodan Li, Ranjie Duan, Shaokai Ye, Yuan He, Hui Xue

By using and combining robust components as building blocks of ViTs, we propose Robust Vision Transformer (RVT), which is a new vision transformer and has superior performance with strong robustness.

Ranked #24 on Domain Generalization on ImageNet-C

QAIR: Practical Query-efficient Black-Box Attacks for Image Retrieval

no code implementations • CVPR 2021 • Xiaodan Li, Jinfeng Li, Yuefeng Chen, Shaokai Ye, Yuan He, Shuhui Wang, Hang Su, Hui Xue

Comprehensive experiments show that the proposed attack achieves a high attack success rate with few queries against the image retrieval systems under the black-box setting.

Spatial-Phase Shallow Learning: Rethinking Face Forgery Detection in Frequency Domain

no code implementations • CVPR 2021 • Honggu Liu, Xiaodan Li, Wenbo Zhou, Yuefeng Chen, Yuan He, Hui Xue, Weiming Zhang, Nenghai Yu

The remarkable success in face forgery techniques has received considerable attention in computer vision due to security concerns.

Sharp Multiple Instance Learning for DeepFake Video Detection

no code implementations • 11 Aug 2020 • Xiaodan Li, Yining Lang, Yuefeng Chen, Xiaofeng Mao, Yuan He, Shuhui Wang, Hui Xue, Quan Lu

A sharp MIL (S-MIL) is proposed which builds direct mapping from instance embeddings to bag prediction, rather than from instance embeddings to instance prediction and then to bag prediction in traditional MIL.

AdvKnn: Adversarial Attacks On K-Nearest Neighbor Classifiers With Approximate Gradients

1 code implementation • 15 Nov 2019 • Xiaodan Li, Yuefeng Chen, Yuan He, Hui Xue

Deep neural networks have been shown to be vulnerable to adversarial examples---maliciously crafted examples that can trigger the target model to misbehave by adding imperceptible perturbations.

A Deep Belief Network Based Machine Learning System for Risky Host Detection

no code implementations • 29 Dec 2017 • Wangyan Feng, Shuning Wu, Xiaodan Li, Kevin Kunkle

The system leverages alert information, various security logs and analysts' investigation results in a real enterprise environment to flag hosts that have high likelihood of being compromised.