Search Results for author: Xingning Dong

Found 6 papers, 5 papers with code

SHE-Net: Syntax-Hierarchy-Enhanced Text-Video Retrieval

no code implementations • 22 Apr 2024 • Xuzheng Yu, Chen Jiang, Xingning Dong, Tian Gan, Ming Yang, Qingpei Guo

In particular, text-video retrieval, which aims to find the top matching videos given text descriptions from a vast video corpus, is an essential function, the primary challenge of which is to bridge the modality gap.

M2-RAAP: A Multi-Modal Recipe for Advancing Adaptation-based Pre-training towards Effective and Efficient Zero-shot Video-text Retrieval

1 code implementation • 31 Jan 2024 • Xingning Dong, Zipeng Feng, Chunluan Zhou, Xuzheng Yu, Ming Yang, Qingpei Guo

We then summarize this empirical study into the M2-RAAP recipe, where our technical contributions lie in 1) the data filtering and text re-writing pipeline resulting in 1M high-quality bilingual video-text pairs, 2) the replacement of video inputs with key-frames to accelerate pre-training, and 3) the Auxiliary-Caption-Guided (ACG) strategy to enhance video features.

SNP-S3: Shared Network Pre-training and Significant Semantic Strengthening for Various Video-Text Tasks

1 code implementation • 31 Jan 2024 • Xingning Dong, Qingpei Guo, Tian Gan, Qing Wang, Jianlong Wu, Xiangyuan Ren, Yuan Cheng, Wei Chu

By employing one shared BERT-type network to refine textual and cross-modal features simultaneously, SNP is lightweight and could support various downstream applications.

EVE: Efficient zero-shot text-based Video Editing with Depth Map Guidance and Temporal Consistency Constraints

1 code implementation • 21 Aug 2023 • Yutao Chen, Xingning Dong, Tian Gan, Chunluan Zhou, Ming Yang, Qingpei Guo

Compared with images, we conjecture that videos necessitate more constraints to preserve the temporal consistency during editing.

CNVid-3.5M: Build, Filter, and Pre-Train the Large-Scale Public Chinese Video-Text Dataset

1 code implementation • CVPR 2023 • Tian Gan, Qing Wang, Xingning Dong, Xiangyuan Ren, Liqiang Nie, Qingpei Guo

Although some methods study Chinese video-text pre-training, they pre-train their models on private datasets whose videos and text are unavailable.

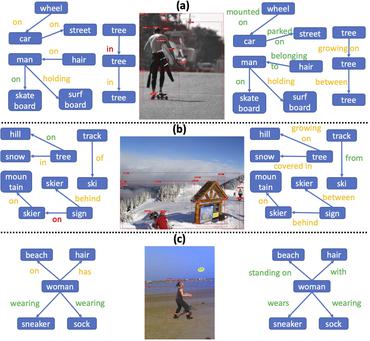

Stacked Hybrid-Attention and Group Collaborative Learning for Unbiased Scene Graph Generation

1 code implementation • CVPR 2022 • Xingning Dong, Tian Gan, Xuemeng Song, Jianlong Wu, Yuan Cheng, Liqiang Nie

Scene Graph Generation, which generally follows a regular encoder-decoder pipeline, aims to first encode the visual contents within the given image and then parse them into a compact summary graph.

Ranked #1 on Unbiased Scene Graph Generation on Visual Genome (mR@20 metric)
