Search Results for author: Yang You

Found 111 papers, 72 papers with code

How Does the Textual Information Affect the Retrieval of Multimodal In-Context Learning?

no code implementations • 19 Apr 2024 • Yang Luo, Zangwei Zheng, Zirui Zhu, Yang You

This effectiveness, however, hinges on the appropriate selection of in-context examples, a process that is currently biased towards visual data, overlooking textual information.

RPMArt: Towards Robust Perception and Manipulation for Articulated Objects

no code implementations • 24 Mar 2024 • JunBo Wang, Wenhai Liu, Qiaojun Yu, Yang You, Liu Liu, Weiming Wang, Cewu Lu

Our primary contribution is a Robust Articulation Network (RoArtNet) that is able to predict both joint parameters and affordable points robustly by local feature learning and point tuple voting.

ManiPose: A Comprehensive Benchmark for Pose-aware Object Manipulation in Robotics

no code implementations • 20 Mar 2024 • Qiaojun Yu, Ce Hao, JunBo Wang, Wenhai Liu, Liu Liu, Yao Mu, Yang You, Hengxu Yan, Cewu Lu

Robotic manipulation in everyday scenarios, especially in unstructured environments, requires skills in pose-aware object manipulation (POM), which adapts robots' grasping and handling according to an object's 6D pose.

Dynamic Tuning Towards Parameter and Inference Efficiency for ViT Adaptation

1 code implementation • 18 Mar 2024 • Wangbo Zhao, Jiasheng Tang, Yizeng Han, Yibing Song, Kai Wang, Gao Huang, Fan Wang, Yang You

Existing parameter-efficient fine-tuning (PEFT) methods have achieved significant success on vision transformers (ViTs) adaptation by improving parameter efficiency.

DSP: Dynamic Sequence Parallelism for Multi-Dimensional Transformers

1 code implementation • 15 Mar 2024 • Xuanlei Zhao, Shenggan Cheng, Zangwei Zheng, Zheming Yang, Ziming Liu, Yang You

Scaling large models with long sequences across applications like language generation, video generation and multimodal tasks requires efficient sequence parallelism.

Sparse MeZO: Less Parameters for Better Performance in Zeroth-Order LLM Fine-Tuning

no code implementations • 24 Feb 2024 • Yong Liu, Zirui Zhu, Chaoyu Gong, Minhao Cheng, Cho-Jui Hsieh, Yang You

While fine-tuning large language models (LLMs) for specific tasks often yields impressive results, it comes at the cost of memory inefficiency due to back-propagation in gradient-based training.

Helen: Optimizing CTR Prediction Models with Frequency-wise Hessian Eigenvalue Regularization

1 code implementation • 23 Feb 2024 • Zirui Zhu, Yong Liu, Zangwei Zheng, Huifeng Guo, Yang You

We explore the typical data characteristics and optimization statistics of CTR prediction, revealing a strong positive correlation between the top Hessian eigenvalue and feature frequency.

Neural Network Diffusion

1 code implementation • 20 Feb 2024 • Kai Wang, Zhaopan Xu, Yukun Zhou, Zelin Zang, Trevor Darrell, Zhuang Liu, Yang You

The autoencoder extracts latent representations of a subset of the trained network parameters.

Two Trades is not Baffled: Condensing Graph via Crafting Rational Gradient Matching

1 code implementation • 7 Feb 2024 • Tianle Zhang, Yuchen Zhang, Kun Wang, Kai Wang, Beining Yang, Kaipeng Zhang, Wenqi Shao, Ping Liu, Joey Tianyi Zhou, Yang You

Training on large-scale graphs has achieved remarkable results in graph representation learning, but its cost and storage have raised growing concerns.

Navigating Complexity: Toward Lossless Graph Condensation via Expanding Window Matching

1 code implementation • 7 Feb 2024 • Yuchen Zhang, Tianle Zhang, Kai Wang, Ziyao Guo, Yuxuan Liang, Xavier Bresson, Wei Jin, Yang You

Specifically, we employ a curriculum learning strategy to train expert trajectories with more diverse supervision signals from the original graph, and then effectively transfer the information into the condensed graph with expanding window matching.

RAP: Retrieval-Augmented Planning with Contextual Memory for Multimodal LLM Agents

no code implementations • 6 Feb 2024 • Tomoyuki Kagaya, Thong Jing Yuan, Yuxuan Lou, Jayashree Karlekar, Sugiri Pranata, Akira Kinose, Koki Oguri, Felix Wick, Yang You

Owing to recent advancements, Large Language Models (LLMs) can now be deployed as agents for increasingly complex decision-making applications in areas including robotics, gaming, and API integration.

GliDe with a CaPE: A Low-Hassle Method to Accelerate Speculative Decoding

no code implementations • 3 Feb 2024 • Cunxiao Du, Jing Jiang, Xu Yuanchen, Jiawei Wu, Sicheng Yu, Yongqi Li, Shenggui Li, Kai Xu, Liqiang Nie, Zhaopeng Tu, Yang You

Speculative decoding is a relatively new decoding framework that leverages small and efficient draft models to reduce the latency of LLMs.

OpenMoE: An Early Effort on Open Mixture-of-Experts Language Models

1 code implementation • 29 Jan 2024 • Fuzhao Xue, Zian Zheng, Yao Fu, Jinjie Ni, Zangwei Zheng, Wangchunshu Zhou, Yang You

To help the open-source community have a better understanding of Mixture-of-Experts (MoE) based large language models (LLMs), we train and release OpenMoE, a series of fully open-sourced and reproducible decoder-only MoE LLMs, ranging from 650M to 34B parameters and trained on up to over 1T tokens.

AutoChunk: Automated Activation Chunk for Memory-Efficient Long Sequence Inference

no code implementations • 19 Jan 2024 • Xuanlei Zhao, Shenggan Cheng, Guangyang Lu, Jiarui Fang, Haotian Zhou, Bin Jia, Ziming Liu, Yang You

The experiments demonstrate that AutoChunk can reduce over 80% of activation memory while maintaining speed loss within 10%, extend max sequence length by 3.2x to 11.7x, and outperform state-of-the-art methods by a large margin.

ProvNeRF: Modeling per Point Provenance in NeRFs as a Stochastic Process

no code implementations • 16 Jan 2024 • Kiyohiro Nakayama, Mikaela Angelina Uy, Yang You, Ke Li, Leonidas Guibas

We introduce ProvNeRF, a model that enriches a traditional NeRF representation by incorporating per-point provenance, modeling likely source locations for each point.

MuST: Maximizing Latent Capacity of Spatial Transcriptomics Data

1 code implementation • 15 Jan 2024 • Zelin Zang, Liangyu Li, Yongjie Xu, Chenrui Duan, Kai Wang, Yang You, Yi Sun, Stan Z. Li

MuST integrates the multi-modality information contained in the ST data effectively into a uniform latent space to provide a foundation for all the downstream tasks.

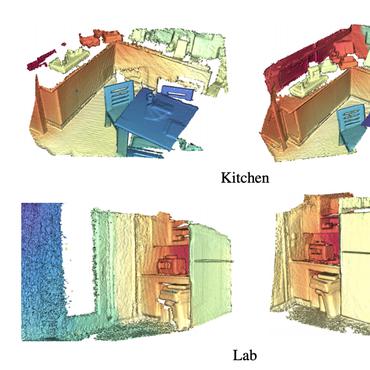

PACE: A Large-Scale Dataset with Pose Annotations in Cluttered Environments

1 code implementation • 23 Dec 2023 • Yang You, Kai Xiong, Zhening Yang, Zhengxiang Huang, Junwei Zhou, Ruoxi Shi, Zhou Fang, Adam W. Harley, Leonidas Guibas, Cewu Lu

We introduce PACE (Pose Annotations in Cluttered Environments), a large-scale benchmark designed to advance the development and evaluation of pose estimation methods in cluttered scenarios.

Primitive-based 3D Human-Object Interaction Modelling and Programming

no code implementations • 17 Dec 2023 • SiQi Liu, Yong-Lu Li, Zhou Fang, Xinpeng Liu, Yang You, Cewu Lu

To explore an effective embedding of HAOI for the machine, we build a new benchmark on 3D HAOI consisting of primitives together with their images and propose a task requiring machines to recover 3D HAOI using primitives from images.

MLLMs-Augmented Visual-Language Representation Learning

1 code implementation • 30 Nov 2023 • Yanqing Liu, Kai Wang, Wenqi Shao, Ping Luo, Yu Qiao, Mike Zheng Shou, Kaipeng Zhang, Yang You

Visual-language pre-training has achieved remarkable success in many multi-modal tasks, largely attributed to the availability of large-scale image-text datasets.

Efficient Dataset Distillation via Minimax Diffusion

1 code implementation • 27 Nov 2023 • Jianyang Gu, Saeed Vahidian, Vyacheslav Kungurtsev, Haonan Wang, Wei Jiang, Yang You, Yiran Chen

Observing that key factors for constructing an effective surrogate dataset are representativeness and diversity, we design additional minimax criteria in the generative training to enhance these facets for the generated images of diffusion models.

Make a Donut: Hierarchical EMD-Space Planning for Zero-Shot Deformable Manipulation with Tools

no code implementations • 5 Nov 2023 • Yang You, Bokui Shen, Congyue Deng, Haoran Geng, Songlin Wei, He Wang, Leonidas Guibas

Remarkably, our model demonstrates robust generalization capabilities to novel and previously unencountered complex tasks without any preliminary demonstrations.

SparseDFF: Sparse-View Feature Distillation for One-Shot Dexterous Manipulation

no code implementations • 25 Oct 2023 • Qianxu Wang, Haotong Zhang, Congyue Deng, Yang You, Hao Dong, Yixin Zhu, Leonidas Guibas

Central to SparseDFF is a feature refinement network, optimized with a contrastive loss between views and a point-pruning mechanism for feature continuity.

DREAM+: Efficient Dataset Distillation by Bidirectional Representative Matching

1 code implementation • 23 Oct 2023 • Yanqing Liu, Jianyang Gu, Kai Wang, Zheng Zhu, Kaipeng Zhang, Wei Jiang, Yang You

Dataset distillation plays a crucial role in creating compact datasets with similar training performance compared with original large-scale ones.

Let's reward step by step: Step-Level reward model as the Navigators for Reasoning

no code implementations • 16 Oct 2023 • Qianli Ma, Haotian Zhou, Tingkai Liu, Jianbo Yuan, PengFei Liu, Yang You, Hongxia Yang

Recent years have seen considerable advancements in multi-step reasoning with Large Language Models (LLMs).

LoBaSS: Gauging Learnability in Supervised Fine-tuning Data

no code implementations • 16 Oct 2023 • Haotian Zhou, Tingkai Liu, Qianli Ma, Jianbo Yuan, PengFei Liu, Yang You, Hongxia Yang

In this paper, we introduce a new dimension in SFT data selection: learnability.

Does Graph Distillation See Like Vision Dataset Counterpart?

2 code implementations • NeurIPS 2023 • Beining Yang, Kai Wang, Qingyun Sun, Cheng Ji, Xingcheng Fu, Hao Tang, Yang You, JianXin Li

We validate the proposed SGDD across 9 datasets and achieve state-of-the-art results on all of them: for example, on the YelpChi dataset, our approach maintains 98.6% test accuracy of training on the original graph dataset with 1,000 times saving on the scale of the graph.

Towards Lossless Dataset Distillation via Difficulty-Aligned Trajectory Matching

1 code implementation • 9 Oct 2023 • Ziyao Guo, Kai Wang, George Cazenavette, Hui Li, Kaipeng Zhang, Yang You

The ultimate goal of Dataset Distillation is to synthesize a small synthetic dataset such that a model trained on this synthetic set will perform equally well as a model trained on the full, real dataset.

Bridging the Gap between Human Motion and Action Semantics via Kinematic Phrases

no code implementations • 6 Oct 2023 • Xinpeng Liu, Yong-Lu Li, Ailing Zeng, Zizheng Zhou, Yang You, Cewu Lu

The goal of motion understanding is to establish a reliable mapping between motion and action semantics, which is a challenging many-to-many problem.

Can pre-trained models assist in dataset distillation?

1 code implementation • 5 Oct 2023 • Yao Lu, Xuguang Chen, Yuchen Zhang, Jianyang Gu, Tianle Zhang, Yifan Zhang, Xiaoniu Yang, Qi Xuan, Kai Wang, Yang You

Dataset Distillation (DD) is a prominent technique that encapsulates knowledge from a large-scale original dataset into a small synthetic dataset for efficient training.

Boosting Unsupervised Contrastive Learning Using Diffusion-Based Data Augmentation From Scratch

no code implementations • 10 Sep 2023 • Zelin Zang, Hao Luo, Kai Wang, Panpan Zhang, Fan Wang, Stan Z. Li, Yang You

When applied to biological data, DiffAug improves performance by up to 10.1%, with an average improvement of 5.8%.

Color Prompting for Data-Free Continual Unsupervised Domain Adaptive Person Re-Identification

1 code implementation • 21 Aug 2023 • Jianyang Gu, Hao Luo, Kai Wang, Wei Jiang, Yang You, Jian Zhao

In this work, we propose a Color Prompting (CoP) method for data-free continual unsupervised domain adaptive person Re-ID.

Domain Adaptive Person Re-Identification

Person Re-Identification

+1

Dataset Quantization

1 code implementation • ICCV 2023 • Daquan Zhou, Kai Wang, Jianyang Gu, Xiangyu Peng, Dongze Lian, Yifan Zhang, Yang You, Jiashi Feng

Extensive experiments demonstrate that DQ is able to generate condensed small datasets for training unseen network architectures with state-of-the-art compression ratios for lossless model training.

The Snowflake Hypothesis: Training Deep GNN with One Node One Receptive field

no code implementations • 19 Aug 2023 • Kun Wang, Guohao Li, Shilong Wang, Guibin Zhang, Kai Wang, Yang You, Xiaojiang Peng, Yuxuan Liang, Yang Wang

Although Graph Neural Networks (GNNs) show considerable promise in graph representation learning tasks, they face significant over-fitting and over-smoothing issues as they go deeper, much like models in the computer vision realm.

Learning Referring Video Object Segmentation from Weak Annotation

no code implementations • 4 Aug 2023 • Wangbo Zhao, Kepan Nan, Songyang Zhang, Kai Chen, Dahua Lin, Yang You

Based on this scheme, we develop a novel RVOS method that exploits weak annotations effectively.

CAME: Confidence-guided Adaptive Memory Efficient Optimization

2 code implementations • 5 Jul 2023 • Yang Luo, Xiaozhe Ren, Zangwei Zheng, Zhuo Jiang, Xin Jiang, Yang You

Adaptive gradient methods, such as Adam and LAMB, have demonstrated excellent performance in the training of large language models.

Summarizing Stream Data for Memory-Constrained Online Continual Learning

2 code implementations • 26 May 2023 • Jianyang Gu, Kai Wang, Wei Jiang, Yang You

Through maintaining the consistency of training gradients and relationship to the past tasks, the summarized samples are more representative for the stream data compared to the original images.

Response Length Perception and Sequence Scheduling: An LLM-Empowered LLM Inference Pipeline

1 code implementation • NeurIPS 2023 • Zangwei Zheng, Xiaozhe Ren, Fuzhao Xue, Yang Luo, Xin Jiang, Yang You

By leveraging this information, we introduce an efficient sequence scheduling technique that groups queries with similar response lengths into micro-batches.

Large Language Models are Not Yet Human-Level Evaluators for Abstractive Summarization

1 code implementation • 22 May 2023 • Chenhui Shen, Liying Cheng, Xuan-Phi Nguyen, Yang You, Lidong Bing

With the recent undeniable advancement in reasoning abilities in large language models (LLMs) like ChatGPT and GPT-4, there is a growing trend for using LLMs on various tasks.

Monte-Carlo Search for an Equilibrium in Dec-POMDPs

no code implementations • 19 May 2023 • Yang You, Vincent Thomas, Francis Colas, Olivier Buffet

Decentralized partially observable Markov decision processes (Dec-POMDPs) formalize the problem of designing individual controllers for a group of collaborative agents under stochastic dynamics and partial observability.

A Hierarchical Encoding-Decoding Scheme for Abstractive Multi-document Summarization

1 code implementation • 15 May 2023 • Chenhui Shen, Liying Cheng, Xuan-Phi Nguyen, Yang You, Lidong Bing

Pre-trained language models (PLMs) have achieved outstanding results in abstractive single-document summarization (SDS).

Hierarchical Dialogue Understanding with Special Tokens and Turn-level Attention

1 code implementation • Tiny Papers @ ICLR 2023 • Xiao Liu, Jian Zhang, Heng Zhang, Fuzhao Xue, Yang You

We evaluate our model on various dialogue understanding tasks including dialogue relation extraction, dialogue emotion recognition, and dialogue act classification.

Ranked #1 on Dialog Relation Extraction on DialogRE

BiCro: Noisy Correspondence Rectification for Multi-modality Data via Bi-directional Cross-modal Similarity Consistency

1 code implementation • CVPR 2023 • Shuo Yang, Zhaopan Xu, Kai Wang, Yang You, Hongxun Yao, Tongliang Liu, Min Xu

As one of the most fundamental techniques in multimodal learning, cross-modal matching aims to project various sensory modalities into a shared feature space.

MSINet: Twins Contrastive Search of Multi-Scale Interaction for Object ReID

1 code implementation • CVPR 2023 • Jianyang Gu, Kai Wang, Hao Luo, Chen Chen, Wei Jiang, Yuqiang Fang, Shanghang Zhang, Yang You, Jian Zhao

Neural Architecture Search (NAS) has become increasingly appealing to the object Re-Identification (ReID) community, because task-specific architectures significantly improve retrieval performance.

Ranked #8 on Vehicle Re-Identification on VehicleID Large

Preventing Zero-Shot Transfer Degradation in Continual Learning of Vision-Language Models

1 code implementation • ICCV 2023 • Zangwei Zheng, Mingyuan Ma, Kai Wang, Ziheng Qin, Xiangyu Yue, Yang You

To address this challenge, we propose a novel method ZSCL to prevent zero-shot transfer degradation in the continual learning of vision-language models in both feature and parameter space.

DiM: Distilling Dataset into Generative Model

2 code implementations • 8 Mar 2023 • Kai Wang, Jianyang Gu, Daquan Zhou, Zheng Zhu, Wei Jiang, Yang You

To the best of our knowledge, we are the first to achieve higher accuracy on complex architectures than simple ones, such as 75.1% with ResNet-18 and 72.6% with ConvNet-3 on ten images per class of CIFAR-10.

InfoBatch: Lossless Training Speed Up by Unbiased Dynamic Data Pruning

1 code implementation • 8 Mar 2023 • Ziheng Qin, Kai Wang, Zangwei Zheng, Jianyang Gu, Xiangyu Peng, Zhaopan Xu, Daquan Zhou, Lei Shang, Baigui Sun, Xuansong Xie, Yang You

To solve this problem, we propose InfoBatch, a novel framework aiming to achieve lossless training acceleration by unbiased dynamic data pruning.

CRIN: Rotation-Invariant Point Cloud Analysis and Rotation Estimation via Centrifugal Reference Frame

1 code implementation • 6 Mar 2023 • Yujing Lou, Zelin Ye, Yang You, Nianjuan Jiang, Jiangbo Lu, Weiming Wang, Lizhuang Ma, Cewu Lu

CRIN directly takes the coordinates of points as input and transforms local points into rotation-invariant representations via centrifugal reference frames.

DREAM: Efficient Dataset Distillation by Representative Matching

2 code implementations • ICCV 2023 • Yanqing Liu, Jianyang Gu, Kai Wang, Zheng Zhu, Wei Jiang, Yang You

Although there are various matching objectives, currently the strategy for selecting original images is limited to naive random sampling.

Robust Robot Planning for Human-Robot Collaboration

no code implementations • 27 Feb 2023 • Yang You, Vincent Thomas, Francis Colas, Rachid Alami, Olivier Buffet

Based on this, we propose two contributions: 1) an approach to automatically generate an uncertain human behavior (a policy) for each given objective function while accounting for possible robot behaviors; and 2) a robot planning algorithm that is robust to the above-mentioned uncertainties and relies on solving a partially observable Markov decision process (POMDP) obtained by reasoning on a distribution over human behaviors.

Colossal-Auto: Unified Automation of Parallelization and Activation Checkpoint for Large-scale Models

1 code implementation • 6 Feb 2023 • Yuliang Liu, Shenggui Li, Jiarui Fang, Yanjun Shao, Boyuan Yao, Yang You

To address these challenges, we introduce a system that can jointly optimize distributed execution and gradient checkpointing plans.

Adaptive Computation with Elastic Input Sequence

1 code implementation • 30 Jan 2023 • Fuzhao Xue, Valerii Likhosherstov, Anurag Arnab, Neil Houlsby, Mostafa Dehghani, Yang You

However, most standard neural networks have a fixed function type and computation budget regardless of the sample's nature or difficulty.

MIGPerf: A Comprehensive Benchmark for Deep Learning Training and Inference Workloads on Multi-Instance GPUs

1 code implementation • 1 Jan 2023 • Huaizheng Zhang, Yuanming Li, Wencong Xiao, Yizheng Huang, Xing Di, Jianxiong Yin, Simon See, Yong Luo, Chiew Tong Lau, Yang You

The vision of this paper is to provide a more comprehensive and practical benchmark study for MIG in order to eliminate the need for tedious manual benchmarking and tuning efforts.

Inconsistencies in Masked Language Models

1 code implementation • 30 Dec 2022 • Tom Young, Yunan Chen, Yang You

Learning to predict masked tokens in a sequence has been shown to be a helpful pretraining objective for powerful language models such as PaLM2.

GPTR: Gestalt-Perception Transformer for Diagram Object Detection

no code implementations • 29 Dec 2022 • Xin Hu, Lingling Zhang, Jun Liu, Jinfu Fan, Yang You, Yaqiang Wu

As a result, traditional data-driven detection models are not suitable for diagrams.

Elixir: Train a Large Language Model on a Small GPU Cluster

2 code implementations • 10 Dec 2022 • Haichen Huang, Jiarui Fang, Hongxin Liu, Shenggui Li, Yang You

To reduce GPU memory usage, memory partitioning and memory offloading have been proposed.

One-Shot General Object Localization

1 code implementation • 24 Nov 2022 • Yang You, Zhuochen Miao, Kai Xiong, Weiming Wang, Cewu Lu

In contrast, our proposed OneLoc algorithm efficiently finds the object center and bounding box size by a special voting scheme.

CPPF++: Uncertainty-Aware Sim2Real Object Pose Estimation by Vote Aggregation

2 code implementations • 24 Nov 2022 • Yang You, Wenhao He, Jin Liu, Hongkai Xiong, Weiming Wang, Cewu Lu

We introduce a novel method, CPPF++, designed for sim-to-real pose estimation.

SentBS: Sentence-level Beam Search for Controllable Summarization

1 code implementation • 26 Oct 2022 • Chenhui Shen, Liying Cheng, Lidong Bing, Yang You, Luo Si

A wide range of control perspectives have been explored in controllable text generation.

EnergonAI: An Inference System for 10-100 Billion Parameter Transformer Models

no code implementations • 6 Sep 2022 • Jiangsu Du, Ziming Liu, Jiarui Fang, Shenggui Li, Yongbin Li, Yutong Lu, Yang You

Although the AI community has expanded the model scale to the trillion parameter level, the practical deployment of 10-100 billion parameter models is still uncertain due to the latency, throughput, and memory constraints.

Prompt Vision Transformer for Domain Generalization

1 code implementation • 18 Aug 2022 • Zangwei Zheng, Xiangyu Yue, Kai Wang, Yang You

In this paper, we propose a novel approach DoPrompt based on prompt learning to embed the knowledge of source domains in domain prompts for target domain prediction.

A Frequency-aware Software Cache for Large Recommendation System Embeddings

1 code implementation • 8 Aug 2022 • Jiarui Fang, Geng Zhang, Jiatong Han, Shenggui Li, Zhengda Bian, Yongbin Li, Jin Liu, Yang You

Deep learning recommendation models (DLRMs) have been widely applied in Internet companies.

Active-Learning-as-a-Service: An Automatic and Efficient MLOps System for Data-Centric AI

2 code implementations • 19 Jul 2022 • Yizheng Huang, Huaizheng Zhang, Yuanming Li, Chiew Tong Lau, Yang You

In data-centric AI, active learning (AL) plays a vital role, but current AL tools 1) require users to manually select AL strategies, and 2) cannot perform AL tasks efficiently.

Divide to Adapt: Mitigating Confirmation Bias for Domain Adaptation of Black-Box Predictors

1 code implementation • 28 May 2022 • Jianfei Yang, Xiangyu Peng, Kai Wang, Zheng Zhu, Jiashi Feng, Lihua Xie, Yang You

Domain Adaptation of Black-box Predictors (DABP) aims to learn a model on an unlabeled target domain supervised by a black-box predictor trained on a source domain.

FaceMAE: Privacy-Preserving Face Recognition via Masked Autoencoders

1 code implementation • 23 May 2022 • Kai Wang, Bo Zhao, Xiangyu Peng, Zheng Zhu, Jiankang Deng, Xinchao Wang, Hakan Bilen, Yang You

Firstly, randomly masked face images are used to train the reconstruction module in FaceMAE.

A Study on Transformer Configuration and Training Objective

no code implementations • 21 May 2022 • Fuzhao Xue, Jianghai Chen, Aixin Sun, Xiaozhe Ren, Zangwei Zheng, Xiaoxin He, Yongming Chen, Xin Jiang, Yang You

In this paper, we revisit these conventional configurations.

Ranked #101 on Image Classification on ImageNet

Reliable Label Correction is a Good Booster When Learning with Extremely Noisy Labels

1 code implementation • 30 Apr 2022 • Kai Wang, Xiangyu Peng, Shuo Yang, Jianfei Yang, Zheng Zhu, Xinchao Wang, Yang You

This paradigm, however, is prone to significant degeneration under heavy label noise, as the number of clean samples is too small for conventional methods to behave well.

CowClip: Reducing CTR Prediction Model Training Time from 12 hours to 10 minutes on 1 GPU

1 code implementation • 13 Apr 2022 • Zangwei Zheng, Pengtai Xu, Xuan Zou, Da Tang, Zhen Li, Chenguang Xi, Peng Wu, Leqi Zou, Yijie Zhu, Ming Chen, Xiangzhuo Ding, Fuzhao Xue, Ziheng Qin, Youlong Cheng, Yang You

Our experiments show that previous scaling rules fail in the training of CTR prediction neural networks.

Modeling Motion with Multi-Modal Features for Text-Based Video Segmentation

1 code implementation • CVPR 2022 • Wangbo Zhao, Kai Wang, Xiangxiang Chu, Fuzhao Xue, Xinchao Wang, Yang You

Text-based video segmentation aims to segment the target object in a video based on a describing sentence.

Ranked #10 on Referring Expression Segmentation on A2D Sentences

Optical Flow Estimation

Referring Expression Segmentation

+4

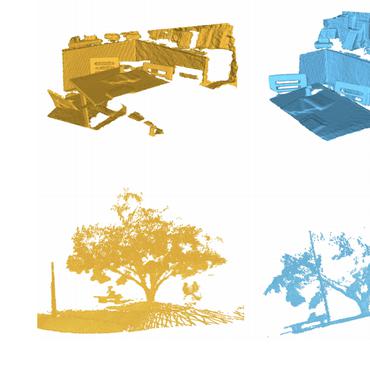

CPPF: Towards Robust Category-Level 9D Pose Estimation in the Wild

1 code implementation • CVPR 2022 • Yang You, Ruoxi Shi, Weiming Wang, Cewu Lu

Drawing inspiration from traditional point pair features (PPFs), in this paper we design a novel Category-level PPF (CPPF) voting method to achieve accurate, robust and generalizable 9D pose estimation in the wild.

Ranked #8 on 6D Pose Estimation using RGBD on REAL275

Towards Efficient and Scalable Sharpness-Aware Minimization

2 code implementations • CVPR 2022 • Yong Liu, Siqi Mai, Xiangning Chen, Cho-Jui Hsieh, Yang You

Recently, Sharpness-Aware Minimization (SAM), which connects the geometry of the loss landscape and generalization, has demonstrated significant performance boosts on training large-scale models such as vision transformers.

CAFE: Learning to Condense Dataset by Aligning Features

2 code implementations • CVPR 2022 • Kai Wang, Bo Zhao, Xiangyu Peng, Zheng Zhu, Shuo Yang, Shuo Wang, Guan Huang, Hakan Bilen, Xinchao Wang, Yang You

Dataset condensation aims at reducing the network training effort through condensing a cumbersome training set into a compact synthetic one.

FastFold: Reducing AlphaFold Training Time from 11 Days to 67 Hours

1 code implementation • 2 Mar 2022 • Shenggan Cheng, Xuanlei Zhao, Guangyang Lu, Jiarui Fang, Zhongming Yu, Tian Zheng, Ruidong Wu, Xiwen Zhang, Jian Peng, Yang You

In this work, we present FastFold, an efficient implementation of AlphaFold for both training and inference.

Sky Computing: Accelerating Geo-distributed Computing in Federated Learning

1 code implementation • 24 Feb 2022 • Jie Zhu, Shenggui Li, Yang You

In this paper, we propose Sky Computing, a load-balanced model parallelism framework to adaptively allocate the weights to devices.

Crafting Better Contrastive Views for Siamese Representation Learning

1 code implementation • CVPR 2022 • Xiangyu Peng, Kai Wang, Zheng Zhu, Mang Wang, Yang You

For high performance Siamese representation learning, one of the keys is to design good contrastive pairs.

One Student Knows All Experts Know: From Sparse to Dense

no code implementations • 26 Jan 2022 • Fuzhao Xue, Xiaoxin He, Xiaozhe Ren, Yuxuan Lou, Yang You

Mixture-of-experts (MoE) is a powerful sparse architecture including multiple experts.

Understanding Pixel-level 2D Image Semantics with 3D Keypoint Knowledge Engine

no code implementations • 21 Nov 2021 • Yang You, Chengkun Li, Yujing Lou, Zhoujun Cheng, Liangwei Li, Lizhuang Ma, Weiming Wang, Cewu Lu

Pixel-level 2D object semantic understanding is an important topic in computer vision and could help machines deeply understand objects (e.g., functionality and affordance) in our daily life.

Large-Scale Deep Learning Optimizations: A Comprehensive Survey

no code implementations • 1 Nov 2021 • Xiaoxin He, Fuzhao Xue, Xiaozhe Ren, Yang You

Deep learning has achieved promising results on a wide spectrum of AI applications.

Colossal-AI: A Unified Deep Learning System For Large-Scale Parallel Training

1 code implementation • 28 Oct 2021 • Shenggui Li, Hongxin Liu, Zhengda Bian, Jiarui Fang, Haichen Huang, Yuliang Liu, Boxiang Wang, Yang You

The success of Transformer models has pushed the deep learning model scale to billions of parameters.

MReD: A Meta-Review Dataset for Structure-Controllable Text Generation

1 code implementation • Findings (ACL) 2022 • Chenhui Shen, Liying Cheng, Ran Zhou, Lidong Bing, Yang You, Luo Si

A more useful text generator should leverage both the input text and the control signal to guide the generation, which can only be built with a deep understanding of the domain knowledge.

Sharpness-Aware Minimization in Large-Batch Training: Training Vision Transformer In Minutes

no code implementations • 29 Sep 2021 • Yong Liu, Siqi Mai, Xiangning Chen, Cho-Jui Hsieh, Yang You

Large-batch training is an important direction for distributed machine learning, which can improve the utilization of large-scale clusters and therefore accelerate the training process.

Solving infinite-horizon Dec-POMDPs using Finite State Controllers within JESP

no code implementations • 17 Sep 2021 • Yang You, Vincent Thomas, Francis Colas, Olivier Buffet

This paper looks at solving collaborative planning problems formalized as Decentralized POMDPs (Dec-POMDPs) by searching for Nash equilibria, i.e., situations where each agent's policy is a best response to the other agents' (fixed) policies.

Cross-token Modeling with Conditional Computation

no code implementations • 5 Sep 2021 • Yuxuan Lou, Fuzhao Xue, Zangwei Zheng, Yang You

Mixture-of-Experts (MoE), a conditional computation architecture, has achieved promising performance by scaling a local module (i.e., the feed-forward network) of the transformer.

PatrickStar: Parallel Training of Pre-trained Models via Chunk-based Memory Management

1 code implementation • 12 Aug 2021 • Jiarui Fang, Zilin Zhu, Shenggui Li, Hui Su, Yang Yu, Jie Zhou, Yang You

PatrickStar uses the CPU-GPU heterogeneous memory space to store the model data.

Online Evolutionary Batch Size Orchestration for Scheduling Deep Learning Workloads in GPU Clusters

no code implementations • 8 Aug 2021 • Zhengda Bian, Shenggui Li, Wei Wang, Yang You

ONES automatically manages the elasticity of each job based on the training batch size, so as to maximize GPU utilization and improve scheduling efficiency.

Go Wider Instead of Deeper

1 code implementation • 25 Jul 2021 • Fuzhao Xue, Ziji Shi, Futao Wei, Yuxuan Lou, Yong Liu, Yang You

To achieve better performance with fewer trainable parameters, recent methods are proposed to go shallower by parameter sharing or model compressing along with the depth.

Ranked #660 on Image Classification on ImageNet

Concurrent Adversarial Learning for Large-Batch Training

no code implementations • ICLR 2022 • Yong Liu, Xiangning Chen, Minhao Cheng, Cho-Jui Hsieh, Yang You

Current methods usually use extensive data augmentation to increase the batch size, but we found that the performance gain from data augmentation decreases as batch size increases, and data augmentation becomes insufficient after a certain point.

Tesseract: Parallelize the Tensor Parallelism Efficiently

no code implementations • 30 May 2021 • Boxiang Wang, Qifan Xu, Zhengda Bian, Yang You

It increases efficiency by reducing communication overhead and lowers the memory required for each GPU.

Maximizing Parallelism in Distributed Training for Huge Neural Networks

no code implementations • 30 May 2021 • Zhengda Bian, Qifan Xu, Boxiang Wang, Yang You

Our work is the first to introduce a 3-dimensional model parallelism for expediting huge language models.

Sequence Parallelism: Long Sequence Training from System Perspective

no code implementations • 26 May 2021 • Shenggui Li, Fuzhao Xue, Chaitanya Baranwal, Yongbin Li, Yang You

That is, with sparse attention, our sequence parallelism enables us to train transformers with infinitely long sequences.

An Efficient Training Approach for Very Large Scale Face Recognition

1 code implementation • CVPR 2022 • Kai Wang, Shuo Wang, Panpan Zhang, Zhipeng Zhou, Zheng Zhu, Xiaobo Wang, Xiaojiang Peng, Baigui Sun, Hao Li, Yang You

This method adopts a Dynamic Class Pool (DCP) for storing and updating the identities' features dynamically, which can be regarded as a substitute for the FC layer.

Ranked #1 on Face Verification on IJB-C (training dataset metric)

An Efficient 2D Method for Training Super-Large Deep Learning Models

1 code implementation • 12 Apr 2021 • Qifan Xu, Shenggui Li, Chaoyu Gong, Yang You

However, due to memory constraints, model parallelism must be utilized to host large models that would otherwise not fit into the memory of a single device.

Skeleton Merger: an Unsupervised Aligned Keypoint Detector

1 code implementation • CVPR 2021 • Ruoxi Shi, Zhengrong Xue, Yang You, Cewu Lu

In this paper, we propose an unsupervised aligned keypoint detector, Skeleton Merger, which utilizes skeletons to reconstruct objects.

PRIN/SPRIN: On Extracting Point-wise Rotation Invariant Features

2 code implementations • 24 Feb 2021 • Yang You, Yujing Lou, Ruoxi Shi, Qi Liu, Yu-Wing Tai, Lizhuang Ma, Weiming Wang, Cewu Lu

Spherical Voxel Convolution and Point Re-sampling are proposed to extract rotation invariant features for each point.

UKPGAN: A General Self-Supervised Keypoint Detector

1 code implementation • CVPR 2022 • Yang You, Wenhai Liu, Yanjie Ze, Yong-Lu Li, Weiming Wang, Cewu Lu

Keypoint detection is an essential component of object registration and alignment.

Canonical Voting: Towards Robust Oriented Bounding Box Detection in 3D Scenes

1 code implementation • CVPR 2022 • Yang You, Zelin Ye, Yujing Lou, Chengkun Li, Yong-Lu Li, Lizhuang Ma, Weiming Wang, Cewu Lu

In this work, we disentangle the direct offset into Local Canonical Coordinates (LCC), box scales and box orientations.

Training EfficientNets at Supercomputer Scale: 83% ImageNet Top-1 Accuracy in One Hour

no code implementations • 30 Oct 2020 • Arissa Wongpanich, Hieu Pham, James Demmel, Mingxing Tan, Quoc Le, Yang You, Sameer Kumar

EfficientNets are a family of state-of-the-art image classification models based on efficiently scaled convolutional neural networks.

How much progress have we made in neural network training? A New Evaluation Protocol for Benchmarking Optimizers

no code implementations • 19 Oct 2020 • Yuanhao Xiong, Xuanqing Liu, Li-Cheng Lan, Yang You, Si Si, Cho-Jui Hsieh

For end-to-end efficiency, unlike previous work that assumes random hyperparameter tuning, which over-emphasizes the tuning time, we propose to evaluate with a bandit hyperparameter tuning strategy.

LOCx2, a Low-latency, Low-overhead, 2 x 5.12-Gbps Transmitter ASIC for the ATLAS Liquid Argon Calorimeter Trigger Upgrade

no code implementations • 18 Sep 2020 • Le Xiao, Xiaoting Li, Datao Gong, Jinghong Chen, Di Guo, Huiqin He, Suen Hou, Guangming Huang, Chonghan Liu, Tiankuan Liu, Xiangming Sun, Ping-Kun Teng, Bozorgmehr Vosooghi, Annie C. Xiang, Jingbo Ye, Yang You, Zhiheng Zuo

In this paper, we present the design and test results of LOCx2, a transmitter ASIC for the ATLAS Liquid Argon Calorimeter trigger upgrade.

Instrumentation and Detectors

The Limit of the Batch Size

no code implementations • 15 Jun 2020 • Yang You, Yuhui Wang, Huan Zhang, Zhao Zhang, James Demmel, Cho-Jui Hsieh

For the first time, we scale the batch size on ImageNet to at least an order of magnitude larger than all previous work, and provide detailed studies on the performance of many state-of-the-art optimization schemes under this setting.

Semantic Correspondence via 2D-3D-2D Cycle

1 code implementation • 20 Apr 2020 • Yang You, Chengkun Li, Yujing Lou, Zhoujun Cheng, Lizhuang Ma, Cewu Lu, Weiming Wang

Visual semantic correspondence is an important topic in computer vision and could help machines understand objects in our daily life.

KeypointNet: A Large-scale 3D Keypoint Dataset Aggregated from Numerous Human Annotations

1 code implementation • CVPR 2020 • Yang You, Yujing Lou, Chengkun Li, Zhoujun Cheng, Liangwei Li, Lizhuang Ma, Weiming Wang, Cewu Lu

Detecting 3D object keypoints is of great interest to the areas of both graphics and computer vision.

Human Correspondence Consensus for 3D Object Semantic Understanding

1 code implementation • ECCV 2020 • Yujing Lou, Yang You, Chengkun Li, Zhoujun Cheng, Liangwei Li, Lizhuang Ma, Weiming Wang, Cewu Lu

Semantic understanding of 3D objects is crucial in many applications such as object manipulation.

Auto-Precision Scaling for Distributed Deep Learning

1 code implementation • 20 Nov 2019 • Ruobing Han, James Demmel, Yang You

Our experimental results show that for many applications, APS can train state-of-the-art models with 8-bit gradients with no or only a tiny accuracy loss (<0.05%).

Large Batch Optimization for Deep Learning: Training BERT in 76 minutes

24 code implementations • ICLR 2020 • Yang You, Jing Li, Sashank Reddi, Jonathan Hseu, Sanjiv Kumar, Srinadh Bhojanapalli, Xiaodan Song, James Demmel, Kurt Keutzer, Cho-Jui Hsieh

In this paper, we first study a principled layerwise adaptation strategy to accelerate training of deep neural networks using large mini-batches.

Ranked #11 on Question Answering on SQuAD1.1 dev (F1 metric)

Large-Batch Training for LSTM and Beyond

1 code implementation • 24 Jan 2019 • Yang You, Jonathan Hseu, Chris Ying, James Demmel, Kurt Keutzer, Cho-Jui Hsieh

LEGW enables the Sqrt Scaling scheme to be useful in practice, and as a result we achieve much better results than with the Linear Scaling learning rate scheme.

Combinational Q-Learning for Dou Di Zhu

1 code implementation • 24 Jan 2019 • Yang You, Liangwei Li, Baisong Guo, Weiming Wang, Cewu Lu

Deep reinforcement learning (DRL) has gained a lot of attention in recent years, and has been proven to be able to play Atari games and Go at or above human levels.

Pointwise Rotation-Invariant Network with Adaptive Sampling and 3D Spherical Voxel Convolution

1 code implementation • 23 Nov 2018 • Yang You, Yujing Lou, Qi Liu, Yu-Wing Tai, Lizhuang Ma, Cewu Lu, Weiming Wang

Point cloud analysis without pose priors is very challenging in real applications, as the orientations of point clouds are often unknown.

Large Batch Training of Convolutional Networks with Layer-wise Adaptive Rate Scaling

no code implementations • ICLR 2018 • Boris Ginsburg, Igor Gitman, Yang You

Using LARS, we scaled AlexNet and ResNet-50 to a batch size of 16K.

ImageNet Training in Minutes

1 code implementation • 14 Sep 2017 • Yang You, Zhao Zhang, Cho-Jui Hsieh, James Demmel, Kurt Keutzer

If we can make full use of the supercomputer for DNN training, we should be able to finish the 90-epoch ResNet-50 training in one minute.

Large Batch Training of Convolutional Networks

12 code implementations • 13 Aug 2017 • Yang You, Igor Gitman, Boris Ginsburg

Using LARS, we scaled AlexNet up to a batch size of 8K, and ResNet-50 to a batch size of 32K without loss in accuracy.

Asynchronous Parallel Greedy Coordinate Descent

no code implementations • NeurIPS 2016 • Yang You, Xiangru Lian, Ji Liu, Hsiang-Fu Yu, Inderjit S. Dhillon, James Demmel, Cho-Jui Hsieh

In this paper, we propose and study an Asynchronous parallel Greedy Coordinate Descent (Asy-GCD) algorithm for minimizing a smooth function with bounded constraints.