Search Results for author: Yaohui Jin

Found 35 papers, 6 papers with code

Modeling Content Importance for Summarization with Pre-trained Language Models

no code implementations • EMNLP 2020 • Liqiang Xiao, Lu Wang, Hao He, Yaohui Jin

Previous work is mostly based on statistical methods that estimate word-level salience, which does not consider semantics and larger context when quantifying importance.

To What Extent Do Natural Language Understanding Datasets Correlate to Logical Reasoning? A Method for Diagnosing Logical Reasoning.

no code implementations • COLING 2022 • Yitian Li, Jidong Tian, Wenqing Chen, Caoyun Fan, Hao He, Yaohui Jin

In this paper, we propose a systematic method to diagnose the correlations between an NLU dataset and a specific skill, and then take a fundamental reasoning skill, logical reasoning, as an example for analysis.

Diagnosing the First-Order Logical Reasoning Ability Through LogicNLI

no code implementations • EMNLP 2021 • Jidong Tian, Yitian Li, Wenqing Chen, Liqiang Xiao, Hao He, Yaohui Jin

Recently, language models (LMs) have achieved significant performance on many NLU tasks, which has spurred widespread interest in their possible applications in scientific and social areas.

Exploring Logically Dependent Multi-task Learning with Causal Inference

no code implementations • EMNLP 2020 • Wenqing Chen, Jidong Tian, Liqiang Xiao, Hao He, Yaohui Jin

In the field of causal inference, GS in our model is essentially a counterfactual reasoning process, trying to estimate the causal effect between tasks and utilize it to improve MTL.

UAlign: Pushing the Limit of Template-free Retrosynthesis Prediction with Unsupervised SMILES Alignment

1 code implementation • 25 Mar 2024 • Kaipeng Zeng, Xin Zhao, Yu Zhang, Fan Nie, Xiaokang Yang, Yaohui Jin, Yanyan Xu

Single-step retrosynthesis prediction, a crucial step in the planning process, has witnessed a surge in interest in recent years due to advancements in AI for science.

Look Before You Leap: Problem Elaboration Prompting Improves Mathematical Reasoning in Large Language Models

no code implementations • 24 Feb 2024 • Haoran Liao, Jidong Tian, Shaohua Hu, Hao He, Yaohui Jin

Large language models (LLMs) still grapple with complex tasks like mathematical reasoning.

AgentBoard: An Analytical Evaluation Board of Multi-turn LLM Agents

2 code implementations • 24 Jan 2024 • Chang Ma, Junlei Zhang, Zhihao Zhu, Cheng Yang, Yujiu Yang, Yaohui Jin, Zhenzhong Lan, Lingpeng Kong, Junxian He

Evaluating large language models (LLMs) as general-purpose agents is essential for understanding their capabilities and facilitating their integration into practical applications.

Swap-based Deep Reinforcement Learning for Facility Location Problems in Networks

no code implementations • 25 Dec 2023 • Wenxuan Guo, Yanyan Xu, Yaohui Jin

Facility location problems on graphs are ubiquitous in the real world and hold significant importance, yet their resolution is often impeded by NP-hardness.

Modeling Complex Mathematical Reasoning via Large Language Model based MathAgent

1 code implementation • 14 Dec 2023 • Haoran Liao, Qinyi Du, Shaohua Hu, Hao He, Yanyan Xu, Jidong Tian, Yaohui Jin

Large language models (LLMs) face challenges in solving complex mathematical problems that require comprehensive capacities to parse the statements, associate domain knowledge, perform compound logical reasoning, and integrate the intermediate rationales.

Comparable Demonstrations are Important in In-Context Learning: A Novel Perspective on Demonstration Selection

no code implementations • 12 Dec 2023 • Caoyun Fan, Jidong Tian, Yitian Li, Hao He, Yaohui Jin

In-Context Learning (ICL) is an important paradigm for adapting Large Language Models (LLMs) to downstream tasks through a few demonstrations.

Can Large Language Models Serve as Rational Players in Game Theory? A Systematic Analysis

no code implementations • 9 Dec 2023 • Caoyun Fan, Jindou Chen, Yaohui Jin, Hao He

With the high alignment between the behavior of Large Language Models (LLMs) and humans, a promising research direction is to employ LLMs as substitutes for humans in game experiments, enabling social science research.

Chain-of-Thought Tuning: Masked Language Models can also Think Step By Step in Natural Language Understanding

no code implementations • 18 Oct 2023 • Caoyun Fan, Jidong Tian, Yitian Li, Wenqing Chen, Hao He, Yaohui Jin

From the perspective of CoT, CoTT's two-step framework enables MLMs to implement task decomposition; CoTT's prompt tuning allows intermediate steps to be used in natural language form.

Accurate Use of Label Dependency in Multi-Label Text Classification Through the Lens of Causality

no code implementations • 11 Oct 2023 • Caoyun Fan, Wenqing Chen, Jidong Tian, Yitian Li, Hao He, Yaohui Jin

In this study, we attribute the bias to the model's misuse of label dependency, i.e., the model tends to utilize the correlation shortcut in label dependency rather than fusing text information and label dependency for prediction.

Unlock the Potential of Counterfactually-Augmented Data in Out-Of-Distribution Generalization

no code implementations • 10 Oct 2023 • Caoyun Fan, Wenqing Chen, Jidong Tian, Yitian Li, Hao He, Yaohui Jin

Counterfactually-Augmented Data (CAD) -- minimal editing of sentences to flip the corresponding labels -- has the potential to improve the Out-Of-Distribution (OOD) generalization capability of language models, as CAD induces language models to exploit domain-independent causal features and exclude spurious correlations.

Improving the Out-Of-Distribution Generalization Capability of Language Models: Counterfactually-Augmented Data is not Enough

no code implementations • 18 Feb 2023 • Caoyun Fan, Wenqing Chen, Jidong Tian, Yitian Li, Hao He, Yaohui Jin

Counterfactually-Augmented Data (CAD) has the potential to improve language models' Out-Of-Distribution (OOD) generalization capability, as CAD induces language models to exploit causal features and exclude spurious correlations.

MaxGNR: A Dynamic Weight Strategy via Maximizing Gradient-to-Noise Ratio for Multi-Task Learning

no code implementations • 18 Feb 2023 • Caoyun Fan, Wenqing Chen, Jidong Tian, Yitian Li, Hao He, Yaohui Jin

A series of studies point out that too much gradient noise leads to performance degradation in STL; in the MTL scenario, however, Inter-Task Gradient Noise (ITGN) is an additional source of gradient noise for each task, which can also affect the optimization process.

Contrast with Major Classifier Vectors for Federated Medical Relation Extraction with Heterogeneous Label Distribution

no code implementations • 13 Jan 2023 • Chunhui Du, Hao He, Yaohui Jin

Federated medical relation extraction enables multiple clients to train a deep network collaboratively without sharing their raw medical data.

Human Mobility Prediction with Causal and Spatial-constrained Multi-task Network

1 code implementation • 12 Jun 2022 • Zongyuan Huang, Shengyuan Xu, Menghan Wang, Hansi Wu, Yanyan Xu, Yaohui Jin

Next location prediction is one decisive task in individual human mobility modeling and is usually viewed as sequence modeling, solved with Markov or RNN-based methods.

Open Source MagicData-RAMC: A Rich Annotated Mandarin Conversational (RAMC) Speech Dataset

no code implementations • 31 Mar 2022 • Zehui Yang, Yifan Chen, Lei Luo, Runyan Yang, Lingxuan Ye, Gaofeng Cheng, Ji Xu, Yaohui Jin, Qingqing Zhang, Pengyuan Zhang, Lei Xie, Yonghong Yan

As a Mandarin speech dataset designed for dialog scenarios with high quality and rich annotations, MagicData-RAMC enriches the data diversity in the Mandarin speech community and allows extensive research on a series of speech-related tasks, including automatic speech recognition, speaker diarization, topic detection, keyword search, text-to-speech, etc.

Machine Learning Methods in Solving the Boolean Satisfiability Problem

no code implementations • 2 Mar 2022 • Wenxuan Guo, Junchi Yan, Hui-Ling Zhen, Xijun Li, Mingxuan Yuan, Yaohui Jin

This paper reviews the recent literature on solving the Boolean satisfiability problem (SAT), an archetypal NP-complete problem, with the help of machine learning techniques.

End-to-End Conversational Search for Online Shopping with Utterance Transfer

no code implementations • EMNLP 2021 • Liqiang Xiao, Jun Ma, Xin Luna Dong, Pascual Martinez-Gomez, Nasser Zalmout, Wei Chen, Tong Zhao, Hao He, Yaohui Jin

Successful conversational search systems can present a natural, adaptive, and interactive shopping experience for online shopping customers.

Enhancing Unsupervised Anomaly Detection with Score-Guided Network

2 code implementations • 10 Sep 2021 • Zongyuan Huang, Baohua Zhang, Guoqiang Hu, Longyuan Li, Yanyan Xu, Yaohui Jin

Anomaly detection plays a crucial role in various real-world applications, including healthcare and finance systems.

De-Confounded Variational Encoder-Decoder for Logical Table-to-Text Generation

no code implementations • ACL 2021 • Wenqing Chen, Jidong Tian, Yitian Li, Hao He, Yaohui Jin

The task remains challenging, as deep learning models often generate linguistically fluent but logically inconsistent text.

Dependent Multi-Task Learning with Causal Intervention for Image Captioning

no code implementations • 18 May 2021 • Wenqing Chen, Jidong Tian, Caoyun Fan, Hao He, Yaohui Jin

The intermediate task would help the model better understand the visual features and thus alleviate the content inconsistency problem.

Anomaly Detection of Time Series with Smoothness-Inducing Sequential Variational Auto-Encoder

no code implementations • 2 Feb 2021 • Longyuan Li, Junchi Yan, Haiyang Wang, Yaohui Jin

Our model is based on the Variational Auto-Encoder (VAE), and its backbone is a Recurrent Neural Network that captures latent temporal structures of time series for both the generative model and the inference model.

Synergetic Learning of Heterogeneous Temporal Sequences for Multi-Horizon Probabilistic Forecasting

no code implementations • 31 Jan 2021 • Longyuan Li, Jihai Zhang, Junchi Yan, Yaohui Jin, Yunhao Zhang, Yanjie Duan, Guangjian Tian

Time series are ubiquitous across applications such as transportation, finance, and healthcare.

Learning Interpretable Deep State Space Model for Probabilistic Time Series Forecasting

no code implementations • 31 Jan 2021 • Longyuan Li, Junchi Yan, Xiaokang Yang, Yaohui Jin

We propose a deep state space model for probabilistic time series forecasting whereby the non-linear emission model and transition model are parameterized by networks and the dependency is modeled by recurrent neural nets.

A Semantically Consistent and Syntactically Variational Encoder-Decoder Framework for Paraphrase Generation

no code implementations • COLING 2020 • Wenqing Chen, Jidong Tian, Liqiang Xiao, Hao He, Yaohui Jin

In this paper, we propose a semantically consistent and syntactically variational encoder-decoder framework, which uses adversarial learning to ensure that the syntactic latent variable is semantic-free.

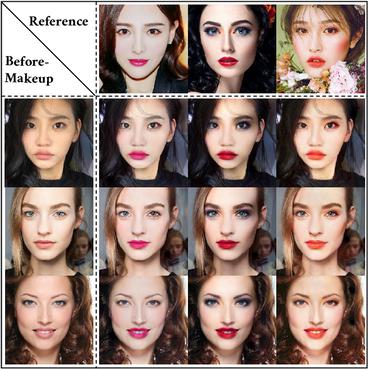

Disentangled Makeup Transfer with Generative Adversarial Network

1 code implementation • 2 Jul 2019 • Honglun Zhang, Wenqing Chen, Hao He, Yaohui Jin

Facial makeup transfer is a widely-used technology that aims to transfer the makeup style from a reference face image to a non-makeup face.

Show, Attend and Translate: Unpaired Multi-Domain Image-to-Image Translation with Visual Attention

no code implementations • 19 Nov 2018 • Honglun Zhang, Wenqing Chen, Jidong Tian, Yongkun Wang, Yaohui Jin

Recently, unpaired multi-domain image-to-image translation has attracted great interest and made remarkable progress, where a label vector is utilized to indicate multi-domain information.

MCapsNet: Capsule Network for Text with Multi-Task Learning

no code implementations • EMNLP 2018 • Liqiang Xiao, Honglun Zhang, Wenqing Chen, Yongkun Wang, Yaohui Jin

Multi-task learning has an ability to share the knowledge among related tasks and implicitly increase the training data.

Learning What to Share: Leaky Multi-Task Network for Text Classification

no code implementations • COLING 2018 • Liqiang Xiao, Honglun Zhang, Wenqing Chen, Yongkun Wang, Yaohui Jin

Neural network based multi-task learning, which focuses on sharing knowledge among tasks by linking some layers to enhance performance, has achieved great success on many NLP problems.

Multi-Task Label Embedding for Text Classification

no code implementations • EMNLP 2018 • Honglun Zhang, Liqiang Xiao, Wenqing Chen, Yongkun Wang, Yaohui Jin

Multi-task learning in text classification leverages implicit correlations among related tasks to extract common features and yield performance gains.

A Generalized Recurrent Neural Architecture for Text Classification with Multi-Task Learning

no code implementations • 10 Jul 2017 • Honglun Zhang, Liqiang Xiao, Yongkun Wang, Yaohui Jin

Multi-task learning leverages potential correlations among related tasks to extract common features and yield performance gains.

OMNIRank: Risk Quantification for P2P Platforms with Deep Learning

no code implementations • 27 Apr 2017 • Honglun Zhang, Haiyang Wang, Xiaming Chen, Yongkun Wang, Yaohui Jin

P2P lending presents an innovative and flexible alternative to conventional lending institutions like banks, where lenders and borrowers directly make transactions and benefit each other without complicated verifications.