Search Results for author: Yoshitaka Ushiku

Found 49 papers, 25 papers with code

Crystalformer: Infinitely Connected Attention for Periodic Structure Encoding

no code implementations • 18 Mar 2024 • Tatsunori Taniai, Ryo Igarashi, Yuta Suzuki, Naoya Chiba, Kotaro Saito, Yoshitaka Ushiku, Kanta Ono

Predicting physical properties of materials from their crystal structures is a fundamental problem in materials science.

TNF: Tri-branch Neural Fusion for Multimodal Medical Data Classification

no code implementations • 4 Mar 2024 • Tong Zheng, Shusaku Sone, Yoshitaka Ushiku, Yuki Oba, Jiaxin Ma

This paper presents a Tri-branch Neural Fusion (TNF) approach designed for classifying multimodal medical images and tabular data.

Unsupervised LLM Adaptation for Question Answering

no code implementations • 16 Feb 2024 • Kuniaki Saito, Kihyuk Sohn, Chen-Yu Lee, Yoshitaka Ushiku

In this task, we leverage a pre-trained LLM, a publicly available QA dataset (source data), and unlabeled documents from the target domain.

A Transformer Model for Symbolic Regression towards Scientific Discovery

1 code implementation • 7 Dec 2023 • Florian Lalande, Yoshitomo Matsubara, Naoya Chiba, Tatsunori Taniai, Ryo Igarashi, Yoshitaka Ushiku

Once trained, we apply our best model to the SRSD datasets (Symbolic Regression for Scientific Discovery datasets) which yields state-of-the-art results using the normalized tree-based edit distance, at no extra computational cost.

Exo2EgoDVC: Dense Video Captioning of Egocentric Procedural Activities Using Web Instructional Videos

no code implementations • 28 Nov 2023 • Takehiko Ohkawa, Takuma Yagi, Taichi Nishimura, Ryosuke Furuta, Atsushi Hashimoto, Yoshitaka Ushiku, Yoichi Sato

We propose a novel benchmark for cross-view knowledge transfer of dense video captioning, adapting models from web instructional videos with exocentric views to an egocentric view.

Vision-Language Interpreter for Robot Task Planning

1 code implementation • 2 Nov 2023 • Keisuke Shirai, Cristian C. Beltran-Hernandez, Masashi Hamaya, Atsushi Hashimoto, Shohei Tanaka, Kento Kawaharazuka, Kazutoshi Tanaka, Yoshitaka Ushiku, Shinsuke Mori

By generating PDs from language instruction and scene observation, we can drive symbolic planners in a language-guided framework.

WeaveNet for Approximating Two-sided Matching Problems

1 code implementation • 19 Oct 2023 • Shusaku Sone, Jiaxin Ma, Atsushi Hashimoto, Naoya Chiba, Yoshitaka Ushiku

Matching, a task to optimally assign limited resources under constraints, is a fundamental technology for society.

A Critical Look at the Current Usage of Foundation Model for Dense Recognition Task

no code implementations • 6 Jul 2023 • Shiqi Yang, Atsushi Hashimoto, Yoshitaka Ushiku

In recent years, large models trained on huge amounts of cross-modality data, usually termed foundation models, have achieved conspicuous accomplishments in many fields, such as image recognition and generation.

Noisy Universal Domain Adaptation via Divergence Optimization for Visual Recognition

1 code implementation • 20 Apr 2023 • Qing Yu, Atsushi Hashimoto, Yoshitaka Ushiku

To transfer the knowledge learned from a labeled source domain to an unlabeled target domain, many studies have worked on universal domain adaptation (UniDA), where there is no constraint on the label sets of the source domain and target domain.

Neural Structure Fields with Application to Crystal Structure Autoencoders

1 code implementation • 8 Dec 2022 • Naoya Chiba, Yuta Suzuki, Tatsunori Taniai, Ryo Igarashi, Yoshitaka Ushiku, Kotaro Saito, Kanta Ono

We propose neural structure fields (NeSF) as an accurate and practical approach for representing crystal structures using neural networks.

SRSD: Rethinking Datasets of Symbolic Regression for Scientific Discovery

1 code implementation • NeurIPS 2022 AI for Science: Progress and Promises • Yoshitomo Matsubara, Naoya Chiba, Ryo Igarashi, Yoshitaka Ushiku

Symbolic Regression (SR) is a task of recovering mathematical expressions from given data and has been attracting attention from the research community to discuss its potential for scientific discovery.

Recipe Generation from Unsegmented Cooking Videos

no code implementations • 21 Sep 2022 • Taichi Nishimura, Atsushi Hashimoto, Yoshitaka Ushiku, Hirotaka Kameko, Shinsuke Mori

However, unlike DVC, in recipe generation, recipe story awareness is crucial, and a model should extract an appropriate number of events in the correct order and generate accurate sentences based on them.

Visual Recipe Flow: A Dataset for Learning Visual State Changes of Objects with Recipe Flows

no code implementations • COLING 2022 • Keisuke Shirai, Atsushi Hashimoto, Taichi Nishimura, Hirotaka Kameko, Shuhei Kurita, Yoshitaka Ushiku, Shinsuke Mori

We present a new multimodal dataset called Visual Recipe Flow, which enables us to learn each cooking action result in a recipe text.

Rethinking Symbolic Regression Datasets and Benchmarks for Scientific Discovery

1 code implementation • 21 Jun 2022 • Yoshitomo Matsubara, Naoya Chiba, Ryo Igarashi, Yoshitaka Ushiku

For each of the 120 SRSD datasets, we carefully review the properties of the formula and its variables to design reasonably realistic sampling ranges of values so that our new SRSD datasets can be used for evaluating the potential of SRSD such as whether or not an SR method can (re)discover physical laws from such datasets.

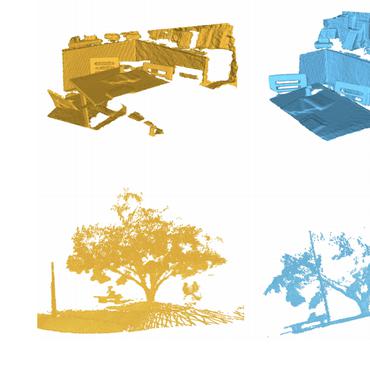

3D Point Cloud Registration with Learning-based Matching Algorithm

1 code implementation • 4 Feb 2022 • Rintaro Yanagi, Atsushi Hashimoto, Shusaku Sone, Naoya Chiba, Jiaxin Ma, Yoshitaka Ushiku

Instead of only optimizing the feature extractor for a matching algorithm, we propose a learning-based matching module optimized to the jointly-trained feature extractor.

WeaveNet: A Differentiable Solver for Non-linear Assignment Problems

no code implementations • 29 Sep 2021 • Shusaku Sone, Atsushi Hashimoto, Jiaxin Ma, Rintaro Yanagi, Naoya Chiba, Yoshitaka Ushiku

Assignment, a task to match a limited number of elements, is a fundamental problem in informatics.

Foreground-Aware Stylization and Consensus Pseudo-Labeling for Domain Adaptation of First-Person Hand Segmentation

1 code implementation • 6 Jul 2021 • Takehiko Ohkawa, Takuma Yagi, Atsushi Hashimoto, Yoshitaka Ushiku, Yoichi Sato

We validated our method on domain adaptation of hand segmentation from real and simulation images.

WeaveNet for Approximating Assignment Problems

no code implementations • NeurIPS 2021 • Shusaku Sone, Jiaxin Ma, Atsushi Hashimoto, Naoya Chiba, Yoshitaka Ushiku

Assignment, a task to match a limited number of elements, is a fundamental problem in informatics.

Removing Word-Level Spurious Alignment between Images and Pseudo-Captions in Unsupervised Image Captioning

1 code implementation • EACL 2021 • Ukyo Honda, Yoshitaka Ushiku, Atsushi Hashimoto, Taro Watanabe, Yuji Matsumoto

Unsupervised image captioning is a challenging task that aims at generating captions without the supervision of image-sentence pairs, but only with images and sentences drawn from different sources and object labels detected from the images.

Divergence Optimization for Noisy Universal Domain Adaptation

1 code implementation • CVPR 2021 • Qing Yu, Atsushi Hashimoto, Yoshitaka Ushiku

Hence, we consider a new realistic setting called Noisy UniDA, in which classifiers are trained with noisy labeled data from the source domain and unlabeled data with an unknown class distribution from the target domain.

Visual Grounding Annotation of Recipe Flow Graph

no code implementations • LREC 2020 • Taichi Nishimura, Suzushi Tomori, Hayato Hashimoto, Atsushi Hashimoto, Yoko Yamakata, Jun Harashima, Yoshitaka Ushiku, Shinsuke Mori

Visual grounding is provided as bounding boxes to image sequences of recipes, and each bounding box is linked to an element of the workflow.

Crowd Density Forecasting by Modeling Patch-based Dynamics

no code implementations • 22 Nov 2019 • Hiroaki Minoura, Ryo Yonetani, Mai Nishimura, Yoshitaka Ushiku

To address this task, we have developed the patch-based density forecasting network (PDFN), which enables forecasting over a sequence of crowd density maps describing how crowded each location is in each video frame.

Decentralized Learning of Generative Adversarial Networks from Non-iid Data

no code implementations • 23 May 2019 • Ryo Yonetani, Tomohiro Takahashi, Atsushi Hashimoto, Yoshitaka Ushiku

This work addresses a new problem that learns generative adversarial networks (GANs) from multiple data collections that are each i) owned separately by different clients and ii) drawn from a non-identical distribution that comprises different classes.

Pose Graph Optimization for Unsupervised Monocular Visual Odometry

no code implementations • 15 Mar 2019 • Yang Li, Yoshitaka Ushiku, Tatsuya Harada

In this paper, we propose to leverage graph optimization and loop closure detection to overcome limitations of unsupervised learning based monocular visual odometry.

Strong-Weak Distribution Alignment for Adaptive Object Detection

2 code implementations • CVPR 2019 • Kuniaki Saito, Yoshitaka Ushiku, Tatsuya Harada, Kate Saenko

This motivates us to propose a novel method for detector adaptation based on strong local alignment and weak global alignment.

Ranked #2 on Unsupervised Domain Adaptation on SIM10K to BDD100K

Multichannel Semantic Segmentation with Unsupervised Domain Adaptation

1 code implementation • 11 Dec 2018 • Kohei Watanabe, Kuniaki Saito, Yoshitaka Ushiku, Tatsuya Harada

The other is a multitask learning approach that uses depth images as outputs.

Conditional Video Generation Using Action-Appearance Captions

no code implementations • 4 Dec 2018 • Shohei Yamamoto, Antonio Tejero-de-Pablos, Yoshitaka Ushiku, Tatsuya Harada

The results demonstrate that CFT-GAN is able to successfully generate videos containing the action and appearances indicated in the captions.

Generating Easy-to-Understand Referring Expressions for Target Identifications

2 code implementations • ICCV 2019 • Mikihiro Tanaka, Takayuki Itamochi, Kenichi Narioka, Ikuro Sato, Yoshitaka Ushiku, Tatsuya Harada

Moreover, we regard easily understood sentences as those that humans comprehend correctly and quickly.

Class-Distinct and Class-Mutual Image Generation with GANs

2 code implementations • 27 Nov 2018 • Takuhiro Kaneko, Yoshitaka Ushiku, Tatsuya Harada

To overcome this limitation, we address a novel problem called class-distinct and class-mutual image generation, in which the goal is to construct a generator that can capture between-class relationships and generate an image selectively conditioned on the class specificity.

Label-Noise Robust Generative Adversarial Networks

3 code implementations • CVPR 2019 • Takuhiro Kaneko, Yoshitaka Ushiku, Tatsuya Harada

To remedy this, we propose a novel family of GANs called label-noise robust GANs (rGANs), which, by incorporating a noise transition model, can learn a clean label conditional generative distribution even when training labels are noisy.

Visual Question Generation for Class Acquisition of Unknown Objects

1 code implementation • ECCV 2018 • Kohei Uehara, Antonio Tejero-de-Pablos, Yoshitaka Ushiku, Tatsuya Harada

In this paper, we propose a method for generating questions about unknown objects in an image, as a means to get information about classes that have not been learned.

Customized Image Narrative Generation via Interactive Visual Question Generation and Answering

no code implementations • CVPR 2018 • Andrew Shin, Yoshitaka Ushiku, Tatsuya Harada

The image description task has been invariably examined in a static manner with qualitative presumptions held to be universally applicable, regardless of the scope or target of the description.

Open Set Domain Adaptation by Backpropagation

4 code implementations • ECCV 2018 • Kuniaki Saito, Shohei Yamamoto, Yoshitaka Ushiku, Tatsuya Harada

Almost all of them are proposed for a closed-set scenario, where the source and the target domain completely share the class of their samples.

Viewpoint-aware Video Summarization

no code implementations • CVPR 2018 • Atsushi Kanehira, Luc van Gool, Yoshitaka Ushiku, Tatsuya Harada

To satisfy these requirements (A)-(C) simultaneously, we proposed a novel video summarization method from multiple groups of videos.

MFNet: Towards real-time semantic segmentation for autonomous vehicles with multi-spectral scenes

no code implementations • IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) 2017 • Qishen Ha, Kohei Watanabe, Takumi Karasawa, Yoshitaka Ushiku, Tatsuya Harada

We benchmarked our method by creating an RGB-Thermal dataset in which thermal and RGB images are combined.

Ranked #5 on Thermal Image Segmentation on KP day-night

Maximum Classifier Discrepancy for Unsupervised Domain Adaptation

8 code implementations • CVPR 2018 • Kuniaki Saito, Kohei Watanabe, Yoshitaka Ushiku, Tatsuya Harada

To solve these problems, we introduce a new approach that attempts to align distributions of source and target by utilizing the task-specific decision boundaries.

Ranked #3 on Domain Adaptation on HMDBfull-to-UCF

Image Classification

Multi-Source Unsupervised Domain Adaptation +2

Between-class Learning for Image Classification

3 code implementations • CVPR 2018 • Yuji Tokozume, Yoshitaka Ushiku, Tatsuya Harada

Second, we propose a mixing method that treats the images as waveforms, which leads to a further improvement in performance.

Learning from Between-class Examples for Deep Sound Recognition

5 code implementations • ICLR 2018 • Yuji Tokozume, Yoshitaka Ushiku, Tatsuya Harada

Deep learning methods have achieved high performance in sound recognition tasks.

Hierarchical Video Generation from Orthogonal Information: Optical Flow and Texture

3 code implementations • 27 Nov 2017 • Katsunori Ohnishi, Shohei Yamamoto, Yoshitaka Ushiku, Tatsuya Harada

FlowGAN generates optical flow, which contains only the edge and motion of the videos to be generated.

Neural 3D Mesh Renderer

3 code implementations • CVPR 2018 • Hiroharu Kato, Yoshitaka Ushiku, Tatsuya Harada

Using this renderer, we perform single-image 3D mesh reconstruction with silhouette image supervision and our system outperforms the existing voxel-based approach.

Ranked #6 on 3D Object Reconstruction on Data3D−R2N2 (Avg F1 metric)

Adversarial Dropout Regularization

no code implementations • ICLR 2018 • Kuniaki Saito, Yoshitaka Ushiku, Tatsuya Harada, Kate Saenko

However, a drawback of this approach is that the critic simply labels the generated features as in-domain or not, without considering the boundaries between classes.

Ranked #2 on Synthetic-to-Real Translation on Syn2Real-C

Melody Generation for Pop Music via Word Representation of Musical Properties

1 code implementation • 31 Oct 2017 • Andrew Shin, Leopold Crestel, Hiroharu Kato, Kuniaki Saito, Katsunori Ohnishi, Masataka Yamaguchi, Masahiro Nakawaki, Yoshitaka Ushiku, Tatsuya Harada

Automatic melody generation for pop music has been a long-time aspiration for both AI researchers and musicians.

Sound • Multimedia • Audio and Speech Processing

Spatio-temporal Person Retrieval via Natural Language Queries

no code implementations • ICCV 2017 • Masataka Yamaguchi, Kuniaki Saito, Yoshitaka Ushiku, Tatsuya Harada

In this paper, we address the problem of spatio-temporal person retrieval from multiple videos using a natural language query, in which we output a tube (i.e., a sequence of bounding boxes) which encloses the person described by the query.

Asymmetric Tri-training for Unsupervised Domain Adaptation

1 code implementation • ICML 2017 • Kuniaki Saito, Yoshitaka Ushiku, Tatsuya Harada

Deep-layered models trained on a large number of labeled samples boost the accuracy of many tasks.

Ranked #5 on Sentiment Analysis on Multi-Domain Sentiment Dataset

DeMIAN: Deep Modality Invariant Adversarial Network

no code implementations • 23 Dec 2016 • Kuniaki Saito, Yusuke Mukuta, Yoshitaka Ushiku, Tatsuya Harada

To obtain the common representations under such a situation, we propose to make the distributions over different modalities similar in the learned representations, namely modality-invariant representations.

The Color of the Cat is Gray: 1 Million Full-Sentences Visual Question Answering (FSVQA)

no code implementations • 21 Sep 2016 • Andrew Shin, Yoshitaka Ushiku, Tatsuya Harada

The Visual Question Answering (VQA) task has showcased a new stage of interaction between language and vision, two of the most pivotal components of artificial intelligence.

DualNet: Domain-Invariant Network for Visual Question Answering

no code implementations • 20 Jun 2016 • Kuniaki Saito, Andrew Shin, Yoshitaka Ushiku, Tatsuya Harada

The visual question answering (VQA) task not only bridges the gap between images and language, but also requires that specific contents within the image be understood as indicated by the linguistic context of the question, in order to generate accurate answers.

Common Subspace for Model and Similarity: Phrase Learning for Caption Generation From Images

no code implementations • ICCV 2015 • Yoshitaka Ushiku, Masataka Yamaguchi, Yusuke Mukuta, Tatsuya Harada

In order to overcome the shortage of training samples, CoSMoS obtains a subspace in which (a) all feature vectors associated with the same phrase are mapped as mutually close, (b) classifiers for each phrase are learned, and (c) training samples are shared among co-occurring phrases.

Three Guidelines of Online Learning for Large-Scale Visual Recognition

no code implementations • CVPR 2014 • Yoshitaka Ushiku, Masatoshi Hidaka, Tatsuya Harada

In this paper, we would like to evaluate online learning algorithms for large-scale visual recognition using state-of-the-art features which are preselected and held fixed.