Search Results for author: Yujia Wang

Found 12 papers, 5 papers with code

Learn to Copy from the Copying History: Correlational Copy Network for Abstractive Summarization

1 code implementation • EMNLP 2021 • Haoran Li, Song Xu, Peng Yuan, Yujia Wang, Youzheng Wu, Xiaodong He, BoWen Zhou

It thereby takes advantage of prior copying distributions and, at each time step, explicitly encourages the model to copy the input word that is relevant to the previously copied one.

Ranked #11 on Abstractive Text Summarization on CNN / Daily Mail (using extra training data)

Can LLMs Learn from Previous Mistakes? Investigating LLMs' Errors to Boost for Reasoning

no code implementations • 29 Mar 2024 • Yongqi Tong, Dawei Li, Sizhe Wang, Yujia Wang, Fei Teng, Jingbo Shang

We conduct a series of experiments to show that LLMs can benefit from mistakes in both directions.

Federated Learning with Projected Trajectory Regularization

no code implementations • 22 Dec 2023 • Tiejin Chen, Yuanpu Cao, Yujia Wang, Cho-Jui Hsieh, Jinghui Chen

Specifically, FedPTR allows local clients or the server to optimize an auxiliary (synthetic) dataset that mimics the learning dynamics of the recent model update and utilizes it to project the next-step model trajectory for local training regularization.

ToxicChat: Unveiling Hidden Challenges of Toxicity Detection in Real-World User-AI Conversation

no code implementations • 26 Oct 2023 • Zi Lin, Zihan Wang, Yongqi Tong, Yangkun Wang, Yuxin Guo, Yujia Wang, Jingbo Shang

This benchmark contains the rich, nuanced phenomena that can be tricky for current toxicity detection models to identify, revealing a significant domain difference compared to social media content.

Communication-Efficient Adaptive Federated Learning

1 code implementation • 5 May 2022 • Yujia Wang, Lu Lin, Jinghui Chen

We show that in the nonconvex stochastic optimization setting, our proposed FedCAMS achieves the same convergence rate of $O(\frac{1}{\sqrt{TKm}})$ as its non-compressed counterparts.

Communication-Compressed Adaptive Gradient Method for Distributed Nonconvex Optimization

no code implementations • 1 Nov 2021 • Yujia Wang, Lu Lin, Jinghui Chen

We prove that the proposed communication-efficient distributed adaptive gradient method converges to the first-order stationary point with the same iteration complexity as uncompressed vanilla AMSGrad in the stochastic nonconvex optimization setting.

K-PLUG: Knowledge-injected Pre-trained Language Model for Natural Language Understanding and Generation in E-Commerce

1 code implementation • Findings (EMNLP) 2021 • Song Xu, Haoran Li, Peng Yuan, Yujia Wang, Youzheng Wu, Xiaodong He, Ying Liu, BoWen Zhou

K-PLUG achieves new state-of-the-art results on a suite of domain-specific NLP tasks, including product knowledge base completion, abstractive product summarization, and multi-turn dialogue, and significantly outperforms baselines across the board, demonstrating that the proposed method effectively learns a diverse set of domain-specific knowledge for both language understanding and generation tasks.

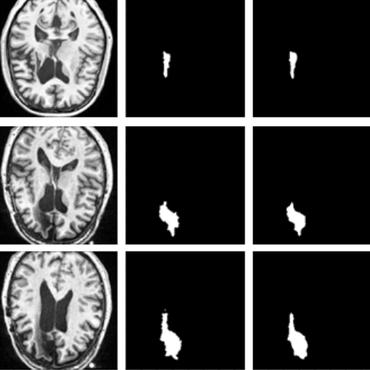

D2A U-Net: Automatic Segmentation of COVID-19 Lesions from CT Slices with Dilated Convolution and Dual Attention Mechanism

1 code implementation • 10 Feb 2021 • Xiangyu Zhao, Peng Zhang, Fan Song, Guangda Fan, Yangyang Sun, Yujia Wang, Zheyuan Tian, Luqi Zhang, Guanglei Zhang

In this paper we propose a dilated dual attention U-Net (D2A U-Net) for COVID-19 lesion segmentation in CT slices based on dilated convolution and a novel dual attention mechanism to address the issues above.

K-PLUG: Knowledge-injected Pre-trained Language Model for Natural Language Understanding and Generation

1 code implementation • 1 Jan 2021 • Song Xu, Haoran Li, Peng Yuan, Yujia Wang, Youzheng Wu, Xiaodong He, Ying Liu, BoWen Zhou

K-PLUG achieves new state-of-the-art results on a suite of domain-specific NLP tasks, including product knowledge base completion, abstractive product summarization, and multi-turn dialogue, and significantly outperforms baselines across the board, demonstrating that the proposed method effectively learns a diverse set of domain-specific knowledge for both language understanding and generation tasks.

SAU-Net: Efficient 3D Spine MRI Segmentation Using Inter-Slice Attention

no code implementations • MIDL 2019 • Yichi Zhang, Lin Yuan, Yujia Wang, Jicong Zhang

Accurate segmentation of spine Magnetic Resonance Imaging (MRI) is in high demand for morphological research, quantitative analysis, and the identification of diseases such as spinal canal stenosis, disc herniation, and degeneration.

PNS: Population-Guided Novelty Search for Reinforcement Learning in Hard Exploration Environments

no code implementations • 26 Nov 2018 • Qihao Liu, Yujia Wang, Xiaofeng Liu

To balance exploration and exploitation, Novelty Search (NS) is employed in every chief agent to encourage policies with high novelty while maximizing per-episode performance.

3D Face Synthesis Driven by Personality Impression

no code implementations • 27 Sep 2018 • Yining Lang, Wei Liang, Yujia Wang, Lap-Fai Yu

In this paper, we propose a novel approach to synthesize 3D faces based on personality impression for creating virtual characters.

Graphics