Search Results for author: Zheng Lin

Found 56 papers, 28 papers with code

Target Really Matters: Target-aware Contrastive Learning and Consistency Regularization for Few-shot Stance Detection

1 code implementation • COLING 2022 • Rui Liu, Zheng Lin, Huishan Ji, Jiangnan Li, Peng Fu, Weiping Wang

Despite the significant progress on this task, it is extremely time-consuming and budget-unfriendly to collect sufficient high-quality labeled data for every new target under fully-supervised learning, whereas unlabeled data can be collected more easily.

TAKE: Topic-shift Aware Knowledge sElection for Dialogue Generation

1 code implementation • COLING 2022 • Chenxu Yang, Zheng Lin, Jiangnan Li, Fandong Meng, Weiping Wang, Lanrui Wang, Jie Zhou

The knowledge selector generally constructs a query based on the dialogue context and selects the most appropriate knowledge to help response generation.

CLIO: Role-interactive Multi-event Head Attention Network for Document-level Event Extraction

no code implementations • COLING 2022 • Yubing Ren, Yanan Cao, Fang Fang, Ping Guo, Zheng Lin, Wei Ma, Yi Liu

Transforming the large amounts of unstructured text on the Internet into structured event knowledge is a critical, yet unsolved goal of NLP, especially when addressing document-level text.

Slot Dependency Modeling for Zero-Shot Cross-Domain Dialogue State Tracking

no code implementations • COLING 2022 • Qingyue Wang, Yanan Cao, Piji Li, Yanhe Fu, Zheng Lin, Li Guo

Zero-shot learning for Dialogue State Tracking (DST) focuses on generalizing to an unseen domain without the expense of collecting in-domain data.

Past, Present, and Future: Conversational Emotion Recognition through Structural Modeling of Psychological Knowledge

1 code implementation • Findings (EMNLP) 2021 • Jiangnan Li, Zheng Lin, Peng Fu, Weiping Wang

Furthermore, we utilize CSK to enrich edges with knowledge representations and process the SKAIG with a graph transformer.

Ranked #9 on Emotion Recognition in Conversation on DailyDialog

IFViT: Interpretable Fixed-Length Representation for Fingerprint Matching via Vision Transformer

no code implementations • 12 Apr 2024 • Yuhang Qiu, Honghui Chen, Xingbo Dong, Zheng Lin, Iman Yi Liao, Massimo Tistarelli, Zhe Jin

The first module, an interpretable dense registration module, establishes a Vision Transformer (ViT)-based Siamese Network to capture long-range dependencies and the global context in fingerprint pairs.

Automated Federated Pipeline for Parameter-Efficient Fine-Tuning of Large Language Models

no code implementations • 9 Apr 2024 • Zihan Fang, Zheng Lin, Zhe Chen, Xianhao Chen, Yue Gao, Yuguang Fang

Recently, there has been a surge in the development of advanced intelligent generative content (AIGC), especially large language models (LLMs).

Minimize Quantization Output Error with Bias Compensation

1 code implementation • 2 Apr 2024 • Cheng Gong, Haoshuai Zheng, Mengting Hu, Zheng Lin, Deng-Ping Fan, Yuzhi Zhang, Tao Li

Quantization is a promising method that reduces memory usage and computational intensity of Deep Neural Networks (DNNs), but it often leads to significant output errors that hinder model deployment.

FedAC: An Adaptive Clustered Federated Learning Framework for Heterogeneous Data

no code implementations • 25 Mar 2024 • Yuxin Zhang, Haoyu Chen, Zheng Lin, Zhe Chen, Jin Zhao

Clustered federated learning (CFL) is proposed to mitigate the performance deterioration stemming from data heterogeneity in federated learning (FL) by grouping similar clients for cluster-wise model training.

AdaptSFL: Adaptive Split Federated Learning in Resource-constrained Edge Networks

no code implementations • 19 Mar 2024 • Zheng Lin, Guanqiao Qu, Wei Wei, Xianhao Chen, Kin K. Leung

In this paper, we provide a convergence analysis of SFL which quantifies the impact of model splitting (MS) and client-side model aggregation (MA) on the learning performance, serving as a theoretical foundation.

Previously on the Stories: Recap Snippet Identification for Story Reading

no code implementations • 11 Feb 2024 • Jiangnan Li, Qiujing Wang, Liyan Xu, Wenjie Pang, Mo Yu, Zheng Lin, Weiping Wang, Jie Zhou

Similar to the "previously-on" scenes in TV shows, recaps can help book reading by recalling the readers' memory about the important elements in previous texts to better understand the ongoing plot.

Are Large Language Models Table-based Fact-Checkers?

no code implementations • 4 Feb 2024 • Hangwen Zhang, Qingyi Si, Peng Fu, Zheng Lin, Weiping Wang

Finally, we analyze some possible directions to promote the accuracy of TFV via LLMs, which is beneficial to further research of table reasoning.

Object Attribute Matters in Visual Question Answering

no code implementations • 20 Dec 2023 • Peize Li, Qingyi Si, Peng Fu, Zheng Lin, Yan Wang

In this paper, we propose a novel VQA approach from the perspective of utilizing object attributes, aiming to achieve better object-level visual-language alignment and multimodal scene understanding.

TeMO: Towards Text-Driven 3D Stylization for Multi-Object Meshes

no code implementations • 7 Dec 2023 • Xuying Zhang, Bo-Wen Yin, Yuming Chen, Zheng Lin, Yunheng Li, Qibin Hou, Ming-Ming Cheng

In particular, a cross-modal graph is constructed to accurately align the object points and noun phrases decoupled from the 3D mesh and the textual description.

Enhancing Empathetic and Emotion Support Dialogue Generation with Prophetic Commonsense Inference

no code implementations • 26 Nov 2023 • Lanrui Wang, Jiangnan Li, Chenxu Yang, Zheng Lin, Weiping Wang

The interest in Empathetic and Emotional Support conversations among the public has significantly increased.

FedSN: A Novel Federated Learning Framework over LEO Satellite Networks

no code implementations • 2 Nov 2023 • Zheng Lin, Zhe Chen, Zihan Fang, Xianhao Chen, Xiong Wang, Yue Gao

To this end, we propose FedSN as a general FL framework to tackle the above challenges, and fully explore data diversity on LEO satellites.

Multi-level Adaptive Contrastive Learning for Knowledge Internalization in Dialogue Generation

no code implementations • 13 Oct 2023 • Chenxu Yang, Zheng Lin, Lanrui Wang, Chong Tian, Liang Pang, Jiangnan Li, Qirong Ho, Yanan Cao, Weiping Wang

Knowledge-grounded dialogue generation aims to mitigate the issue of text degeneration by incorporating external knowledge to supplement the context.

An Empirical Study of Instruction-tuning Large Language Models in Chinese

1 code implementation • 11 Oct 2023 • Qingyi Si, Tong Wang, Zheng Lin, Xu Zhang, Yanan Cao, Weiping Wang

This paper will release a powerful Chinese LLM that is comparable to ChatGLM.

Pushing Large Language Models to the 6G Edge: Vision, Challenges, and Opportunities

no code implementations • 28 Sep 2023 • Zheng Lin, Guanqiao Qu, Qiyuan Chen, Xianhao Chen, Zhe Chen, Kaibin Huang

In both aspects, considering the inherent resource limitations at the edge, we discuss various cutting-edge techniques, including split learning/inference, parameter-efficient fine-tuning, quantization, and parameter-sharing inference, to facilitate the efficient deployment of LLMs.

Optimal Resource Allocation for U-Shaped Parallel Split Learning

no code implementations • 17 Aug 2023 • Song Lyu, Zheng Lin, Guanqiao Qu, Xianhao Chen, Xiaoxia Huang, Pan Li

In this paper, we develop a novel parallel U-shaped split learning and devise the optimal resource optimization scheme to improve the performance of edge networks.

Split Learning in 6G Edge Networks

no code implementations • 21 Jun 2023 • Zheng Lin, Guanqiao Qu, Xianhao Chen, Kaibin Huang

With the proliferation of distributed edge computing resources, the 6G mobile network will evolve into a network for connected intelligence.

Referring Camouflaged Object Detection

1 code implementation • 13 Jun 2023 • Xuying Zhang, Bowen Yin, Zheng Lin, Qibin Hou, Deng-Ping Fan, Ming-Ming Cheng

We consider the problem of referring camouflaged object detection (Ref-COD), a new task that aims to segment specified camouflaged objects based on a small set of referring images with salient target objects.

DiffusEmp: A Diffusion Model-Based Framework with Multi-Grained Control for Empathetic Response Generation

no code implementations • 2 Jun 2023 • Guanqun Bi, Lei Shen, Yanan Cao, Meng Chen, Yuqiang Xie, Zheng Lin, Xiaodong He

Empathy is a crucial factor in open-domain conversations, as it naturally shows one's care for and understanding of others.

Divide, Conquer, and Combine: Mixture of Semantic-Independent Experts for Zero-Shot Dialogue State Tracking

no code implementations • 1 Jun 2023 • Qingyue Wang, Liang Ding, Yanan Cao, Yibing Zhan, Zheng Lin, Shi Wang, Dacheng Tao, Li Guo

Zero-shot transfer learning for Dialogue State Tracking (DST) helps to handle a variety of task-oriented dialogue domains without the cost of collecting in-domain data.

Combo of Thinking and Observing for Outside-Knowledge VQA

1 code implementation • 10 May 2023 • Qingyi Si, Yuchen Mo, Zheng Lin, Huishan Ji, Weiping Wang

Some existing solutions draw external knowledge into the cross-modality space which overlooks the much vaster textual knowledge in natural-language space, while others transform the image into a text that further fuses with the textual knowledge into the natural-language space and completely abandons the use of visual features.

Efficient Parallel Split Learning over Resource-constrained Wireless Edge Networks

no code implementations • 26 Mar 2023 • Zheng Lin, Guangyu Zhu, Yiqin Deng, Xianhao Chen, Yue Gao, Kaibin Huang, Yuguang Fang

The increasingly deeper neural networks hinder the democratization of privacy-enhancing distributed learning, such as federated learning (FL), to resource-constrained devices.

Co-Salient Object Detection with Co-Representation Purification

1 code implementation • 14 Mar 2023 • Ziyue Zhu, Zhao Zhang, Zheng Lin, Xing Sun, Ming-Ming Cheng

Such irrelevant information in the co-representation interferes with its locating of co-salient objects.

COST-EFF: Collaborative Optimization of Spatial and Temporal Efficiency with Slenderized Multi-exit Language Models

1 code implementation • 27 Oct 2022 • Bowen Shen, Zheng Lin, Yuanxin Liu, Zhengxiao Liu, Lei Wang, Weiping Wang

Motivated by such considerations, we propose a collaborative optimization for PLMs that integrates static model compression and dynamic inference acceleration.

Question-Interlocutor Scope Realized Graph Modeling over Key Utterances for Dialogue Reading Comprehension

no code implementations • 26 Oct 2022 • Jiangnan Li, Mo Yu, Fandong Meng, Zheng Lin, Peng Fu, Weiping Wang, Jie Zhou

Although these tasks are effective, there are still urgent problems: (1) randomly masking speakers regardless of the question cannot map the speaker mentioned in the question to the corresponding speaker in the dialogue, and ignores the speaker-centric nature of utterances.

Compressing And Debiasing Vision-Language Pre-Trained Models for Visual Question Answering

1 code implementation • 26 Oct 2022 • Qingyi Si, Yuanxin Liu, Zheng Lin, Peng Fu, Weiping Wang

To this end, we systematically study the design of a training and compression pipeline to search the subnetworks, as well as the assignment of sparsity to different modality-specific modules.

Empathetic Dialogue Generation via Sensitive Emotion Recognition and Sensible Knowledge Selection

1 code implementation • 21 Oct 2022 • Lanrui Wang, Jiangnan Li, Zheng Lin, Fandong Meng, Chenxu Yang, Weiping Wang, Jie Zhou

We use a fine-grained encoding strategy which is more sensitive to the emotion dynamics (emotion flow) in the conversations to predict the emotion-intent characteristic of response.

A Win-win Deal: Towards Sparse and Robust Pre-trained Language Models

1 code implementation • 11 Oct 2022 • Yuanxin Liu, Fandong Meng, Zheng Lin, Jiangnan Li, Peng Fu, Yanan Cao, Weiping Wang, Jie Zhou

In response to the efficiency problem, recent studies show that dense PLMs can be replaced with sparse subnetworks without hurting the performance.

Towards Robust Visual Question Answering: Making the Most of Biased Samples via Contrastive Learning

1 code implementation • 10 Oct 2022 • Qingyi Si, Yuanxin Liu, Fandong Meng, Zheng Lin, Peng Fu, Yanan Cao, Weiping Wang, Jie Zhou

However, these models reveal a trade-off that the improvements on OOD data severely sacrifice the performance on the in-distribution (ID) data (which is dominated by the biased samples).

Language Prior Is Not the Only Shortcut: A Benchmark for Shortcut Learning in VQA

1 code implementation • 10 Oct 2022 • Qingyi Si, Fandong Meng, Mingyu Zheng, Zheng Lin, Yuanxin Liu, Peng Fu, Yanan Cao, Weiping Wang, Jie Zhou

To overcome this limitation, we propose a new dataset that considers varying types of shortcuts by constructing different distribution shifts in multiple OOD test sets.

Neutral Utterances are Also Causes: Enhancing Conversational Causal Emotion Entailment with Social Commonsense Knowledge

1 code implementation • 2 May 2022 • Jiangnan Li, Fandong Meng, Zheng Lin, Rui Liu, Peng Fu, Yanan Cao, Weiping Wang, Jie Zhou

Conversational Causal Emotion Entailment aims to detect causal utterances for a non-neutral targeted utterance from a conversation.

Ranked #1 on Causal Emotion Entailment on RECCON

Neural Label Search for Zero-Shot Multi-Lingual Extractive Summarization

no code implementations • ACL 2022 • Ruipeng Jia, Xingxing Zhang, Yanan Cao, Shi Wang, Zheng Lin, Furu Wei

In zero-shot multilingual extractive text summarization, a model is typically trained on an English summarization dataset and then applied to summarization datasets of other languages.

Learning to Win Lottery Tickets in BERT Transfer via Task-agnostic Mask Training

1 code implementation • NAACL 2022 • Yuanxin Liu, Fandong Meng, Zheng Lin, Peng Fu, Yanan Cao, Weiping Wang, Jie Zhou

Firstly, we discover that the success of magnitude pruning can be attributed to the preserved pre-training performance, which correlates with the downstream transferability.

Image Harmonization by Matching Regional References

no code implementations • 10 Apr 2022 • Ziyue Zhu, Zhao Zhang, Zheng Lin, Ruiqi Wu, Zhi Chai, Chun-Le Guo

To achieve visual consistency in composite images, recent image harmonization methods typically summarize the appearance pattern of global background and apply it to the global foreground without location discrepancy.

Interactive Style Transfer: All is Your Palette

no code implementations • 25 Mar 2022 • Zheng Lin, Zhao Zhang, Kang-Rui Zhang, Bo Ren, Ming-Ming Cheng

Our IST method can serve as a brush, dip style from anywhere, and then paint to any region of the target content image.

FocusCut: Diving Into a Focus View in Interactive Segmentation

2 code implementations • CVPR 2022 • Zheng Lin, Zheng-Peng Duan, Zhao Zhang, Chun-Le Guo, Ming-Ming Cheng

However, the global view makes the model lose focus from later clicks, and is not in line with user intentions.

Ranked #5 on Interactive Segmentation on SBD

Check It Again: Progressive Visual Question Answering via Visual Entailment

1 code implementation • ACL 2021 • Qingyi Si, Zheng Lin, Mingyu Zheng, Peng Fu, Weiping Wang

Besides, they only explore the interaction between image and question, ignoring the semantics of candidate answers.

Marginal Utility Diminishes: Exploring the Minimum Knowledge for BERT Knowledge Distillation

1 code implementation • ACL 2021 • Yuanxin Liu, Fandong Meng, Zheng Lin, Weiping Wang, Jie Zhou

In this paper, however, we observe that although distilling the teacher's hidden state knowledge (HSK) is helpful, the performance gain (marginal utility) diminishes quickly as more HSK is distilled.

Check It Again: Progressive Visual Question Answering via Visual Entailment

1 code implementation • 8 Jun 2021 • Qingyi Si, Zheng Lin, Mingyu Zheng, Peng Fu, Weiping Wang

Besides, they only explore the interaction between image and question, ignoring the semantics of candidate answers.

ROSITA: Refined BERT cOmpreSsion with InTegrAted techniques

1 code implementation • 21 Mar 2021 • Yuanxin Liu, Zheng Lin, Fengcheng Yuan

Based on the empirical findings, our best compressed model, dubbed Refined BERT cOmpreSsion with InTegrAted techniques (ROSITA), is $7.5\times$ smaller than BERT while maintaining $98.5\%$ of the performance on five tasks of the GLUE benchmark, outperforming the previous BERT compression methods with a similar parameter budget.

A Hierarchical Transformer with Speaker Modeling for Emotion Recognition in Conversation

1 code implementation • 29 Dec 2020 • Jiangnan Li, Zheng Lin, Peng Fu, Qingyi Si, Weiping Wang

It can be regarded as a personalized and interactive emotion recognition task, which is supposed to consider not only the semantic information of text but also the influences from speakers.

Ranked #34 on Emotion Recognition in Conversation on IEMOCAP

Learning Class-Transductive Intent Representations for Zero-shot Intent Detection

1 code implementation • 3 Dec 2020 • Qingyi Si, Yuanxin Liu, Peng Fu, Zheng Lin, Jiangnan Li, Weiping Wang

A critical problem behind these limitations is that the representations of unseen intents cannot be learned in the training stage.

Modeling Intra and Inter-modality Incongruity for Multi-Modal Sarcasm Detection

no code implementations • Findings of the Association for Computational Linguistics 2020 • Hongliang Pan, Zheng Lin, Peng Fu, Yatao Qi, Weiping Wang

Inspired by this, we propose a BERT architecture-based model, which concentrates on both intra and inter-modality incongruity for multi-modal sarcasm detection.

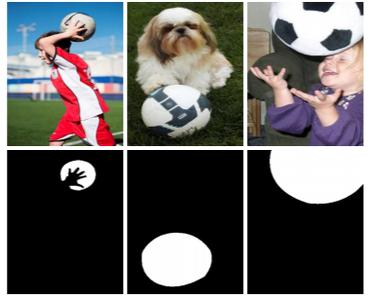

Re-thinking Co-Salient Object Detection

2 code implementations • 7 Jul 2020 • Deng-Ping Fan, Tengpeng Li, Zheng Lin, Ge-Peng Ji, Dingwen Zhang, Ming-Ming Cheng, Huazhu Fu, Jianbing Shen

CoSOD is an emerging and rapidly growing extension of salient object detection (SOD), which aims to detect the co-occurring salient objects in a group of images.

Ranked #7 on Co-Salient Object Detection on CoCA

Interactive Image Segmentation With First Click Attention

2 code implementations • CVPR 2020 • Zheng Lin, Zhao Zhang, Lin-Zhuo Chen, Ming-Ming Cheng, Shao-Ping Lu

In the task of interactive image segmentation, users initially click one point to segment the main body of the target object and then provide more points on mislabeled regions iteratively for a precise segmentation.

Bilateral Attention Network for RGB-D Salient Object Detection

1 code implementation • 30 Apr 2020 • Zhao Zhang, Zheng Lin, Jun Xu, Wenda Jin, Shao-Ping Lu, Deng-Ping Fan

To better explore salient information in both foreground and background regions, this paper proposes a Bilateral Attention Network (BiANet) for the RGB-D SOD task.

Ranked #3 on RGB-D Salient Object Detection on RGBD135

Keyphrase Prediction With Pre-trained Language Model

no code implementations • 22 Apr 2020 • Rui Liu, Zheng Lin, Weiping Wang

Considering the different characteristics of extractive and generative methods, we propose to divide the keyphrase prediction into two subtasks, i.e., present keyphrase extraction (PKE) and absent keyphrase generation (AKG), to fully exploit their respective advantages.

Spatial Information Guided Convolution for Real-Time RGBD Semantic Segmentation

1 code implementation • 9 Apr 2020 • Lin-Zhuo Chen, Zheng Lin, Ziqin Wang, Yong-Liang Yang, Ming-Ming Cheng

S-Conv is competent to infer the sampling offset of the convolution kernel guided by the 3D spatial information, helping the convolutional layer adjust the receptive field and adapt to geometric transformations.

Ranked #20 on Semantic Segmentation on SUN-RGBD (using extra training data)

Unsupervised Pre-training for Natural Language Generation: A Literature Review

no code implementations • 13 Nov 2019 • Yuanxin Liu, Zheng Lin

They are classified into architecture-based methods and strategy-based methods, based on their way of handling the above obstacle.

Ranking and Sampling in Open-Domain Question Answering

no code implementations • IJCNLP 2019 • Yanfu Xu, Zheng Lin, Yuanxin Liu, Rui Liu, Weiping Wang, Dan Meng

Open-domain question answering (OpenQA) aims to answer questions based on a number of unlabeled paragraphs.

Rethinking RGB-D Salient Object Detection: Models, Data Sets, and Large-Scale Benchmarks

2 code implementations • 15 Jul 2019 • Deng-Ping Fan, Zheng Lin, Jia-Xing Zhao, Yun Liu, Zhao Zhang, Qibin Hou, Menglong Zhu, Ming-Ming Cheng

The use of RGB-D information for salient object detection has been extensively explored in recent years.

Ranked #4 on RGB-D Salient Object Detection on RGBD135
