Search Results for author: Zhengzhe Liu

Found 19 papers, 12 papers with code

CNS-Edit: 3D Shape Editing via Coupled Neural Shape Optimization

no code implementations • 4 Feb 2024 • Jingyu Hu, Ka-Hei Hui, Zhengzhe Liu, Hao Zhang, Chi-Wing Fu

First, we design the coupled neural shape (CNS) representation to support 3D shape editing.

Make-A-Shape: a Ten-Million-scale 3D Shape Model

no code implementations • 20 Jan 2024 • Ka-Hei Hui, Aditya Sanghi, Arianna Rampini, Kamal Rahimi Malekshan, Zhengzhe Liu, Hooman Shayani, Chi-Wing Fu

We then make the representation generatable by a diffusion model, devising a subband-coefficient packing scheme to lay out the representation in a low-resolution grid.

EXIM: A Hybrid Explicit-Implicit Representation for Text-Guided 3D Shape Generation

1 code implementation • 3 Nov 2023 • Zhengzhe Liu, Jingyu Hu, Ka-Hei Hui, Xiaojuan Qi, Daniel Cohen-Or, Chi-Wing Fu

This paper presents a new text-guided technique for generating 3D shapes.

Texture Generation on 3D Meshes with Point-UV Diffusion

no code implementations • ICCV 2023 • Xin Yu, Peng Dai, Wenbo Li, Lan Ma, Zhengzhe Liu, Xiaojuan Qi

In this work, we focus on synthesizing high-quality textures on 3D meshes.

CLIPXPlore: Coupled CLIP and Shape Spaces for 3D Shape Exploration

no code implementations • 14 Jun 2023 • Jingyu Hu, Ka-Hei Hui, Zhengzhe Liu, Hao Zhang, Chi-Wing Fu

This paper presents CLIPXPlore, a new framework that leverages a vision-language model to guide the exploration of the 3D shape space.

You Only Need One Thing One Click: Self-Training for Weakly Supervised 3D Scene Understanding

1 code implementation • 26 Mar 2023 • Zhengzhe Liu, Xiaojuan Qi, Chi-Wing Fu

3D scene understanding, e.g., point cloud semantic and instance segmentation, often requires large-scale annotated training data, but clearly, point-wise labels are too tedious to prepare.

DreamStone: Image as Stepping Stone for Text-Guided 3D Shape Generation

2 code implementations • 24 Mar 2023 • Zhengzhe Liu, Peng Dai, Ruihui Li, Xiaojuan Qi, Chi-Wing Fu

The core of our approach is a two-stage feature-space alignment strategy that leverages a pre-trained single-view reconstruction (SVR) model to map CLIP features to shapes: first, map the CLIP image feature to the detail-rich 3D shape space of the SVR model; then, map the CLIP text feature to the 3D shape space by encouraging CLIP consistency between rendered images and the input text.

Neural Wavelet-domain Diffusion for 3D Shape Generation, Inversion, and Manipulation

no code implementations • 1 Feb 2023 • Jingyu Hu, Ka-Hei Hui, Zhengzhe Liu, Ruihui Li, Chi-Wing Fu

This paper presents a new approach for 3D shape generation, inversion, and manipulation through direct generative modeling of a continuous implicit representation in the wavelet domain.

Command-Driven Articulated Object Understanding and Manipulation

no code implementations • CVPR 2023 • Ruihang Chu, Zhengzhe Liu, Xiaoqing Ye, Xiao Tan, Xiaojuan Qi, Chi-Wing Fu, Jiaya Jia

The key idea of Cart is to use predicted object structures to connect visual observations with user commands for effective manipulation.

MGFN: Magnitude-Contrastive Glance-and-Focus Network for Weakly-Supervised Video Anomaly Detection

1 code implementation • 28 Nov 2022 • Yingxian Chen, Zhengzhe Liu, Baoheng Zhang, Wilton Fok, Xiaojuan Qi, Yik-Chung Wu

Weakly supervised detection of anomalies in surveillance videos is a challenging task.

Anomaly Detection In Surveillance Videos

Video Anomaly Detection

Sparse2Dense: Learning to Densify 3D Features for 3D Object Detection

1 code implementation • 23 Nov 2022 • Tianyu Wang, Xiaowei Hu, Zhengzhe Liu, Chi-Wing Fu

Importantly, we formulate a lightweight plug-in S2D module and a point cloud reconstruction module in SDet to densify 3D features, training SDet to produce 3D features that follow the dense 3D features in DDet.

ISS: Image as Stepping Stone for Text-Guided 3D Shape Generation

2 code implementations • 9 Sep 2022 • Zhengzhe Liu, Peng Dai, Ruihui Li, Xiaojuan Qi, Chi-Wing Fu

Text-guided 3D shape generation remains challenging due to the absence of large paired text-shape data, the substantial semantic gap between these two modalities, and the structural complexity of 3D shapes.

Towards Implicit Text-Guided 3D Shape Generation

1 code implementation • CVPR 2022 • Zhengzhe Liu, Yi Wang, Xiaojuan Qi, Chi-Wing Fu

In this work, we explore the challenging task of generating 3D shapes from text.

TWIST: Two-Way Inter-Label Self-Training for Semi-Supervised 3D Instance Segmentation

no code implementations • CVPR 2022 • Ruihang Chu, Xiaoqing Ye, Zhengzhe Liu, Xiao Tan, Xiaojuan Qi, Chi-Wing Fu, Jiaya Jia

We explore how to alleviate the label-hungry problem of 3D instance segmentation in a semi-supervised setting.

One Thing One Click: A Self-Training Approach for Weakly Supervised 3D Semantic Segmentation

2 code implementations • CVPR 2021 • Zhengzhe Liu, Xiaojuan Qi, Chi-Wing Fu

Point cloud semantic segmentation often requires large-scale annotated training data, but clearly, point-wise labels are too tedious to prepare.

3D-to-2D Distillation for Indoor Scene Parsing

1 code implementation • CVPR 2021 • Zhengzhe Liu, Xiaojuan Qi, Chi-Wing Fu

First, we distill 3D knowledge from a pretrained 3D network to supervise a 2D network, which learns to simulate 3D features from 2D features during training, so the 2D network can run inference without requiring 3D data.

GeoNet++: Iterative Geometric Neural Network with Edge-Aware Refinement for Joint Depth and Surface Normal Estimation

2 code implementations • 13 Dec 2020 • Xiaojuan Qi, Zhengzhe Liu, Renjie Liao, Philip H. S. Torr, Raquel Urtasun, Jiaya Jia

Note that GeoNet++ is generic and can be used in other depth/normal prediction frameworks to improve the quality of 3D reconstruction and pixel-wise accuracy of depth and surface normals.

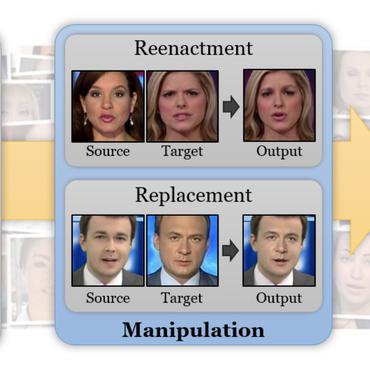

Global Texture Enhancement for Fake Face Detection in the Wild

1 code implementation • CVPR 2020 • Zhengzhe Liu, Xiaojuan Qi, Philip Torr

In this paper, we conduct an empirical study on fake/real faces and make two important observations: first, the texture of fake faces is substantially different from that of real ones; second, global texture statistics are more robust to image editing and transferable to fake faces from different GANs and datasets.

GeoNet: Geometric Neural Network for Joint Depth and Surface Normal Estimation

1 code implementation • CVPR 2018 • Xiaojuan Qi, Renjie Liao, Zhengzhe Liu, Raquel Urtasun, Jiaya Jia

In this paper, we propose Geometric Neural Network (GeoNet) to jointly predict depth and surface normal maps from a single image.