Search Results for author: Zhoujun Cheng

Found 15 papers, 10 papers with code

OSWorld: Benchmarking Multimodal Agents for Open-Ended Tasks in Real Computer Environments

no code implementations • 11 Apr 2024 • Tianbao Xie, Danyang Zhang, Jixuan Chen, Xiaochuan Li, Siheng Zhao, Ruisheng Cao, Toh Jing Hua, Zhoujun Cheng, Dongchan Shin, Fangyu Lei, Yitao Liu, Yiheng Xu, Shuyan Zhou, Silvio Savarese, Caiming Xiong, Victor Zhong, Tao Yu

Autonomous agents that accomplish complex computer tasks with minimal human intervention have the potential to transform human-computer interaction, significantly enhancing accessibility and productivity.

What Are Tools Anyway? A Survey from the Language Model Perspective

no code implementations • 18 Mar 2024 • Zhiruo Wang, Zhoujun Cheng, Hao Zhu, Daniel Fried, Graham Neubig

Language models (LMs) are powerful, yet they are mostly used for text generation tasks.

OpenAgents: An Open Platform for Language Agents in the Wild

2 code implementations • 16 Oct 2023 • Tianbao Xie, Fan Zhou, Zhoujun Cheng, Peng Shi, Luoxuan Weng, Yitao Liu, Toh Jing Hua, Junning Zhao, Qian Liu, Che Liu, Leo Z. Liu, Yiheng Xu, Hongjin Su, Dongchan Shin, Caiming Xiong, Tao Yu

Language agents show potential in being capable of utilizing natural language for varied and intricate tasks in diverse environments, particularly when built upon large language models (LLMs).

Lemur: Harmonizing Natural Language and Code for Language Agents

1 code implementation • 10 Oct 2023 • Yiheng Xu, Hongjin Su, Chen Xing, Boyu Mi, Qian Liu, Weijia Shi, Binyuan Hui, Fan Zhou, Yitao Liu, Tianbao Xie, Zhoujun Cheng, Siheng Zhao, Lingpeng Kong, Bailin Wang, Caiming Xiong, Tao Yu

We introduce Lemur and Lemur-Chat, openly accessible language models optimized for both natural language and coding capabilities to serve as the backbone of versatile language agents.

Batch Prompting: Efficient Inference with Large Language Model APIs

2 code implementations • 19 Jan 2023 • Zhoujun Cheng, Jungo Kasai, Tao Yu

We extensively validate the effectiveness of batch prompting on ten datasets across commonsense QA, arithmetic reasoning, and NLI/NLU: batch prompting significantly (up to 5x with six samples in batch) reduces the LLM (Codex) inference token and time costs while achieving better or comparable performance.

Reflection of Thought: Inversely Eliciting Numerical Reasoning in Language Models via Solving Linear Systems

no code implementations • 11 Oct 2022 • Fan Zhou, Haoyu Dong, Qian Liu, Zhoujun Cheng, Shi Han, Dongmei Zhang

Numerical reasoning over natural language has been a long-standing goal for the research community.

Binding Language Models in Symbolic Languages

1 code implementation • 6 Oct 2022 • Zhoujun Cheng, Tianbao Xie, Peng Shi, Chengzu Li, Rahul Nadkarni, Yushi Hu, Caiming Xiong, Dragomir Radev, Mari Ostendorf, Luke Zettlemoyer, Noah A. Smith, Tao Yu

We propose Binder, a training-free neural-symbolic framework that maps the task input to a program, which (1) allows binding a unified API of language model (LM) functionalities to a programming language (e.g., SQL, Python) to extend its grammar coverage and thus tackle more diverse questions, (2) adopts an LM as both the program parser and the underlying model called by the API during execution, and (3) requires only a few in-context exemplar annotations.

Ranked #4 on

Table-based Fact Verification

on TabFact

TaCube: Pre-computing Data Cubes for Answering Numerical-Reasoning Questions over Tabular Data

1 code implementation • 25 May 2022 • Fan Zhou, Mengkang Hu, Haoyu Dong, Zhoujun Cheng, Shi Han, Dongmei Zhang

Existing auto-regressive pre-trained language models (PLMs) such as T5 and BART have been successfully applied to table question answering by UNIFIEDSKG and TAPEX, respectively, and have demonstrated state-of-the-art results on multiple benchmarks.

Table Pre-training: A Survey on Model Architectures, Pre-training Objectives, and Downstream Tasks

no code implementations • 24 Jan 2022 • Haoyu Dong, Zhoujun Cheng, Xinyi He, Mengyu Zhou, Anda Zhou, Fan Zhou, Ao Liu, Shi Han, Dongmei Zhang

Since a vast number of tables can be easily collected from web pages, spreadsheets, PDFs, and various other document types, a flurry of table pre-training frameworks have been proposed following the success of text and images, and they have achieved new state-of-the-art results on various tasks such as table question answering, table type recognition, column relation classification, table search, and formula prediction.

Understanding Pixel-level 2D Image Semantics with 3D Keypoint Knowledge Engine

no code implementations • 21 Nov 2021 • Yang You, Chengkun Li, Yujing Lou, Zhoujun Cheng, Liangwei Li, Lizhuang Ma, Weiming Wang, Cewu Lu

Pixel-level 2D object semantic understanding is an important topic in computer vision and could help machines deeply understand objects (e.g., functionality and affordance) in our daily life.

FORTAP: Using Formulas for Numerical-Reasoning-Aware Table Pretraining

1 code implementation • ACL 2022 • Zhoujun Cheng, Haoyu Dong, Ran Jia, Pengfei Wu, Shi Han, Fan Cheng, Dongmei Zhang

In this paper, we find that the spreadsheet formula, which performs calculations on numerical values in tables, is naturally a strong supervision of numerical reasoning.

HiTab: A Hierarchical Table Dataset for Question Answering and Natural Language Generation

1 code implementation • ACL 2022 • Zhoujun Cheng, Haoyu Dong, Zhiruo Wang, Ran Jia, Jiaqi Guo, Yan Gao, Shi Han, Jian-Guang Lou, Dongmei Zhang

HiTab provides 10,686 QA pairs and descriptive sentences with well-annotated quantity and entity alignment on 3,597 tables with broad coverage of table hierarchies and numerical reasoning types.

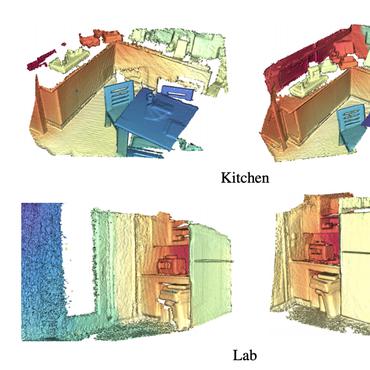

Semantic Correspondence via 2D-3D-2D Cycle

1 code implementation • 20 Apr 2020 • Yang You, Chengkun Li, Yujing Lou, Zhoujun Cheng, Lizhuang Ma, Cewu Lu, Weiming Wang

Visual semantic correspondence is an important topic in computer vision and could help machines understand objects in our daily life.

KeypointNet: A Large-scale 3D Keypoint Dataset Aggregated from Numerous Human Annotations

1 code implementation • CVPR 2020 • Yang You, Yujing Lou, Chengkun Li, Zhoujun Cheng, Liangwei Li, Lizhuang Ma, Weiming Wang, Cewu Lu

Detecting 3D object keypoints is of great interest to the areas of both graphics and computer vision.

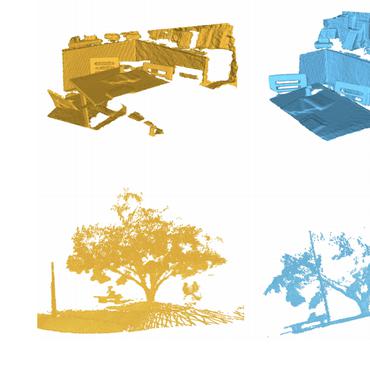

Human Correspondence Consensus for 3D Object Semantic Understanding

1 code implementation • ECCV 2020 • Yujing Lou, Yang You, Chengkun Li, Zhoujun Cheng, Liangwei Li, Lizhuang Ma, Weiming Wang, Cewu Lu

Semantic understanding of 3D objects is crucial in many applications such as object manipulation.