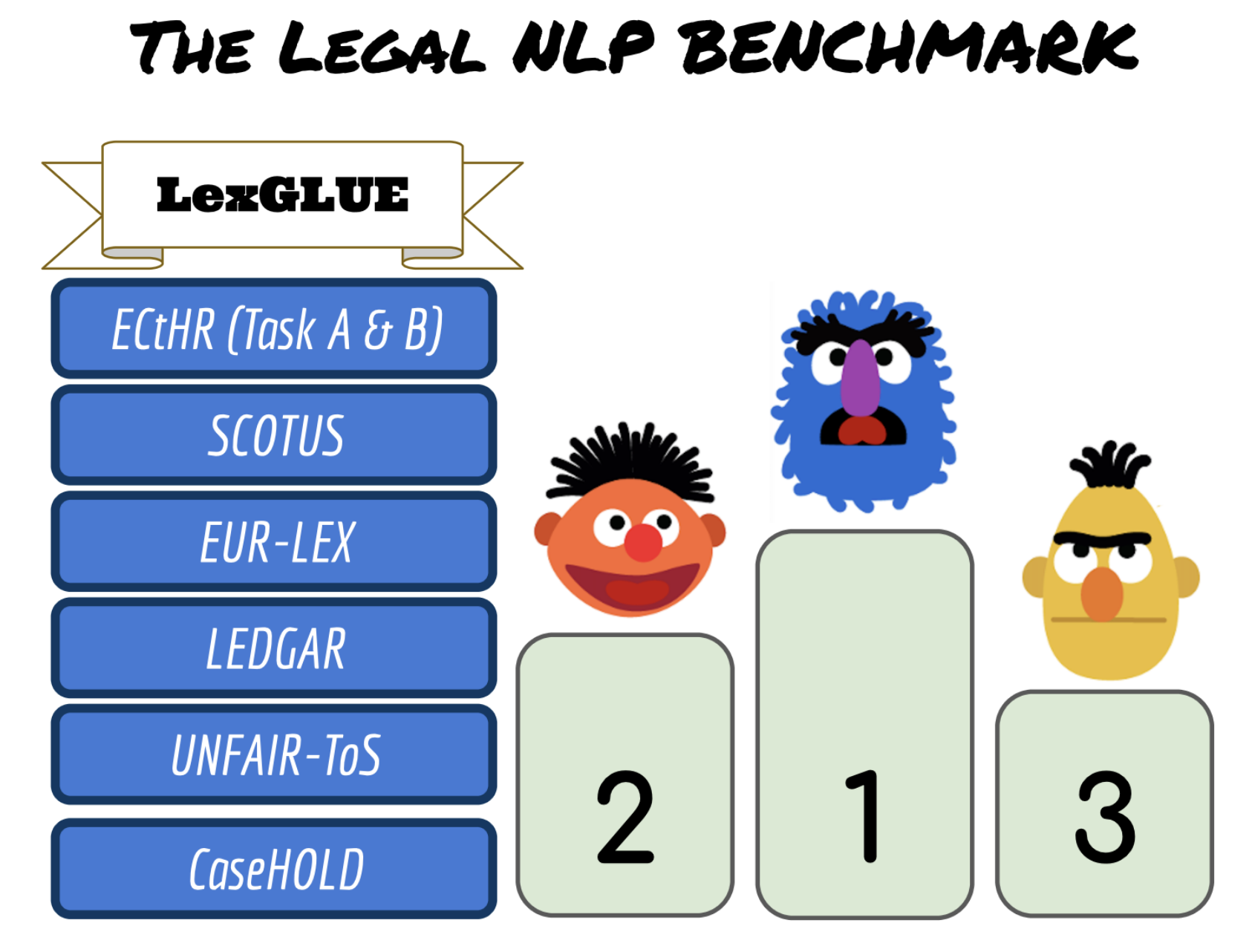

LexGLUE

Introduced by Chalkidis et al. in "LexGLUE: A Benchmark Dataset for Legal Language Understanding in English".

The Legal General Language Understanding Evaluation (LexGLUE) benchmark is a collection of datasets for evaluating model performance across a diverse set of legal natural language understanding (NLU) tasks in a standardized way.


Dataset Loaders

No data loaders are listed for this dataset.
