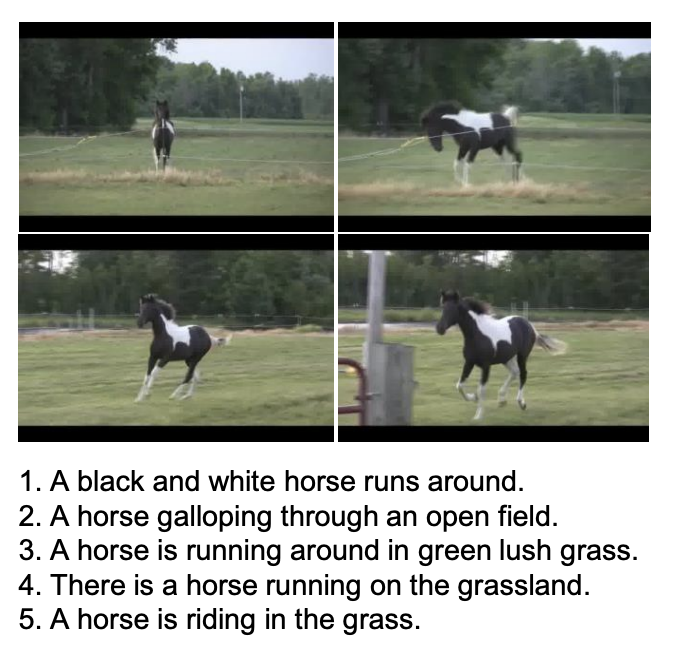

MSR-VTT

Introduced by Xu et al. in MSR-VTT: A Large Video Description Dataset for Bridging Video and LanguageMSR-VTT (Microsoft Research Video to Text) is a large-scale dataset for the open domain video captioning, which consists of 10,000 video clips from 20 categories, and each video clip is annotated with 20 English sentences by Amazon Mechanical Turks. There are about 29,000 unique words in all captions. The standard splits uses 6,513 clips for training, 497 clips for validation, and 2,990 clips for testing.

Source: Learning to Discretely Compose Reasoning Module Networksfor Video CaptioningBenchmarks

Papers

| Paper | Code | Results | Date | Stars |

|---|

Dataset Loaders

No data loaders found. You can

submit your data loader here.

No data loaders found. You can

submit your data loader here.