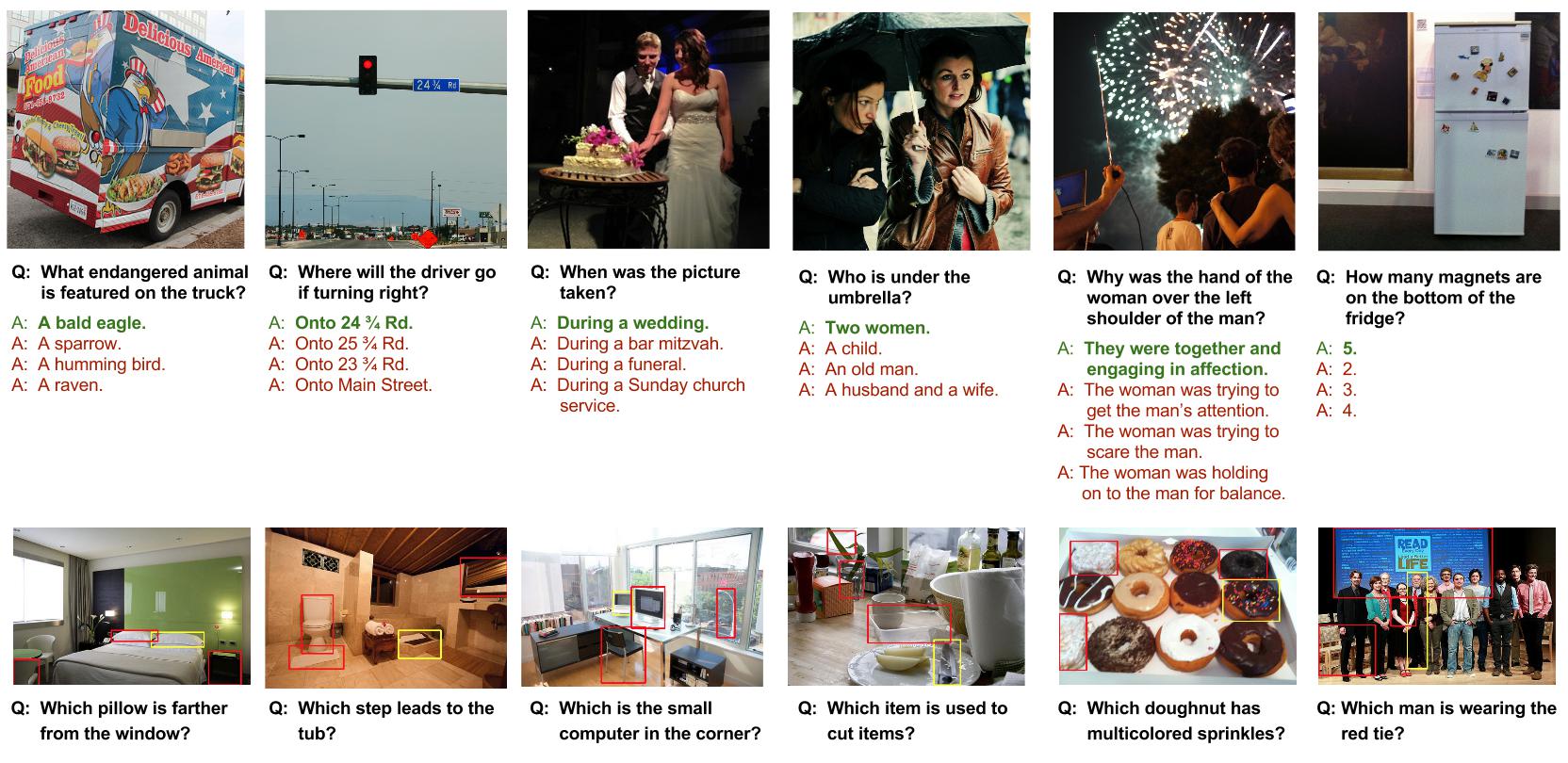

Visual7W is a large-scale visual question answering (QA) dataset, with object-level groundings and multimodal answers. Each question starts with one of the seven Ws, what, where, when, who, why, how and which. It is collected from 47,300 COCO images and it has 327,929 QA pairs, together with 1,311,756 human-generated multiple-choices and 561,459 object groundings from 36,579 categories.

Source: https://github.com/yukezhu/visual7w-toolkitPapers

| Paper | Code | Results | Date | Stars |

|---|

Dataset Loaders

No data loaders found. You can

submit your data loader here.

No data loaders found. You can

submit your data loader here.