Normalization


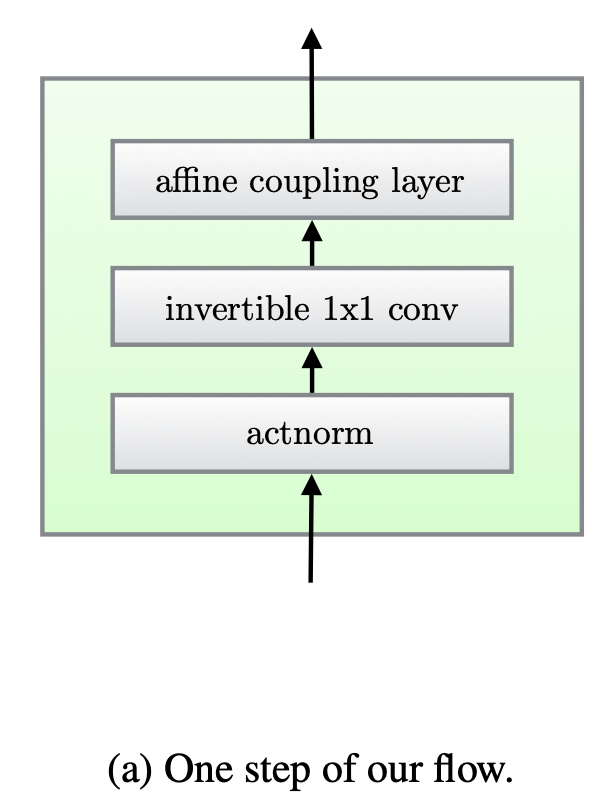

Activation Normalization

Introduced by Kingma et al. in Glow: Generative Flow with Invertible 1x1 Convolutions

Activation Normalization (ActNorm) is a type of normalization used in flow-based generative models; it was introduced in the Glow architecture. An ActNorm layer applies an affine transformation to the activations, using one scale and one bias parameter per channel, similar to batch normalization. These parameters are initialized so that, given an initial minibatch of data, the post-ActNorm activations have zero mean and unit variance per channel. This is a form of data-dependent initialization. After initialization, the scale and bias are treated as regular trainable parameters that are independent of the data.
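The data-dependent initialization described above can be sketched in NumPy. This is a minimal illustration under stated assumptions, not the Glow reference implementation: it assumes activations in NCHW layout, and the class name `ActNorm`, the `eps` guard, and the method names are hypothetical. The per-example log-determinant uses the expression given in the Glow paper, h * w * sum(log|s|); gradient updates of the scale and bias are not shown.

```python
import numpy as np


class ActNorm:
    """Hypothetical per-channel ActNorm sketch for NCHW arrays.

    Computes y = scale * x + bias, where scale and bias are set from the
    first minibatch so each channel of y has zero mean and unit variance.
    """

    def __init__(self, num_channels, eps=1e-6):
        self.scale = np.ones((1, num_channels, 1, 1))
        self.bias = np.zeros((1, num_channels, 1, 1))
        self.eps = eps  # assumed small constant to avoid division by zero
        self.initialized = False

    def _initialize(self, x):
        # Data-dependent init: per-channel statistics over batch and spatial axes.
        mean = x.mean(axis=(0, 2, 3), keepdims=True)
        std = x.std(axis=(0, 2, 3), keepdims=True)
        self.scale = 1.0 / (std + self.eps)
        self.bias = -mean * self.scale
        self.initialized = True

    def forward(self, x):
        # Initialize only once; later calls reuse the stored parameters.
        if not self.initialized:
            self._initialize(x)
        y = self.scale * x + self.bias
        # Per-example log|det Jacobian| of the elementwise affine map.
        h, w = x.shape[2], x.shape[3]
        logdet = h * w * np.sum(np.log(np.abs(self.scale)))
        return y, logdet

    def inverse(self, y):
        # Exact inverse of the affine transform (flows must be invertible).
        return (y - self.bias) / self.scale


# Usage: the first minibatch fixes the parameters.
rng = np.random.default_rng(0)
x = rng.normal(loc=3.0, scale=2.0, size=(16, 4, 8, 8))
layer = ActNorm(num_channels=4)
y, logdet = layer.forward(x)
print(y.mean(axis=(0, 2, 3)), y.std(axis=(0, 2, 3)))
```

Because the statistics are computed only on the first call, later batches are transformed with the stored parameters, which a training loop would then update by gradient descent like any other weights.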

Source: Glow: Generative Flow with Invertible 1x1 Convolutions


Tasks

| Task | Papers | Share |
|---|---|---|
| Image Dehazing | 2 | 11.11% |
| Image Enhancement | 2 | 11.11% |
| Pseudo Label | 1 | 5.56% |
| Offline RL | 1 | 5.56% |
| Benchmarking | 1 | 5.56% |
| Molecular Docking | 1 | 5.56% |
| Pose Prediction | 1 | 5.56% |
| Low-Light Image Enhancement | 1 | 5.56% |
| Zero-Shot Learning | 1 | 5.56% |

