Dimensionality Reduction


Autoencoders

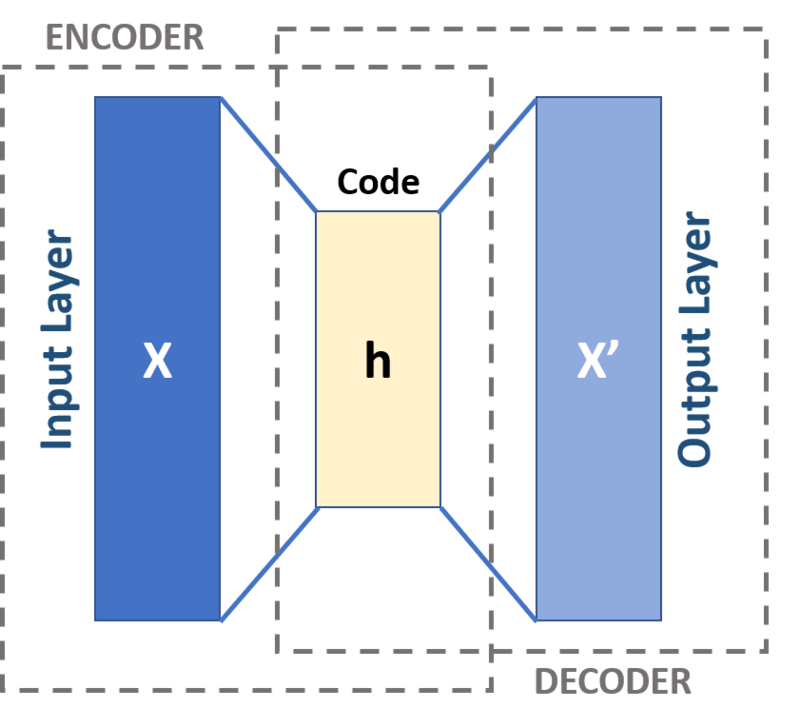

An autoencoder is a type of artificial neural network used to learn efficient data codings in an unsupervised manner. Its aim is to learn a representation (encoding) for a set of data, typically for dimensionality reduction, by training the network to ignore signal “noise”. Alongside this reduction side (the encoder), a reconstructing side (the decoder) is learnt, which tries to generate from the reduced encoding an output as close as possible to the original input, hence the name.
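The encode-then-reconstruct idea described above can be illustrated with a minimal sketch. It assumes the simplest possible case: a purely linear encoder and decoder with no biases or nonlinearities, trained by per-sample gradient descent on squared reconstruction error. The names `matvec` and `train_autoencoder`, the toy data, and the hyperparameters are all illustrative choices, not taken from any particular library or paper.

```python
import random


def matvec(W, v):
    """Multiply matrix W (a list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def train_autoencoder(data, code_dim, epochs=200, lr=0.01, seed=0):
    """Train a linear autoencoder (hypothetical helper, for illustration only).

    Returns the encoder matrix, the decoder matrix, and the mean squared
    reconstruction error recorded during each epoch.
    """
    rng = random.Random(seed)
    d = len(data[0])
    # Encoder maps d inputs to code_dim values; decoder maps them back to d.
    enc = [[rng.uniform(-0.5, 0.5) for _ in range(d)] for _ in range(code_dim)]
    dec = [[rng.uniform(-0.5, 0.5) for _ in range(code_dim)] for _ in range(d)]
    history = []
    for _ in range(epochs):
        total = 0.0
        for x in data:
            z = matvec(enc, x)        # reduced encoding
            x_hat = matvec(dec, z)    # reconstruction of the input
            err = [xh - xi for xh, xi in zip(x_hat, x)]
            total += sum(e * e for e in err)
            # Gradient of the squared error with respect to the code z
            # (up to the factor 2 applied in the updates below).
            dz = [sum(err[i] * dec[i][j] for i in range(d))
                  for j in range(code_dim)]
            # Gradient step on the decoder weights.
            for i in range(d):
                for j in range(code_dim):
                    dec[i][j] -= lr * 2 * err[i] * z[j]
            # Gradient step on the encoder weights.
            for j in range(code_dim):
                for k in range(d):
                    enc[j][k] -= lr * 2 * dz[j] * x[k]
        history.append(total / len(data))
    return enc, dec, history


# Toy data: 3-dimensional points that all lie on a single line,
# so a 1-dimensional code is enough to describe them.
data = [[t, 2 * t, -t] for t in (i / 25 - 1 for i in range(51))]

enc, dec, history = train_autoencoder(data, code_dim=1)

code = matvec(enc, data[0])            # 3 values compressed to 1
reconstruction = matvec(dec, code)     # 1 value expanded back to 3
print("first-epoch error:", history[0])
print("last-epoch error: ", history[-1])
```

Practical autoencoders usually add nonlinear activations, bias terms, and deeper encoder and decoder stacks, and are trained with a deep learning framework rather than hand-written updates. The sketch only shows the core loop: compress, reconstruct, and adjust both sides to shrink the reconstruction error.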

Extracted from: Wikipedia

Image source: Wikipedia


Tasks

| Task | Papers | Share |
|---|---|---|
| Anomaly Detection | 32 | 11.19% |
| Dimensionality Reduction | 11 | 3.85% |
| Denoising | 10 | 3.50% |
| Unsupervised Anomaly Detection | 9 | 3.15% |
| Clustering | 9 | 3.15% |
| Video Anomaly Detection | 7 | 2.45% |
| Decision Making | 6 | 2.10% |
| Image Generation | 6 | 2.10% |
| Self-Supervised Learning | 6 | 2.10% |

