Batch-Channel Normalization

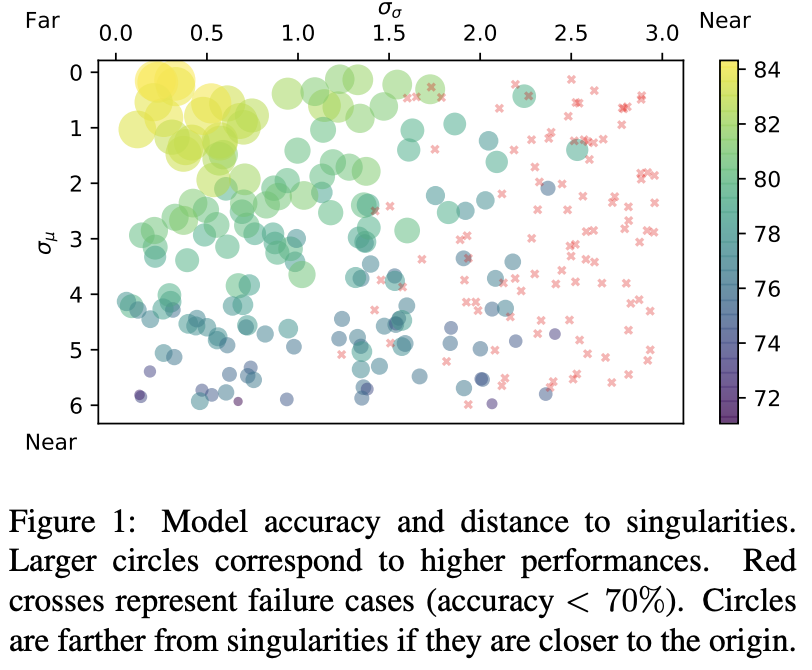

Introduced by Qiao et al. in Rethinking Normalization and Elimination Singularity in Neural Networks

Batch-Channel Normalization, or BCN, uses batch knowledge to prevent channel-normalized models from getting too close to "elimination singularities". Elimination singularities correspond to the points on the training trajectory where neurons become consistently deactivated. They cause degenerate manifolds in the loss landscape, which slow down training and harm model performance.
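To make the idea concrete, here is a minimal pure-Python sketch, not the authors' implementation. It assumes BCN can be approximated as a batch-statistics normalization followed by a GroupNorm-style channel normalization, and it leaves out learnable scale and shift parameters, running-statistics estimates, and spatial dimensions. The function names, the toy input `x`, and the group count are all hypothetical choices made for illustration.

```python
import math

EPS = 1e-5  # small constant for numerical stability (assumed value)


def _mean_var(values):
    """Return the mean and (biased) variance of a list of floats."""
    m = sum(values) / len(values)
    v = sum((val - m) ** 2 for val in values) / len(values)
    return m, v


def batch_normalize(x):
    """Normalize each channel with statistics taken over the batch axis."""
    n, c = len(x), len(x[0])
    out = [[0.0] * c for _ in range(n)]
    for j in range(c):
        m, v = _mean_var([x[i][j] for i in range(n)])
        for i in range(n):
            out[i][j] = (x[i][j] - m) / math.sqrt(v + EPS)
    return out


def channel_normalize(x, num_groups):
    """Normalize each sample over groups of channels (GroupNorm-style)."""
    size = len(x[0]) // num_groups
    out = []
    for row in x:
        new_row = []
        for g in range(num_groups):
            group = row[g * size:(g + 1) * size]
            m, v = _mean_var(group)
            new_row.extend((val - m) / math.sqrt(v + EPS) for val in group)
        out.append(new_row)
    return out


def batch_channel_normalize(x, num_groups):
    """Sketch of BCN: batch-based step first, then channel-based step."""
    return channel_normalize(batch_normalize(x), num_groups)


# Toy pre-activations, shape [batch=4][channels=4]. Channel 0 is strongly
# negative for every sample, so a ReLU after it would never fire.
x = [
    [-10.0, 5.0, 1.0, 0.5],
    [-11.0, 6.0, -1.0, 0.3],
    [-9.0, 4.0, 2.0, -0.4],
    [-12.0, 7.0, -2.0, -0.2],
]

cn_only = channel_normalize(x, num_groups=2)
bcn = batch_channel_normalize(x, num_groups=2)

# Count how many samples would activate a ReLU on channel 0.
active_cn = sum(row[0] > 0 for row in cn_only)
active_bcn = sum(row[0] > 0 for row in bcn)
print("channel norm only, active samples on channel 0:", active_cn)
print("batch + channel norm, active samples on channel 0:", active_bcn)
```

In this toy input, channel normalization alone leaves channel 0 negative for every sample, which mirrors a consistently deactivated neuron. Centering each channel over the batch first gives channel 0 mixed signs across samples, which is the intuition behind adding batch knowledge to a channel-normalized model.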

Source: Rethinking Normalization and Elimination Singularity in Neural Networks

Tasks

| Task | Papers | Share |
|---|---|---|
| Image Classification | 1 | 25.00% |
| Instance Segmentation | 1 | 25.00% |
| Object Detection | 1 | 25.00% |
| Semantic Segmentation | 1 | 25.00% |
