Ensembling

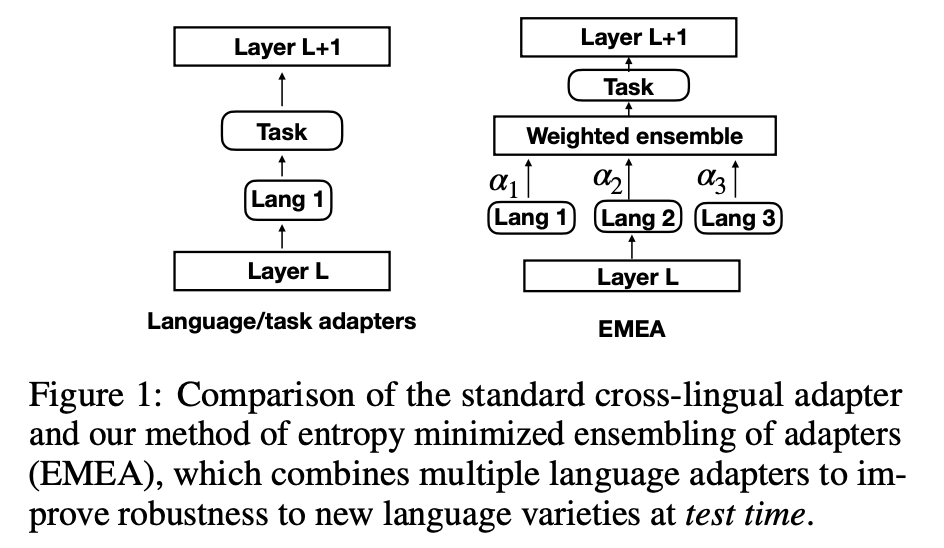

Entropy Minimized Ensemble of Adapters

Introduced by Wang et al. in Efficient Test Time Adapter Ensembling for Low-resource Language Varieties

Entropy Minimized Ensemble of Adapters, or EMEA, is a method that optimizes the ensemble weights of the pretrained language adapters for each test sentence by minimizing the entropy of its predictions. The intuition is that a good adapter weight $\alpha$ for a test input $x$ should make the model more confident in its prediction for $x$; that is, it should lead to lower model entropy on that input.
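The entropy-minimization idea can be sketched in NumPy as follows. This is a minimal illustration under several assumptions that do not come from the description above: the weights $\alpha$ are kept on the probability simplex via a softmax over unconstrained scores; the $K$ adapters are combined at the level of their output logits (the original work applies the weights to adapter outputs inside the network, so this is a simplification); and the objective is the per-token prediction entropy $H = -\sum_c p_c \log p_c$, averaged over the tokens of one tagged sentence. The helper name `emea_weights` and its `steps` and `lr` parameters are hypothetical. Starting from uniform weights, each iteration takes one plain gradient-descent step on the entropy with respect to the scores.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along `axis`."""
    shifted = x - np.max(x, axis=axis, keepdims=True)
    e = np.exp(shifted)
    return e / np.sum(e, axis=axis, keepdims=True)


def mean_entropy(probs):
    """Average per-token entropy of a (T, C) matrix of label probabilities."""
    return float(-np.mean(np.sum(probs * np.log(probs + 1e-12), axis=-1)))


def emea_weights(adapter_logits, steps=10, lr=1.0):
    """Hypothetical helper: entropy-minimizing ensemble weights for one sentence.

    adapter_logits: shape (K, T, C), logits from K adapters for the T tokens
    of a single test sentence over C labels (simplified output-level ensemble).
    Returns (weights, probs): weights of shape (K,) summing to 1 and the
    ensembled label probabilities of shape (T, C).
    """
    num_adapters, num_tokens, _ = adapter_logits.shape
    scores = np.zeros(num_adapters)  # softmax(0) gives the uniform 1/K start
    for _ in range(steps):
        w = softmax(scores)
        z = np.einsum("k,ktc->tc", w, adapter_logits)  # weighted logit ensemble
        p = softmax(z)
        log_p = np.log(p + 1e-12)
        h_tok = -np.sum(p * log_p, axis=-1, keepdims=True)  # (T, 1) entropies
        # Gradient of the mean entropy w.r.t. z: -p * (log p + H_t) / T
        grad_z = -p * (log_p + h_tok) / num_tokens
        # Chain rule through z = sum_k w_k * logits_k
        grad_w = np.einsum("ktc,tc->k", adapter_logits, grad_z)
        # Chain rule through w = softmax(scores)
        grad_s = w * (grad_w - np.dot(w, grad_w))
        scores -= lr * grad_s  # gradient-descent step on the mean entropy
    w = softmax(scores)
    return w, softmax(np.einsum("k,ktc->tc", w, adapter_logits))


if __name__ == "__main__":
    labels = np.array([0, 1, 2, 3, 0])
    eye = np.eye(4)
    logits = np.stack([
        4.0 * eye[labels],      # confident adapter
        np.zeros((5, 4)),       # uninformative adapter
        eye[(labels + 1) % 4],  # weak adapter favoring other labels
    ])
    weights, probs = emea_weights(logits)
    print("weights:", np.round(weights, 3))
    print("entropy with uniform weights:", mean_entropy(softmax(logits.mean(axis=0))))
    print("entropy after optimization:  ", mean_entropy(probs))
```

In this toy setup the confident adapter should end up with the largest weight, because shifting weight toward it sharpens the ensembled predictions. The gradient is written out analytically only to keep the example dependency-free; an implementation inside a real adapter-augmented model would more naturally backpropagate through the network with an autodiff framework.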

Source: Efficient Test Time Adapter Ensembling for Low-resource Language Varieties

Papers

| Paper | Code | Results | Date | Stars |
|---|---|---|---|---|

Tasks

| Task | Papers | Share |
|---|---|---|
| Machine Translation | 1 | 14.29% |
| NMT | 1 | 14.29% |
| Translation | 1 | 14.29% |
| Cross-Lingual Transfer | 1 | 14.29% |
| Named Entity Recognition (NER) | 1 | 14.29% |
| Part-Of-Speech Tagging | 1 | 14.29% |
| Sentence | 1 | 14.29% |

Components

No components are listed for this method.