Regularization

Regularization

Entropy Regularization

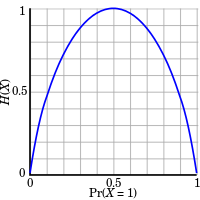

Introduced by Mnih et al. in Asynchronous Methods for Deep Reinforcement LearningEntropy Regularization is a type of regularization used in reinforcement learning. For on-policy policy gradient based methods like A3C, the same mutual reinforcement behaviour leads to a highly-peaked $\pi\left(a\mid{s}\right)$ towards a few actions or action sequences, since it is easier for the actor and critic to overoptimise to a small portion of the environment. To reduce this problem, entropy regularization adds an entropy term to the loss to promote action diversity:

$$H(X) = -\sum\pi\left(x\right)\log\left(\pi\left(x\right)\right) $$

Image Credit: Wikipedia

Source: Asynchronous Methods for Deep Reinforcement LearningPapers

| Paper | Code | Results | Date | Stars |

|---|

Tasks

| Task | Papers | Share |

|---|---|---|

| Reinforcement Learning (RL) | 147 | 17.80% |

| Autonomous Driving | 112 | 13.56% |

| Autonomous Vehicles | 43 | 5.21% |

| Imitation Learning | 33 | 4.00% |

| Decision Making | 28 | 3.39% |

| Object Detection | 25 | 3.03% |

| Semantic Segmentation | 19 | 2.30% |

| Continuous Control | 16 | 1.94% |

| Language Modelling | 13 | 1.57% |

Usage Over Time

Components

| Component | Type |

|

|---|---|---|

| 🤖 No Components Found | You can add them if they exist; e.g. Mask R-CNN uses RoIAlign |