Sample Re-Weighting


Fast Sample Re-Weighting

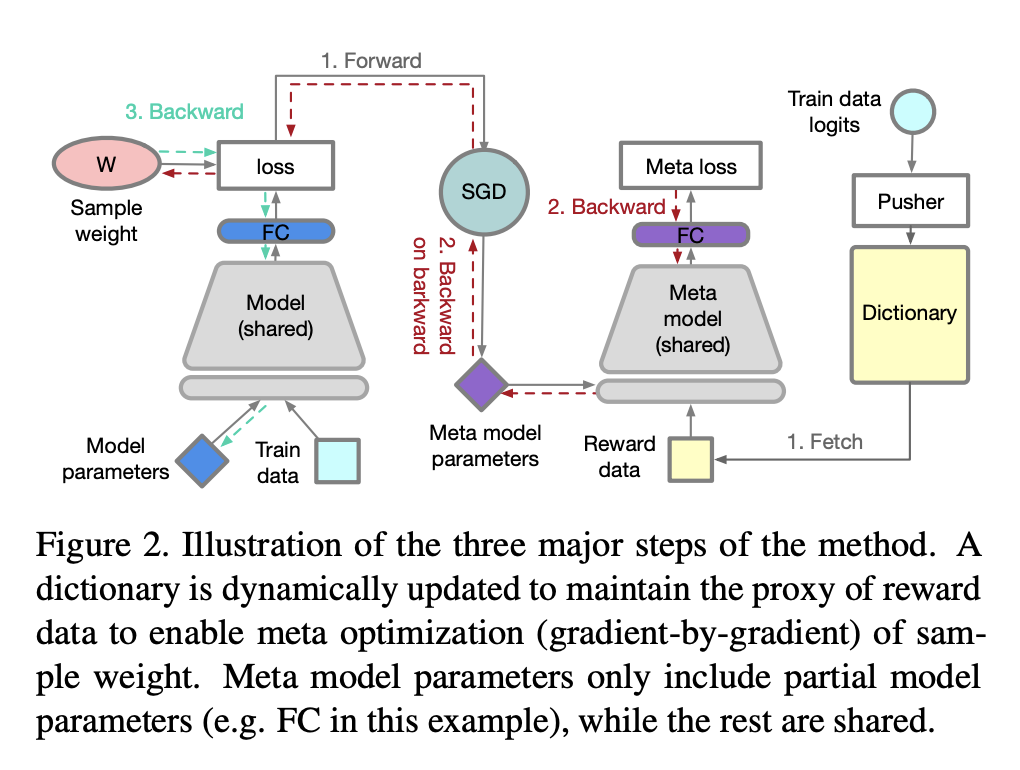

Introduced by Zhang et al. in Learning Fast Sample Re-weighting Without Reward Data

Fast Sample Re-Weighting, or FSR, is a sample re-weighting strategy for tackling problems such as dataset bias, noisy labels and class imbalance. It uses a dictionary (essentially an extra buffer) that periodically records the training history, as reflected by the model updates during meta-optimization. It also uses a valuation function that discovers meaningful samples in the training data to serve as a proxy for reward data. The unbiased dictionary is continually updated and supplies the reward signals used to optimize the sample weights. In addition, FSR does not maintain separate model states for the model update and the sample-weight update. Instead, it enables feature sharing between the two, which saves the computation otherwise spent on maintaining both states.
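As a rough illustration of the loop described above, the following pure-Python sketch re-weights each mini-batch for a toy logistic-regression model. It is not the authors' implementation, and several pieces are assumptions. The dictionary is a fixed-capacity buffer refreshed every few steps. The valuation function (`value_fn`) is a hypothetical log-likelihood score under the current model. The sample weights come from a one-step look-ahead gradient-alignment rule of the kind used by meta-learned re-weighting methods such as Learning to Reweight, rather than from FSR's own meta-optimization. Feature sharing is not modelled. All function names and hyperparameters are hypothetical.

```python
import math
import random
from typing import List, Sequence, Tuple

Sample = Tuple[List[float], int]  # (features, binary label)


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def grad_logistic(w: Sequence[float], x: Sequence[float], y: int) -> List[float]:
    # Gradient of the binary cross-entropy of a linear model with respect to w.
    p = sigmoid(dot(w, x))
    return [(p - y) * xi for xi in x]


def value_fn(w: Sequence[float], sample: Sample) -> float:
    # Hypothetical valuation function: log-likelihood of the label under the
    # current model, so confidently fitted samples rank as "meaningful".
    x, y = sample
    p = min(max(sigmoid(dot(w, x)), 1e-12), 1.0 - 1e-12)
    return math.log(p if y == 1 else 1.0 - p)


def update_dictionary(dictionary: List[Sample], candidates: List[Sample],
                      w: Sequence[float], capacity: int) -> List[Sample]:
    # Merge new candidates into the buffer (skipping exact duplicates) and
    # keep the top-`capacity` samples ranked by value_fn.
    pool = dictionary + [s for s in candidates if s not in dictionary]
    pool.sort(key=lambda s: value_fn(w, s), reverse=True)
    return pool[:capacity]


def reweight(train_grads: List[List[float]], reward_grad: List[float]) -> List[float]:
    # One-step look-ahead rule: a sample gets weight only if its loss gradient
    # is aligned with the reward (dictionary) gradient; weights sum to 1.
    scores = [max(0.0, dot(g, reward_grad)) for g in train_grads]
    total = sum(scores)
    if total == 0.0:
        return [0.0] * len(scores)  # no aligned sample: skip this update
    return [s / total for s in scores]


def make_data(n: int, flip_prob: float, seed: int = 0) -> List[Sample]:
    # Synthetic 1-D task with a bias feature; labels flipped with flip_prob.
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        f = rng.uniform(-1.0, 1.0)
        y = 1 if f > 0 else 0
        if rng.random() < flip_prob:
            y = 1 - y
        data.append(([1.0, f], y))
    return data


def train(data: List[Sample], epochs=20, lr=0.5, batch_size=16,
          capacity=8, refresh_every=5, seed=0):
    rng = random.Random(seed)
    w = [0.0] * len(data[0][0])
    dictionary: List[Sample] = []
    step = 0
    for _ in range(epochs):
        order = data[:]
        rng.shuffle(order)
        for start in range(0, len(order), batch_size):
            batch = order[start:start + batch_size]
            if step % refresh_every == 0:  # periodic dictionary refresh
                dictionary = update_dictionary(dictionary, batch, w, capacity)
            # Mean gradient over the dictionary acts as the proxy reward signal.
            dict_grads = [grad_logistic(w, x, y) for x, y in dictionary]
            reward_grad = [sum(c) / len(dict_grads) for c in zip(*dict_grads)]
            grads = [grad_logistic(w, x, y) for x, y in batch]
            weights = reweight(grads, reward_grad)
            for g, wt in zip(grads, weights):  # weighted SGD step
                for j in range(len(w)):
                    w[j] -= lr * wt * g[j]
            step += 1
    return w, dictionary


if __name__ == "__main__":
    data = make_data(200, flip_prob=0.2)
    w, dictionary = train(data)
    clean_acc = sum((dot(w, x) > 0) == (x[1] > 0) for x, _ in data) / len(data)
    print("weights:", w, "accuracy vs. clean labels:", clean_acc)
```

Running the module trains on 200 synthetic points with 20% flipped labels and prints the learned parameters together with accuracy measured against the un-flipped labels. Because the buffer is filled from the training data itself, the sketch needs no separate clean reward set, which mirrors the motivation in the text.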

Source: Learning Fast Sample Re-weighting Without Reward Data
