Feedforward Networks

Feedforward Network

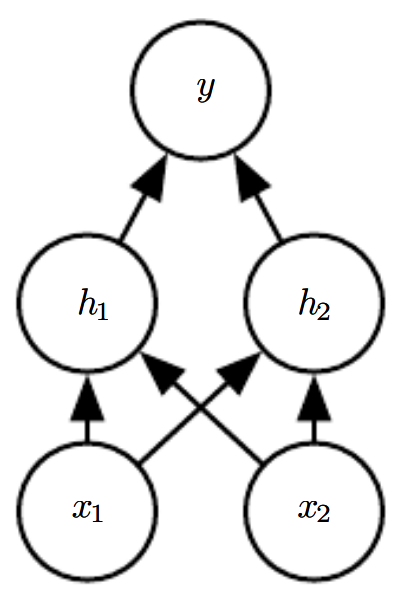

A Feedforward Network, or Multilayer Perceptron (MLP), is a neural network composed solely of densely connected layers. It is the classic neural network architecture in the literature. Inputs $x$ are passed through one or more layers of hidden units $h$ to predict a target $y$. The activation functions are generally chosen to be non-linear: a stack of purely linear layers collapses to a single linear map, so the non-linearity is what allows flexible function approximation.

Image Source: Deep Learning, Goodfellow et al.
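
The forward pass described above can be sketched in a few lines of plain Python. This is a minimal illustration rather than a reference implementation: the layer sizes, the `tanh` hidden activation, the linear output layer, the uniform weight initialisation, and the helper names `dense`, `init_layer`, and `mlp_forward` are all assumptions chosen for the example.

```python
import math
import random

def dense(x, weights, biases, activation):
    """One densely connected layer: every output unit sees every input."""
    return [
        activation(sum(w * xi for w, xi in zip(row, x)) + b)
        for row, b in zip(weights, biases)
    ]

def init_layer(n_in, n_out, rng):
    """Small random weights and zero biases (an illustrative choice)."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

def mlp_forward(x, layers):
    """Pass x through tanh hidden layers h, then a linear output layer for y."""
    h = x
    for weights, biases in layers[:-1]:
        h = dense(h, weights, biases, math.tanh)
    weights, biases = layers[-1]
    return dense(h, weights, biases, lambda z: z)

rng = random.Random(0)
sizes = [3, 4, 4, 1]  # 3 inputs, two hidden layers of 4 units, 1 output
layers = [init_layer(a, b, rng) for a, b in zip(sizes, sizes[1:])]
y_hat = mlp_forward([0.2, -0.1, 0.7], layers)
print(y_hat)
```

Each layer here is a full matrix-vector product followed by an element-wise activation, which is what "densely connected" means in practice. Swapping `math.tanh` for the identity in the hidden layers would reduce the whole network to a single linear map.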

Tasks

| Task | Papers | Share |
|---|---|---|
| Object Detection | 68 | 9.25% |
| Self-Supervised Learning | 60 | 8.16% |
| Image Generation | 37 | 5.03% |
| Semantic Segmentation | 29 | 3.95% |
| Image Classification | 18 | 2.45% |
| Disentanglement | 15 | 2.04% |
| Face Swapping | 13 | 1.77% |
| Language Modelling | 11 | 1.50% |
| Image Manipulation | 10 | 1.36% |

Dense Connections