Large Batch Optimization

LAMB

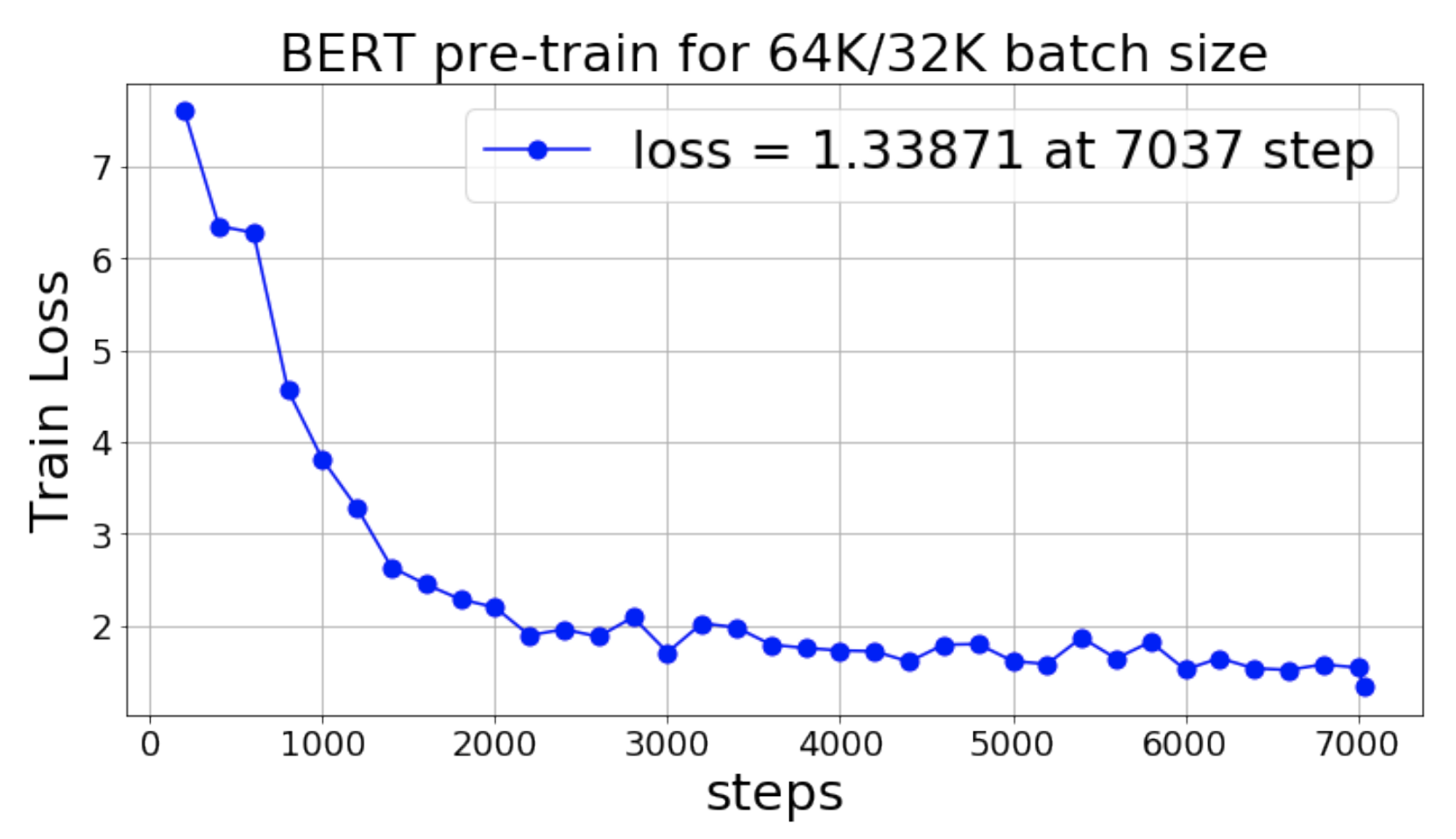

Introduced by You et al. in Large Batch Optimization for Deep Learning: Training BERT in 76 minutes

LAMB is a layerwise adaptive large batch optimization technique. It provides a strategy for adapting the learning rate in large batch settings. LAMB uses Adam as the base algorithm and then forms an update as:

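$$r_{t} = \frac{m_{t}}{\sqrt{v_{t}} + \epsilon}$$

$$x_{t+1}^{\left(i\right)} = x_{t}^{\left(i\right)} - \eta_{t}\frac{\phi\left(|| x_{t}^{\left(i\right)} ||\right)}{|| r_{t}^{\left(i\right)} + \lambda x_{t}^{\left(i\right)} ||}\left(r_{t}^{\left(i\right)}+\lambda{x_{t}^{\left(i\right)}}\right) $$

Here $m_{t}$ and $v_{t}$ are Adam's first and second moment estimates, $x_{t}^{\left(i\right)}$ denotes the parameters of layer $i$, $\eta_{t}$ is the learning rate, $\lambda$ is the weight decay coefficient, and $\phi$ is a scaling function applied to the layer's weight norm.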

Unlike LARS, the adaptivity of LAMB is two-fold: (i) per dimension normalization with respect to the square root of the second moment used in Adam and (ii) layerwise normalization obtained due to layerwise adaptivity.
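The two levels of adaptivity can be made concrete with a small sketch of a single LAMB step. This is a minimal illustration in plain Python, not a reference implementation. The function name `lamb_step`, the helper `l2_norm`, the default hyperparameters, the Adam-style bias correction, the choice of the identity for $\phi$, and the fallback to a ratio of 1 when a norm is zero are all assumptions made for this example. The sketch divides by the norm of the full update $r_{t}^{\left(i\right)} + \lambda x_{t}^{\left(i\right)}$.

```python
import math


def l2_norm(vec):
    """Euclidean norm of a flat list of floats."""
    return math.sqrt(sum(x * x for x in vec))


def lamb_step(params, grads, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999,
              eps=1e-6, weight_decay=0.01, phi=lambda z: z):
    """Apply one LAMB step in place. Each list entry is one layer (a flat list)."""
    for x, g, m_i, v_i in zip(params, grads, m, v):
        # Adam moment estimates, updated per dimension.
        for j, g_j in enumerate(g):
            m_i[j] = beta1 * m_i[j] + (1.0 - beta1) * g_j
            v_i[j] = beta2 * v_i[j] + (1.0 - beta2) * g_j * g_j
        # Bias correction as in Adam (an assumption here; t starts at 1).
        c1 = 1.0 - beta1 ** t
        c2 = 1.0 - beta2 ** t
        # (i) Per-dimension normalization r_t, plus the weight decay term.
        update = [
            (m_j / c1) / (math.sqrt(v_j / c2) + eps) + weight_decay * x_j
            for m_j, v_j, x_j in zip(m_i, v_i, x)
        ]
        # (ii) Layerwise normalization: rescale the whole layer's update.
        x_norm = l2_norm(x)
        u_norm = l2_norm(update)
        # Assumed convention: use a ratio of 1 if either norm is zero.
        trust = phi(x_norm) / u_norm if x_norm > 0.0 and u_norm > 0.0 else 1.0
        for j, u_j in enumerate(update):
            x[j] -= lr * trust * u_j
    return params


# Two hypothetical "layers" with made-up weights and gradients.
params = [[3.0, 4.0], [1.0, -2.0, 2.0]]
grads = [[0.1, -0.2], [0.5, 0.0, -0.3]]
m = [[0.0] * len(p) for p in params]
v = [[0.0] * len(p) for p in params]
lamb_step(params, grads, m, v, t=1, lr=0.1)
```

With the identity choice for $\phi$, each layer moves by a distance of `lr` times its own weight norm regardless of the gradient scale, which is the layerwise behavior the update rule is designed to produce.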

Source: Large Batch Optimization for Deep Learning: Training BERT in 76 minutes

Tasks

| Task | Papers | Share |
|---|---|---|
| Language Modelling | 29 | 9.57% |
| Sentence | 23 | 7.59% |
| Question Answering | 15 | 4.95% |
| Text Classification | 13 | 4.29% |
| Sentiment Analysis | 13 | 4.29% |
| Natural Language Understanding | 9 | 2.97% |
| Named Entity Recognition (NER) | 8 | 2.64% |
| NER | 7 | 2.31% |
| Image Classification | 7 | 2.31% |

Adam
