Self-Supervised Learning

MoCo v2

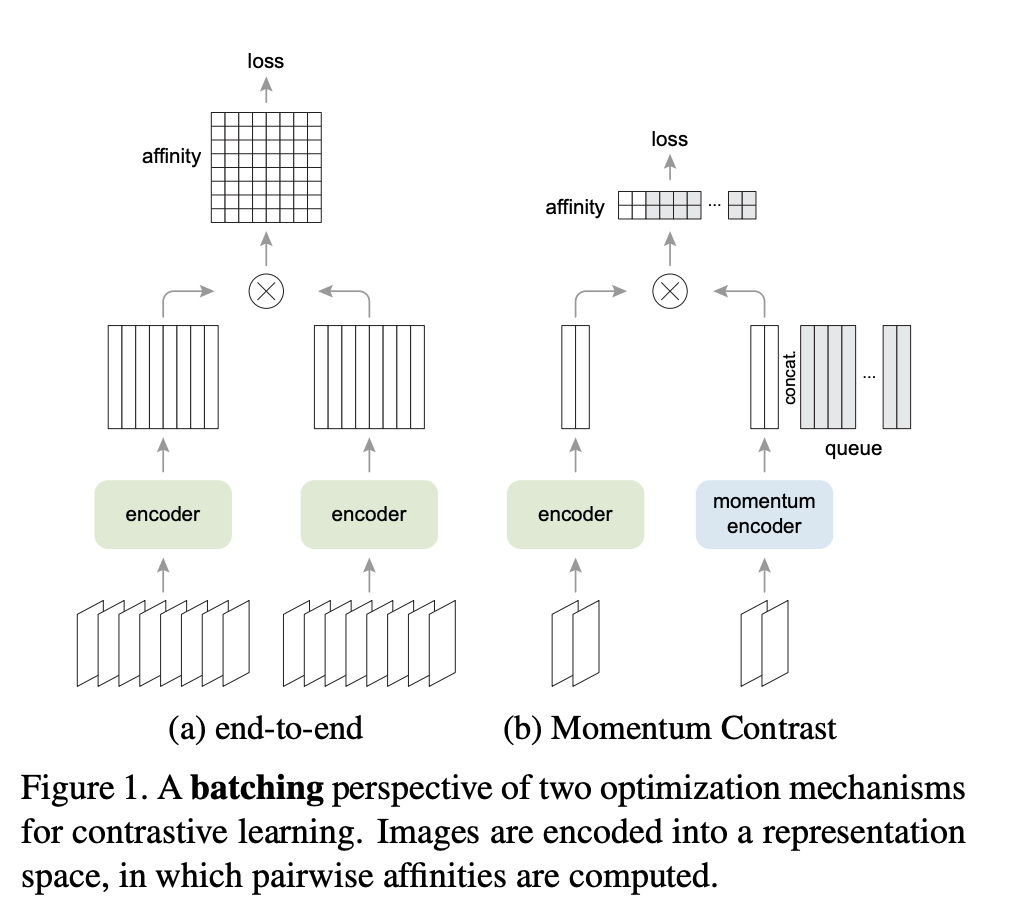

Introduced by Chen et al. in Improved Baselines with Momentum Contrastive Learning

MoCo v2 is an improved version of the Momentum Contrast self-supervised learning algorithm. Motivated by the findings presented in the SimCLR paper, the authors:

- Replace the 1-layer fully connected layer with a 2-layer MLP head with ReLU for the unsupervised training stage.

- Include blur augmentation.

- Use a cosine learning rate schedule.

These modifications enable MoCo to outperform the state-of-the-art SimCLR with a smaller batch size and fewer epochs.
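The three modifications listed above can be illustrated with a minimal, dependency-free sketch. It is not the authors' implementation: the function names (`mlp_head`, `gaussian_kernel`, `random_blur_row`, `cosine_lr`) are hypothetical, the blur probability of 0.5 and the sigma range of (0.1, 2.0) are assumed defaults that this page does not state, and the blur operates on a single row of pixel values rather than a full image to keep the example short.

```python
import math
import random


def mlp_head(x, w1, b1, w2, b2):
    """2-layer MLP projection head: linear -> ReLU -> linear."""
    hidden = [max(0.0, sum(w * v for w, v in zip(row, x)) + b)
              for row, b in zip(w1, b1)]
    return [sum(w * h for w, h in zip(row, hidden)) + b
            for row, b in zip(w2, b2)]


def gaussian_kernel(sigma, radius):
    """Normalized 1-D Gaussian weights of length 2 * radius + 1."""
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def random_blur_row(row, rng, p=0.5, sigma_range=(0.1, 2.0), radius=2):
    """With probability p, blur one row of pixel values; otherwise copy it.

    p and sigma_range are assumed defaults, not values taken from this page.
    """
    if rng.random() >= p:
        return list(row)
    sigma = rng.uniform(*sigma_range)
    kernel = gaussian_kernel(sigma, radius)
    n = len(row)
    out = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = min(max(i + k - radius, 0), n - 1)  # clamp at the borders
            acc += w * row[j]
        out.append(acc)
    return out


def cosine_lr(base_lr, epoch, total_epochs):
    """Cosine schedule: base_lr at epoch 0, decaying to 0 at total_epochs."""
    return base_lr * 0.5 * (1.0 + math.cos(math.pi * epoch / total_epochs))
```

As a usage example, `cosine_lr(0.03, epoch, 200)` would be called once per epoch to set the optimizer's learning rate, and `random_blur_row(row, random.Random(0))` would be applied to each view before encoding. The base learning rate and epoch count here are illustrative values only.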

Source: Improved Baselines with Momentum Contrastive Learning

Tasks

| Task | Papers | Share |
|---|---|---|
| Self-Supervised Learning | 16 | 22.54% |
| Image Classification | 7 | 9.86% |
| Semantic Segmentation | 6 | 8.45% |
| Object Detection | 5 | 7.04% |
| Classification | 3 | 4.23% |
| Language Modelling | 2 | 2.82% |
| Instance Segmentation | 2 | 2.82% |
| Self-Supervised Image Classification | 2 | 2.82% |
| Clustering | 2 | 2.82% |

Components

| Component | Type |
|---|---|
| Feedforward Network | Feedforward Networks |
| InfoNCE | Loss Functions |
| Random Gaussian Blur | Image Data Augmentation |