Point Cloud Models

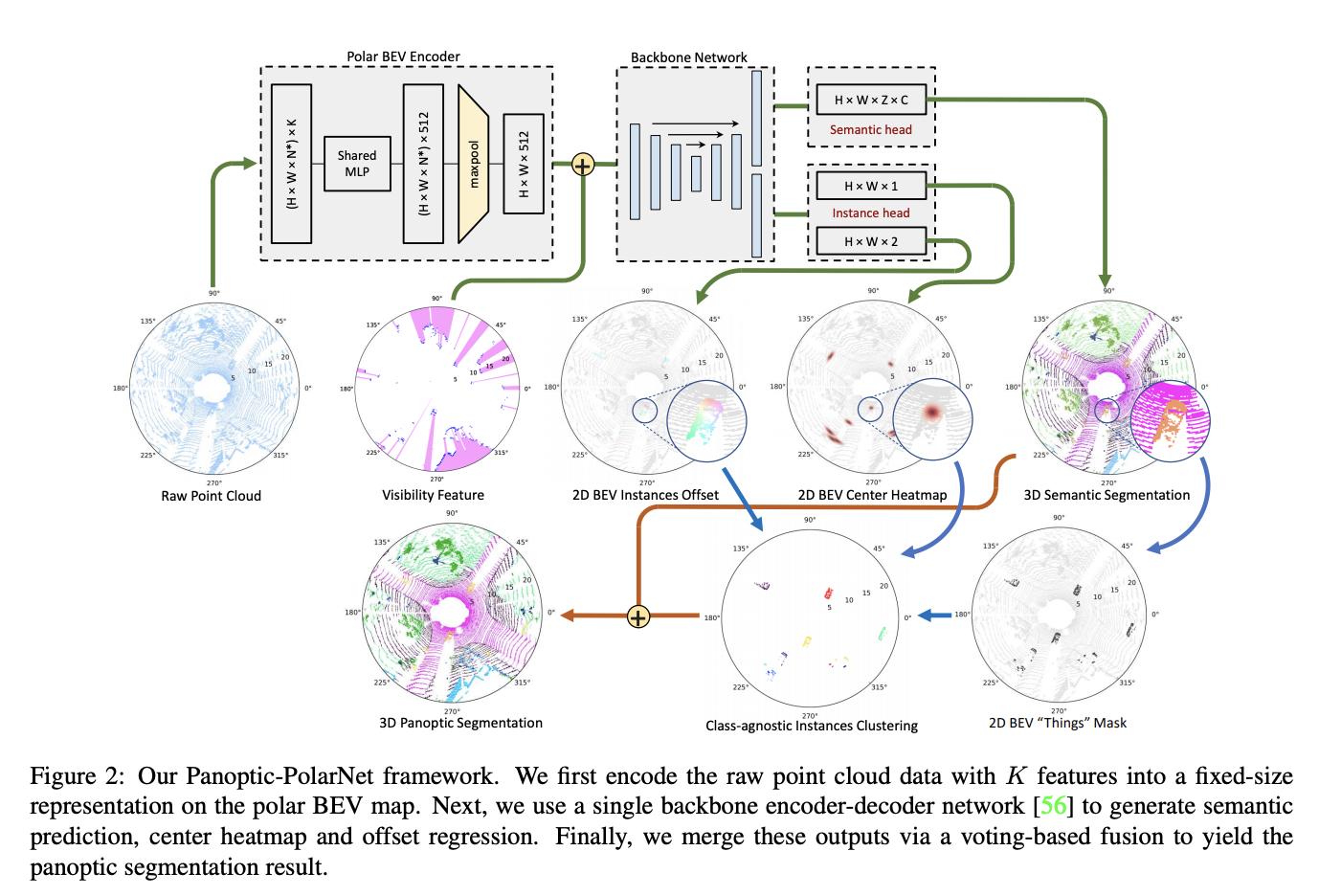

Panoptic-PolarNet

Introduced by Zhou et al. in Panoptic-PolarNet: Proposal-free LiDAR Point Cloud Panoptic Segmentation

Panoptic-PolarNet is a segmentation framework for LiDAR point clouds. It learns both semantic segmentation and class-agnostic instance clustering in a single inference network using a polar Bird's Eye View (BEV) representation, which lets it circumvent the issue of occlusion among instances in urban street scenes. The method first encodes the raw point cloud data with $K$ features into a fixed-size representation on the polar BEV map. Next, a single backbone encoder-decoder network generates the semantic prediction, center heatmap, and offset regression. Finally, these outputs are merged via a voting-based fusion to yield the panoptic segmentation result.
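The first and last steps of this pipeline can be illustrated with a minimal sketch. It is not the authors' implementation: the function names `polar_bev_index` and `fuse_by_majority_vote`, the grid size, the maximum range `r_max`, and the convention that instance id `0` means "no instance" are all assumptions chosen for illustration. The learned backbone is omitted, and the fusion is shown as a simple per-instance majority vote over semantic labels, which is one plausible reading of "voting-based fusion".

```python
import math
from collections import Counter


def polar_bev_index(x, y, r_max=50.0, n_r=480, n_theta=360):
    """Map a Cartesian (x, y) point to a (radius_bin, angle_bin) polar BEV cell.

    r_max, n_r and n_theta are illustrative values, not taken from the source.
    """
    r = math.hypot(x, y)
    theta = math.atan2(y, x)  # angle in [-pi, pi]
    # Points beyond r_max are clamped into the outermost ring.
    r_bin = min(int(r / r_max * n_r), n_r - 1)
    # Shift the angle to [0, 2*pi] and wrap so that theta == pi lands in bin 0.
    t_bin = int((theta + math.pi) / (2 * math.pi) * n_theta) % n_theta
    return r_bin, t_bin


def fuse_by_majority_vote(semantic_labels, instance_ids):
    """Give every point of an instance the most frequent semantic label in it.

    instance_ids uses 0 for points that belong to no instance (assumed
    convention); those points keep their own semantic label.
    Returns a list of (semantic_label, instance_id) pairs, one per point.
    """
    votes = {}
    for sem, inst in zip(semantic_labels, instance_ids):
        if inst != 0:
            votes.setdefault(inst, Counter())[sem] += 1
    winner = {inst: c.most_common(1)[0][0] for inst, c in votes.items()}
    return [
        (winner[inst], inst) if inst != 0 else (sem, 0)
        for sem, inst in zip(semantic_labels, instance_ids)
    ]


if __name__ == "__main__":
    # A point 3 m ahead and 3 m to the side, on a coarse 5 x 4 grid.
    print(polar_bev_index(3.0, 3.0, r_max=50.0, n_r=5, n_theta=4))
    # Three points grouped into instance 1, two background points.
    print(fuse_by_majority_vote([1, 1, 2, 9, 9], [1, 1, 1, 0, 0]))
```

Binning by radius and angle rather than by Cartesian x and y follows the polar BEV idea described above: cells near the sensor cover a smaller area than cells far away.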

Source: Panoptic-PolarNet: Proposal-free LiDAR Point Cloud Panoptic Segmentation

Tasks

| Task | Papers | Share |
|---|---|---|
| Clustering | 1 | 25.00% |
| Instance Segmentation | 1 | 25.00% |
| Panoptic Segmentation | 1 | 25.00% |
| Semantic Segmentation | 1 | 25.00% |
