Distributed Methods

PyTorch DDP

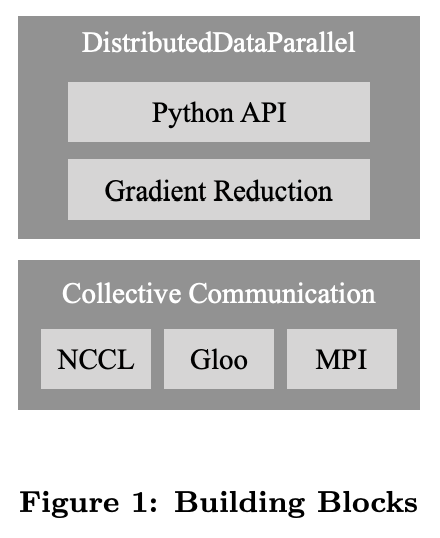

Introduced by Li et al. in PyTorch Distributed: Experiences on Accelerating Data Parallel Training

PyTorch DDP (Distributed Data Parallel) is a distributed data parallel implementation for PyTorch. To guarantee mathematical equivalence, all replicas start from the same initial model parameters and synchronize gradients so that parameters stay consistent across training iterations. To minimize intrusiveness, the implementation exposes the same forward API as the user model, so applications can replace subsequent occurrences of a user model with the distributed data parallel model object without additional code changes. Several techniques are integrated into the design to deliver high-performance training, including bucketing gradients, overlapping communication with computation, and skipping synchronization.
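As a rough illustration of the wrapper API described above, the following sketch wraps a toy model in `DistributedDataParallel`, sets the gradient bucket size through `bucket_cap_mb`, and uses `no_sync()` to skip synchronization on the first of two micro-batches. Several things here are assumptions made only to keep the example self-contained: the function name `run_single_process_demo`, the tiny `nn.Linear` model, the random data, and the single-process `gloo` group with `world_size=1` (real training would normally start one process per device, for example with `torchrun`). With one process, the all-reduce average leaves gradients unchanged, so the sketch can compare them against an unwrapped copy of the model.

```python
import copy
import os
import tempfile

import torch
import torch.distributed as dist
import torch.nn as nn
from torch.nn.parallel import DistributedDataParallel as DDP


def run_single_process_demo():
    """Hypothetical demo: one CPU process standing in for a full DDP job."""
    with tempfile.TemporaryDirectory() as tmp:
        # File-based rendezvous avoids picking a network port (assumption
        # made for this sketch; env:// initialization is more common).
        init_file = os.path.join(tmp, "rendezvous")
        dist.init_process_group(
            backend="gloo",
            init_method=f"file://{init_file}",
            rank=0,
            world_size=1,
        )
        try:
            torch.manual_seed(0)
            model = nn.Linear(4, 2)
            reference = copy.deepcopy(model)  # plain, unwrapped copy

            # The wrapper exposes the same forward API as the user model.
            # bucket_cap_mb controls the gradient bucket size in MB.
            ddp_model = DDP(model, bucket_cap_mb=25)
            optimizer = torch.optim.SGD(ddp_model.parameters(), lr=0.1)
            loss_fn = nn.MSELoss()

            x1, y1 = torch.randn(8, 4), torch.randn(8, 2)
            x2, y2 = torch.randn(8, 4), torch.randn(8, 2)

            # First micro-batch: accumulate gradients locally, no all-reduce.
            with ddp_model.no_sync():
                loss_fn(ddp_model(x1), y1).backward()
            # Second micro-batch: gradients are synchronized during backward.
            loss_fn(ddp_model(x2), y2).backward()

            # Same two micro-batches on the unwrapped copy.
            loss_fn(reference(x1), y1).backward()
            loss_fn(reference(x2), y2).backward()

            max_diff = max(
                (p.grad - q.grad).abs().max().item()
                for p, q in zip(model.parameters(), reference.parameters())
            )
            optimizer.step()
            optimizer.zero_grad()
            return max_diff
        finally:
            dist.destroy_process_group()


if __name__ == "__main__":
    print("max gradient difference vs. local model:", run_single_process_demo())
```

In a multi-process job, each rank would run the same code on its own shard of the data, and the gradients seen by the optimizer would be the average across ranks rather than the local values.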

Source: PyTorch Distributed: Experiences on Accelerating Data Parallel Training
