Activation Functions

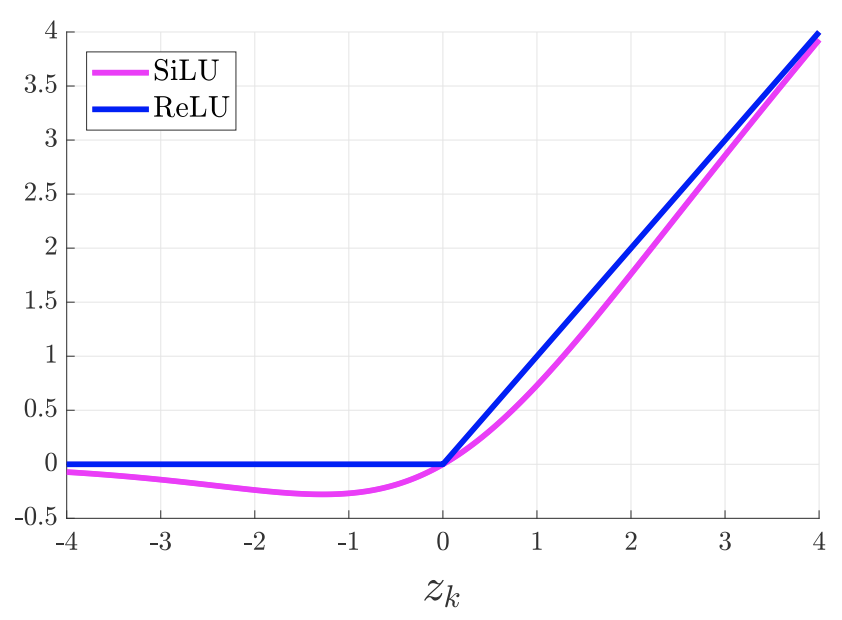

Sigmoid Linear Unit

Introduced by Elfwing et al. in Sigmoid-Weighted Linear Units for Neural Network Function Approximation in Reinforcement Learning

Sigmoid Linear Units, or SiLUs, are activation functions for neural networks. The SiLU activation is the input multiplied by the sigmoid of the input, $$ x\sigma(x), $$ where $\sigma(x) = 1/(1 + e^{-x})$ is the logistic sigmoid.
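As a minimal sketch of the formula above, the SiLU can be written in a few lines of plain Python. The function names `sigmoid` and `silu` are illustrative choices, not taken from the paper, and the branch on the sign of `x` is an assumed implementation detail added only to avoid floating-point overflow in `math.exp`.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid 1 / (1 + exp(-x)), evaluated without overflow."""
    if x >= 0:
        # exp(-x) is at most 1 here, so it cannot overflow.
        return 1.0 / (1.0 + math.exp(-x))
    # For negative x, use the equivalent form exp(x) / (1 + exp(x)).
    z = math.exp(x)
    return z / (1.0 + z)


def silu(x: float) -> float:
    """SiLU activation: the input multiplied by its sigmoid, x * sigmoid(x)."""
    return x * sigmoid(x)


if __name__ == "__main__":
    for value in (-4.0, -1.0, 0.0, 1.0, 4.0):
        print(f"silu({value:+.1f}) = {silu(value):+.6f}")
```

For large positive inputs the sigmoid approaches 1 and the output approaches the input itself; for large negative inputs the sigmoid approaches 0 and the output approaches 0.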

The term SiLU was originally coined in Gaussian Error Linear Units (GELUs). The function was later experimented with in Sigmoid-Weighted Linear Units for Neural Network Function Approximation in Reinforcement Learning and in Swish: a Self-Gated Activation Function.

Source: Sigmoid-Weighted Linear Units for Neural Network Function Approximation in Reinforcement Learning

Tasks

| Task | Papers | Share |
|---|---|---|
| Image Classification | 6 | 30.00% |
| Activation Function Synthesis | 2 | 10.00% |
| Learning Theory | 2 | 10.00% |
| Instance Segmentation | 2 | 10.00% |
| Object Detection | 2 | 10.00% |
| Quantization | 1 | 5.00% |
| Graph Attention | 1 | 5.00% |
| Autonomous Driving | 1 | 5.00% |
| Semantic Segmentation | 1 | 5.00% |
