Attention Mechanisms

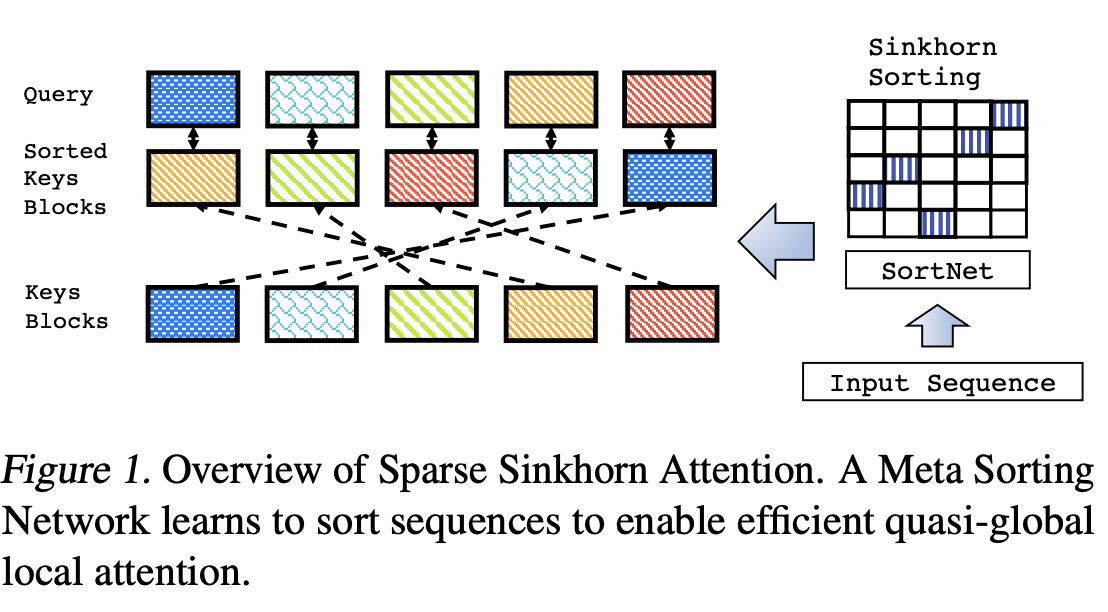

Sparse Sinkhorn Attention

Introduced by Tay et al. in Sparse Sinkhorn Attention

Sparse Sinkhorn Attention (SSA) is an attention mechanism that reduces the memory complexity of dot-product attention and is capable of learning sparse attention outputs. It is based on the idea of differentiable sorting of internal representations within the self-attention module. SSA incorporates a meta sorting network that learns to rearrange and sort the input sequence. Sinkhorn normalization, which alternately normalizes the rows and columns of the sorting matrix, turns the network's scores into a differentiable relaxation of a permutation matrix. The SSA attention mechanism then operates on the block-sorted sequence.
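The differentiable block sorting described above can be sketched in NumPy. This is a minimal, single-head illustration under stated assumptions, not the authors' implementation. The function names (`sinkhorn`, `sparse_sinkhorn_attention`) and the weight `w_sort` are hypothetical. Block summaries are assumed to come from sum pooling, and a single linear layer stands in for the meta sorting network. Multiple heads and causal masking are omitted. Each query block attends only to the key/value block that the soft permutation routes to it.

```python
import numpy as np


def logsumexp(x: np.ndarray, axis: int) -> np.ndarray:
    """Numerically stable log-sum-exp that keeps the reduced axis for broadcasting."""
    m = np.max(x, axis=axis, keepdims=True)
    return m + np.log(np.sum(np.exp(x - m), axis=axis, keepdims=True))


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax along one axis."""
    e = np.exp(x - np.max(x, axis=axis, keepdims=True))
    return e / np.sum(e, axis=axis, keepdims=True)


def sinkhorn(log_scores: np.ndarray, n_iters: int = 20) -> np.ndarray:
    """Sinkhorn normalization in log space: alternately normalize rows and
    columns so that exp(result) approaches a doubly stochastic matrix."""
    log_p = log_scores.astype(float)  # copy, so the input is not modified
    for _ in range(n_iters):
        log_p = log_p - logsumexp(log_p, axis=1)  # rows sum to 1
        log_p = log_p - logsumexp(log_p, axis=0)  # columns sum to 1
    return np.exp(log_p)


def sparse_sinkhorn_attention(x, w_q, w_k, w_v, w_sort, block_size, n_iters=20):
    """Simplified single-head sketch (hypothetical helper): soft-sort key/value
    blocks, then let each query block attend only to the block routed to it."""
    seq_len, d_model = x.shape
    if seq_len % block_size != 0:
        raise ValueError("seq_len must be a multiple of block_size")
    n_blocks = seq_len // block_size

    q, k, v = x @ w_q, x @ w_k, x @ w_v

    # Assumption: block summaries by sum pooling, scored by one linear layer
    # (w_sort has shape (d_model, n_blocks)) standing in for the sorting network.
    block_summary = x.reshape(n_blocks, block_size, d_model).sum(axis=1)
    perm = sinkhorn(block_summary @ w_sort, n_iters)  # (n_blocks, n_blocks)

    # Split into blocks: (n_blocks, block_size, d_head).
    q_b = q.reshape(n_blocks, block_size, -1)
    k_b = k.reshape(n_blocks, block_size, -1)
    v_b = v.reshape(n_blocks, block_size, -1)

    # Soft block sorting: block i becomes a perm[i]-weighted mix of all blocks.
    k_sorted = np.einsum("ij,jbd->ibd", perm, k_b)
    v_sorted = np.einsum("ij,jbd->ibd", perm, v_b)

    # Block-local attention against the sorted keys/values: each score matrix
    # is (block_size, block_size) instead of (seq_len, seq_len).
    scores = np.einsum("ibd,icd->ibc", q_b, k_sorted) / np.sqrt(q.shape[-1])
    out = np.einsum("ibc,icd->ibd", softmax(scores, axis=-1), v_sorted)
    return out.reshape(seq_len, -1), perm


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    d = 16
    x = rng.normal(size=(8, d))
    w_q, w_k, w_v = (rng.normal(size=(d, d)) * 0.1 for _ in range(3))
    w_sort = rng.normal(size=(d, 4)) * 0.1  # 8 tokens / block_size 2 = 4 blocks
    out, perm = sparse_sinkhorn_attention(x, w_q, w_k, w_v, w_sort, block_size=2)
    print(out.shape)
    print(perm.sum(axis=0), perm.sum(axis=1))
```

In this sketch each attention score matrix is `block_size × block_size`, so the layer materializes `n_blocks` such matrices plus one `n_blocks × n_blocks` sorting matrix instead of a single `seq_len × seq_len` matrix. That is where its memory saving over full dot-product attention comes from.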

Source: Sparse Sinkhorn Attention

Tasks

| Task | Papers | Share |
|---|---|---|
| 3D Human Pose Estimation | 1 | 12.50% |
| 3D Multi-Person Pose Estimation | 1 | 12.50% |
| 3D Pose Estimation | 1 | 12.50% |
| Pose Estimation | 1 | 12.50% |
| Document Classification | 1 | 12.50% |
| Image Generation | 1 | 12.50% |
| Language Modelling | 1 | 12.50% |
| Natural Language Inference | 1 | 12.50% |

Components

| Component | Type |
|---|---|
| Feedforward Network | Feedforward Networks |
| ReLU | Activation Functions |
| Softmax | Output Functions |