Sparse Switchable Normalization

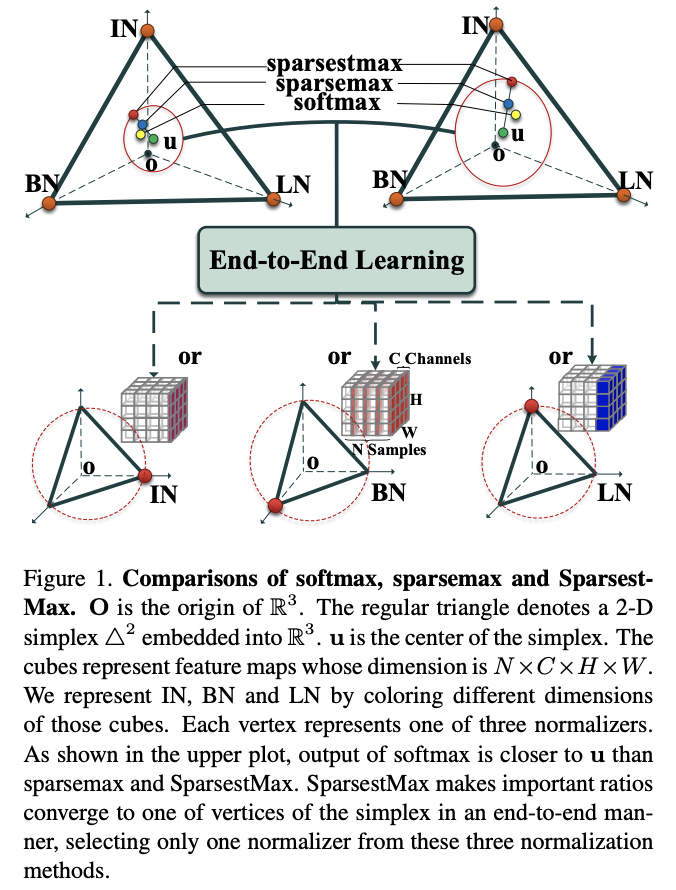

Introduced by Shao et al. in SSN: Learning Sparse Switchable Normalization via SparsestMax

Sparse Switchable Normalization (SSN) is a variant of Switchable Normalization in which the importance ratios are constrained to be sparse. Unlike $\ell_1$ and $\ell_0$ constraints, which make optimization difficult, SSN turns the constrained optimization problem into a feed-forward computation through SparsestMax, a sparse version of softmax.
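To illustrate what sparse importance ratios look like, here is a minimal sketch that compares softmax with plain sparsemax, the output function listed under Components. It is not the paper's method: sparsemax is used only as a stand-in, and the control parameters, per-normalizer means, and the helper `mix_statistics` are hypothetical names and values chosen for the example.

```python
import math


def softmax(z):
    """Dense ratios: every entry is strictly positive."""
    m = max(z)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def sparsemax(z):
    """Euclidean projection of z onto the probability simplex.

    Entries below the threshold tau become exactly zero.
    """
    z_sorted = sorted(z, reverse=True)
    cumsum = 0.0
    tau = 0.0
    for j, v in enumerate(z_sorted, start=1):
        cumsum += v
        # Entry j stays in the support while this condition holds.
        if 1.0 + j * v > cumsum:
            tau = (cumsum - 1.0) / j
    return [max(v - tau, 0.0) for v in z]


def mix_statistics(ratios, stats):
    """Weighted sum of per-normalizer statistics (hypothetical helper)."""
    return sum(r * s for r, s in zip(ratios, stats))


# Hypothetical control parameters and means for BN, IN, and LN.
logits = [2.0, 0.5, -1.0]
means = [0.1, 0.4, -0.2]

dense = softmax(logits)      # all three normalizers contribute
sparse = sparsemax(logits)   # only the first normalizer is selected

print("softmax ratios:  ", dense)
print("sparsemax ratios:", sparse)
print("mixed mean (sparse):", mix_statistics(sparse, means))
```

With dense ratios, the statistics of all three normalizers must be computed and blended. With sparse ratios, a normalizer whose ratio is zero drops out of the weighted sum entirely.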

Source: SSN: Learning Sparse Switchable Normalization via SparsestMax

Components

| Component | Type |
|---|---|
| Batch Normalization | Normalization |
| Instance Normalization | Normalization |
| Layer Normalization | Normalization |
| Sparsemax | Output Functions |