Activation Functions

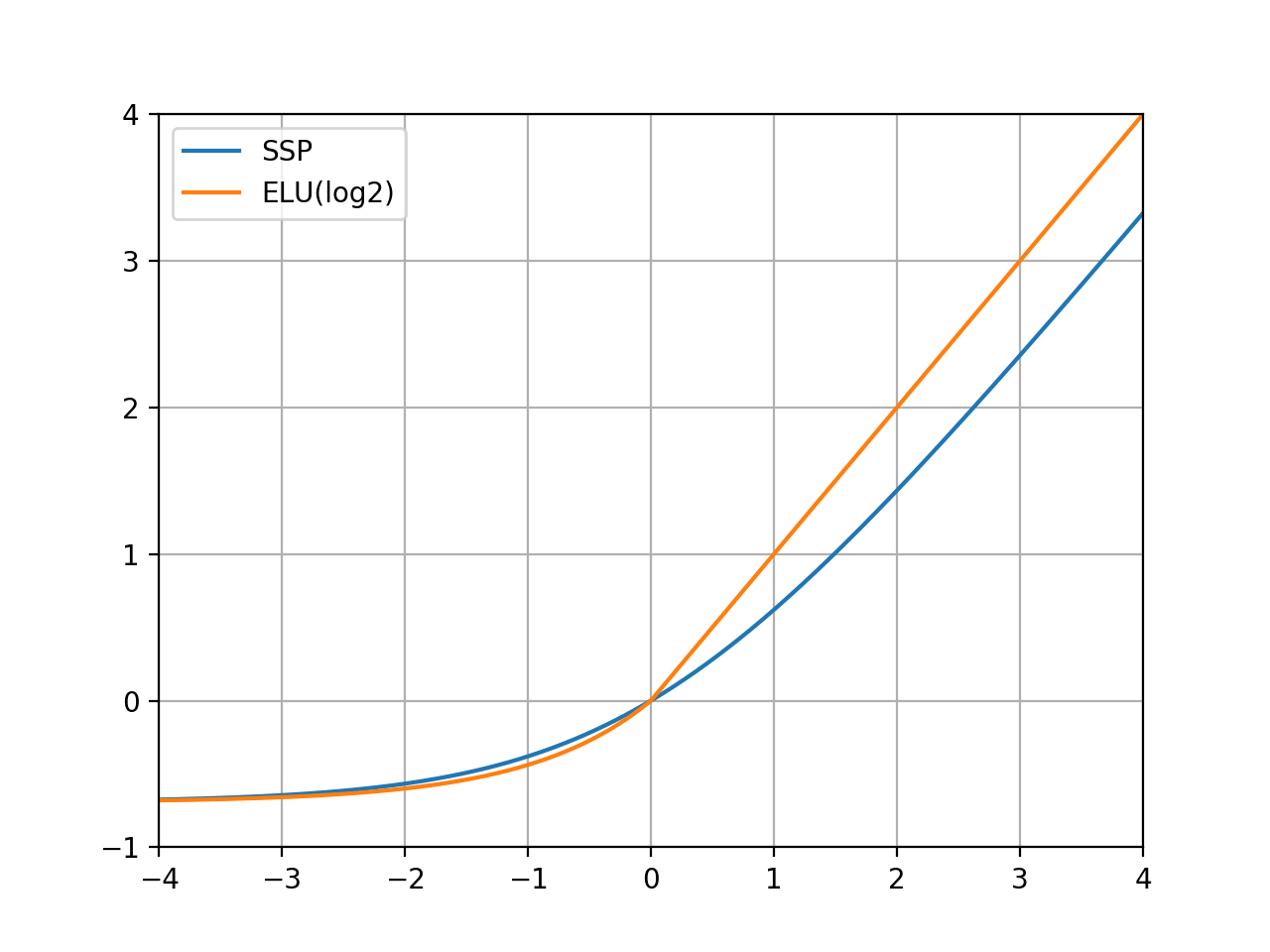

Shifted Softplus

Introduced by Schütt et al. in SchNet: A continuous-filter convolutional neural network for modeling quantum interactions

Shifted Softplus is an activation function defined as ${\rm ssp}(x) = \ln( 0.5 e^{x} + 0.5 )$. Equivalently, ${\rm ssp}(x) = \ln(1 + e^{x}) - \ln 2$, i.e. the softplus function shifted down by $\ln 2$. SchNet uses it as the non-linearity throughout the network in order to obtain a smooth potential energy surface. The shift ensures that ${\rm ssp}(0) = 0$, which, according to the authors, improves the convergence of the network. The function resembles the ELU, but unlike the ELU it is infinitely differentiable.
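As an illustrative sketch (not SchNet's reference implementation), the formula can be evaluated in plain Python. It relies on the identity ${\rm ssp}(x) = {\rm softplus}(x) - \ln 2$ and the standard overflow-safe rewriting of softplus. The function names and the ELU helper (with $\alpha = 1$ by default) are hypothetical choices made only for this example.

```python
import math

LN2 = math.log(2.0)


def softplus(x: float) -> float:
    """Numerically stable softplus, ln(1 + e^x)."""
    # max(x, 0) + log1p(e^{-|x|}) only ever exponentiates a non-positive
    # number, so it cannot overflow for large positive x.
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def shifted_softplus(x: float) -> float:
    """ssp(x) = ln(0.5 e^x + 0.5), computed as softplus(x) - ln 2."""
    return softplus(x) - LN2


def elu(x: float, alpha: float = 1.0) -> float:
    """ELU, included only to compare its shape with ssp."""
    return x if x > 0 else alpha * math.expm1(x)


if __name__ == "__main__":
    # Both functions are close to linear for large x and saturate for very
    # negative x: ssp approaches -ln 2 (about -0.693), ELU approaches -alpha.
    for x in (-10.0, -1.0, 0.0, 1.0, 10.0):
        print(f"x={x:6.1f}  ssp={shifted_softplus(x):9.5f}  elu={elu(x):9.5f}")
```

Writing the function as softplus minus $\ln 2$ rather than evaluating $\ln(0.5 e^{x} + 0.5)$ literally matters for large inputs: `math.exp(x)` raises `OverflowError` once $x$ exceeds roughly 709, whereas the rewritten form simply returns approximately $x - \ln 2$.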

Source: SchNet: A continuous-filter convolutional neural network for modeling quantum interactions

Tasks

| Task | Papers | Share |
|---|---|---|
| Formation Energy | 3 | 27.27% |
| Property Prediction | 2 | 18.18% |
| BIG-bench Machine Learning | 2 | 18.18% |
| Robust Design | 1 | 9.09% |
| 3D Pose Estimation | 1 | 9.09% |
| Drug Discovery | 1 | 9.09% |
| Total Energy | 1 | 9.09% |
