ReFusion: 3D Reconstruction in Dynamic Environments for RGB-D Cameras Exploiting Residuals

Mapping and localization are essential capabilities of robotic systems. Although the majority of mapping systems focus on static environments, deployment in real-world situations requires them to handle dynamic objects. In this paper, we propose an approach for an RGB-D sensor that is able to consistently map scenes containing multiple dynamic elements. For localization and mapping, we employ efficient direct tracking on the truncated signed distance function (TSDF) and leverage color information encoded in the TSDF to estimate the pose of the sensor. The TSDF is efficiently represented using voxel hashing, with most computations parallelized on a GPU. For detecting dynamics, we exploit the residuals obtained after an initial registration, together with the explicit modeling of free space in the model. We evaluate our approach on existing datasets and provide a new dataset showing highly dynamic scenes. These experiments show that our approach often surpasses other state-of-the-art dense SLAM methods. We make our dataset available together with ground truth for both the trajectory of the RGB-D sensor, obtained by a motion capture system, and the model of the static environment, obtained using a high-precision terrestrial laser scanner. Finally, we release our approach as open source code.
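
To make the idea of residual-based dynamics detection concrete, the following is a minimal one-dimensional sketch and not the released implementation. It fuses depth readings along a single camera ray into a sparse, dictionary-backed TSDF (a stand-in for voxel hashing), marks the voxels in front of the surface as free space, and then flags a new reading as dynamic when the model's TSDF value at that reading is far from zero. The constants `VOXEL`, `TRUNC`, and the threshold, as well as the function names `integrate`, `residual`, and `is_dynamic`, are assumptions chosen for illustration.

```python
import math
from typing import Dict, Optional, Tuple

VOXEL = 0.05  # voxel size in metres (assumed value)
TRUNC = 0.15  # truncation distance in metres (assumed value)

# Sparse grid along one ray: voxel index -> (tsdf value, weight).
# A dict is used here as a simple stand-in for voxel hashing.
Grid = Dict[int, Tuple[float, float]]


def integrate(grid: Grid, depth: float) -> None:
    """Fuse one depth reading along the ray with a weighted running average."""
    last = int(math.floor((depth + TRUNC) / VOXEL))
    for i in range(last + 1):
        center = (i + 0.5) * VOXEL
        sdf = depth - center
        # Voxels well in front of the surface saturate at +1 (free space).
        value = max(-1.0, min(1.0, sdf / TRUNC))
        old, weight = grid.get(i, (0.0, 0.0))
        grid[i] = ((old * weight + value) / (weight + 1.0), weight + 1.0)


def residual(grid: Grid, depth: float) -> Optional[float]:
    """Linearly interpolated model TSDF at the measured depth.

    Returns None if the neighbouring voxels were never observed.
    """
    x = depth / VOXEL - 0.5  # position in voxel-center coordinates
    i0 = int(math.floor(x))
    if i0 not in grid or (i0 + 1) not in grid:
        return None
    t = x - i0
    return (1.0 - t) * grid[i0][0] + t * grid[i0 + 1][0]


def is_dynamic(grid: Grid, depth: float, threshold: float = 0.5) -> bool:
    """Flag a reading whose residual against the model exceeds the threshold."""
    r = residual(grid, depth)
    return r is not None and abs(r) > threshold


if __name__ == "__main__":
    grid: Grid = {}
    for _ in range(5):
        integrate(grid, 2.0)      # static wall observed at 2 m
    print(is_dynamic(grid, 2.0))  # reading on the wall
    print(is_dynamic(grid, 1.0))  # reading inside known free space
```

In this toy setting, a reading that lands on the previously mapped surface has a residual close to zero, while a reading that falls inside space already observed as free has a residual close to one. A full system would compute such residuals per pixel after registering the frame against the model, which this sketch does not attempt.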

Bonn RGB-D Dynamic

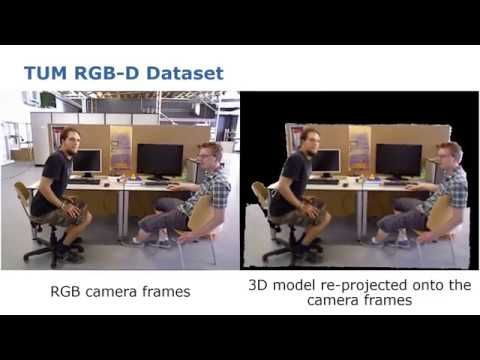

TUM RGB-D
