3D-LaneNet: End-to-End 3D Multiple Lane Detection

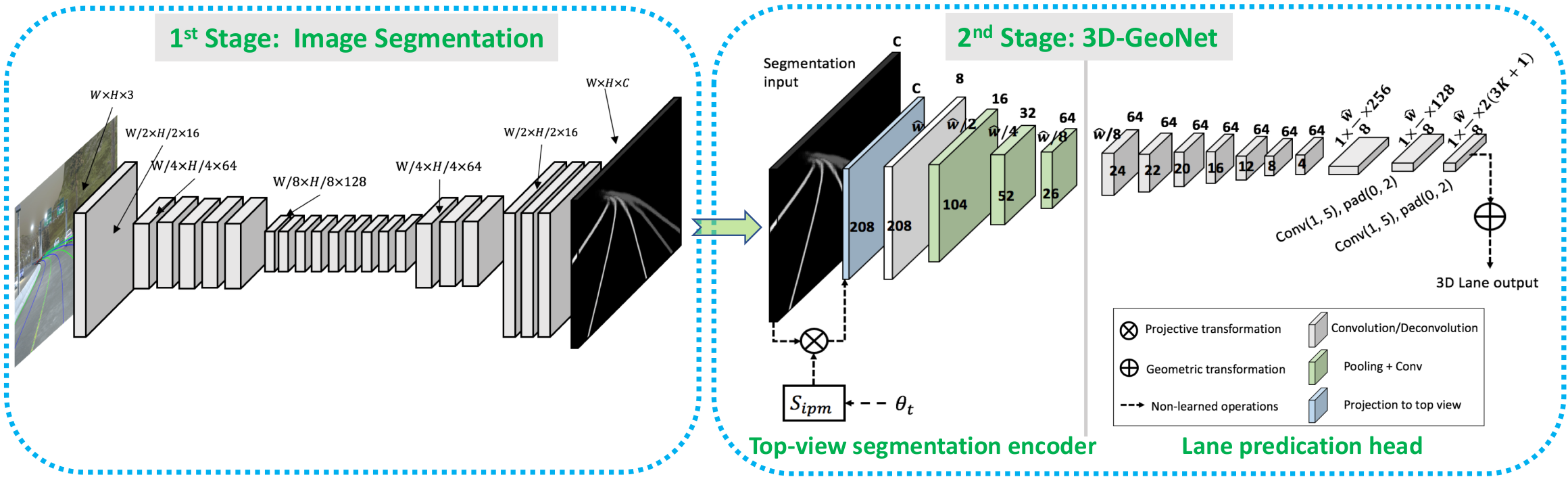

We introduce a network that directly predicts the 3D layout of lanes in a road scene from a single image. This work marks a first attempt to address this task with on-board sensing, without assuming a known constant lane width or relying on pre-mapped environments. Our network architecture, 3D-LaneNet, applies two new concepts: intra-network inverse-perspective mapping (IPM) and anchor-based lane representation. The intra-network IPM projection facilitates a dual-representation information flow in both regular image-view and top-view. An anchor-per-column output representation enables our end-to-end approach, which replaces common heuristics such as clustering and outlier rejection by casting lane estimation as an object detection problem. In addition, our approach explicitly handles complex situations such as lane merges and splits. Results are shown on two new 3D lane datasets, one synthetic and one real. For comparison with existing methods, we test our approach on the image-only tuSimple lane detection benchmark, achieving performance competitive with state-of-the-art.
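To make the inverse-perspective mapping idea concrete, the following is a minimal geometric sketch, not the authors' implementation. It assumes a flat road, a pinhole camera with known intrinsics `K`, and a known camera height and downward pitch; the abstract does not state how the network parameterizes its projection, and inside a network the mapping would be applied to feature maps rather than to individual points. The function names and the example values are hypothetical.

```python
import numpy as np

def ground_to_image_homography(K, cam_height, pitch):
    """Homography mapping flat-ground points (x right, y forward) to pixels.

    Assumes a world frame with z up and origin on the ground below the camera,
    and a camera frame with x right, y down, z forward. `pitch` is the downward
    tilt of the camera in radians.
    """
    # Axis change from world (x right, y forward, z up) to camera axes.
    R0 = np.array([[1.0, 0.0, 0.0],
                   [0.0, 0.0, -1.0],
                   [0.0, 1.0, 0.0]])
    c, s = np.cos(pitch), np.sin(pitch)
    # Rotation about the camera x axis that tilts the optical axis downward.
    Rx = np.array([[1.0, 0.0, 0.0],
                   [0.0, c, -s],
                   [0.0, s, c]])
    R = Rx @ R0
    t = -R @ np.array([0.0, 0.0, cam_height])
    # Ground points have z = 0, so only the first two rotation columns matter.
    return K @ np.column_stack((R[:, 0], R[:, 1], t))

def apply_homography(H, pts):
    """Apply a 3x3 homography to an (N, 2) array of points."""
    pts = np.asarray(pts, dtype=float)
    homog = np.column_stack((pts, np.ones(len(pts))))
    mapped = homog @ H.T
    return mapped[:, :2] / mapped[:, 2:3]

# Hypothetical camera: 1000 px focal length, 1280x720 image, 1.5 m high.
K = np.array([[1000.0, 0.0, 640.0],
              [0.0, 1000.0, 360.0],
              [0.0, 0.0, 1.0]])
H = ground_to_image_homography(K, cam_height=1.5, pitch=0.05)

# Image view: project lane points lying on the road into pixel coordinates.
lane_on_ground = np.array([[-1.8, 5.0], [-1.8, 20.0], [-1.8, 40.0]])
lane_in_image = apply_homography(H, lane_on_ground)

# Top view (IPM): invert the homography to map pixels back to the road plane.
lane_top_view = apply_homography(np.linalg.inv(H), lane_in_image)
```

Under the flat-road assumption the two views are related by a single invertible 3x3 matrix, which is why a projection of this kind can move information between image-view and top-view representations.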

ICCV 2019

OpenLane