Few-NERD: A Few-Shot Named Entity Recognition Dataset

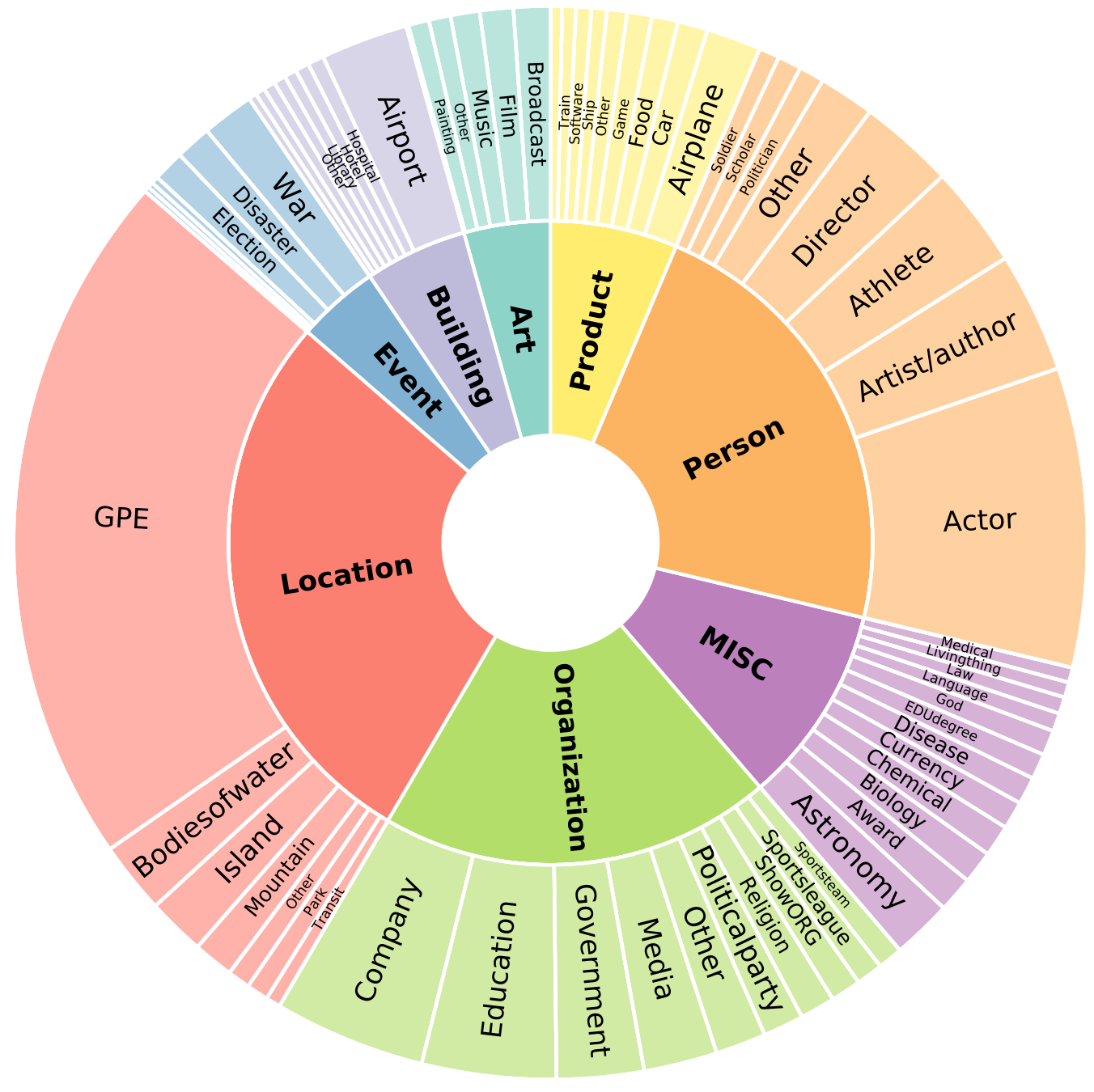

Recently, considerable literature has grown up around the theme of few-shot named entity recognition (NER), but little published benchmark data focuses specifically on this practical and challenging task. Current approaches collect existing supervised NER datasets and reorganize them into the few-shot setting for empirical study. These strategies conventionally aim to recognize coarse-grained entity types with few examples, while in practice most unseen entity types are fine-grained. In this paper, we present Few-NERD, a large-scale human-annotated few-shot NER dataset with a hierarchy of 8 coarse-grained and 66 fine-grained entity types. Few-NERD consists of 188,238 sentences from Wikipedia comprising 4,601,160 words, each annotated as context or as part of a two-level entity type. To the best of our knowledge, this is the first few-shot NER dataset and the largest human-crafted NER dataset. We construct benchmark tasks with different emphases to comprehensively assess the generalization capability of models. Extensive empirical results and analysis show that Few-NERD is challenging and that the problem requires further research. We make Few-NERD public at https://ningding97.github.io/fewnerd/.
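
To make the two-level annotation concrete, here is a minimal sketch of how a per-word label might be split into its coarse-grained and fine-grained parts. The `coarse-fine` label strings (such as `person-actor`), the `O` tag for context words, and the helper name `split_label` are assumptions made for illustration; the abstract does not specify the file format.

```python
from typing import NamedTuple, Optional


class EntityType(NamedTuple):
    coarse: str
    fine: str


def split_label(label: str, context_tag: str = "O") -> Optional[EntityType]:
    """Split a hypothetical 'coarse-fine' label; return None for context words."""
    if label == context_tag:
        return None
    # partition splits on the first hyphen only, so fine names may contain hyphens
    coarse, _, fine = label.partition("-")
    return EntityType(coarse, fine)


# Illustrative sentence; tokens and labels are made up, not taken from the dataset.
tokens = ["Meryl", "Streep", "visited", "Heathrow", "Airport", "."]
labels = ["person-actor", "person-actor", "O",
          "building-airport", "building-airport", "O"]

parsed = [split_label(label) for label in labels]
coarse_types = {p.coarse for p in parsed if p is not None}
```

Keeping both levels in one structure lets the same annotation serve either a coarse-grained or a fine-grained evaluation without re-labeling.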

Published at ACL 2021.

Results from the Paper

| Task | Dataset | Model | Metric Name | Metric Value | Global Rank |
|---|---|---|---|---|---|
| Few-shot NER | Few-NERD (INTER) | NNShot | 5 way 1~2 shot | 47.24±1.00 | #10 |
| Few-shot NER | Few-NERD (INTER) | NNShot | 5 way 5~10 shot | 55.64±0.63 | #11 |
| Few-shot NER | Few-NERD (INTER) | NNShot | 10 way 1~2 shot | 38.87±0.21 | #10 |
| Few-shot NER | Few-NERD (INTER) | NNShot | 10 way 5~10 shot | 49.57±2.73 | #10 |
| Few-shot NER | Few-NERD (INTER) | StructShot | 5 way 1~2 shot | 51.88±0.69 | #9 |
| Few-shot NER | Few-NERD (INTER) | StructShot | 5 way 5~10 shot | 57.32±0.63 | #10 |
| Few-shot NER | Few-NERD (INTER) | StructShot | 10 way 1~2 shot | 43.34±0.10 | #9 |
| Few-shot NER | Few-NERD (INTER) | StructShot | 10 way 5~10 shot | 49.57±3.08 | #10 |
| Few-shot NER | Few-NERD (INTER) | ProtoBERT | 5 way 1~2 shot | 38.83±1.49 | #11 |
| Few-shot NER | Few-NERD (INTER) | ProtoBERT | 5 way 5~10 shot | 58.79±0.44 | #9 |
| Few-shot NER | Few-NERD (INTER) | ProtoBERT | 10 way 1~2 shot | 32.45±0.79 | #11 |
| Few-shot NER | Few-NERD (INTER) | ProtoBERT | 10 way 5~10 shot | 52.92±0.37 | #9 |
| Few-shot NER | Few-NERD (INTRA) | NNShot | 5 way 1~2 shot | 25.78±0.91 | #10 |
| Few-shot NER | Few-NERD (INTRA) | NNShot | 5 way 5~10 shot | 36.18±0.79 | #11 |
| Few-shot NER | Few-NERD (INTRA) | NNShot | 10 way 1~2 shot | 18.27±0.41 | #10 |
| Few-shot NER | Few-NERD (INTRA) | NNShot | 10 way 5~10 shot | 27.38±0.53 | #10 |
| Few-shot NER | Few-NERD (INTRA) | StructShot | 5 way 1~2 shot | 30.21±0.90 | #9 |
| Few-shot NER | Few-NERD (INTRA) | StructShot | 5 way 5~10 shot | 38.00±1.29 | #10 |
| Few-shot NER | Few-NERD (INTRA) | StructShot | 10 way 1~2 shot | 21.03±1.13 | #9 |
| Few-shot NER | Few-NERD (INTRA) | StructShot | 10 way 5~10 shot | 26.42±0.60 | #11 |
| Few-shot NER | Few-NERD (INTRA) | ProtoBERT | 5 way 1~2 shot | 20.76±0.84 | #11 |
| Few-shot NER | Few-NERD (INTRA) | ProtoBERT | 5 way 5~10 shot | 42.54±0.94 | #9 |
| Few-shot NER | Few-NERD (INTRA) | ProtoBERT | 10 way 1~2 shot | 15.05±0.44 | #11 |
| Few-shot NER | Few-NERD (INTRA) | ProtoBERT | 10 way 5~10 shot | 35.40±0.13 | #9 |
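
The metric names in the table follow an "N way K~2K shot" pattern. As a rough sketch, the check below reads that pattern as a support set containing exactly N entity types, each mentioned between K and 2K times. This reading, the function name `is_n_way_k_2k_shot`, and the sample labels are assumptions for illustration and are not stated on this page.

```python
from collections import Counter
from typing import Iterable, List


def is_n_way_k_2k_shot(support: Iterable[List[str]], n: int, k: int) -> bool:
    """Check the assumed constraint: exactly n types, each with k..2k mentions."""
    counts = Counter(t for sentence in support for t in sentence)
    return len(counts) == n and all(k <= c <= 2 * k for c in counts.values())


# Each inner list holds the entity types mentioned in one (made-up) sentence.
support_set = [
    ["person-actor", "art-film"],
    ["art-film"],
    ["location-island"],
]

valid_3_way_1_2_shot = is_n_way_k_2k_shot(support_set, n=3, k=1)
```

A range such as 1~2 rather than an exact count is a natural fit for sentence-level sampling, since one sentence can mention several entity types at once.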
