Bottom-Up Abstractive Summarization

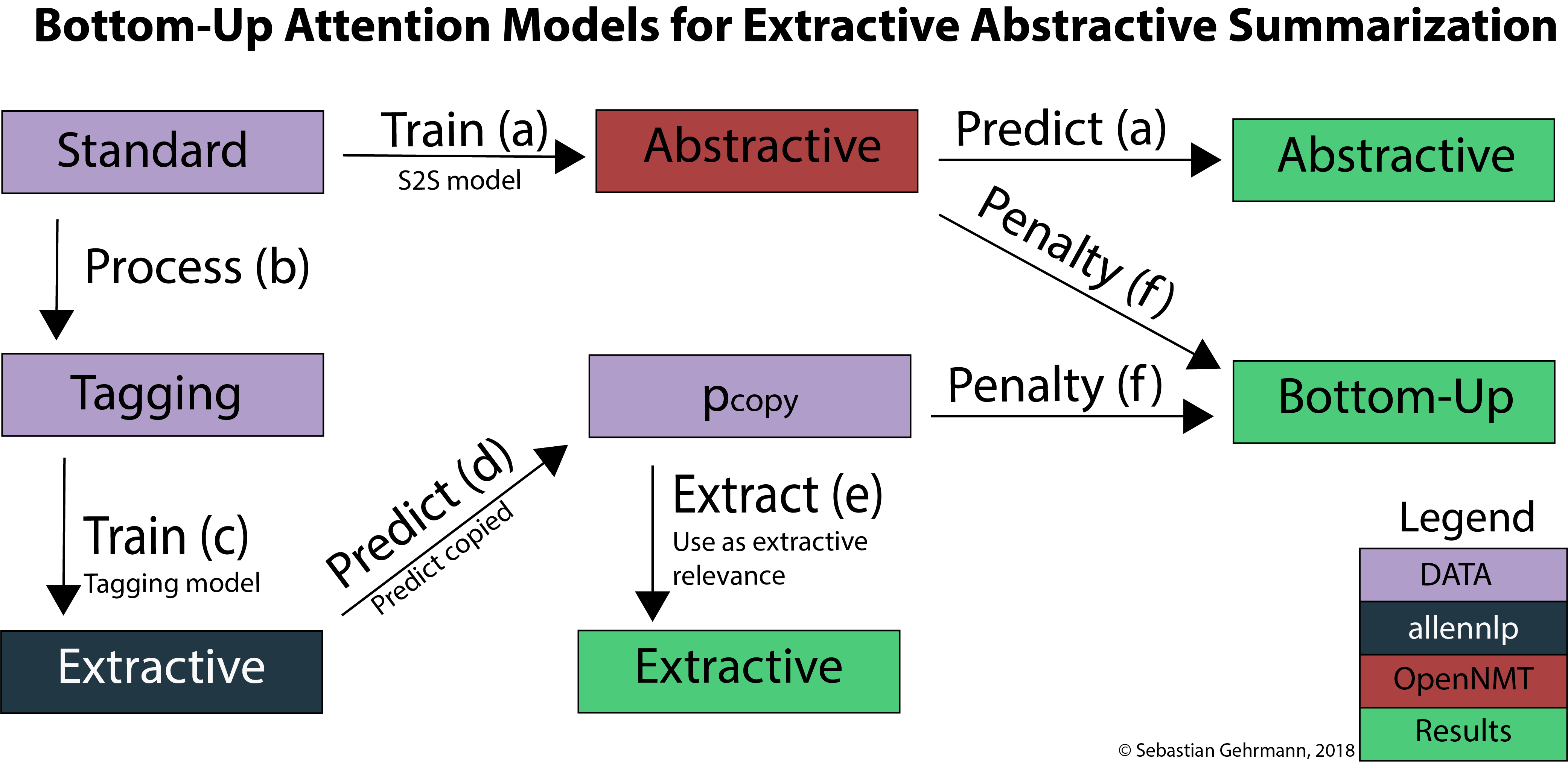

Neural network-based methods for abstractive summarization produce outputs that are more fluent than other techniques, but they can be poor at content selection. This work proposes a simple technique for addressing this issue: use a data-efficient content selector to over-determine phrases in a source document that should be part of the summary. We use this selector as a bottom-up attention step to constrain the model to likely phrases. We show that this approach improves the ability to compress text, while still generating fluent summaries. This two-step process is both simpler and higher performing than other end-to-end content selection models, leading to significant improvements on ROUGE for both the CNN-DM and NYT corpora. Furthermore, the content selector can be trained with as little as 1,000 sentences, making it easy to transfer a trained summarizer to a new domain.
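The bottom-up attention step can be pictured as a mask on the summarizer's copy attention over source tokens. The sketch below is a minimal illustration, assuming the content selector outputs one probability per source token and that tokens scoring below a tunable threshold are excluded from copying. The function name `bottom_up_mask`, the threshold, and the all-masked fallback are hypothetical choices for illustration, not the authors' code.

```python
from typing import List


def bottom_up_mask(copy_attention: List[float],
                   selection_probs: List[float],
                   threshold: float) -> List[float]:
    """Hypothetical sketch of bottom-up attention masking.

    copy_attention:  the summarizer's attention over source tokens (sums to 1).
    selection_probs: assumed per-token probabilities from a content selector.
    threshold:       assumed cut-off; tokens below it cannot be copied.
    """
    if len(copy_attention) != len(selection_probs):
        raise ValueError("need one selection probability per source token")

    # Keep attention only on tokens the selector considers likely summary content.
    masked = [a if q >= threshold else 0.0
              for a, q in zip(copy_attention, selection_probs)]

    total = sum(masked)
    if total == 0.0:
        # Assumed fallback: if every token is masked, leave attention unchanged.
        return list(copy_attention)

    # Renormalize so the surviving attention is again a probability distribution.
    return [m / total for m in masked]
```

For example, with copy attention `[0.4, 0.3, 0.2, 0.1]`, selection probabilities `[0.9, 0.1, 0.6, 0.05]`, and a threshold of `0.5`, only the first and third tokens survive, and their attention is renormalized to roughly `[0.667, 0.0, 0.333, 0.0]`. Because the mask sits on top of the summarizer's attention rather than inside its training objective, the selector can be trained on its own, which fits the two-step design described above.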

EMNLP 2018

CNN/Daily Mail

New York Times Annotated Corpus

Multi-News