A Case Based Reasoning Approach for Answer Reranking in Question Answering

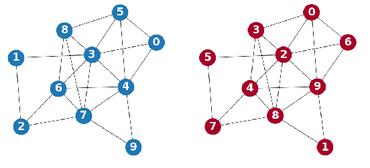

In this paper I present an approach to answer validation and reranking for question answering (QA) systems. A case-based reasoning (CBR) system judges the answer candidates for a question by comparing them with annotated answer candidates for earlier questions. The promise of this approach is that user feedback, by continually growing the case base, will improve the answers of the QA system over time. I show how to structure the case base and how to select the similarity measures relevant to the answer validation problem. The structural case base is built from annotated MultiNet graphs, which represent natural language expressions, together with corresponding graph similarity measures. I also take into account a priori relations to previously seen answer candidates for earlier questions. In an experiment that integrates CBR into an existing framework for answer validation and reranking, I compare the results of the CBR system with those of current approaches. The integration is achieved by adding CBR-related features to the input of a learned ranking model that determines the final answer ranking. In the experiments based on QA@CLEF questions, the best learned models make heavy use of the CBR features. Observing the results as the case base grows continually, I show that a larger case base has a positive effect on the accuracy of the CBR subsystem.
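
The abstract does not spell out the graph similarity measures or the exact CBR features fed to the ranking model, so the following is only a rough sketch of the general idea under loudly stated assumptions. It assumes a MultiNet graph can be approximated as a set of `(node, relation, node)` triples, uses edge-set Jaccard similarity as a stand-in for a real graph similarity measure, and derives a few hypothetical features (`cbr_support`, `cbr_max_sim_correct`, `cbr_max_sim_wrong`) from the k most similar annotated cases. None of these names come from the paper.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List, Tuple

# Hypothetical stand-in for a MultiNet graph: a set of (node, relation, node) triples.
Graph = FrozenSet[Tuple[str, str, str]]


@dataclass(frozen=True)
class Case:
    question: Graph
    answer: Graph
    correct: bool  # annotation, e.g. obtained from user feedback


def jaccard(g1: Graph, g2: Graph) -> float:
    """Edge-set Jaccard similarity; a placeholder for a real graph similarity measure."""
    if not g1 and not g2:
        return 1.0
    return len(g1 & g2) / len(g1 | g2)


def cbr_features(question: Graph, candidate: Graph,
                 case_base: List[Case], k: int = 3) -> Dict[str, float]:
    """Hypothetical CBR features for one answer candidate, from the k most similar cases."""
    # A case is similar only if both its question and its answer resemble the new pair.
    scored = sorted(
        ((jaccard(question, c.question) * jaccard(candidate, c.answer), c) for c in case_base),
        key=lambda pair: pair[0],
        reverse=True,
    )[:k]
    total = sum(s for s, _ in scored)
    positive = sum(s for s, c in scored if c.correct)
    return {
        # Similarity-weighted share of retrieved cases annotated as correct.
        "cbr_support": positive / total if total > 0 else 0.0,
        "cbr_max_sim_correct": max((s for s, c in scored if c.correct), default=0.0),
        "cbr_max_sim_wrong": max((s for s, c in scored if not c.correct), default=0.0),
    }


def g(*triples: Tuple[str, str, str]) -> Graph:
    """Convenience constructor for toy graphs."""
    return frozenset(triples)


# Toy usage: one earlier question with a correct and an incorrect annotated answer.
q_old = g(("capital", "ATTR", "france"))
case_base = [
    Case(q_old, g(("paris", "SUB", "city")), True),
    Case(q_old, g(("lyon", "SUB", "city")), False),
]
q_new = g(("capital", "ATTR", "france"), ("capital", "SUB", "city"))
feats = cbr_features(q_new, g(("paris", "SUB", "city")), case_base)
print(feats)  # {'cbr_support': 1.0, 'cbr_max_sim_correct': 0.5, 'cbr_max_sim_wrong': 0.0}
```

In a setup like the one described, such a feature dictionary would be appended to the other validation features of each candidate before the learned ranking model scores it; as the case base grows, more candidates find similar annotated cases, which is one plausible reading of why accuracy improves with case base size.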
