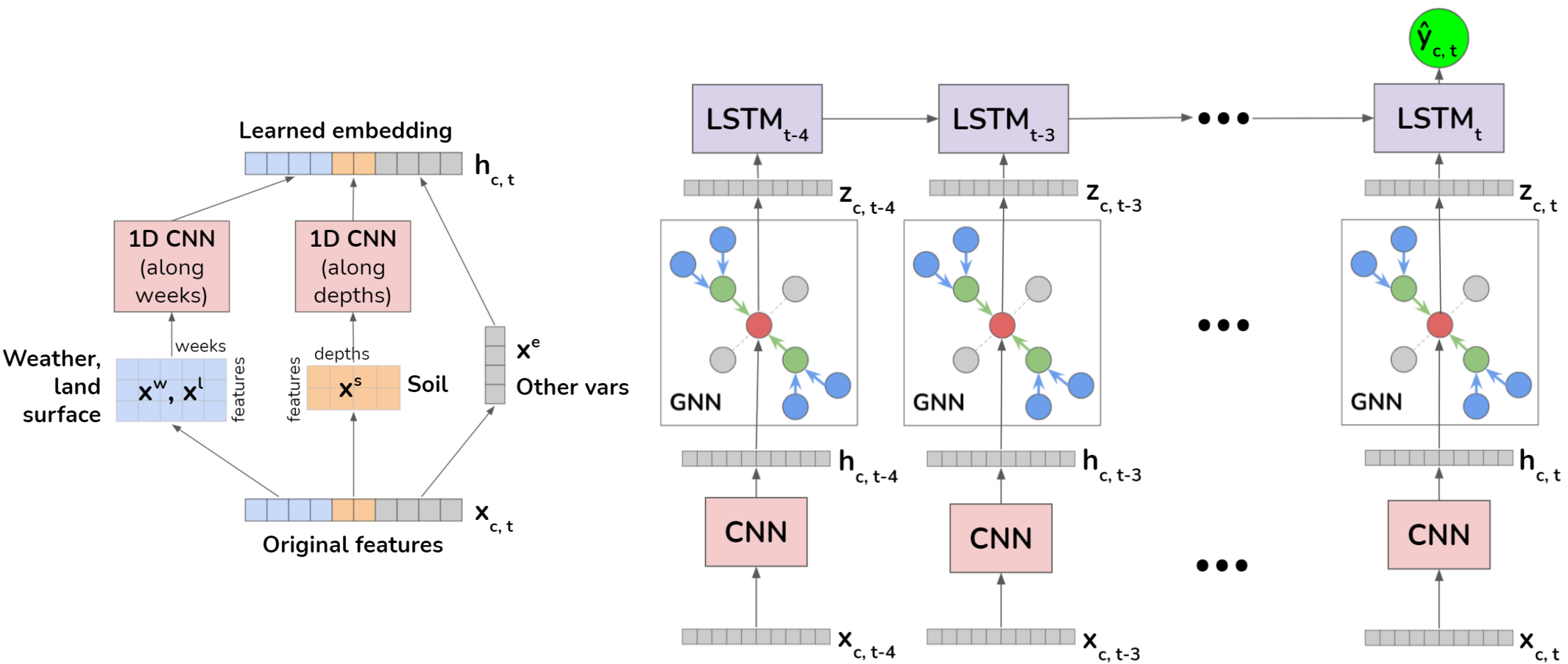

A GNN-RNN Approach for Harnessing Geospatial and Temporal Information: Application to Crop Yield Prediction

Climate change is posing new challenges to crop-related concerns including food insecurity, supply stability and economic planning. As one of the central challenges, crop yield prediction has become a pressing task in the machine learning field. Despite its importance, the prediction task is exceptionally complicated since crop yields depend on various factors such as weather, land surface, soil quality as well as their interactions. In recent years, machine learning models have been successfully applied in this domain. However, these models either restrict their tasks to a relatively small region, or only study over a single or few years, which makes them hard to generalize spatially and temporally. In this paper, we introduce a novel graph-based recurrent neural network for crop yield prediction, to incorporate both geographical and temporal knowledge in the model, and further boost predictive power. Our method is trained, validated, and tested on over 2000 counties from 41 states in the US mainland, covering years from 1981 to 2019. As far as we know, this is the first machine learning method that embeds geographical knowledge in crop yield prediction and predicts the crop yields at county level nationwide. We also laid a solid foundation for the comparison with other machine learning baselines by applying well-known linear models, tree-based models, deep learning methods and comparing their performance. Experiments show that our proposed method consistently outperforms the existing state-of-the-art methods on various metrics, validating the effectiveness of geospatial and temporal information.

PDF Abstract